I. Preparation

(1) Set the hostname on each host (run only the command that matches the machine)

hostnamectl set-hostname master01

hostnamectl set-hostname master02

hostnamectl set-hostname master03

hostnamectl set-hostname node01

(2) Add name resolution on all hosts

cat >> /etc/hosts <<EOF

192.168.110.101 master01

192.168.110.102 master02

192.168.110.103 master03

192.168.110.104 node01

192.168.110.200 api-server

EOF

(3) Disable the firewall, SELinux, and swap

sed -i 's#enforcing#disabled#g' /etc/selinux/config

setenforce 0

systemctl disable --now firewalld NetworkManager postfix

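swapoff -a # not persistent: also comment out the swap entry in /etc/fstab so swap stays off after a reboot

(4) Tune the sshd service

# 1. Speed up SSH logins (on all nodes)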

sed -ri 's@^#UseDNS yes@UseDNS no@g' /etc/ssh/sshd_config

sed -ri 's#^GSSAPIAuthentication yes#GSSAPIAuthentication no#g' /etc/ssh/sshd_config

grep ^UseDNS /etc/ssh/sshd_config

grep ^GSSAPIAuthentication /etc/ssh/sshd_config

systemctl restart sshd
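The `sed` expressions above only match the exact default lines (`#UseDNS yes` and `GSSAPIAuthentication yes`). As a minimal sketch, assuming GNU sed and a hypothetical helper name `set_sshd_option` (it is not part of OpenSSH), the function below sets an option whether its line is commented out, already active, or missing:

```shell
#!/usr/bin/env bash
# set_sshd_option FILE KEY VALUE
# Hypothetical helper: rewrite every "KEY ..." or "#KEY ..." line to
# "KEY VALUE"; append the line if the file has no such entry at all.
set_sshd_option() {
    local file=$1 key=$2 value=$3
    if grep -Eq "^#?${key}[[:space:]]" "$file"; then
        sed -ri "s|^#?${key}[[:space:]].*|${key} ${value}|" "$file"
    else
        printf '%s %s\n' "$key" "$value" >> "$file"
    fi
}

# Example (as root, followed by "systemctl restart sshd"):
#   set_sshd_option /etc/ssh/sshd_config UseDNS no
#   set_sshd_option /etc/ssh/sshd_config GSSAPIAuthentication no
```

Appending at the end of the file is only appropriate when the file does not end in a `Match` block, because options after `Match` apply conditionally.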

# 2. Key-based login (run on the master node)

# Purpose: makes the later remote copy operations more convenient

ssh-keygen -t rsa -b 4096

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master02

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master03

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node01

(5) Raise the open-file limit (takes effect once you log out and back in)

cat > /etc/security/limits.d/k8s.conf <<'EOF'

* soft nofile 1048576

* hard nofile 1048576

EOF

ulimit -Sn

ulimit -Hn

(6) Configure automatic module loading on all nodes (if you skip this step, kubeadm init fails immediately!)

modprobe br_netfilter

modprobe ip_conntrack

cat >/etc/rc.sysinit<<'EOF'

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x $file ] && $file

done

EOF

echo "modprobe br_netfilter" >/etc/sysconfig/modules/br_netfilter.modules

echo "modprobe ip_conntrack" >/etc/sysconfig/modules/ip_conntrack.modules

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

chmod 755 /etc/sysconfig/modules/ip_conntrack.modules

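lsmod | grep br_netfilter

(7) Synchronize cluster time

master01 acts as the NTP server for the internal cluster network and syncs with public NTP servers; all other nodes sync with master01.

# =====================> chrony server: you can run your own server or use public time servers directly, so whether to deploy a server is up to you

# 1. Install

yum -y install chrony

# 2. Edit the configuration file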

mv /etc/chrony.conf /etc/chrony.conf.bak

cat > /etc/chrony.conf << EOF

server ntp1.aliyun.com iburst minpoll 4 maxpoll 10

server ntp2.aliyun.com iburst minpoll 4 maxpoll 10

server ntp3.aliyun.com iburst minpoll 4 maxpoll 10

server ntp4.aliyun.com iburst minpoll 4 maxpoll 10

server ntp5.aliyun.com iburst minpoll 4 maxpoll 10

server ntp6.aliyun.com iburst minpoll 4 maxpoll 10

server ntp7.aliyun.com iburst minpoll 4 maxpoll 10

driftfile /var/lib/chrony/drift

makestep 10 3

rtcsync

allow 0.0.0.0/0

local stratum 10

keyfile /etc/chrony.keys

logdir /var/log/chrony

stratumweight 0.05

noclientlog

logchange 0.5

EOF

# 3. Start the chronyd service

systemctl restart chronyd.service # restart is preferable: the configuration is reloaded whether or not the service was already running

systemctl enable chronyd.service

systemctl status chronyd.service

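# =====================> chrony client: install on every machine that needs to sync with the server; once started, it syncs with the configured server automatically

# The steps below can be pasted into each client in one go

# 1. Install chrony

yum -y install chrony

# 2. Edit the client configuration file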

/usr/bin/mv /etc/chrony.conf /etc/chrony.conf.bak

cat > /etc/chrony.conf << EOF

# server master01 iburst

server master01 iburst

driftfile /var/lib/chrony/drift

makestep 10 3

rtcsync

local stratum 10

keyfile /etc/chrony.keys

logdir /var/log/chrony

stratumweight 0.05

noclientlog

logchange 0.5

EOF

# 3. Start chronyd

systemctl restart chronyd.service

systemctl enable chronyd.service

systemctl status chronyd.service

# 4. Verify

chronyc sources -v

(8) Update the base yum repos (all machines)

# 1. Clean up

rm -rf /etc/yum.repos.d/*

yum remove epel-release -y

rm -rf /var/cache/yum/x86_64/7/epel/

# 2. Install the Aliyun base and EPEL repos

curl -s -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

curl -s -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all

yum makecache

# Alternatively, use the Huawei Cloud mirrors

# curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo

# yum install -y https://repo.huaweicloud.com/epel/epel-release-latest-7.noarch.rpm

(9) Update system packages (excluding the kernel)

yum update -y --exclude=kernel*

(10) Install common base packages

yum -y install expect wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git ntpdate chrony bind-utils rsync unzip

(11) Upgrade the kernel (Docker is demanding about the kernel version; 4.4+ is recommended)

On the master node:

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.274-1.el7.elrepo.x86_64.rpm

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.274-1.el7.elrepo.x86_64.rpm

for i in master02 master03 node01; do scp kernel-lt-* $i:/opt; done

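Note: if the download is slow, fetch the packages from this network drive instead

Link: https://pan.baidu.com/s/1gVyeBQsJPZjc336E8zGjyQ

Extraction code: Egon

On every node:

# Install (use the path that matches where the packages are on that node)

yum localinstall -y /root/kernel-lt*

yum localinstall -y /opt/kernel-lt*

# Make the new kernel the default boot entry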

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

# Show the current default kernel

grubby --default-kernel

# Reboot

reboot

(12) Install IPVS on all nodes

# 1. Install ipvsadm and related tools

yum -y install ipvsadm ipset sysstat conntrack libseccomp

# 2. Configure module loading

cat > /etc/sysconfig/modules/ipvs.modules << "EOF"

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in ${ipvs_modules};

do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ]; then

/sbin/modprobe ${kernel_module}

fi

done

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

(13) Set kernel parameters on all machines

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.netfilter.nf_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF
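A mistyped key in a sysctl.d file is easy to miss, because `sysctl --system` reports it only as a "cannot stat" message among the other output. As a rough sketch, the hypothetical helper `unknown_sysctl_keys` below lists keys that have no matching entry under /proc/sys (the optional second argument exists only so the check can be tried against a test directory):

```shell
#!/usr/bin/env bash
# unknown_sysctl_keys FILE [ROOT]
# Hypothetical helper: print every key in FILE that has no matching entry
# under ROOT (default /proc/sys). Dots in the key become path separators.
unknown_sysctl_keys() {
    local file=$1 root=${2:-/proc/sys} line key path
    while IFS= read -r line || [ -n "$line" ]; do
        # Trim surrounding whitespace, then skip blank lines and comments.
        line=$(printf '%s' "$line" | sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//')
        case $line in
            ''|'#'*|';'*) continue ;;
        esac
        key=$(printf '%s' "${line%%=*}" | tr -d '[:space:]')
        path=$(printf '%s' "$key" | tr '.' '/')
        [ -e "$root/$path" ] || printf '%s\n' "$key"
    done < "$file"
}

# Example: unknown_sysctl_keys /etc/sysctl.d/k8s.conf
```

A key can also be reported simply because its kernel module is not loaded yet, for example `net.bridge.*` before `br_netfilter` is loaded.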

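# Apply immediately

sysctl --system

(14) Install containerd (on all nodes)

Since Kubernetes 1.24, Kubernetes no longer supports Docker natively.

containerd originated in Docker and was later donated by Docker to the Cloud Native Computing Foundation (installing Docker also installs containerd).

1. The default libseccomp version on CentOS 7 is 2.3.1, which does not meet containerd's requirements; version 2.4 or later is needed. Version 2.5.1 is deployed here.

# 1. If you do not upgrade libseccomp, starting a container fails with: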

**Failed to create pod sandbox: rpc error: code = Unknown desc = failed to create containerd task: failed to create shim task: OCI runtime create failed: unable to retrieve OCI runtime error (open /run/containerd/io.containerd.runtime.v2.task/k8s.io/ed17cbdc31099314dc8fd609d52b0dfbd6fdf772b78aa26fbc9149ab089c6807/log.json: no such file or directory): runc did not terminate successfully: exit status 127: unknown**

# 2. Upgrade

rpm -e libseccomp-2.3.1-4.el7.x86_64 --nodeps

# wget http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm

wget https://mirrors.aliyun.com/centos/8/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm

rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm # the upstream mirror is gone and no longer updated; use the Aliyun mirror

rpm -qa | grep libseccomp
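To check the 2.4 minimum mechanically rather than by eye, a version comparison can be sketched with `sort -V` from GNU coreutils. The function name `version_ge` is an assumption made for this illustration:

```shell
#!/usr/bin/env bash
# version_ge A B: succeed when version A >= version B.
# Relies on "sort -V" (version sort) from GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example: compare the installed libseccomp against the 2.4 minimum.
#   installed=$(rpm -q --queryformat '%{VERSION}' libseccomp)
#   version_ge "$installed" 2.4 && echo "libseccomp is new enough"
```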

Installation method 1 (based on the Aliyun repo; this is the recommended method)

# 1. Remove any previous installation

yum remove docker docker-ce containerd docker-common docker-selinux docker-engine -y

# 2. Prepare the repo

cd /etc/yum.repos.d/

wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

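# 3. Install

yum install containerd* -y

Configuration

# 1. Configure

mkdir -pv /etc/containerd

containerd config default > /etc/containerd/config.toml # generate a configuration file for containerd

# 2. Replace the default pause image address

# This step is critical: if the pause image cannot be downloaded from the configured address, the kubeadm installation ultimately fails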

grep sandbox_image /etc/containerd/config.toml

sed -i 's/registry.k8s.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/' /etc/containerd/config.toml

grep sandbox_image /etc/containerd/config.toml

# Make sure the new address is reachable: sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"

# 3. Use systemd as the container cgroup driver

grep SystemdCgroup /etc/containerd/config.toml

sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml

grep SystemdCgroup /etc/containerd/config.toml
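The two `grep` checks above can be folded into one pass/fail test. This is a sketch only: `check_containerd_config` is a hypothetical helper, and it assumes the config layout that the `grep` and `sed` commands above already rely on (a `sandbox_image` key and a `SystemdCgroup` key).

```shell
#!/usr/bin/env bash
# check_containerd_config FILE
# Hypothetical helper: succeed only if the sandbox image no longer points at
# registry.k8s.io and the systemd cgroup driver is enabled.
check_containerd_config() {
    local file=$1 rc=0
    if grep -Eq '^[[:space:]]*sandbox_image[[:space:]]*=.*registry\.k8s\.io' "$file"; then
        echo "sandbox_image still uses registry.k8s.io" >&2
        rc=1
    fi
    if ! grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$file"; then
        echo "SystemdCgroup is not set to true" >&2
        rc=1
    fi
    return "$rc"
}

# Example: check_containerd_config /etc/containerd/config.toml && echo "config looks right"
```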

# 4. Configure registry mirrors (required; otherwise the CNI plugin images cannot be pulled from docker.io later)

# Reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration

# Set config_path = "/etc/containerd/certs.d"

sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml

# Docker Hub mirrors

mkdir -p /etc/containerd/certs.d/docker.io

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://dockerproxy.com"]

capabilities = ["pull", "resolve"]

[host."https://docker.m.daocloud.io"]

capabilities = ["pull", "resolve"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull", "resolve"]

[host."http://hub-mirror.c.163.com"]

capabilities = ["pull", "resolve"]

EOF

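# 5. Start containerd and enable it at boot

# 5.1 Start the containerd service and enable it at boot

systemctl daemon-reload && systemctl restart containerd

systemctl enable --now containerd

# 5.2 Check the containerd status

systemctl status containerd

# 5.3 Check the containerd version

ctr version

II. Deploy the load balancer + keepalived

The load balancer plus keepalived exposes the VIP 192.168.110.200. Install and configure nginx on all three masters.

# 1. Add the repo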

cat > /etc/yum.repos.d/nginx.repo << "EOF"

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

[nginx-mainline]

name=nginx mainline repo

baseurl=http://nginx.org/packages/mainline/centos/$releasever/$basearch/

gpgcheck=1

enabled=0

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

EOF

# 2. Install

yum install nginx -y

# 3. Configure

cat > /etc/nginx/nginx.conf <<'EOF'

user nginx nginx;

worker_processes auto;

events {

worker_connections 20240;

use epoll;

}

error_log /var/log/nginx_error.log info;

stream {

upstream kube-servers {

hash $remote_addr consistent;

server master01:6443 weight=5 max_fails=1 fail_timeout=3s;

server master02:6443 weight=5 max_fails=1 fail_timeout=3s;

server master03:6443 weight=5 max_fails=1 fail_timeout=3s;

}

server {

listen 8443 reuseport; # listen on port 8443

proxy_connect_timeout 3s;

proxy_timeout 3000s;

proxy_pass kube-servers;

}

}

EOF

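# 4. Start

systemctl restart nginx

systemctl enable nginx

systemctl status nginx

Deploy keepalived on the three masters

1. Install

yum -y install keepalived

2. Edit the keepalived configuration file (the interface name depends on your environment, for example eth0 or ens33)

# Write the configuration file; on each master only router_id and mcast_src_ip need to change.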

# ==================================> master01

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id 192.168.110.101

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 8443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 100

priority 100

advert_int 1

mcast_src_ip 192.168.110.101

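# nopreempt

# Leave nopreempt commented out. If it is enabled, the current MASTER is not preempted

# even when a backup node with a higher priority appears, until the MASTER fails.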

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.110.200

}

}

EOF

# ==================================> master02

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id 192.168.110.102

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 8443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 100

priority 100

advert_int 1

mcast_src_ip 192.168.110.102

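# nopreempt

# Leave nopreempt commented out. If it is enabled, the current MASTER is not preempted

# even when a backup node with a higher priority appears, until the MASTER fails.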

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.110.200

}

}

EOF

# ==================================> master03

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id 192.168.110.103

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 8443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 100

priority 100

advert_int 1

mcast_src_ip 192.168.110.103

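# nopreempt

# Leave nopreempt commented out. If it is enabled, the current MASTER is not preempted

# even when a backup node with a higher priority appears, until the MASTER fails.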

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.110.200

}

}

EOF

cat > /etc/keepalived/check_port.sh << 'EOF'
#!/bin/bash

# Set PATH so that all required commands can be found

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

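# -n keeps ports numeric; without it ss may print a service name from /etc/services instead of the port number

PORT_PROCESS=$(/usr/sbin/ss -ltn | grep ":$CHK_PORT" | wc -l)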

if [ $PORT_PROCESS -eq 0 ];then

echo "Port $CHK_PORT Is Not Used,End."

exit 1

fi

else

echo "Check Port Cant Be Empty!"

fi

EOF

chmod +x /etc/keepalived/check_port.sh
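A plain `grep ":8443"` also matches longer port numbers such as 84430. As a minimal sketch, the hypothetical function `port_in_listing` below reads `ss -ltn` output on stdin and matches the port exactly, assuming the usual column order in which the fourth column is the local address and port:

```shell
#!/usr/bin/env bash
# port_in_listing PORT
# Read "ss -ltn" output on stdin and succeed only if some local address
# ends in exactly ":PORT" (so 8443 does not match 84430).
port_in_listing() {
    awk -v suffix=":$1" '
        NR > 1 {
            n = length($4); m = length(suffix)
            if (n >= m && substr($4, n - m + 1) == suffix) found = 1
        }
        END { exit found ? 0 : 1 }
    '
}

# Example: ss -ltn | port_in_listing 8443 || exit 1
```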

Start

systemctl restart keepalived

systemctl enable keepalived

systemctl status keepalived

6. On master01, stop nginx; port 8443 is then no longer listening

[root@master01 ~]# systemctl stop nginx

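The VIP then floats to another node. Note: once the check script /etc/keepalived/check_port.sh detects that the port is down and exits non-zero, the current master's priority drops by 20. For another node to take over the VIP at that point, nopreempt must be commented out.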
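The arithmetic behind the failover is small enough to sketch. The hypothetical function below models only the negative-weight case used in this configuration (`priority 100`, `weight -20`): while the check script fails, the weight is added to the base priority, so the node drops to 80 and a peer still at 100 wins the election.

```shell
#!/usr/bin/env bash
# effective_priority BASE WEIGHT CHECK_RC
# Illustrative model of a negative vrrp_script weight: the weight lowers the
# priority only while the check script exits non-zero.
effective_priority() {
    local base=$1 weight=$2 check_rc=$3
    if [ "$check_rc" -ne 0 ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# Example: effective_priority 100 -20 1   # nginx down on this node
```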

7. Follow the keepalived log

journalctl -u keepalived -f

III. Install Kubernetes

# 1. Prepare the Kubernetes repo on all machines

cat > /etc/yum.repos.d/kubernetes.repo <<"EOF"

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/repodata/repomd.xml.key

EOF

# Reference: https://developer.aliyun.com/mirror/kubernetes/setenforce

yum install -y kubelet-1.30* kubeadm-1.30* kubectl-1.30*

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

# 2. On master01

Initialize the control plane (run on master01 only):

# Use kubeadm config images list to see the required images

[root@master01 ~]# kubeadm config images list

registry.k8s.io/kube-apiserver:v1.30.0

registry.k8s.io/kube-controller-manager:v1.30.0

registry.k8s.io/kube-scheduler:v1.30.0

registry.k8s.io/kube-proxy:v1.30.0

registry.k8s.io/coredns/coredns:v1.11.1

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.12-0

Generate a configuration file first, edit it, and then deploy. This is the recommended approach because advanced settings, such as running kube-proxy in ipvs mode, can only be set through a configuration file and not with kubeadm init flags alone.

kubeadm config print init-defaults > kubeadm.yaml # generate the configuration file first; its content after editing is shown below

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 0.0.0.0 # listening on 0.0.0.0 is fine
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock # use the containerd runtime
  imagePullPolicy: IfNotPresent
  name: master01 # your current hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd # for the built-in etcd, a local directory is enough
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # switched to the Aliyun image registry
kind: ClusterConfiguration
kubernetesVersion: 1.30.0 # Kubernetes version
controlPlaneEndpoint: "api-server:8443" # the VIP (192.168.110.200) and the port exposed by the load balancer; a hostname is recommended
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12 # Service CIDR
  podSubnet: 10.244.0.0/16 # added line: Pod CIDR
scheduler: {}
# Append the following at the end of the file (include the --- separators when copying):
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs # kube-proxy runs in ipvs mode; if unset it defaults to iptables, which is less efficient, so ipvs is recommended in production (the managed Kubernetes services on Alibaba Cloud and Huawei Cloud also offer ipvs mode)
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
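The text does not spell out the init command itself. With a configuration file it is normally `kubeadm init --config kubeadm.yaml`, and the optional `--upload-certs` flag additionally uploads the control-plane certificates so that other masters can join with a certificate key. Before running it, a quick sanity check that all four documents made it into the file can be sketched as follows; `missing_kinds` is a hypothetical helper, not a kubeadm feature:

```shell
#!/usr/bin/env bash
# missing_kinds FILE
# Hypothetical pre-flight check: print each expected "kind:" that FILE lacks.
missing_kinds() {
    local file=$1 kind
    for kind in InitConfiguration ClusterConfiguration \
                KubeProxyConfiguration KubeletConfiguration; do
        grep -Eq "^kind:[[:space:]]*${kind}[[:space:]]*(#.*)?\$" "$file" || echo "$kind"
    done
}

# Example:
#   [ -z "$(missing_kinds kubeadm.yaml)" ] && kubeadm init --config kubeadm.yaml --upload-certs
```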

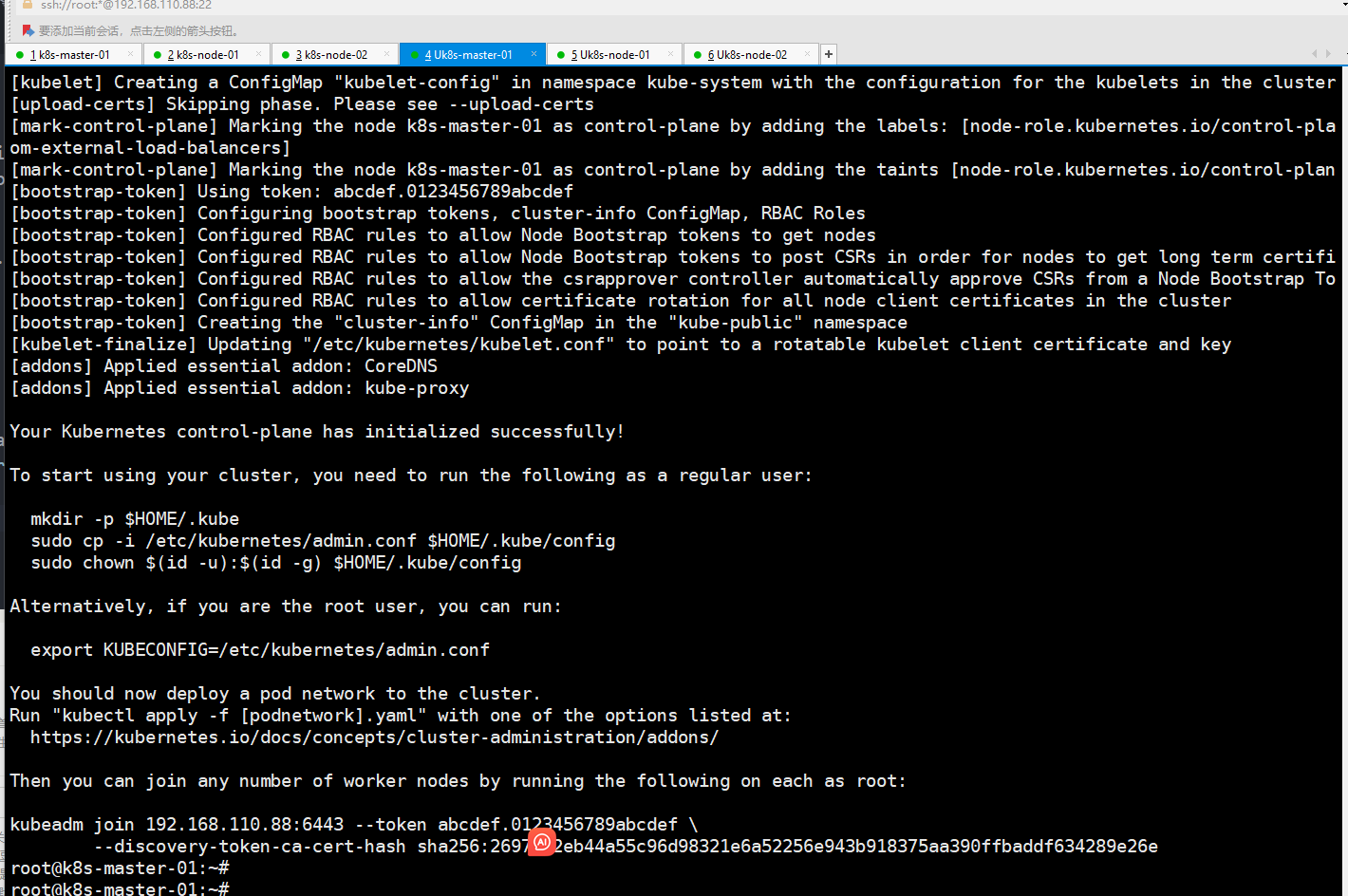

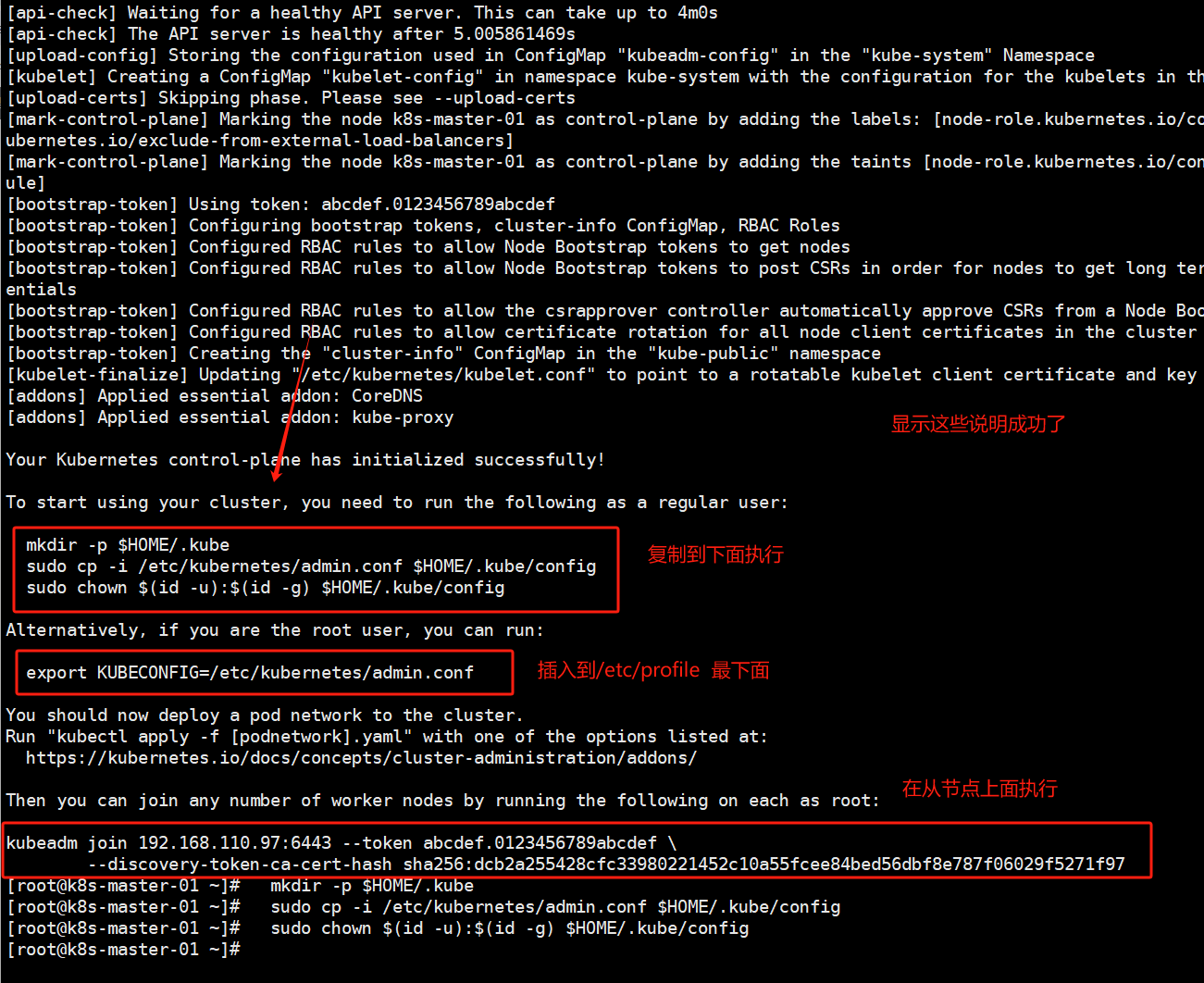

A successful run prints the following:

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.110.200:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3ee0ed5be62b44ac86b9413494371508e190af319fcd782b75b5f998e05a5024 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.110.200:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3ee0ed5be62b44ac86b9413494371508e190af319fcd782b75b5f998e05a5024

[root@master01 certs.d]# kubeadm init phase upload-certs --upload-certs

I0902 19:21:21.049884 24825 version.go:256] remote version is much newer: v1.31.0; falling back to: stable-1.30

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

3fd8d7a91a1d6172ca81e17194d340dca19c64fb245c95f62e3c0175eb6a7452

Add the certificate key and run the join command on the other two master nodes

kubeadm join 192.168.110.200:8443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3ee0ed5be62b44ac86b9413494371508e190af319fcd782b75b5f998e05a5024 \
--control-plane \
--certificate-key 3fd8d7a91a1d6172ca81e17194d340dca19c64fb245c95f62e3c0175eb6a7452
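Because a join command is long and a stray line break splits it into separate commands, it can help to assemble it on one line from its parts. A minimal sketch, with `build_join_cmd` as a hypothetical helper name; passing a fourth argument produces the control-plane variant:

```shell
#!/usr/bin/env bash
# build_join_cmd ENDPOINT TOKEN CA_HASH [CERT_KEY]
# Hypothetical helper: print a single-line "kubeadm join" command. With a
# CERT_KEY the node joins as a control-plane member, otherwise as a worker.
build_join_cmd() {
    local endpoint=$1 token=$2 ca_hash=$3 cert_key=${4:-}
    local cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $ca_hash"
    if [ -n "$cert_key" ]; then
        cmd="$cmd --control-plane --certificate-key $cert_key"
    fi
    printf '%s\n' "$cmd"
}

# Example: build_join_cmd 192.168.110.200:8443 "$TOKEN" "sha256:$HASH" "$CERT_KEY"
```

If the original token has expired, `kubeadm token create --print-join-command` on an existing control-plane node prints a fresh worker join command.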

After it succeeds, follow the prompts and run

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

IV. Upgrading Kubernetes

For version 1.28 and later, use the new repo layout.

New download location: https://mirrors.aliyun.com/kubernetes-new/

The yum repo is configured as follows

[root@k8s-master-01 /etc/yum.repos.d]# cat kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/repodata/repomd.xml.key

Below is the old-style yum repo configuration, which only goes up to Kubernetes 1.28.2-0

[root@k8s-master1 yum.repos.d]# pwd

/etc/yum.repos.d

[root@k8s-master1 yum.repos.d]# cat kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

After configuring the repo, refresh the yum cache:

yum clean all

yum makecache

[root@master01 ~]# yum list --showduplicates kubeadm --disableexcludes=kubernetes

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

已安装的软件包

kubeadm.x86_64 1.30.4-150500.1.1 @kubernetes

可安装的软件包

kubeadm.x86_64 1.31.0-150500.1.1
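kubeadm does not support skipping minor versions during an upgrade, so 1.30 to 1.31 is fine while 1.29 to 1.31 is not. A small guard for this can be sketched as follows; `minor_skew_ok` is a hypothetical helper, not part of kubeadm:

```shell
#!/usr/bin/env bash
# minor_skew_ok CURRENT TARGET
# Hypothetical guard: succeed only if TARGET has the same major version as
# CURRENT and is at most one minor version ahead (no downgrade, no skipping).
minor_skew_ok() {
    local cur=${1#v} tgt=${2#v}
    local cur_major=${cur%%.*} tgt_major=${tgt%%.*}
    local cur_rest=${cur#*.} tgt_rest=${tgt#*.}
    local cur_minor=${cur_rest%%.*} tgt_minor=${tgt_rest%%.*}
    [ "$cur_major" -eq "$tgt_major" ] || return 1
    local diff=$((tgt_minor - cur_minor))
    [ "$diff" -ge 0 ] && [ "$diff" -le 1 ]
}

# Example: minor_skew_ok v1.30.4 1.31.0-150500.1.1 && echo "upgrade path is supported"
```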

The procedure is as follows:

# 1. Mark the node as unschedulable

[root@master01 ~]# kubectl cordon master01

node/master01 cordoned

[root@master01 ~]#

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready,SchedulingDisabled control-plane 57m v1.30.4

master02 Ready control-plane 43m v1.30.4

master03 Ready control-plane 43m v1.30.4

node01 Ready <none> 41m v1.30.4

# 2. Evict the pods

[root@master01 ~]# kubectl drain master01 --delete-local-data --ignore-daemonsets --force

Flag --delete-local-data has been deprecated, This option is deprecated and will be deleted. Use --delete-emptydir-data.

node/master01 already cordoned

Warning: ignoring DaemonSet-managed Pods: kube-flannel/kube-flannel-ds-j9dt7, kube-system/kube-proxy-mmnk5

evicting pod kube-system/coredns-7c445c467-rzdzc

evicting pod default/nginx-788f75444d-6lr87

evicting pod default/nginx-788f75444d-gvrr9

evicting pod default/nginx-788f75444d-rkh68

evicting pod default/nginx-788f75444d-txqvj

evicting pod kube-system/coredns-7c445c467-4w2bc

pod/nginx-788f75444d-txqvj evicted

pod/nginx-788f75444d-6lr87 evicted

pod/nginx-788f75444d-rkh68 evicted

pod/nginx-788f75444d-gvrr9 evicted

pod/coredns-7c445c467-4w2bc evicted

pod/coredns-7c445c467-rzdzc evicted

node/master01 drained

yum install -y kubeadm-1.31.0-150500.1.1 --disableexcludes=kubernetes

升级 1 软件包

总下载量:11 M

Downloading packages:

Delta RPMs disabled because /usr/bin/applydeltarpm not installed.

kubeadm-1.31.0-150500.1.1.x86_64.rpm | 11 MB 00:00:40

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

正在更新 : kubeadm-1.31.0-150500.1.1.x86_64 1/2

清理 : kubeadm-1.30.4-150500.1.1.x86_64 2/2

验证中 : kubeadm-1.31.0-150500.1.1.x86_64 1/2

验证中 : kubeadm-1.30.4-150500.1.1.x86_64 2/2

更新完毕:

kubeadm.x86_64 0:1.31.0-150500.1.1

完毕!

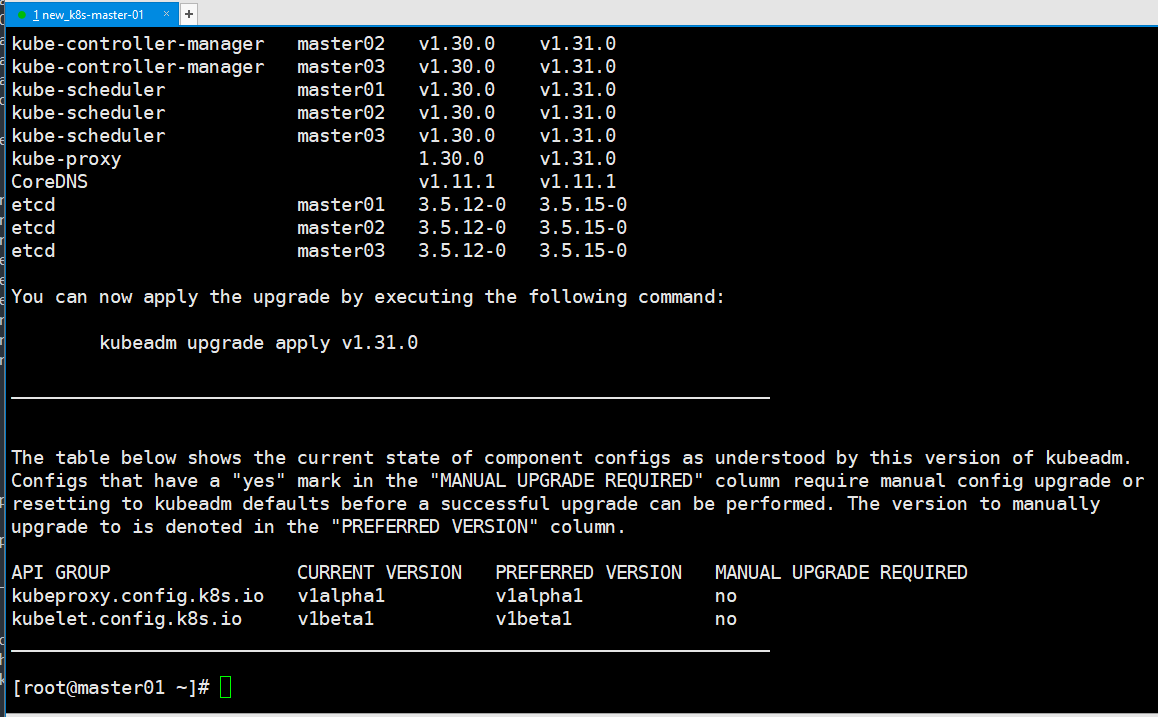

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT NODE CURRENT TARGET

kubelet master01 v1.30.4 v1.31.0

kubelet master02 v1.30.4 v1.31.0

kubelet master03 v1.30.4 v1.31.0

kubelet node01 v1.30.4 v1.31.0

Upgrade to the latest stable version:

COMPONENT NODE CURRENT TARGET

kube-apiserver master01 v1.30.0 v1.31.0

kube-apiserver master02 v1.30.0 v1.31.0

kube-apiserver master03 v1.30.0 v1.31.0

kube-controller-manager master01 v1.30.0 v1.31.0

kube-controller-manager master02 v1.30.0 v1.31.0

kube-controller-manager master03 v1.30.0 v1.31.0

kube-scheduler master01 v1.30.0 v1.31.0

kube-scheduler master02 v1.30.0 v1.31.0

kube-scheduler master03 v1.30.0 v1.31.0

kube-proxy 1.30.0 v1.31.0

CoreDNS v1.11.1 v1.11.1

etcd master01 3.5.12-0 3.5.15-0

etcd master02 3.5.12-0 3.5.15-0

etcd master03 3.5.12-0 3.5.15-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.31.0

_____________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.

Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

kubelet.config.k8s.io v1beta1 v1beta1 no

_____________________________________________________________________

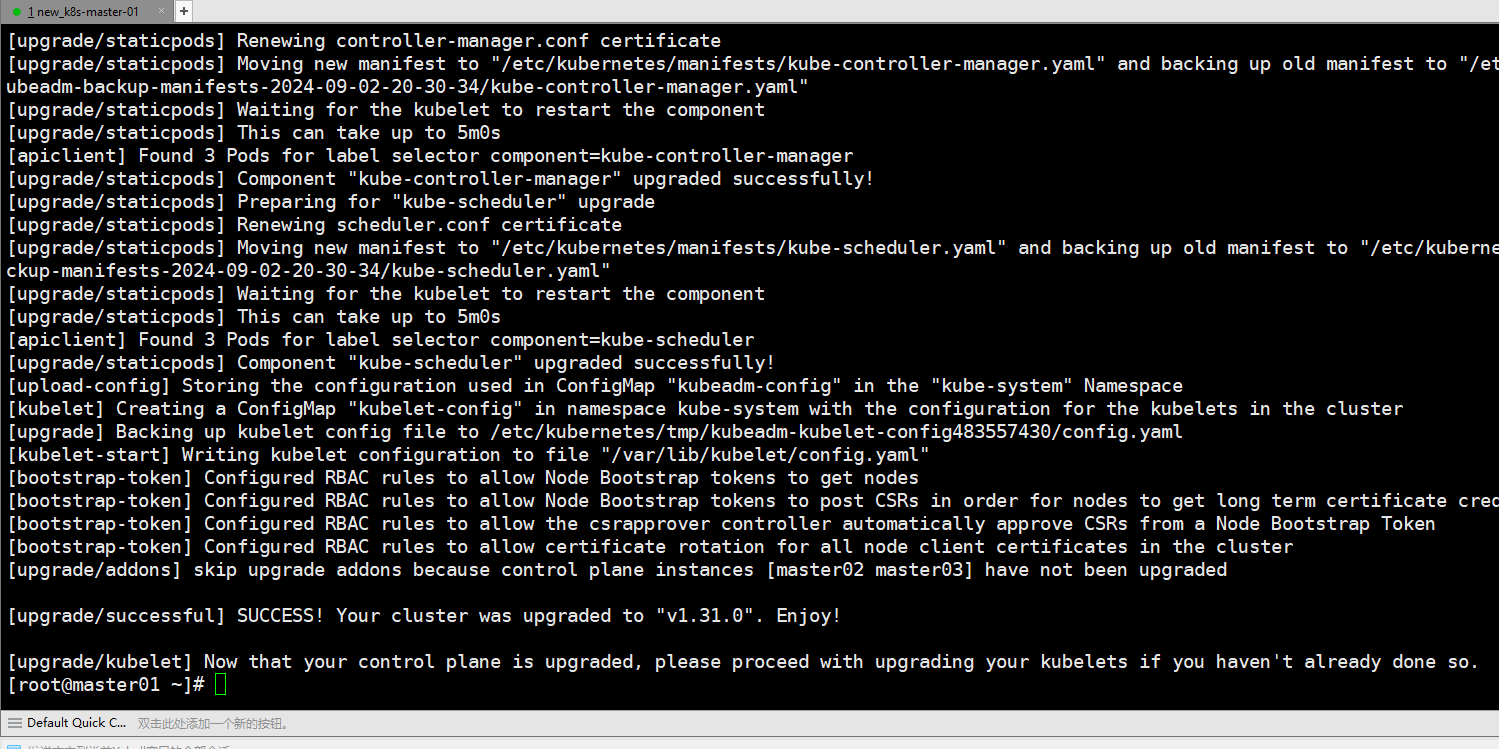

kubeadm upgrade apply v1.31.0

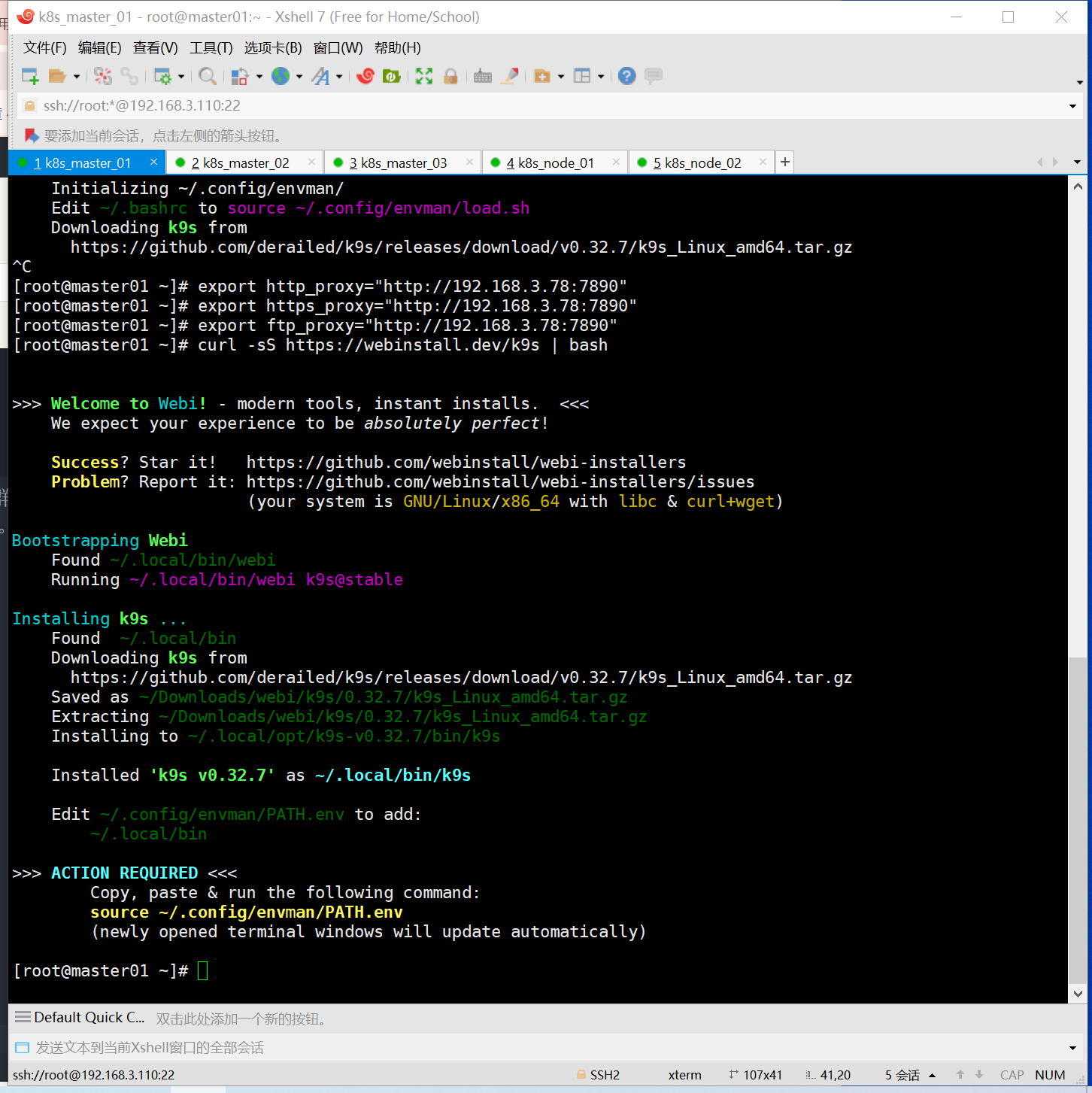

yum install -y kubelet-1.31.0 --disableexcludes=kubernetes

yum install -y kubectl-1.31.0 --disableexcludes=kubernetes

[root@master01 ~]# systemctl daemon-reload && systemctl restart kubelet

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready,SchedulingDisabled control-plane 84m v1.31.0

master02 Ready control-plane 70m v1.30.4

master03 Ready control-plane 70m v1.30.4

node01 Ready <none> 68m v1.30.4

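# Make the node schedulable again

kubectl uncordon master01

Upgrading worker nodes

Background: upgrading a node's software, or its hardware (for example adding memory), makes the node temporarily unavailable, just like planned maintenance. The core question is how to keep the services provided by the node's pods running while the node is unavailable.

Steps to upgrade or update a worker node (repeat the same steps for every other worker node):

1. Take the node out of the business traffic path

2. cordon the node so that no new pods are scheduled onto it

kubectl cordon node01

3. Create PDB (PodDisruptionBudget) policies for critical services so that during the drain in the next step at least one replica of each critical service stays available (on a node other than this one)

4. drain the node to evict its pods

kubectl drain node01 --delete-emptydir-data --ignore-daemonsets --force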

5. Upgrade the software on the node

5.1 Upgrade kubeadm (run on every worker node)

[root@node01 ~]# yum install -y kubeadm-1.31.0-150500.1.1 --disableexcludes=kubernetes

5.2 Upgrade the kubelet configuration (run on every worker node)

kubeadm upgrade node

5.3 Upgrade kubelet and kubectl, then restart kubelet

yum install -y kubelet-1.31.0 kubectl-1.31.0 --disableexcludes=kubernetes

systemctl daemon-reload && systemctl restart kubelet
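The PDB mentioned in step 3 is not shown in the text. As a rough sketch, the hypothetical function below prints a PodDisruptionBudget manifest that can be piped to `kubectl apply -f -`. The name `nginx-pdb` and the label `app: nginx` in the example are assumptions; use the labels your own workload carries.

```shell
#!/usr/bin/env bash
# pdb_manifest NAME APP_LABEL MIN_AVAILABLE
# Hypothetical helper: print a PodDisruptionBudget that keeps at least
# MIN_AVAILABLE pods with label app=APP_LABEL running during evictions.
pdb_manifest() {
    cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: $1
spec:
  minAvailable: $3
  selector:
    matchLabels:
      app: $2
EOF
}

# Example: pdb_manifest nginx-pdb nginx 1 | kubectl apply -f -
```

With such a budget in place, `kubectl drain` waits rather than evicting a pod when the eviction would take the service below the minimum.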

[root@node01 yum.repos.d]# kubectl get pods -n kube-system

E0902 21:15:29.561945 60008 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0902 21:15:29.562332 60008 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

cat ~/.kube/config
