一、创建 radosgw服务

root@ubuntu01:~# ceph orch apply rgw default es --placement="count:3"

Scheduled rgw.default update...<realm-name>:RadosGW 的域名,可以自定义,例如 default.

<zone-name>:RadosGW 的区域名,可以自定义,例如 es.

--placement="count:1":表示 RadosGW 实例的数量。如果你想在多个节点上部署,可以增加 count 的值,或者指定节点列表二、验证radosgw服务状态

root@ubuntu01:~# ceph orch ps | grep rgw

rgw.default.ubuntu01.lusoxe ubuntu01 *:80 starting - - - - <unknown> <unknown> known>

rgw.default.ubuntu02.nvamia ubuntu02 *:80 starting - - - - <unknown> <unknown> known>

rgw.default.ubuntu03.rkgoya ubuntu03 *:80 starting - - - - <unknown> <unknown> known>

root@ubuntu01:~# ceph -s

cluster:

id: 5b0e9b94-e6bb-11ef-a18c-274714e73e14

health: HEALTH_WARN

clock skew detected on mon.ubuntu03

1 pool(s) do not have an application enabled

services:

mon: 3 daemons, quorum ubuntu01,ubuntu02,ubuntu03 (age 8h)

mgr: ubuntu02.jmaxlt(active, since 8h), standbys: ubuntu01.exrhij

mds: 1/1 daemons up, 1 standby

osd: 3 osds: 3 up (since 8h), 3 in (since 2w)

rgw: 3 daemons active (3 hosts, 1 zones)

data:

volumes: 1/1 healthy

pools: 10 pools, 181 pgs

objects: 9.37k objects, 2.5 GiB

usage: 8.5 GiB used, 111 GiB / 120 GiB avail

pgs: 181 active+clean

io:

client: 214 KiB/s rd, 5.1 KiB/s wr, 247 op/s rd, 141 op/s wr三、验证 radosgw 存储池资源

#查看存储池列表

root@ubuntu01:~# ceph osd pool ls

.mgr

kubernetes

k8s-rbd

k8s

cephfs_data

cephfs_metadata

.rgw.root

default.rgw.log

default.rgw.control

default.rgw.meta#查看默认radosgw存储池信息

root@ubuntu01:~# radosgw-admin zone get --rgw-zone=default --rgw-zonegroup=default

{

"id": "7f883cbd-8ada-48aa-9358-f2c09aee0ca7",# 区域的唯一标识符

"name": "default",# 默认区域的名称

"domain_root": "default.rgw.meta:root",# 区域的根域名

"control_pool": "default.rgw.control",# 系统控制池,在有数据更新是,通知其他RGW更新缓存

"gc_pool": "default.rgw.log:gc", # 用于垃圾回收的存储池

"lc_pool": "default.rgw.log:lc",# 用于存储日志的存储池

"log_pool": "default.rgw.log",# 存储日志信息,用于记录各种log信息

"intent_log_pool": "default.rgw.log:intent",

"usage_log_pool": "default.rgw.log:usage",

"roles_pool": "default.rgw.meta:roles",# default.rgw.meta:元数据存储池,通过不同的名称空间分别存储不同的rados对象

"reshard_pool": "default.rgw.log:reshard",

"user_keys_pool": "default.rgw.meta:users.keys",# 用户的密钥名称空间users.keys

"user_email_pool": "default.rgw.meta:users.email",# 用户的email名称空间users.email

"user_swift_pool": "default.rgw.meta:users.swift",# 用户的subuser的名称空间users.swift

"user_uid_pool": "default.rgw.meta:users.uid", # 用户UID

"otp_pool": "default.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "default.rgw.buckets.index",# 存放bucket到object的索引信息

"storage_classes": {

"STANDARD": {

"data_pool": "default.rgw.buckets.data"# 存放对象的数据

}

},

"data_extra_pool": "default.rgw.buckets.non-ec",# 数据的额外信息存储池

"index_type": 0,

"inline_data": true

}

}

],

"realm_id": "",

"notif_pool": "default.rgw.log:notif"

}#查看默认配置信息

root@ubuntu01:~# ceph osd pool get default.rgw.meta crush_rule

crush_rule: replicated_rule

root@ubuntu01:~# ceph osd pool get default.rgw.meta size

size: 3

root@ubuntu01:~# ceph osd pool get default.rgw.meta pgp_num

pgp_num: 16

root@ubuntu01:~# ceph osd pool get default.rgw.meta pg_num

pg_num: 16四、访问radosgw服务

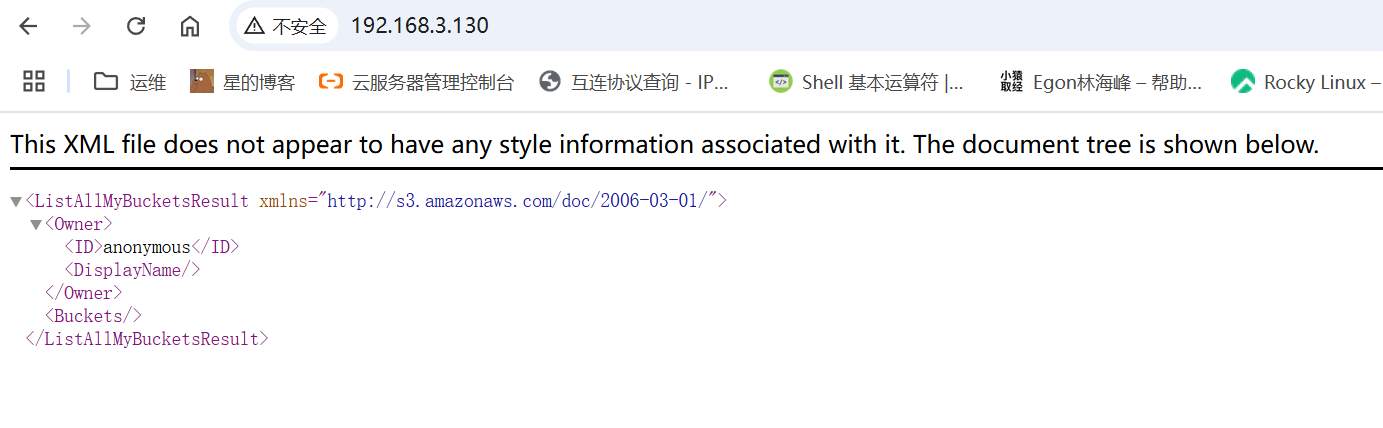

访问radosgw服务所在的节点 IP+默认 80 端口既可。

五、RadosGW https

5.1生成自签证书

root@ubuntu01:/# mkdir -p /etc/ceph/rgw/cert

root@ubuntu01:/# openssl req -newkey rsa:2048 -x509 -days 3650 -nodes -out /etc/ceph/rgw/cert/rgw-cert.pem -keyout /etc/ceph/rgw/cert/rgw-key.pem -subj "/CN=ceph-rgw.local.com"

Generating a RSA private key

...............................+++++

..................................+++++

writing new private key to '/etc/ceph/rgw/cert/rgw-key.pem'

-----

root@ubuntu01:/# tree /etc/ceph/rgw/cert/

/etc/ceph/rgw/cert/

├── rgw-cert.pem

└── rgw-key.pem

0 directories, 2 files5.2创建配置文件

#将公钥和私钥内容添加到配置文件中 rgw.yaml

service_type: rgw

service_id: default

placement:

hosts:

- ubuntu01

- ubuntu02

- ubuntu03

spec:

rgw_frontend_ssl_certificate: |

-----BEGIN PRIVATE KEY-----

MIIEvQIBADANBgkqhkiG9w0BAQEFAASCBKcwggSjAgEAAoIBAQC/q3zdOnRPSsRz

OlP1Z0rBYJUn9VzZF+Rlnt7IN3q+6Ir/jCb6jtMYP1A+KiTcv8OaTdre4OxOjIFG

iK1bZpEl16q+phKGbnfbphrptCVwpw7vV3n7Pjz57awXM728S7ql62JutIfrDWqN

YY0EnMeO7xFrXdy4ow8d3GobhsfiTcU38nyWXZ5Qy7vd43kJElMxbbsXJrhPO43x

6gJCWdvIztdjewtFW2rqy8cDPaKxcXubYAOlJUzN53eAdGxC6Br6HWsyVn8VnsPI

UQigwSzOx6DAa9QKjVIIi454pb3C+se1TD3el1JhZ5ZDav5g0Fp9zB1DetkR3vh7

3ixqwddRAgMBAAECggEADohJElkBQpXPqVDt1rh7MYhKJtpyrL8kARR3ncSfGOR2

zYNp3SuBE+CRC/WUD+y2PvfSNX3mTNpYXum0Ay8WqEDe4E+lLe4oRk4k0j1nbVAt

ULZYOFVyuBxuJOA2bZVsVHIxZ2VmvMqqnoeb8pKUiuDTeEmIl7M9TS1OGkIw25aa

VUU+kFLNwhVXQPAYu17dApl5GWI32gNcfZD7QF3fwNMz+u4h6dLCBiI+IQmXjtzX

OCPs2exwfj9NaZ6jxZPQU1i2YoMRh2AuephP7hW+zeTUpgd4A6VHkX5ASKvs89U2

qel2639RLqdEg1bu7f5kHOlqYVmwuj6rw+EAyfxpQQKBgQDl81/euRc7NKj3uLoh

yJqe/vQkvLKgKwVDAM6aKdI8/FhG1LNYbm5QJ83EuSmXSPm20bJjZWOjmQuIXSV2

V2UvmygHcQOeZsipJPgc2Yza6b3MDCKbBq/Kb4YrTtVLogjgKbbJXg99sSPKgJFG

B4g4OGs8H2inkE3tKOn4CXUF2QKBgQDVYfXnMuGcZ8LfBiD1zfX1WGt8jKsoDI2/

LGGwQa/0Lo7xgqrN47hOFKMDGWglJPT06G2JR3gsPIvJlZNo5m6tYoLtZ0vEERlK

+YzTVmTA/ThGAK7rePQv7GOGWubF6WYA8Bdyfqnm0yv8QtptcXq4bzyLEGMTKh4a

mNOBw0eaOQKBgQDh4IczyKzBQ6EWdSahOORpehdoXtM38gphHtHTLXGO5nHwYc8p

9o/1vKOrshbgvJZOtv18FYQil5t0f8rkzERg1xAi/yiG2IATZnUyIaDzCNefL+xu

S8syFwq8T9vFR41gZJlghDzDQtXdfR02pP9x+jmb/V5X+rARXjc1plSFQQKBgAvG

0UyGSV9Zdq7aZr1KNbXpwFzqYpPeRYB0kZuptG1UmH0JyiV82PIuP2TvZQkPxhky

LsFx2VcPrGNexvj2JsuY8ULq/Yp/qxaxOS18yijAkPeEGCNU1J1EfaWvpKbtn7yT

g6fFB9l+dCIDCo0Zwz0knoHKUL2BCJJNNvclcPE5AoGAXCuMEjSk54OUXoKj355l

NbbpnrGofy7Pmi9H7dKxgKiN7wonjhV9Rztrbsms9lD4Ab/B1CrIRz6Hp5si+bw/

mDDboXmgEbNGMccxknye2p4S34R6RoERbNlXvor3Z46aWMP9Suqwa11WdklXQiaW

wLC0RkGadfimQSxSGuFaJDE=

-----END PRIVATE KEY-----

-----BEGIN CERTIFICATE-----

MIIDGzCCAgOgAwIBAgIUYkIzcxcGB4FBt1qTLXE9tOKNeMUwDQYJKoZIhvcNAQEL

BQAwHTEbMBkGA1UEAwwSY2VwaC1yZ3cubG9jYWwuY29tMB4XDTI1MDYxNDE5MTEy

MloXDTM1MDYxMjE5MTEyMlowHTEbMBkGA1UEAwwSY2VwaC1yZ3cubG9jYWwuY29t

MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAv6t83Tp0T0rEczpT9WdK

wWCVJ/Vc2RfkZZ7eyDd6vuiK/4wm+o7TGD9QPiok3L/Dmk3a3uDsToyBRoitW2aR

JdeqvqYShm5326Ya6bQlcKcO71d5+z48+e2sFzO9vEu6petibrSH6w1qjWGNBJzH

ju8Ra13cuKMPHdxqG4bH4k3FN/J8ll2eUMu73eN5CRJTMW27Fya4TzuN8eoCQlnb

yM7XY3sLRVtq6svHAz2isXF7m2ADpSVMzed3gHRsQuga+h1rMlZ/FZ7DyFEIoMEs

zsegwGvUCo1SCIuOeKW9wvrHtUw93pdSYWeWQ2r+YNBafcwdQ3rZEd74e94sasHX

UQIDAQABo1MwUTAdBgNVHQ4EFgQUbL6TyHoVR1jUBE+mT0ETcvJNwq0wHwYDVR0j

BBgwFoAUbL6TyHoVR1jUBE+mT0ETcvJNwq0wDwYDVR0TAQH/BAUwAwEB/zANBgkq

hkiG9w0BAQsFAAOCAQEAZ/8toy7FIUG4uq+SxP4dxcC/P/njpCklzoA5BGc8aEQ+

M+g/0eTR60ib6HWXp2PtezQ5fK1mZLImSeuHCcdAXddEq0opXaS3wEMs8N27fDLU

jMjilBhzDlp7YnxZ64YzF3HzP2qHbDwoJjz/MqFSovkFEb4m8RYZNl6t/5U8XSmx

vxWdypbmmd+Zr07BQ1l1ldeGi0CD9gxSYK3exF6Gdr7G/J7vC8Up0xHKnaZSqKOH

vP8e/WL7T+p0s0ypjAIR29M1E9XfULt8xNQc3KtiEcvAZbxE3HWG7vnp93S/42vA

errp5uKlZdaOaA1OD0/nmrP36hz6RnuUqIF88p7NnQ==

-----END CERTIFICATE-----

ssl: true

rgw_frontend_port: 4435.3更新RGW配置

root@ubuntu01:~# ceph orch apply -i rgw.yaml

Scheduled rgw.default update...

root@ubuntu01:~# ceph orch ps | grep rgw

rgw.default.ubuntu01.lusoxe ubuntu01 *:80 running (31m) 12s ago 31m 101M - 18.2.4 2bc0b0f4375d 510eb15ca011

rgw.default.ubuntu02.abhedc ubuntu02 *:443 starting - - - - <unknown> <unknown> <unknown>

rgw.default.ubuntu02.nvamia ubuntu02 *:80 running (31m) 13s ago 31m 106M - 18.2.4 2bc0b0f4375d 4a733c6c4488

rgw.default.ubuntu03.pvvalj ubuntu03 *:443 starting - - - - <unknown> <unknown> <unknown>

rgw.default.ubuntu03.rkgoya ubuntu03 *:80 running (31m) 13s ago 31m 101M - 18.2.4 2bc0b0f4375d d341d13d6bac 5.4验证访问

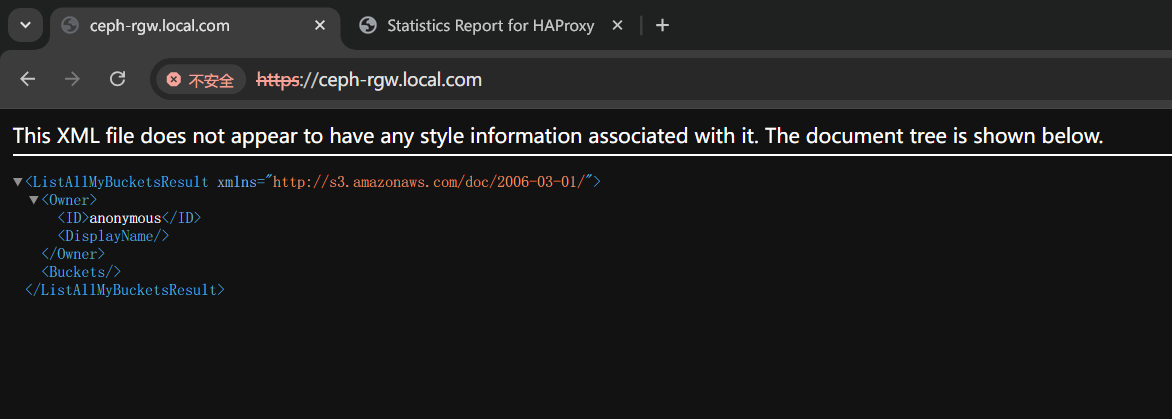

在本地host添加域名解析

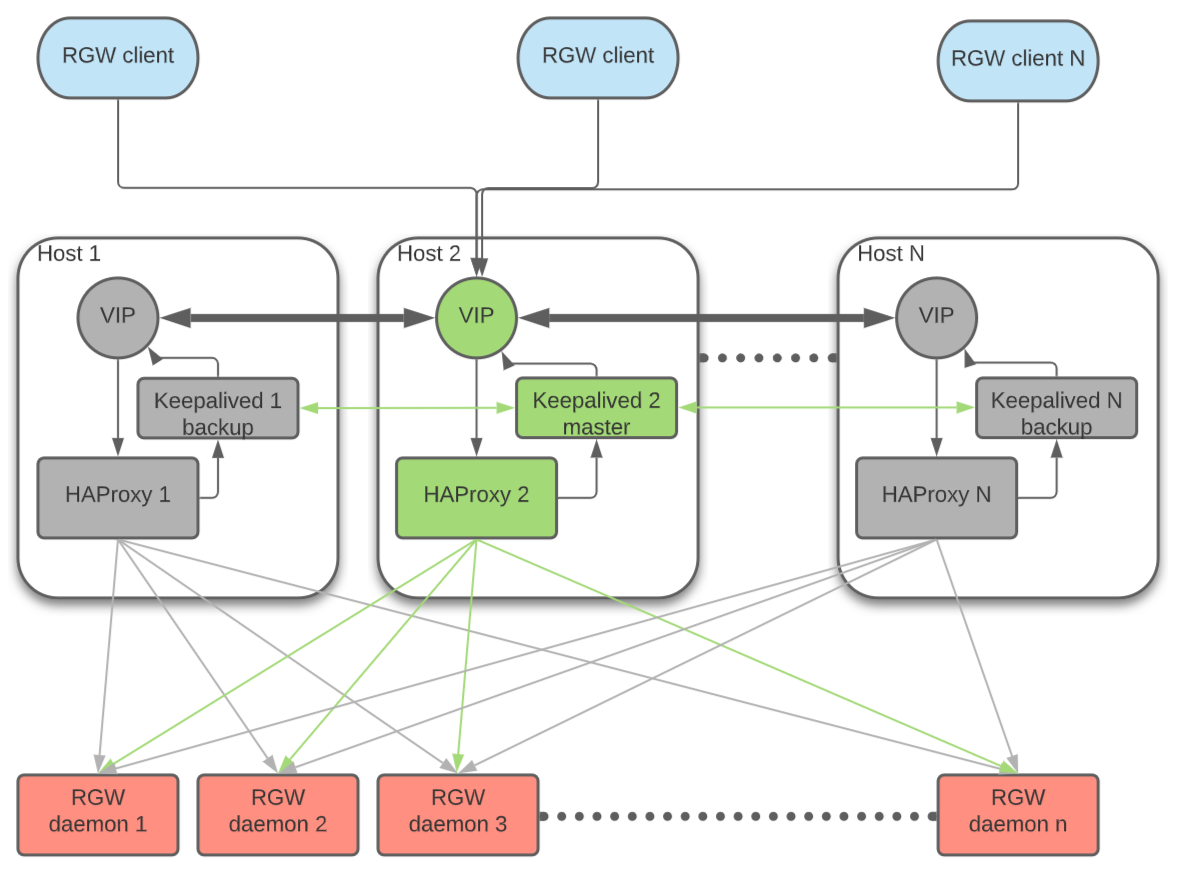

六、RadosGW 高可用

6.1自定义https 端口与副本数

#修改默认端口并指定运行在 ceph1 和 ceph2 节点

root@ceph-1:/etc/ceph/rgw# cat > rgw.yaml << EOF

service_type: rgw

service_id: default

placement:

hosts:

- ceph-1

- ceph-2

- ceph-3

spec:

rgw_frontend_ssl_certificate: |

-----BEGIN PRIVATE KEY-----

MIIEvQIBADANBgkqhkiG9w0BAQEFAASCBKcwggSjAgEAAoIBAQC8EYtfCItzKsoy

zh9AeiYMuJAebFaBkEpF00AYGvnviwOBUx8f4CjStnXAUtNq7nFrhTldai1B+pdL

eZomzgfFTO6oN0tz6KBXEDJG+yoMqrce0RlGJH6i5ML1znWmWsVynU1xrqGC2D1J

jIERsoyx+1vIuYHR8EBpKf7ldLYDqZ79bAEEj1ayzzPOIS4X3g9AAWo3EMCLRzCU

ZY1GIfqXuPi2xMJKYfovXM1agU178qCqNlGFrjtAHOSYpmMssGM638Jh8ExaJxBU

4UvKcxc54Efypancj6ZffgEx07A6tVFk4zQrFp+2vbYTkcs3tF5KrRBvN1ExDqsb

fofCTI+7AgMBAAECggEAaiYE4giynLgkE/TfEsdevoNVZLaFRO+p3CtV28UuGJP/

0HiX8qfUosm1QG3/QjV+8s7pB96r2LeVuVXTOd/D5wp7EZrUDYHZLgrINeQBYdDh

NpWSjFKA33P6zj5PjStikkRSt713D6D5Ro/1MYXzf2l97pc1vMa7tB+t7Nio+vtE

PCzTsRZynbNCY3UoIKfQlbA/fMqyayU05GAJyJT0kHl8M1H5PD5czJjUqztKQEv0

aJGmXN77drBC/qznGfpTPaAi1l6Gh7eBBj8/7yWXDSyI3n7SSAz0e4eDjZRhBZrH

hi2f6+xwpuTuOehUw0xo2rtJxl3o9qzNjwN05A8yoQKBgQDdOzuiAZtqBd7ERXZp

sYev17tLuLpiCHQ5a5ljh4jVrP1/bh3mNiTnFMk3TwJLIwBPlo2Ugbd/vsl+Db3+

EKDFTC2md5CaH04/1QSmFFjsHfN1ZoLdmedVntlR4Hzah44jNdWvHl0WYRjzfxcM

I782oawPYm73J7oGboiEfgtW8wKBgQDZoBNxcvjFZ+0LO5ms+ymWKwTnPpwGhW3M

D0DcPcF08GSnmyHvZoB51FG4GEIjMkWRpOX+pg2fxNjO0Y5QxS5vBg0A5KztDg4r

Kdy+McCZIWjCeF9O0mvhIXOKLFdUMrfp+s6GqJCIoht8QkxUuMn0TM3eq3p/WWts

xVCs/tMmGQKBgBSyPOLsCZECmZN8+BXtMMdnhDMSRgVzywOwKDpibI+ozlJEh/GI

cS1ZCXXuI0XKMXZAnGAfPn5p58muGW8SOSgb901SdCmm8hgQoo2y65qzNppuC6IV

ism8wZHiUWvUMJzkpWfrjEPSs5Xb9tkA4xuGRmVuDPl8Mu/1GTpj3EW3AoGBAMJI

0pLZ3ZX+7fS1RMDViY7y4PHBR3Ha9ObUR0dYKrnHU1T+fhFIJTKehkYgAguB+fHI

kTwB6u/TwOsC0lbxcj7T3BAMFwWbIrMOMG/r4tHSrb/PzuaDnKPkRU35wAz/KonM

y0wUeNRCRN9uIM8SGdnsJ26/ECFZJzp3/Uo0RTUhAoGAG8X00lkMTiVHuAZmP7PO

4lYfUQA8PZ6i/7A/SnHuwWI0MyWKLw3T/4mCdHyw9YwPshdVWCddY59L1GKxdzI5

V87lNmdkH7l6jDm7IwY5KX0voZ8uLB1zQ9lIakQxPTj5ydO2lPsJGE8784suwAhY

Y7UxYWWOAl7Pu0TfGXZjg+I=

-----END PRIVATE KEY-----

-----BEGIN CERTIFICATE-----

MIIDGzCCAgOgAwIBAgIUN4U/CuGb78PO+vzw370d0hz1aBUwDQYJKoZIhvcNAQEL

BQAwHTEbMBkGA1UEAwwSY2VwaC1yZ3cubG9jYWwuY29tMB4XDTI0MTIxNzA3MDAz

M1oXDTM0MTIxNTA3MDAzM1owHTEbMBkGA1UEAwwSY2VwaC1yZ3cubG9jYWwuY29t

MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAvBGLXwiLcyrKMs4fQHom

DLiQHmxWgZBKRdNAGBr574sDgVMfH+Ao0rZ1wFLTau5xa4U5XWotQfqXS3maJs4H

xUzuqDdLc+igVxAyRvsqDKq3HtEZRiR+ouTC9c51plrFcp1Nca6hgtg9SYyBEbKM

sftbyLmB0fBAaSn+5XS2A6me/WwBBI9Wss8zziEuF94PQAFqNxDAi0cwlGWNRiH6

l7j4tsTCSmH6L1zNWoFNe/KgqjZRha47QBzkmKZjLLBjOt/CYfBMWicQVOFLynMX

OeBH8qWp3I+mX34BMdOwOrVRZOM0Kxaftr22E5HLN7ReSq0QbzdRMQ6rG36HwkyP

uwIDAQABo1MwUTAdBgNVHQ4EFgQUUxgqlKlO+Pmvr+1QYv0bzf8BY4wwHwYDVR0j

BBgwFoAUUxgqlKlO+Pmvr+1QYv0bzf8BY4wwDwYDVR0TAQH/BAUwAwEB/zANBgkq

hkiG9w0BAQsFAAOCAQEAJf0D5Wy3BS9fUWqqgxLgvUSuK9EzfVHyyBeAzW+AYzus

Iqv3KscFnJFkl8U7tfy0E/03z6LzA91Ok/6IvlsQA9w5agJF++nqNSatVbEin4Fr

h4hu1HFMDFLkaQeGLcaHBgmMWOgK0DonitYEJZMbHBBYY5W7IzoZfduaOsJaXVoG

rYCsoYlH2JHwIu3hXelzCLPfZhdBpvcgWIsQCnVy2n4y2WLRif0R+zPPZ4ZIz0qT

en7C+vmvtP9SrpI9eIPUC3VAcTKxftvzyOHqBIB0+BzDa8lk0b4MMmaJkzt7Uq1k

EJmfIFBAfER1wHb2vVPKd5/zi3h55T3D366M8yLx9Q==

-----END CERTIFICATE-----

ssl: true

rgw_frontend_port: 8443

EOF

root@ceph-1:/etc/ceph/rgw# ceph orch apply -i rgw.yaml

Scheduled rgw.default update...

root@ceph-1:/etc/ceph/rgw# ceph orch ps | grep rgw

rgw.default.ceph-1.rsdmtv ceph-1 *:9443 running (29s) 23s ago 29s 87.6M - 18.2.4 2bc0b0f4375d c4d059ef4eeb

rgw.default.ceph-2.hyhuzv ceph-2 *:9443 running (29s) 13s ago 29s 88.8M - 18.2.4 2bc0b0f4375d ff7f86383ba1

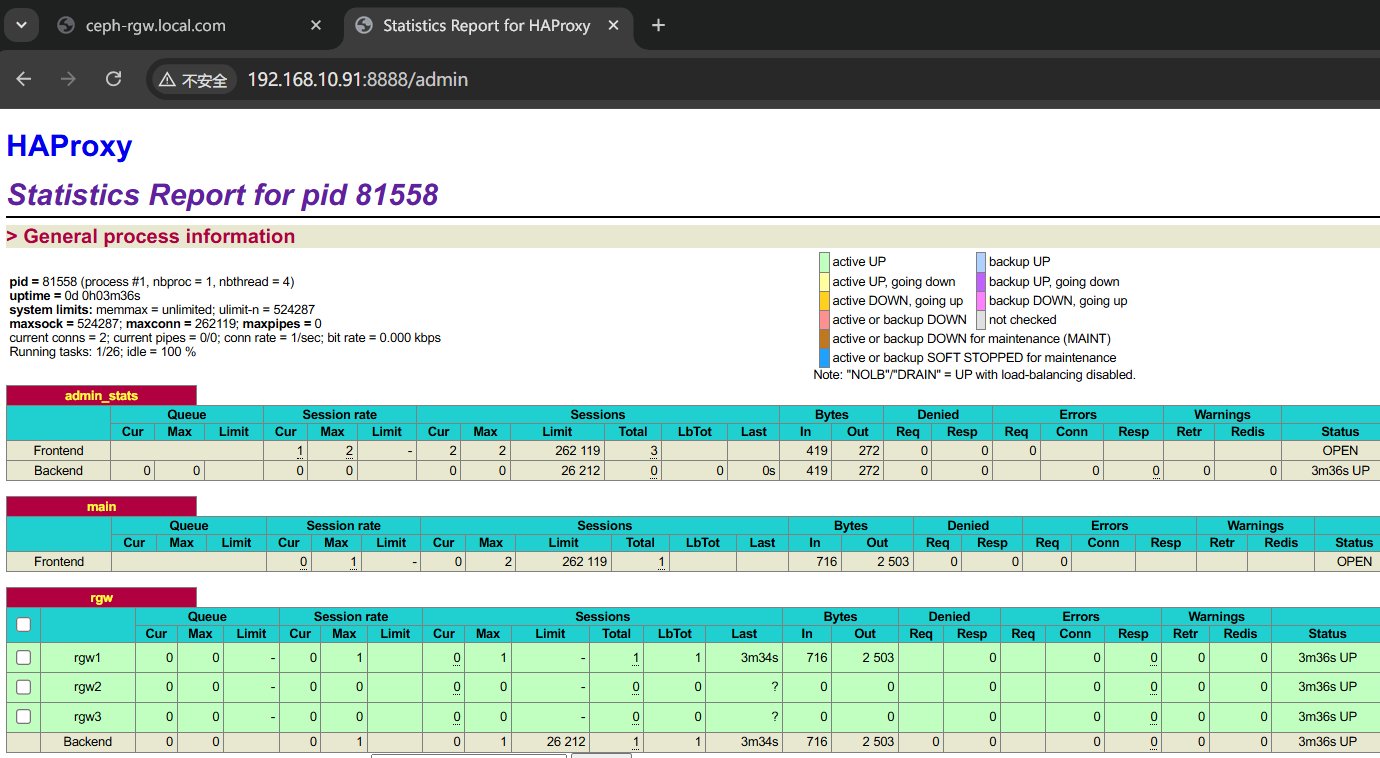

rgw.default.ceph-3.wnnkpd ceph-3 *:9443 running (30s) 24s ago 30s 88.4M - 18.2.4 2bc0b0f4375d 3d198beac816 6.2HaProxy部署

以下操作在 ceph1、2、3 机器执行

安装haproxy

root@ceph-1:~# apt install haproxy -y#修改配置文件

root@ceph-1:~# cat > /etc/haproxy/haproxy.cfg << EOF

# 开启管理员监控页面

listen admin_stats

bind *:8888 # 监听的IP和端口号

mode http # 开启HTTP模式,stats功能需要

stats enable

stats refresh 30s # 统计页面自动刷新时间

stats uri /admin # 访问的uri ip:8888/admin

stats realm haproxy

stats auth admin:admin # 认证用户名和密码

stats hide-version # 隐藏HAProxy的版本号

stats admin if TRUE # 管理界面,如果认证成功了,可通过webui管理节点

timeout client 5s # 客户端超时

timeout connect 3s # 连接超时

timeout server 5s # 后端服务器超时

# 配置前端监听

frontend main

# 监听地址

bind *:443

# 匹配后端服务

default_backend rgw

# 客户端超时

timeout client 5s

# 配置后端代理

backend rgw

# 连接超时

timeout connect 3s

# 后端服务器超时

timeout server 5s

server rgw1 192.168.10.91:9443 check

server rgw2 192.168.10.92:9443 check

server rgw3 192.168.10.93:9443 check

EOF6.3启动服务

root@ceph-1:~# systemctl start haproxy

root@ceph-1:~# systemctl enable haproxy

Synchronizing state of haproxy.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable haproxy

root@ceph-1:~# ss -tunlp | grep haproxy

tcp LISTEN 0 4096 0.0.0.0:443 0.0.0.0:* users:(("haproxy",pid=61839,fd=7))

tcp LISTEN 0 4096 0.0.0.0:8888 0.0.0.0:* users:(("haproxy",pid=61839,fd=6))确认无误后 ceph-2 和 ceph-3 服务器同样的步骤配置。

七、KeepAlived部署

以下操作在ceph-1、2、3 机器执行,设备网卡名称为ens33,VIP为192.168.10.90。

安装软件包

root@ceph-1:~# apt install keepalived -y新增haproxy检测脚本

root@ceph-1:~# vim /etc/keepalived/check_port.sh

#!/bin/bash

# 检查指定端口是否正常

PORT=443

if netstat -tuln | grep -q ":${PORT}\b"; then

echo "${PORT}端口正常: 服务正在监听"

exit 0

else

echo "${PORT}端口异常: 未发现监听服务"

exit 1

fi

root@ceph-1:~# chmod u+x /etc/keepalived/check_port.sh修改配置文件

root@ceph-1:/etc/keepalived# cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

script_user root

enable_script_security

}

vrrp_script chk_port {

script "/etc/keepalived/check_port.sh" # 自定义检测脚本路径

interval 1 # 检测间隔,单位为秒

weight -2 # 如果检测失败,权重降低2

}

vrrp_instance VI_1 {

state MASTER # 设置为master节点

interface ens33 # 物理网卡名称

virtual_router_id 51 # 虚拟路由ID,主备保持一致

priority 100 # 优先级,主大于备

advert_int 1 # 关播间隔

authentication { # 认证信息,主备一致

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.90/24 # 虚拟IP信息

}

track_script {

chk_port # 引用上面定义的脚本

}

}

EOF启动服务

root@ceph-1:/etc/keepalived# systemctl start keepalived.service

root@ceph-1:/etc/keepalived# systemctl enable keepalived.service

Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable keepalived

root@ceph-1:/etc/keepalived# ip a | grep 192.168.10.90

inet 192.168.10.90/24 scope global secondary ens33此时可以看到vip 192.168.10.90绑定到了 ceph-1服务器ens33 网卡上。

同样的操作配置 ceph-2服务器,配置文件如下:

global_defs {

script_user root

enable_script_security

}

vrrp_script chk_port {

script "/etc/keepalived/check.sh"

interval 2

weight -2

}

vrrp_instance VI_1 {

state BACKUP # 主备类型

interface ens33

virtual_router_id 51

priority 99 # 优先级低于主

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.90/24

}

track_script {

chk_port # 引用上面定义的脚本

}

}ceph-3 配置如下

global_defs {

script_user root

enable_script_security

}

vrrp_script chk_port {

script "/etc/keepalived/check.sh"

interval 2

weight -2

}

vrrp_instance VI_1 {

state BACKUP # 主备类型

interface ens33

virtual_router_id 51

priority 98 # 优先级低于主

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.90/24

}

track_script {

chk_port # 引用上面定义的脚本

}

}八、高可用测试

接下来停止 ceph-1服务,模拟异常故障,查看 ceph-2服务器,vip已经成功飘移过来

root@ceph-1:~# systemctl stop haproxy.service

root@ceph-2:~# ip a | grep 192.168.10.90

inet 192.168.10.90/24 scope global secondary ens33访问vip的 443 端口,可正常提供服务

评论 (0)