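Trace Data Collection and Export

1. Trace data collection options

When applications run in Kubernetes, there are two common ways to collect trace data:

(1) Automatic injection through the OpenTelemetry Operator (auto-instrumentation)

Deploy the OpenTelemetry Operator and create an Instrumentation custom resource. The Operator then injects the SDK or a sidecar into application containers automatically, so trace data is collected without changing application code. This suits cases that call for fast onboarding, centralized management, and low migration cost.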
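As a rough illustration of the auto-injection flow, the sketch below shows an Instrumentation resource and the pod annotation that opts a workload in. It is not taken from the original setup: the resource name `demo-instrumentation`, the choice of Java, and the sampling settings are assumptions, and the right endpoint port (4317 for gRPC, 4318 for HTTP) depends on the protocol the injected agent uses.

```yaml
# Hypothetical Instrumentation resource; names and values are illustrative.
apiVersion: opentelemetry.io/v1alpha1
kind: Instrumentation
metadata:
  name: demo-instrumentation        # assumed name
  namespace: opentelemetry
spec:
  exporter:
    # Collector address used elsewhere in this article; adjust the port to the agent's protocol.
    endpoint: http://center-collector.opentelemetry.svc:4318
  propagators:
    - tracecontext
    - baggage
  sampler:
    type: parentbased_traceidratio
    argument: "1"                   # sample every trace (assumed, for a demo)
---
# Fragment of a workload's pod template that opts in to Java auto-instrumentation.
# The value points at the Instrumentation resource as <namespace>/<name>.
metadata:
  annotations:
    instrumentation.opentelemetry.io/inject-java: "opentelemetry/demo-instrumentation"
```

Other languages use their own annotation suffix (for example `inject-python`), so the annotation has to match the application's runtime.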

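(2) Integrating the OpenTelemetry SDK into the application by hand (manual instrumentation)

Import the OpenTelemetry SDK directly in application code, instrument the key business logic by hand, control the granularity and content of each span, and export the data over OTLP (OpenTelemetry Protocol) to a backend such as the OpenTelemetry Collector, Jaeger, or Tempo. This suits cases that need precise control over trace data quality or already have custom collection requirements.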
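With manual instrumentation, the export target is usually supplied through the standard OpenTelemetry SDK environment variables rather than hard-coded. The container `env` fragment below is a minimal sketch under that assumption; the service name and sampling ratio are hypothetical, and support for individual variables varies by language SDK.

```yaml
# Container-level env fragment for an application that embeds the OpenTelemetry SDK.
env:
  - name: OTEL_SERVICE_NAME
    value: my-service                                       # hypothetical service name
  - name: OTEL_EXPORTER_OTLP_ENDPOINT
    value: http://center-collector.opentelemetry.svc:4318   # Collector OTLP/HTTP port
  - name: OTEL_EXPORTER_OTLP_PROTOCOL
    value: http/protobuf
  - name: OTEL_TRACES_SAMPLER
    value: parentbased_traceidratio
  - name: OTEL_TRACES_SAMPLER_ARG
    value: "0.1"                                            # assumed: keep about 10% of traces
```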

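The following sections use the Instrumentation Operator auto-injection approach to show how trace data is collected and processed.

2. Deploy the test application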

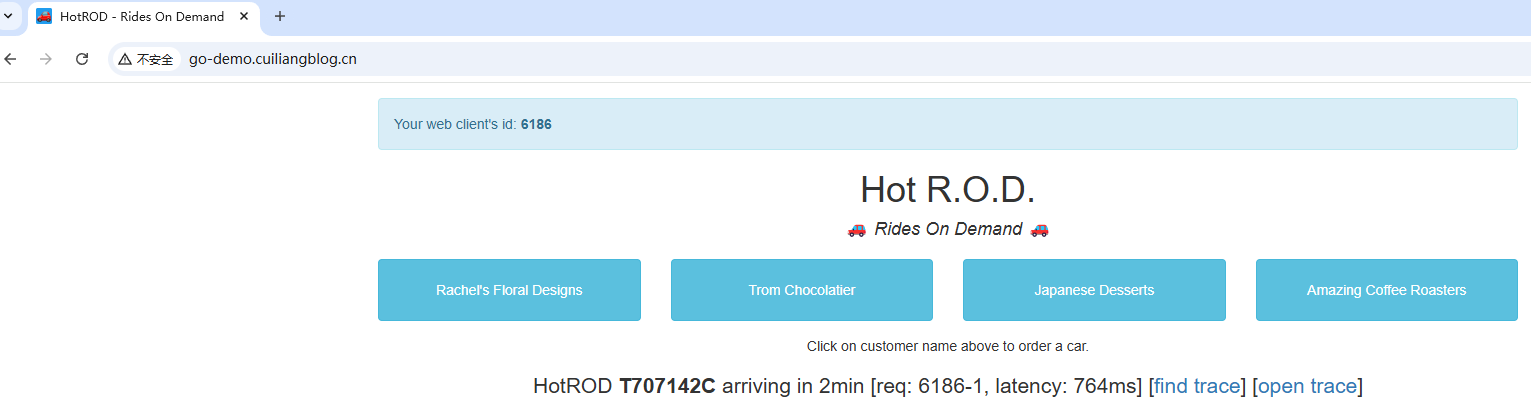

Next, deploy the HotROD demo application. It ships with the OpenTelemetry SDK built in, so only the OpenTelemetry receiver address needs to be configured. For details, see:

https://github.com/jaegertracing/jaeger/tree/main/examples/hotrod

apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo
spec:
  selector:
    matchLabels:
      app: go-demo
  template:
    metadata:
      labels:
        app: go-demo
    spec:
      containers:
        - name: go-demo
          image: jaegertracing/example-hotrod:latest
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              memory: "500Mi"
              cpu: "200m"
          ports:
            - containerPort: 8080
          env:
            - name: OTEL_EXPORTER_OTLP_ENDPOINT # OpenTelemetry Collector address
              value: http://center-collector.opentelemetry.svc:4318
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo
spec:
  selector:
    app: go-demo
  ports:
    - port: 8080
      targetPort: 8080
---
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: go-demo
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`go-demo.cuiliangblog.cn`)
      kind: Rule
      services:
        - name: go-demo
          port: 8080

Then add a hosts entry for the domain and open it in a browser to test.

3. Jaeger

3.1 Jaeger overview

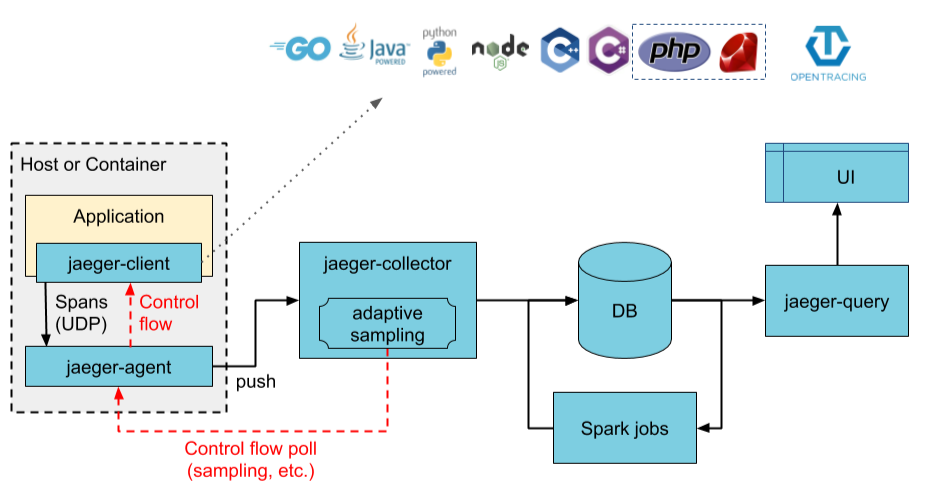

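Jaeger is a distributed tracing system developed at Uber and later donated to the CNCF. It is used mainly for tracing microservices. Its strengths are high performance (it handles large volumes of trace data), flexible deployment (single-node or distributed), easy integration (compatible with OpenTelemetry), and strong visualization, which helps locate performance bottlenecks and failures quickly.

Based on the diagram above, here is a brief look at the Jaeger components and how they relate:

Client libraries
Function: add tracing information (traces and spans) to the application.
Notes:
- Support many languages, such as Go, Java, Python, and Node.js.
- Usually the OpenTelemetry SDK or a Jaeger tracer.
- Send the generated trace data to the Agent or the Collector.

Agent
Function: receive trace data from clients and forward it to the Collector in batches.
Notes:
- Receives UDP packets, which keeps the client side lightweight.
- Sends data to the Collector over gRPC.

Collector
Function:
- Receives trace data from the Agent or directly from the SDK.
- Processes it (transcoding, validation, and so on) and writes it to the storage backend.
- Scales horizontally to raise throughput.

Ingester (optional)
Function: when Kafka is used as an intermediate buffer, the Ingester consumes data from Kafka and writes it to storage.
Purpose: decouple collection from storage and improve stability.

Storage backend
Function: store trace data for querying and analysis.
Supported options:
- Elasticsearch
- Cassandra
- Kafka (as a buffer for asynchronous ingestion, not a queryable store)
- Badger (development only)
- OpenSearch

Query
Function: read trace data from storage and serve it to the UI.
Exposes an API for the UI and for other systems (such as Grafana).

UI
Function:
- Visualizes traces, spans, and the service dependency graph.
- Supports search by service name, time range, trace ID, and so on.
Common uses:
- Finding slow requests
- Analyzing request call chains
- Tracking down errors or bottlenecks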

In this example, trace data is produced and collected by OpenTelemetry. Jaeger only needs the jaeger-collector component to receive the data and write it to Elasticsearch, and the jaeger-query component to query and display it.

3.2 Deploy Jaeger (all-in-one)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jaeger
  namespace: opentelemetry
  labels:
    app: jaeger
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jaeger
  template:
    metadata:
      labels:
        app: jaeger
    spec:
      containers:
        - name: jaeger
          image: jaegertracing/all-in-one:latest
          args:
            - "--collector.otlp.enabled=true" # enable the OTLP receiver
            - "--collector.otlp.grpc.host-port=0.0.0.0:4317"
          resources:
            limits:
              memory: "2Gi"
              cpu: "1"
          ports:
            - containerPort: 6831
              protocol: UDP
            - containerPort: 16686
              protocol: TCP
            - containerPort: 4317
              protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: jaeger
  namespace: opentelemetry
  labels:
    app: jaeger
spec:
  selector:
    app: jaeger
  ports:
    - name: jaeger-udp
      port: 6831
      targetPort: 6831
      protocol: UDP
    - name: jaeger-ui
      port: 16686
      targetPort: 16686
      protocol: TCP
    - name: otlp-grpc
      port: 4317
      targetPort: 4317
      protocol: TCP
---
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: jaeger
  namespace: opentelemetry
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`jaeger.cuiliangblog.cn`)
      kind: Rule
      services:
        - name: jaeger
          port: 16686

3.3 Deploy Jaeger (distributed)

The all-in-one deployment keeps data in memory and is not highly available. For production, Elasticsearch or OpenSearch is recommended over Cassandra as the storage backend. This section uses Elasticsearch; for deploying it, see: https://www.cuiliangblog.cn/detail/section/162609409

Export the CA certificate

# kubectl -n elasticsearch get secret elasticsearch-es-http-certs-public -o go-template='{{index .data "ca.crt" | base64decode }}' > ca.crt
# kubectl create secret -n opentelemetry generic es-tls-secret --from-file=ca.crt=./ca.crt
secret/es-tls-secret created

Get the chart

# helm repo add jaegertracing https://jaegertracing.github.io/helm-charts
"jaegertracing" has been added to your repositories
# helm search repo jaegertracing
NAME                           CHART VERSION  APP VERSION  DESCRIPTION
jaegertracing/jaeger           3.4.1          1.53.0       A Jaeger Helm chart for Kubernetes
jaegertracing/jaeger-operator  2.57.0         1.61.0       jaeger-operator Helm chart for Kubernetes
# helm pull jaegertracing/jaeger --untar
# cd jaeger
# ls
Chart.lock  charts  Chart.yaml  README.md  templates  values.yaml

Modify the installation parameters

The rendered manifest after the changes (applied below as test.yaml) is:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: jaeger-collector
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: collector
automountServiceAccountToken: false
---
# Source: jaeger/templates/query-sa.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jaeger-query
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: query
automountServiceAccountToken: false
---
# Source: jaeger/templates/spark-sa.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jaeger-spark
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: spark
automountServiceAccountToken: false

---

# Source: jaeger/templates/collector-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: jaeger-collector
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: collector
spec:
  ports:
    - name: grpc
      port: 14250
      protocol: TCP
      targetPort: grpc
      appProtocol: grpc
    - name: http
      port: 14268
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: otlp-grpc
      port: 4317
      protocol: TCP
      targetPort: otlp-grpc
    - name: otlp-http
      port: 4318
      protocol: TCP
      targetPort: otlp-http
    - name: admin
      port: 14269
      targetPort: admin
  selector:
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/component: collector
  type: ClusterIP
---
# Source: jaeger/templates/query-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: jaeger-query
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: query
spec:
  ports:
    - name: query
      port: 80
      protocol: TCP
      targetPort: query
    - name: grpc
      port: 16685
      protocol: TCP
      targetPort: grpc
    - name: admin
      port: 16687
      protocol: TCP
      targetPort: admin
  selector:
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/component: query
  type: ClusterIP

---

# Source: jaeger/templates/collector-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jaeger-collector
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: collector
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: jaeger
      app.kubernetes.io/instance: jaeger
      app.kubernetes.io/component: collector
  template:
    metadata:
      annotations:
        checksum/config-env: 75a11da44c802486bc6f65640aa48a730f0f684c5c07a42ba3cd1735eb3fb070
      labels:
        app.kubernetes.io/name: jaeger
        app.kubernetes.io/instance: jaeger
        app.kubernetes.io/component: collector
    spec:
      securityContext: {}
      serviceAccountName: jaeger-collector
      containers:
        - name: jaeger-collector
          securityContext: {}
          image: registry.cn-guangzhou.aliyuncs.com/xingcangku/jaeger-collector:1.53.0
          imagePullPolicy: IfNotPresent
          args:
          env:
            - name: COLLECTOR_OTLP_ENABLED
              value: "true"
            - name: SPAN_STORAGE_TYPE
              value: elasticsearch
            - name: ES_SERVER_URLS
              value: https://elasticsearch-client.elasticsearch.svc:9200
            - name: ES_TLS_SKIP_HOST_VERIFY # added to skip hostname verification temporarily
              value: "true"
            - name: ES_USERNAME
              value: elastic
            - name: ES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: jaeger-elasticsearch
                  key: password
            - name: ES_TLS_ENABLED
              value: "true"
            - name: ES_TLS_CA
              value: /es-tls/ca.crt
          ports:
            - containerPort: 14250
              name: grpc
              protocol: TCP
            - containerPort: 14268
              name: http
              protocol: TCP
            - containerPort: 14269
              name: admin
              protocol: TCP
            - containerPort: 4317
              name: otlp-grpc
              protocol: TCP
            - containerPort: 4318
              name: otlp-http
              protocol: TCP
          readinessProbe:
            httpGet:
              path: /
              port: admin
          livenessProbe:
            httpGet:
              path: /
              port: admin
          resources: {}
          volumeMounts:
            - name: es-tls-secret
              mountPath: /es-tls/ca.crt
              subPath: ca.crt # must match the key stored in es-tls-secret
              readOnly: true
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      volumes:
        - name: es-tls-secret
          secret:
            secretName: es-tls-secret

---

# Source: jaeger/templates/query-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jaeger-query
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: query
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: jaeger
      app.kubernetes.io/instance: jaeger
      app.kubernetes.io/component: query
  template:
    metadata:
      labels:
        app.kubernetes.io/name: jaeger
        app.kubernetes.io/instance: jaeger
        app.kubernetes.io/component: query
    spec:
      securityContext: {}
      serviceAccountName: jaeger-query
      containers:
        - name: jaeger-query
          securityContext: {}
          image: registry.cn-guangzhou.aliyuncs.com/xingcangku/jaegertracing-jaeger-query:1.53.0
          imagePullPolicy: IfNotPresent
          args:
          env:
            - name: SPAN_STORAGE_TYPE
              value: elasticsearch
            - name: ES_SERVER_URLS
              value: https://elasticsearch-client.elasticsearch.svc:9200
            - name: ES_TLS_SKIP_HOST_VERIFY # added to skip hostname verification temporarily
              value: "true"
            - name: ES_USERNAME
              value: elastic
            - name: ES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: jaeger-elasticsearch
                  key: password
            - name: ES_TLS_ENABLED
              value: "true"
            - name: ES_TLS_CA
              value: /es-tls/ca.crt
            - name: QUERY_BASE_PATH
              value: "/"
            - name: JAEGER_AGENT_PORT
              value: "6831"
          ports:
            - name: query
              containerPort: 16686
              protocol: TCP
            - name: grpc
              containerPort: 16685
              protocol: TCP
            - name: admin
              containerPort: 16687
              protocol: TCP
          resources: {}
          volumeMounts:
            - name: es-tls-secret
              mountPath: /es-tls/ca.crt
              subPath: ca.crt # must match the key stored in es-tls-secret
              readOnly: true
          livenessProbe:
            httpGet:
              path: /
              port: admin
          readinessProbe:
            httpGet:
              path: /
              port: admin
        - name: jaeger-agent-sidecar
          securityContext: {}
          image: registry.cn-guangzhou.aliyuncs.com/xingcangku/jaegertracing-jaeger-agent:1.53.0
          imagePullPolicy: IfNotPresent
          args:
          env:
            - name: REPORTER_GRPC_HOST_PORT
              value: jaeger-collector:14250
          ports:
            - name: admin
              containerPort: 14271
              protocol: TCP
          resources: null
          volumeMounts:
          livenessProbe:
            httpGet:
              path: /
              port: admin
          readinessProbe:
            httpGet:
              path: /
              port: admin
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      volumes:
        - name: es-tls-secret
          secret:
            secretName: es-tls-secret

---

# Source: jaeger/templates/spark-cronjob.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: jaeger-spark
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: spark
spec:
  schedule: "49 23 * * *"
  successfulJobsHistoryLimit: 5
  failedJobsHistoryLimit: 5
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        metadata:
          labels:
            app.kubernetes.io/name: jaeger
            app.kubernetes.io/instance: jaeger
            app.kubernetes.io/component: spark
        spec:
          serviceAccountName: jaeger-spark
          securityContext: {}
          containers:
            - name: jaeger-spark
              image: registry.cn-guangzhou.aliyuncs.com/xingcangku/jaegertracing-spark-dependencies:latest
              imagePullPolicy: IfNotPresent
              args:
              env:
                - name: STORAGE
                  value: elasticsearch
                - name: ES_SERVER_URLS
                  value: https://elasticsearch-client.elasticsearch.svc:9200
                - name: ES_USERNAME
                  value: elastic
                - name: ES_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: jaeger-elasticsearch
                      key: password
                - name: ES_TLS_ENABLED
                  value: "true"
                - name: ES_TLS_CA
                  value: /es-tls/ca.crt
                - name: ES_NODES
                  value: https://elasticsearch-client.elasticsearch.svc:9200
                - name: ES_NODES_WAN_ONLY
                  value: "false"
              resources: {}
              volumeMounts:
              securityContext: {}
          restartPolicy: OnFailure
          volumes:

---

# Source: jaeger/templates/elasticsearch-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: jaeger-elasticsearch
  labels:
    helm.sh/chart: jaeger-3.4.1
    app.kubernetes.io/name: jaeger
    app.kubernetes.io/instance: jaeger
    app.kubernetes.io/version: "1.53.0"
    app.kubernetes.io/managed-by: Helm
  annotations:
    "helm.sh/hook": pre-install,pre-upgrade
    "helm.sh/hook-weight": "-1"
    "helm.sh/hook-delete-policy": before-hook-creation
    "helm.sh/resource-policy": keep
type: Opaque
data:
  password: "ZWdvbjY2Ng=="

Install Jaeger

root@k8s01:~/helm/jaeger/jaeger# kubectl delete -n opentelemetry -f test.yaml
serviceaccount "jaeger-collector" deleted
serviceaccount "jaeger-query" deleted
serviceaccount "jaeger-spark" deleted
service "jaeger-collector" deleted
service "jaeger-query" deleted
deployment.apps "jaeger-collector" deleted
deployment.apps "jaeger-query" deleted
cronjob.batch "jaeger-spark" deleted
secret "jaeger-elasticsearch" deleted
root@k8s01:~/helm/jaeger/jaeger# vi test.yaml
root@k8s01:~/helm/jaeger/jaeger# kubectl apply -n opentelemetry -f test.yaml
serviceaccount/jaeger-collector created
serviceaccount/jaeger-query created
serviceaccount/jaeger-spark created
service/jaeger-collector created
service/jaeger-query created
deployment.apps/jaeger-collector created
deployment.apps/jaeger-query created
cronjob.batch/jaeger-spark created
secret/jaeger-elasticsearch created
root@k8s01:~/helm/jaeger/jaeger# kubectl get pods -n opentelemetry -w
NAME                                READY   STATUS    RESTARTS       AGE
center-collector-78f7bbdf45-j798s   1/1     Running   2 (6h2m ago)   30h
jaeger-7989549bb9-hn8jh             1/1     Running   2 (6h2m ago)   25h
jaeger-collector-7f8fb4c946-nkg4m   1/1     Running   0              3s
jaeger-query-5cdb7b68bd-xpftn       2/2     Running   0              3s
^C
root@k8s01:~/helm/jaeger/jaeger# kubectl get svc -n opentelemetry | grep jaeger
jaeger             ClusterIP   10.100.251.219   <none>   6831/UDP,16686/TCP,4317/TCP                        25h
jaeger-collector   ClusterIP   10.111.17.41     <none>   14250/TCP,14268/TCP,4317/TCP,4318/TCP,14269/TCP    51s
jaeger-query       ClusterIP   10.98.118.118    <none>   80/TCP,16685/TCP,16687/TCP                         51s

Create the ingress resource

root@k8s01:~/helm/jaeger/jaeger# cat jaeger.yaml
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: jaeger
  namespace: opentelemetry
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`jaeger.axinga.cn`)
      kind: Rule
      services:
        - name: jaeger-query # query Service of the distributed deployment (port 80 -> 16686)
          port: 80

Then add a hosts entry for the domain and open it in a browser.

配置 Collector

apiVersion: opentelemetry.io/v1beta1

kind: OpenTelemetryCollector

# 元数据定义部分

metadata:

name: center # Collector 的名称为 center

namespace: opentelemetry

# 具体的配置内容

spec:

replicas: 1 # 设置副本数量为1

config: # 定义 Collector 配置

receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs)

otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器

protocols: # 启用哪些协议来接收数据

grpc:

endpoint: 0.0.0.0:4317 # 启用 gRPC 协议

http:

endpoint: 0.0.0.0:4318 # 启用 HTTP 协议

processors: # 处理器,用于处理收集到的数据

batch: {} # 批处理器,用于将数据分批发送,提高效率

exporters: # 导出器,用于将处理后的数据发送到后端系统

# debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试)

otlp: # 数据发送到jaeger的grpc端口

endpoint: "jaeger-collector:4317"

tls: # 跳过证书验证

insecure: true

service: # 服务配置部分

pipelines: # 定义处理管道

traces: # 定义 trace 类型的管道

receivers: [otlp] # 接收器为 OTLP

processors: [batch] # 使用批处理器

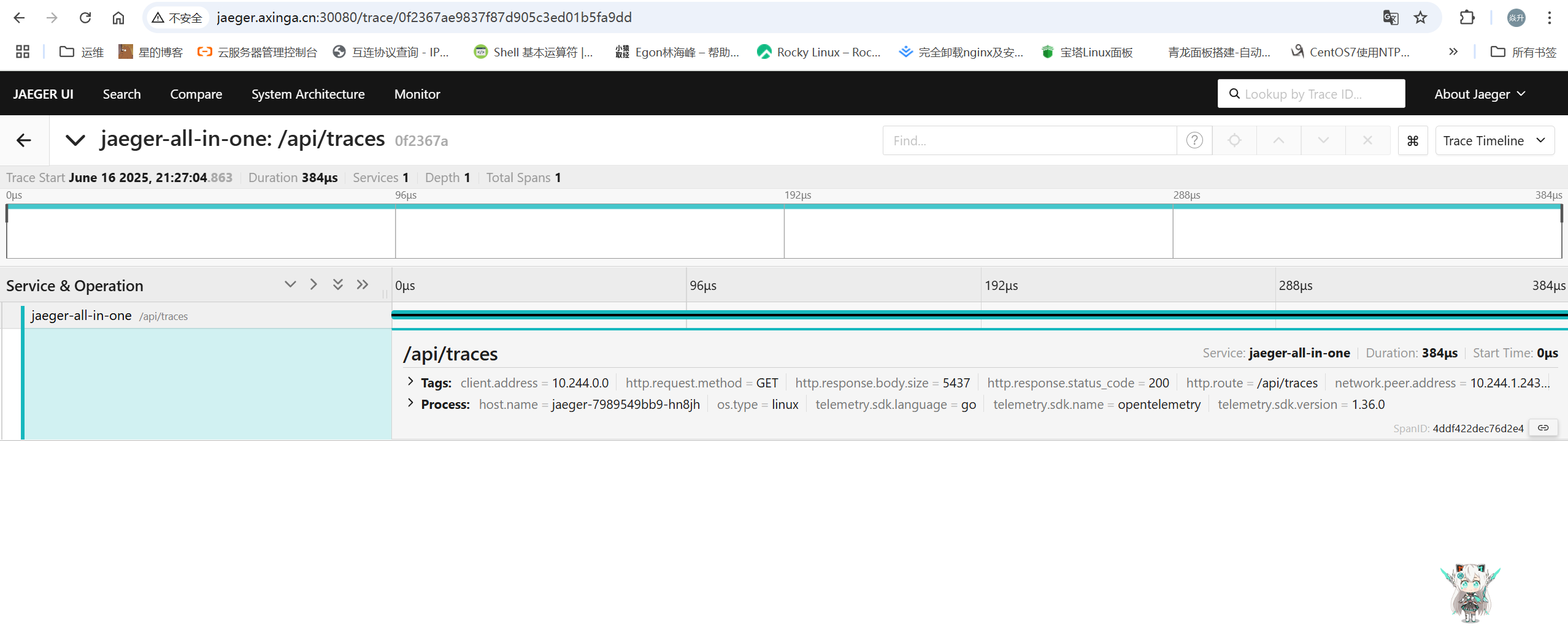

exporters: [otlp] # 将数据发送到otlp接下来我们随机访问 demo 应用,并在 jaeger 查看链路追踪数据。

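Jaeger finds a number of traces and shows metadata for each one, including the names of the services that took part in the trace and the number of spans each service sent to Jaeger.

For a walkthrough of using Jaeger, see https://medium.com/jaegertracing/take-jaeger-for-a-hotrod-ride-233cf43e46c2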

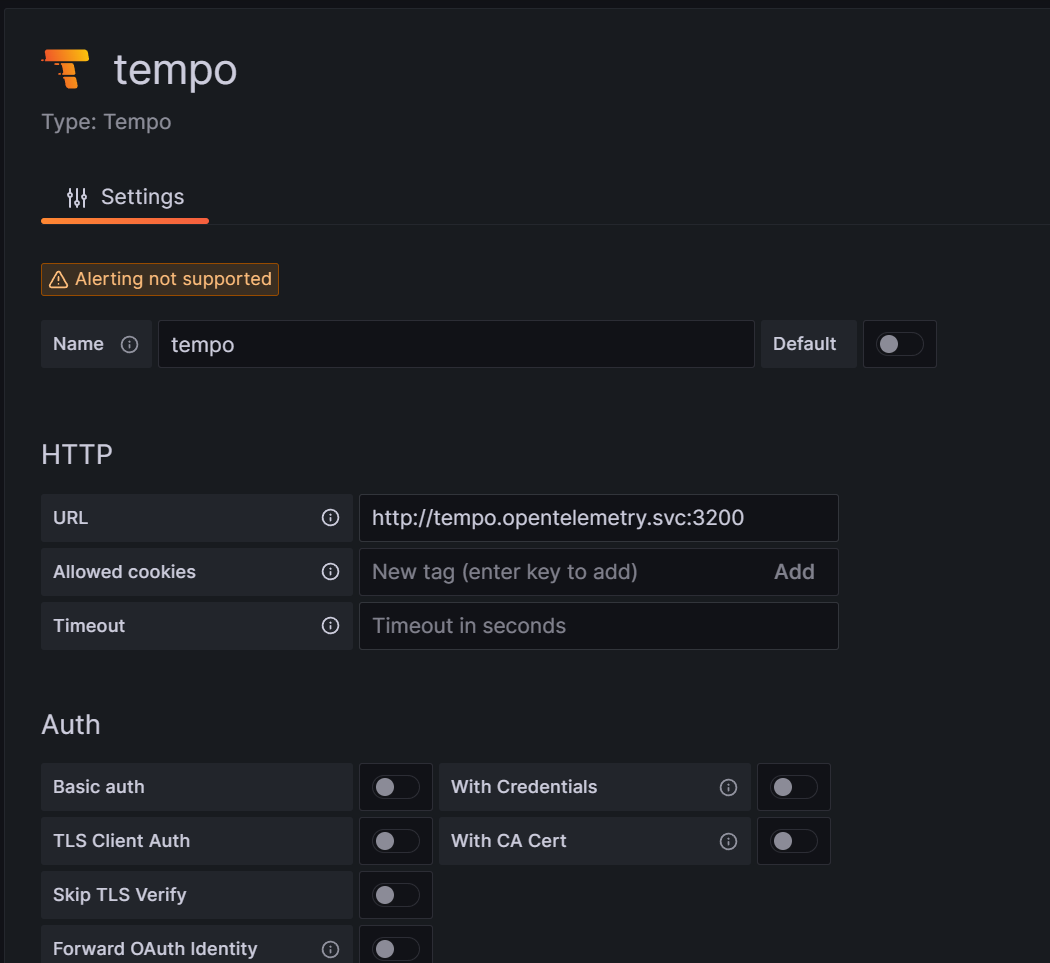
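If no traces show up in Jaeger, one way to check whether spans reach the Collector at all is to enable the debug exporter that is commented out in the Collector configuration above and add it to the trace pipeline. The fragment below is a sketch of the `spec.config` sections that would change; `verbosity: detailed` is an assumed setting chosen for troubleshooting and produces a lot of log output.

```yaml
# Fragment of spec.config in the OpenTelemetryCollector resource (troubleshooting sketch).
exporters:
  debug:
    verbosity: detailed          # assumed: print full span contents to the Collector log
  otlp:
    endpoint: "jaeger-collector:4317"
    tls:
      insecure: true
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [debug, otlp]   # spans go to the log and to Jaeger
```

Spans that appear in the Collector log but not in Jaeger point to the export path or to Jaeger's storage; spans missing from the log point to the application or its endpoint setting. The debug exporter is best removed again once the problem is found.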

4. Tempo

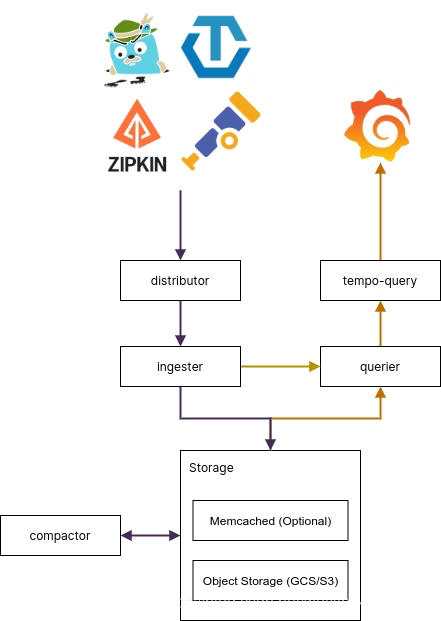

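4.1 Tempo overview

Grafana Tempo is an open-source, easy-to-use distributed tracing backend built for large scale. It is cost-effective because it needs only object storage to run, and it integrates closely with Grafana, Prometheus, and Loki. Tempo works with the common open-source tracing protocols, including Jaeger, Zipkin, and OpenTelemetry. It was designed around key/value lookup by trace ID and is meant to work together with logs and metrics (exemplars), which are used for discovery.

Distributors
Function: receive trace data from clients and perform initial validation.
Notes:
- Shard traces and process labels.
- Forward data to the appropriate Ingesters.

Ingesters
Function: process and persist trace data.
Notes:
- Receive data from the Distributors.
- Buffer it in memory until the trace is complete.
- Then write it to backend object storage.

Storage (object storage)
Function: persist trace data.
Notes:
- Supports several object stores (S3, GCS, MinIO, Azure Blob, and others).
- Tempo stores compressed, complete traces, indexed by trace ID.

Compactor
Function: merge trace data by compacting many small blocks into larger ones.
Notes:
- Can run as a separate container or process.
- Usually runs as a background task and takes no part in real-time ingest or query.

Query Frontend
Function: handle query requests from users or Grafana.
Notes:
- Receives query requests.
- Provides caching, merging, and scheduling to improve query performance.
- Forwards requests to the Queriers.

Querier
Function: retrieve trace data from storage.
Notes:
- Fetches the complete trace from object storage by trace ID.
- Decompresses it and returns structured span data.
- Returns the result for Grafana or another front end to display.

4.2 Deploy Tempo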

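Installing with Helm is recommended. Two charts are provided officially: tempo-distributed and tempo. In general, the tempo chart is used for local testing, while production can use Tempo's microservices deployment, tempo-distributed. This section uses the monolithic mode; for details, see https://github.com/grafana/helm-charts/tree/main/charts/tempo

Create the S3 bucket, access key, and secret key, and configure their permissions. See the MinIO section above for details.

4.2.1 Get the chart

# helm repo add grafana https://grafana.github.io/helm-charts
# helm pull grafana/tempo --untar
# cd tempo
# ls
Chart.yaml  README.md  README.md.gotmpl  templates  values.yaml

4.2.2 Modify the configuration

Prometheus does not accept remote writes by default. To enable this, see https://www.cuiliangblog.cn/detail/section/15189202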

# vim values.yaml
tempo:
  storage:
    trace: # local file storage is the default; switch to S3 object storage
      backend: s3
      s3:
        bucket: tempo # store traces in this bucket
        endpoint: minio-service.minio.svc:9000 # api endpoint
        access_key: zbsIQQnsp871ZnZ2AuKr # optional. access key when using static credentials.
        secret_key: zxL5EeXwU781M8inSBPcgY49mEbBVoR1lvFCX4JU # optional. secret key when using static credentials.
        insecure: true # connect over plain HTTP instead of HTTPS

4.2.3 Create Tempo

root@k8s01:~/helm/opentelemetry/tempo# cat test.yaml
---
# Source: tempo/templates/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tempo
  namespace: opentelemetry
  labels:
    helm.sh/chart: tempo-1.23.1
    app.kubernetes.io/name: tempo
    app.kubernetes.io/instance: tempo
    app.kubernetes.io/version: "2.8.0"
    app.kubernetes.io/managed-by: Helm
automountServiceAccountToken: true

---

# Source: tempo/templates/configmap-tempo.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tempo
  namespace: opentelemetry
  labels:
    helm.sh/chart: tempo-1.23.1
    app.kubernetes.io/name: tempo
    app.kubernetes.io/instance: tempo
    app.kubernetes.io/version: "2.8.0"
    app.kubernetes.io/managed-by: Helm
data:
  overrides.yaml: |
    overrides:
      {}
  tempo.yaml: |
    memberlist:
      cluster_label: "tempo.opentelemetry"
    multitenancy_enabled: false
    usage_report:
      reporting_enabled: true
    compactor:
      compaction:
        block_retention: 24h
    distributor:
      receivers:
        jaeger:
          protocols:
            grpc:
              endpoint: 0.0.0.0:14250
            thrift_binary:
              endpoint: 0.0.0.0:6832
            thrift_compact:
              endpoint: 0.0.0.0:6831
            thrift_http:
              endpoint: 0.0.0.0:14268
        otlp:
          protocols:
            grpc:
              endpoint: 0.0.0.0:4317
            http:
              endpoint: 0.0.0.0:4318
    ingester:
      {}
    server:
      http_listen_port: 3200
    storage:
      trace:
        backend: s3
        s3:
          access_key: admin
          bucket: tempo
          endpoint: minio-demo.minio.svc:9000
          secret_key: 8fGYikcyi4
          insecure: true
          #tls: false
        wal:
          path: /var/tempo/wal
    querier:
      {}
    query_frontend:
      {}
    overrides:
      defaults: {}
      per_tenant_override_config: /conf/overrides.yaml

---

# Source: tempo/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: tempo
  namespace: opentelemetry
  labels:
    helm.sh/chart: tempo-1.23.1
    app.kubernetes.io/name: tempo
    app.kubernetes.io/instance: tempo
    app.kubernetes.io/version: "2.8.0"
    app.kubernetes.io/managed-by: Helm
spec:
  type: ClusterIP
  ports:
    - name: tempo-jaeger-thrift-compact
      port: 6831
      protocol: UDP
      targetPort: 6831
    - name: tempo-jaeger-thrift-binary
      port: 6832
      protocol: UDP
      targetPort: 6832
    - name: tempo-prom-metrics
      port: 3200
      protocol: TCP
      targetPort: 3200
    - name: tempo-jaeger-thrift-http
      port: 14268
      protocol: TCP
      targetPort: 14268
    - name: grpc-tempo-jaeger
      port: 14250
      protocol: TCP
      targetPort: 14250
    - name: tempo-zipkin
      port: 9411
      protocol: TCP
      targetPort: 9411
    - name: tempo-otlp-legacy
      port: 55680
      protocol: TCP
      targetPort: 55680
    - name: tempo-otlp-http-legacy
      port: 55681
      protocol: TCP
      targetPort: 55681
    - name: grpc-tempo-otlp
      port: 4317
      protocol: TCP
      targetPort: 4317
    - name: tempo-otlp-http
      port: 4318
      protocol: TCP
      targetPort: 4318
    - name: tempo-opencensus
      port: 55678
      protocol: TCP
      targetPort: 55678
  selector:
    app.kubernetes.io/name: tempo
    app.kubernetes.io/instance: tempo

---

# Source: tempo/templates/statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: tempo
  namespace: opentelemetry
  labels:
    helm.sh/chart: tempo-1.23.1
    app.kubernetes.io/name: tempo
    app.kubernetes.io/instance: tempo
    app.kubernetes.io/version: "2.8.0"
    app.kubernetes.io/managed-by: Helm
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: tempo
      app.kubernetes.io/instance: tempo
  serviceName: tempo-headless
  template:
    metadata:
      labels:
        app.kubernetes.io/name: tempo
        app.kubernetes.io/instance: tempo
      annotations:
        checksum/config: 563d333fcd3b266c31add18d53e0fa1f5e6ed2e1588e6ed4c466a8227285129b
    spec:
      serviceAccountName: tempo
      automountServiceAccountToken: true
      containers:
        - args:
            - -config.file=/conf/tempo.yaml
            - -mem-ballast-size-mbs=1024
          image: registry.cn-guangzhou.aliyuncs.com/xingcangku/grafana-tempo-2.8.0:2.8.0
          imagePullPolicy: IfNotPresent
          name: tempo
          ports:
            - containerPort: 3200
              name: prom-metrics
            - containerPort: 6831
              name: jaeger-thrift-c
              protocol: UDP
            - containerPort: 6832
              name: jaeger-thrift-b
              protocol: UDP
            - containerPort: 14268
              name: jaeger-thrift-h
            - containerPort: 14250
              name: jaeger-grpc
            - containerPort: 9411
              name: zipkin
            - containerPort: 55680
              name: otlp-legacy
            - containerPort: 4317
              name: otlp-grpc
            - containerPort: 55681
              name: otlp-httplegacy
            - containerPort: 4318
              name: otlp-http
            - containerPort: 55678
              name: opencensus
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /ready
              port: 3200
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /ready
              port: 3200
            initialDelaySeconds: 20
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          resources: {}
          env:
          volumeMounts:
            - mountPath: /conf
              name: tempo-conf
      securityContext:
        fsGroup: 10001
        runAsGroup: 10001
        runAsNonRoot: true
        runAsUser: 10001
      volumes:
        - configMap:
            name: tempo
          name: tempo-conf
  updateStrategy:
    type: RollingUpdate

root@k8s01:~/helm/opentelemetry/tempo# kubectl get pod -n opentelemetry
NAME                                READY   STATUS    RESTARTS         AGE
center-collector-67dcddd7db-8hd98   1/1     Running   0                4h3m
tempo-0                             1/1     Running   35 (5h57m ago)   8d
root@k8s01:~/helm/opentelemetry/tempo# kubectl get svc -n opentelemetry | grep tempo
tempo   ClusterIP   10.105.249.189   <none>   6831/UDP,6832/UDP,3200/TCP,14268/TCP,14250/TCP,9411/TCP,55680/TCP,55681/TCP,4317/TCP,4318/TCP,55678/TCP   8d

4.2.4配置 Collector

#按之前上面的完整配置 下面可以参考

tempo 服务的otlp 数据接收端口分别为4317(grpc)和4318(http),修改OpenTelemetryCollector 配置,将数据发送到 tempo 的 otlp 接收端口。

apiVersion: opentelemetry.io/v1beta1

kind: OpenTelemetryCollector

# 元数据定义部分

metadata:

name: center # Collector 的名称为 center

namespace: opentelemetry

# 具体的配置内容

spec:

replicas: 1 # 设置副本数量为1

config: # 定义 Collector 配置

receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs)

otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器

protocols: # 启用哪些协议来接收数据

grpc:

endpoint: 0.0.0.0:4317 # 启用 gRPC 协议

http:

endpoint: 0.0.0.0:4318 # 启用 HTTP 协议

processors: # 处理器,用于处理收集到的数据

batch: {} # 批处理器,用于将数据分批发送,提高效率

exporters: # 导出器,用于将处理后的数据发送到后端系统

# debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试)

otlp: # 数据发送到tempo的grpc端口

endpoint: "tempo:4317"

tls: # 跳过证书验证

insecure: true

service: # 服务配置部分

pipelines: # 定义处理管道

traces: # 定义 trace 类型的管道

receivers: [otlp] # 接收器为 OTLP

processors: [batch] # 使用批处理器

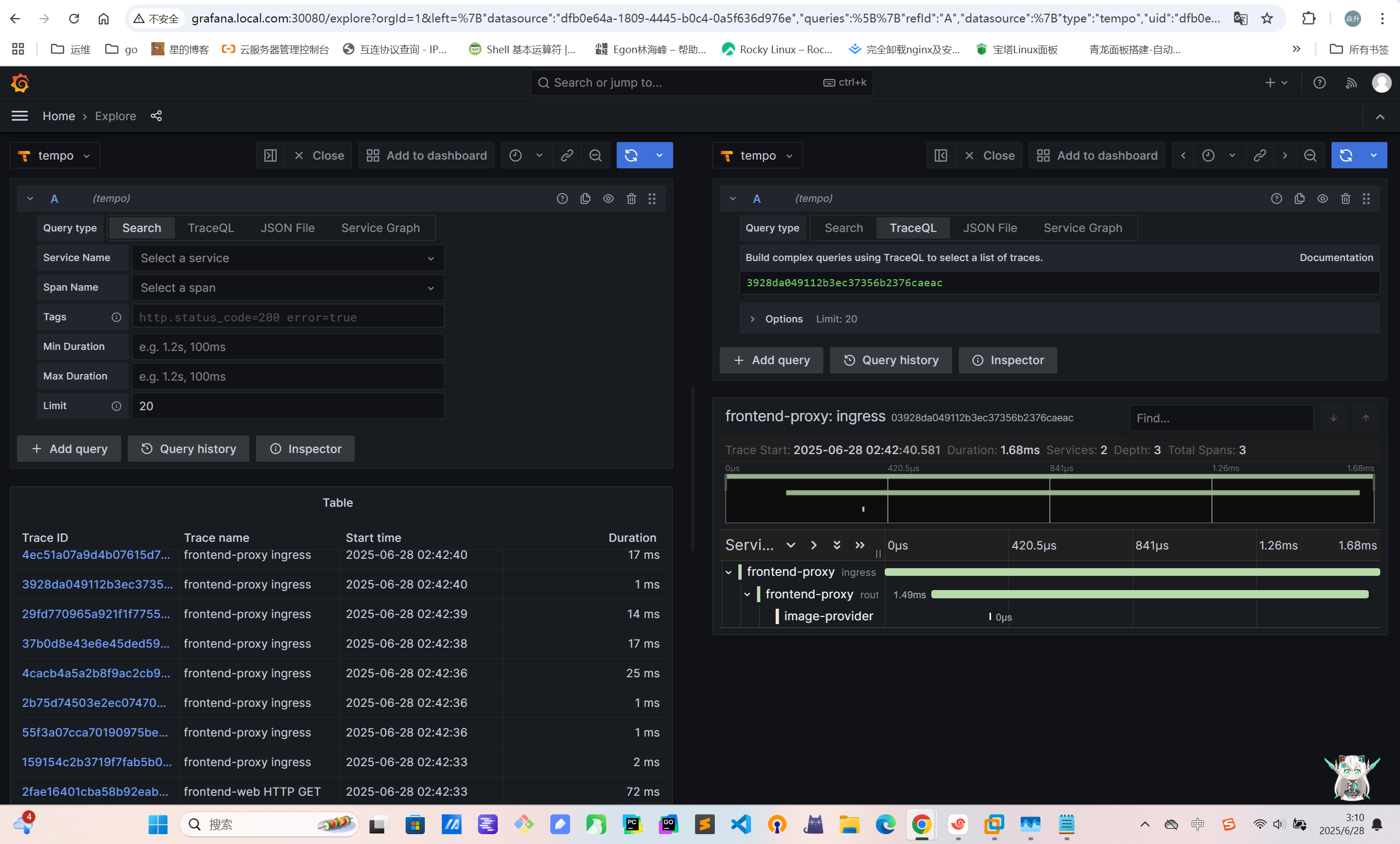

exporters: [otlp] # 将数据打印到OTLP4.2.5访问验证

4.2.6服务拓扑图配置

Tempo Metrics Generator 是 Grafana Tempo 提供的一个 可选组件,用于将 Trace(链路追踪数据)转换为 Metrics(指标数据),从而实现 Trace-to-Metrics(T2M) 的能力,默认情况下 tempo 并未启用该功能。4.2.6.1prometheus 开启remote-write-receiver 功能,关键配置如下:

# vim prometheus-prometheus.yaml

spec:

# enableFeatures:

enableFeatures: # 开启远程写入

- remote-write-receiver

externalLabels:

web.enable-remote-write-receiver: "true"

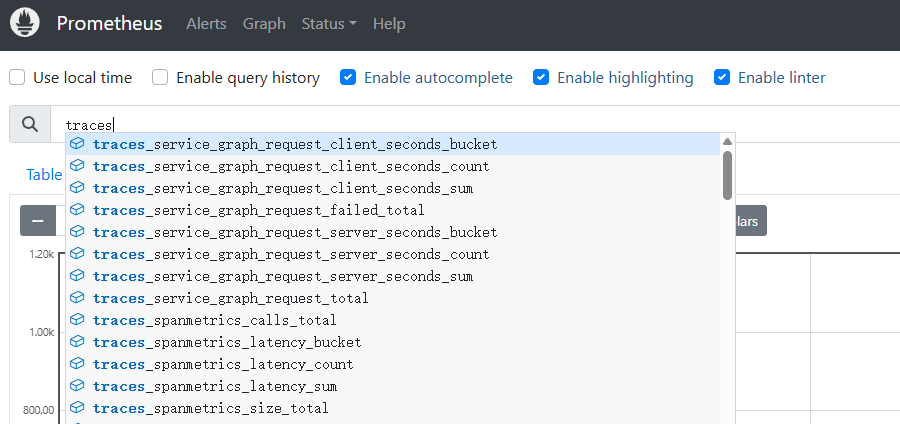

# kubectl apply -f prometheus-prometheus.yaml具体可参考文档:https://m.cuiliangblog.cn/detail/section/151892024.2.6.2tempo 开启metricsGenerator 功能,关键配置如下:

# vim values.yaml
global:
  per_tenant_override_config: /runtime-config/overrides.yaml
  metrics_generator_processors:
    - 'service-graphs'
    - 'span-metrics'
tempo:
  metricsGenerator:
    enabled: true # generate metrics from traces automatically; used for the service call graph
    remoteWriteUrl: "http://prometheus-k8s.monitoring.svc:9090/api/v1/write" # Prometheus address
  overrides: # enable metrics in the default (multi-tenant) overrides
    defaults:
      metrics_generator:
        processors:
          - service-graphs
          - span-metrics

4.2.6.3 Querying Prometheus now returns the trace-related metrics.

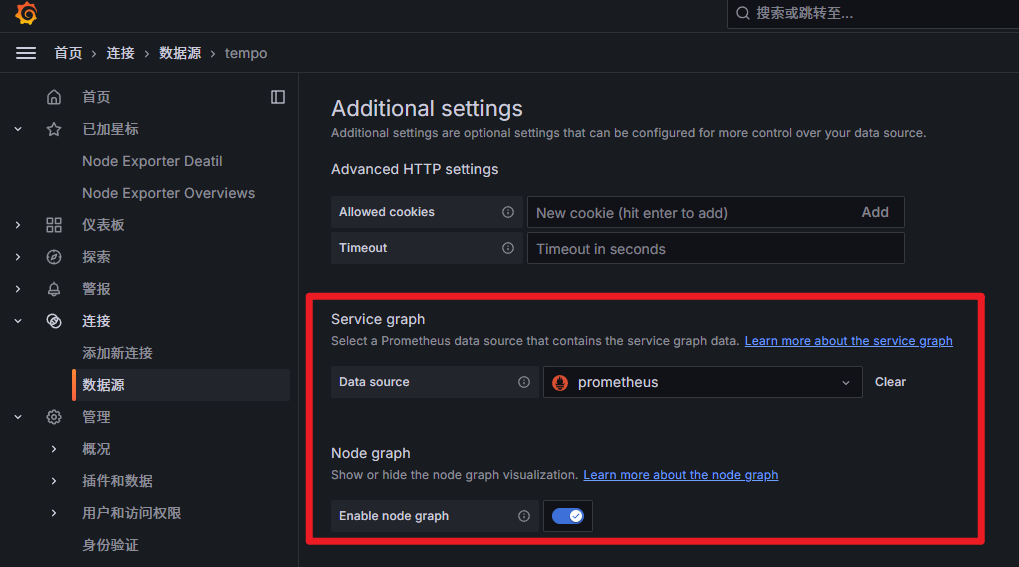

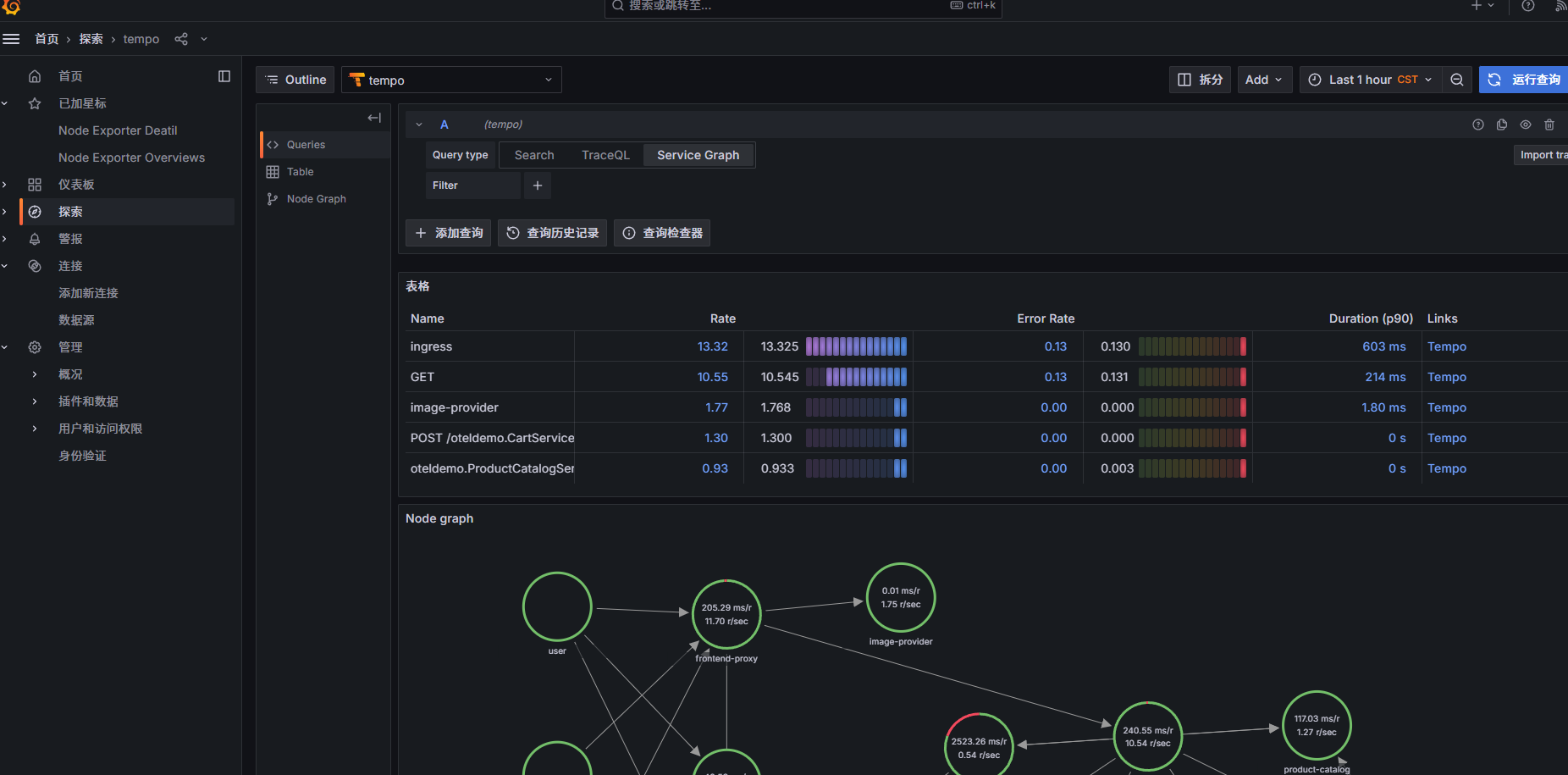

In Grafana, enable the node graph and the service graph on the Tempo data source.
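The original screenshot of these data source settings is not available here, so the following is only a sketch of how the same options might look as a Grafana provisioning file. The data source name, the Prometheus data source UID `prometheus`, and the Tempo URL (built from the Service name and HTTP port 3200 shown earlier) are assumptions to adapt to the actual environment.

```yaml
# Hypothetical Grafana data source provisioning file for Tempo.
apiVersion: 1
datasources:
  - name: Tempo
    type: tempo
    access: proxy
    url: http://tempo.opentelemetry.svc:3200   # Tempo HTTP API (assumed in-cluster address)
    jsonData:
      nodeGraph:
        enabled: true                          # show the node graph above the trace view
      serviceMap:
        datasourceUid: prometheus              # assumed UID of the Prometheus data source that receives the generated metrics
```

The service graph is drawn from the metrics that the metrics generator writes to Prometheus, which is why the Tempo data source needs a reference to a Prometheus data source.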

View the service graph data
