1. Create the resources

root@k8s-master-01:~# mkdir harbor

root@k8s-master-01:~# cd harbor/

root@k8s-master-01:~/harbor# ls

root@k8s-master-01:~/harbor# helm repo add harbor https://helm.goharbor.io

"harbor" has been added to your repositories

root@k8s-master-01:~/harbor# helm repo list

NAME URL

nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner

harbor https://helm.goharbor.io

root@k8s-master-01:~/harbor# helm pull harbor/harbor

root@k8s-master-01:~/harbor# ls

harbor-1.17.1.tgz

root@k8s-master-01:~/harbor# tar -zxvf harbor-1.17.1.tgz

root@k8s-master-01:~/harbor# ls

harbor harbor-1.17.1.tgz

kubectl create ns harbor

2. Render and modify the YAML files

helm template my-harbor ./test.yaml

---

# Source: harbor/templates/core/core-secret.yaml

apiVersion: v1

kind: Secret

metadata:

name: release-name-harbor-core

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

type: Opaque

data:

secretKey: "bm90LWEtc2VjdXJlLWtleQ=="

secret: "Z3NncnBQWURsQ01hUjZlWg=="

tls.key: "LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBMWdRMlJMbHJ6bUQzM0ZkT2RvanNHU1hUaVNhSXhkam1maW9VWmJVNDIzSFB2bnRwCjBuRi9Vcm40Vlk4SWtSZU4vbGFjQVBCVm1BQys0czBCYkxXSytrbC9wVUxlMFZHTUI0UElCMFF5YW5hNW9wQm0KY25sb3pEcVVOTTVpMlBVMnFXUEFHWG1BenBhTmpuZlBycnlRS0ZCY0ZkOTM4NW9GSmJCdlhmZ00ycS9pU09MOQp1dzdBUDd1UUFhQ0xjMzYvSlFtb1N0ZE9tNWZtYU9idDZ5ZW9JaU50ZXNUaXF1eVN3Y0k5RFZqdXk4SDhuamp5CnNOWTdWZlBwaXhSemw0K1EzZVgxNU5Lb0NOWm4yL0lNb2x4aUk3Q3FnQTkzSU1aSkFvVy9zMnhqSVp4aDFVZ3oKRW93YkNsOXdjT1ZRR2g4eUV5a0MzRTlKQjZrTjhkY2pKT3N2VlFJREFRQUJBb0lCQVFDWEN2LzEvdHNjRzRteQo0NWRIeHhqQ0l0VXBqWjJuN0kyMzZ5RGNLMHRHYlF1T1J2R0hpWHl2dVBxUC85T3UrdTNHMi85Y0ZrS0NkYnhDCnV5YlBQMDBubWFuUnkrRVAzN3F4THd1RVBWaExsU0VzbnpiK2diczVyL29iVHJHcXAxMTlyUjNObk5nUWRXYlEKYnJTUGdSdElxSFpsSllNMTFMVGZSYWREcmFYOHpDeG1ZSkwxc3JtMHBGNXk4Q1E0SG1Ka0ZtMG0rVlMxSFk4NAptazR3OGE3SGpoZWZJSE15Z3dUREluREZ0VVFlaDNyTkRYMzI3cmw5c0NBV1NMZnpaZDBuUGc2K2lZVTE0amxmCnA1Mi8yd1E2Y3lMSFY4am1QTDNoRUVIWXJlNVp6cFEzM0sybVFWVVpTR2c2SFRIOGszdWxQS2c1UXRJTmc1K3MKR3A0aS9EREJBb0dCQVBWMVVNSjFWZ3RKTENYM1pRQVArZTBVbEhLa2dWNnZhS2R3d2tpU0tjbTBWa1g0NUlIUwpFQ3ZhaTEzWHpsNkQ0S0tlbHFRUHY2VHc0TXBRcE4xSGwwd25PVnBDTW01dGc5bmF1WDhEdlNpcGJQaVE4anFMCnhSTzhaa2NJaW15ZXdXYVUxRFVqQ281Qk5DMWd4bUV4RnFjdjVVMlVQM1RzWllmQVFTMm1NWWR4QW9HQkFOODEKTmRsRnFDWnZZT0NYMm1ud3pxZzlyQ1Y4YWFHMnQ1WjVKUkZTL2gvL2dyZFZNd25VWHZ6cXVsRmM4dHM1Q0Y2eAoxSDJwVTJFTnpJYjBCM0dKNTBCZnJBUUxwdjdXRThjaFBzUTZpRW0yeTlmLzVIeThTdVJWblRCU295NktpRk13Ci9ycTE1blBXVVJzZkF6aFYvUVgvMCtvSFFxdFVkNnY4bFVtMWRsd2xBb0dBQlhmYm1MbHNkVXZvQStDREM0RlAKbkF4OVVpQ0FFVS92RU92ZUtDZTVicGpwNHgwc1dnZ0gvREllTUxVQ0QvRDRMQ2RFUzl0ZDlacTRKMG1zb3BGWgp1WVNXTG9DVEJ3ckJpVFRxTlA0c1ZKK1JvZWY0dlgwbm9zenJxbUZ5VkFFbFpkZWk4cHdaUEJvUHc0TUlhRm5qCm0wM2gyZHlYblU4MjQ5TlFvR2UzYXNFQ2dZQjdrdDcwSWg5YzRBN1hhTnJRQ2pTdmFpMXpOM1RYeGV2UUQ5UFkKeW9UTXZFM25KL0V3d1BXeHVsWmFrMFlVM25kbXpiY2h0dXZsY0psS0liSTVScXJUdGVQcS9YUi80NDloa0dOSwppa2xIM2o3dW44b2swSzM1eWZoVGQzekdXSVh1NE5JMkZseTJ4dkZ5UFhJdjcxTTh6Z3pKcFNsZzUwdTEyUW5oCm0rZ2lUUUtCZ0R3dHBnMjNkWEh2WFBtOEt4ZmhseE
lGOVB3SkF4TnF2SDNNT0NRMnJCYmE2cWhseWdSa2UrSVMKeXFUWFlmSjVISFdtYTZDZ1ZMOG9nSnA2emZiS2FvOFg5VSsyOUNuaUpvK2RsM1VtTVR6VDFXcFdlRS9xWTk2LwoySVFVQVR0U1EyU2Z6YUFaQm5JL29EK1FlcTBlOEszUXZnZlc3S2Y0bzdsSnlJUGRKS2NCCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg=="

tls.crt: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJRENDQWdpZ0F3SUJBZ0lSQUpMU3p5cTVFc05aQzV6TDZURmhpWFF3RFFZSktvWklodmNOQVFFTEJRQXcKR2pFWU1CWUdBMVVFQXhNUGFHRnlZbTl5TFhSdmEyVnVMV05oTUI0WERUSTFNRGN5TmpFME1UUXhNbG9YRFRJMgpNRGN5TmpFME1UUXhNbG93R2pFWU1CWUdBMVVFQXhNUGFHRnlZbTl5TFhSdmEyVnVMV05oTUlJQklqQU5CZ2txCmhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBMWdRMlJMbHJ6bUQzM0ZkT2RvanNHU1hUaVNhSXhkam0KZmlvVVpiVTQyM0hQdm50cDBuRi9Vcm40Vlk4SWtSZU4vbGFjQVBCVm1BQys0czBCYkxXSytrbC9wVUxlMFZHTQpCNFBJQjBReWFuYTVvcEJtY25sb3pEcVVOTTVpMlBVMnFXUEFHWG1BenBhTmpuZlBycnlRS0ZCY0ZkOTM4NW9GCkpiQnZYZmdNMnEvaVNPTDl1dzdBUDd1UUFhQ0xjMzYvSlFtb1N0ZE9tNWZtYU9idDZ5ZW9JaU50ZXNUaXF1eVMKd2NJOURWanV5OEg4bmpqeXNOWTdWZlBwaXhSemw0K1EzZVgxNU5Lb0NOWm4yL0lNb2x4aUk3Q3FnQTkzSU1aSgpBb1cvczJ4aklaeGgxVWd6RW93YkNsOXdjT1ZRR2g4eUV5a0MzRTlKQjZrTjhkY2pKT3N2VlFJREFRQUJvMkV3Clh6QU9CZ05WSFE4QkFmOEVCQU1DQXFRd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUdDQ3NHQVFVRkJ3TUMKTUE4R0ExVWRFd0VCL3dRRk1BTUJBZjh3SFFZRFZSME9CQllFRkhJcFJZZklNZkd5NkV1cU9iVSs0bGdhYVJOSApNQTBHQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUJDK3plNi9yUjQ3WmJZUGN4dU00L2FXdUtkeWZpMUhhLzlGNitGCk4rMmxKT1JOTjRYeS9KT2VQWmE0NlQxYzd2OFFNNFpVMHhBbnlqdXdRN1E5WE1GcmlucEF6NVRYV1ZZcW44dWIKNlNBd2YrT01qVTVhWGM3VVZtdzJoMzdBc0svTFRYOGo3NmtMUXVyVGVNOHdFTjBDbXI5NlF2Rnk5d2d2ZThlTgpiZldac1A3c0FwYklBeDdOVmc3RUl2czQxdDgvY2dxQWVvaGpYa1UyQVZkdFNLbzh0TFU5ZzNVdTdUNGtWWXpLCm9IQzJiQ3lpbkRPRFZIOHVtcHBCbVRubEg0TDRTR0lnSGFWcVZoOUhoOGFjTmgvbE1ST0dtU1RpVC9DNDRkUS8KcXlZWWxrcXQ3Ymk0dkhjaXZJZXBzckIvdkZ1ZXlQSzZGbGNTMjEvN3BVS0FUOU1YCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K"

HARBOR_ADMIN_PASSWORD: "SGFyYm9yMTIzNDU="

POSTGRESQL_PASSWORD: "Y2hhbmdlaXQ="

REGISTRY_CREDENTIAL_PASSWORD: "aGFyYm9yX3JlZ2lzdHJ5X3Bhc3N3b3Jk"

CSRF_KEY: "dXRzODI2MDNCZGJyVWFBRlhudFNqaWpSbUZPVUR5Mmg="
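The values under `data` in a Secret are only base64-encoded, not encrypted, so it is worth checking what they contain before applying the file. The sketch below decodes three of the values copied from the manifest above. It assumes a `base64` that accepts `-d` (GNU coreutils or BusyBox); the helper name `decode_secret` is made up for this example.

```shell
# Decode a base64-encoded Secret value and print the plain text.
decode_secret() {
  printf '%s' "$1" | base64 -d
}

# Values copied verbatim from the rendered core Secret.
admin_password=$(decode_secret 'SGFyYm9yMTIzNDU=')
db_password=$(decode_secret 'Y2hhbmdlaXQ=')
secret_key=$(decode_secret 'bm90LWEtc2VjdXJlLWtleQ==')

printf 'HARBOR_ADMIN_PASSWORD=%s\n' "$admin_password"
printf 'POSTGRESQL_PASSWORD=%s\n' "$db_password"
printf 'secretKey=%s\n' "$secret_key"
```

These decode to well-known defaults, so for anything beyond a lab cluster you would probably want to replace them with your own base64-encoded values before applying the manifest.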

---

# Source: harbor/templates/database/database-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: "release-name-harbor-database"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
type: Opaque
data:
  POSTGRES_PASSWORD: "Y2hhbmdlaXQ="
---
# Source: harbor/templates/jobservice/jobservice-secrets.yaml
apiVersion: v1
kind: Secret
metadata:
  name: "release-name-harbor-jobservice"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
type: Opaque
data:
  JOBSERVICE_SECRET: "Z3M3T2k4dTBTUk1GRzVlTg=="
  REGISTRY_CREDENTIAL_PASSWORD: "aGFyYm9yX3JlZ2lzdHJ5X3Bhc3N3b3Jk"
---
# Source: harbor/templates/registry/registry-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: "release-name-harbor-registry"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
type: Opaque
data:
  REGISTRY_HTTP_SECRET: "U0FnY21vVFNQVjlIdnlTRw=="
  REGISTRY_REDIS_PASSWORD: ""
---
# Source: harbor/templates/registry/registry-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: "release-name-harbor-registry-htpasswd"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
type: Opaque
data:
  REGISTRY_HTPASSWD: "aGFyYm9yX3JlZ2lzdHJ5X3VzZXI6JDJhJDEwJGNJZTk1ek9aQnp4RG1aMTQzem1HUmVWUkFmc0VMazZ0azNObWZXZmZjYkZtOU1ja1lrbnYy"
---
# Source: harbor/templates/registry/registryctl-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: "release-name-harbor-registryctl"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
type: Opaque
data:
---
# Source: harbor/templates/trivy/trivy-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: release-name-harbor-trivy
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
type: Opaque
data:
  redisURL: cmVkaXM6Ly9yZWxlYXNlLW5hbWUtaGFyYm9yLXJlZGlzOjYzNzkvNT9pZGxlX3RpbWVvdXRfc2Vjb25kcz0zMA==
  gitHubToken: ""

---

# Source: harbor/templates/core/core-cm.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: release-name-harbor-core

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

app.conf: |+

appname = Harbor

runmode = prod

enablegzip = true

[prod]

httpport = 8080

PORT: "8080"

DATABASE_TYPE: "postgresql"

POSTGRESQL_HOST: "release-name-harbor-database"

POSTGRESQL_PORT: "5432"

POSTGRESQL_USERNAME: "postgres"

POSTGRESQL_DATABASE: "registry"

POSTGRESQL_SSLMODE: "disable"

POSTGRESQL_MAX_IDLE_CONNS: "100"

POSTGRESQL_MAX_OPEN_CONNS: "900"

EXT_ENDPOINT: "http://192.168.3.160:30002"

CORE_URL: "http://release-name-harbor-core:80"

JOBSERVICE_URL: "http://release-name-harbor-jobservice"

REGISTRY_URL: "http://release-name-harbor-registry:5000"

TOKEN_SERVICE_URL: "http://release-name-harbor-core:80/service/token"

CORE_LOCAL_URL: "http://127.0.0.1:8080"

WITH_TRIVY: "true"

TRIVY_ADAPTER_URL: "http://release-name-harbor-trivy:8080"

REGISTRY_STORAGE_PROVIDER_NAME: "filesystem"

LOG_LEVEL: "info"

CONFIG_PATH: "/etc/core/app.conf"

CHART_CACHE_DRIVER: "redis"

_REDIS_URL_CORE: "redis://release-name-harbor-redis:6379/0?idle_timeout_seconds=30"

_REDIS_URL_REG: "redis://release-name-harbor-redis:6379/2?idle_timeout_seconds=30"

PORTAL_URL: "http://release-name-harbor-portal"

REGISTRY_CONTROLLER_URL: "http://release-name-harbor-registry:8080"

REGISTRY_CREDENTIAL_USERNAME: "harbor_registry_user"

HTTP_PROXY: ""

HTTPS_PROXY: ""

NO_PROXY: "release-name-harbor-core,release-name-harbor-jobservice,release-name-harbor-database,release-name-harbor-registry,release-name-harbor-portal,release-name-harbor-trivy,release-name-harbor-exporter,127.0.0.1,localhost,.local,.internal"

PERMITTED_REGISTRY_TYPES_FOR_PROXY_CACHE: "docker-hub,harbor,azure-acr,aws-ecr,google-gcr,quay,docker-registry,github-ghcr,jfrog-artifactory"

QUOTA_UPDATE_PROVIDER: "db"

---

# Source: harbor/templates/jobservice/jobservice-cm-env.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: "release-name-harbor-jobservice-env"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

CORE_URL: "http://release-name-harbor-core:80"

TOKEN_SERVICE_URL: "http://release-name-harbor-core:80/service/token"

REGISTRY_URL: "http://release-name-harbor-registry:5000"

REGISTRY_CONTROLLER_URL: "http://release-name-harbor-registry:8080"

REGISTRY_CREDENTIAL_USERNAME: "harbor_registry_user"

JOBSERVICE_WEBHOOK_JOB_MAX_RETRY: "3"

JOBSERVICE_WEBHOOK_JOB_HTTP_CLIENT_TIMEOUT: "3"

LOG_LEVEL: "info"

HTTP_PROXY: ""

HTTPS_PROXY: ""

NO_PROXY: "release-name-harbor-core,release-name-harbor-jobservice,release-name-harbor-database,release-name-harbor-registry,release-name-harbor-portal,release-name-harbor-trivy,release-name-harbor-exporter,127.0.0.1,localhost,.local,.internal"

---

# Source: harbor/templates/jobservice/jobservice-cm.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: "release-name-harbor-jobservice"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

config.yml: |+

#Server listening port

protocol: "http"

port: 8080

worker_pool:

workers: 10

backend: "redis"

redis_pool:

redis_url: "redis://release-name-harbor-redis:6379/1"

namespace: "harbor_job_service_namespace"

idle_timeout_second: 3600

job_loggers:

- name: "FILE"

level: INFO

settings: # Customized settings of logger

base_dir: "/var/log/jobs"

sweeper:

duration: 14 #days

settings: # Customized settings of sweeper

work_dir: "/var/log/jobs"

metric:

enabled: false

path: /metrics

port: 8001

#Loggers for the job service

loggers:

- name: "STD_OUTPUT"

level: INFO

reaper:

# the max time to wait for a task to finish, if unfinished after max_update_hours, the task will be mark as error, but the task will continue to run, default value is 24

max_update_hours: 24

# the max time for execution in running state without new task created

max_dangling_hours: 168

---

# Source: harbor/templates/nginx/configmap-http.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: release-name-harbor-nginx

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

nginx.conf: |+

worker_processes auto;

pid /tmp/nginx.pid;

events {

worker_connections 3096;

use epoll;

multi_accept on;

}

http {

client_body_temp_path /tmp/client_body_temp;

proxy_temp_path /tmp/proxy_temp;

fastcgi_temp_path /tmp/fastcgi_temp;

uwsgi_temp_path /tmp/uwsgi_temp;

scgi_temp_path /tmp/scgi_temp;

tcp_nodelay on;

# this is necessary for us to be able to disable request buffering in all cases

proxy_http_version 1.1;

upstream core {

server "release-name-harbor-core:80";

}

upstream portal {

server release-name-harbor-portal:80;

}

log_format timed_combined '[$time_local]:$remote_addr - '

'"$request" $status $body_bytes_sent '

'"$http_referer" "$http_user_agent" '

'$request_time $upstream_response_time $pipe';

access_log /dev/stdout timed_combined;

map $http_x_forwarded_proto $x_forwarded_proto {

default $http_x_forwarded_proto;

"" $scheme;

}

server {

listen 8080;

listen [::]:8080;

server_tokens off;

# disable any limits to avoid HTTP 413 for large image uploads

client_max_body_size 0;

# Add extra headers

add_header X-Frame-Options DENY;

add_header Content-Security-Policy "frame-ancestors 'none'";

location / {

proxy_pass http://portal/;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $x_forwarded_proto;

proxy_buffering off;

proxy_request_buffering off;

}

location /api/ {

proxy_pass http://core/api/;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $x_forwarded_proto;

proxy_buffering off;

proxy_request_buffering off;

}

location /c/ {

proxy_pass http://core/c/;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $x_forwarded_proto;

proxy_buffering off;

proxy_request_buffering off;

}

location /v1/ {

return 404;

}

location /v2/ {

proxy_pass http://core/v2/;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $x_forwarded_proto;

proxy_buffering off;

proxy_request_buffering off;

proxy_send_timeout 900;

proxy_read_timeout 900;

}

location /service/ {

proxy_pass http://core/service/;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $x_forwarded_proto;

proxy_buffering off;

proxy_request_buffering off;

}

location /service/notifications {

return 404;

}

}

}

---

# Source: harbor/templates/portal/configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: "release-name-harbor-portal"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

nginx.conf: |+

worker_processes auto;

pid /tmp/nginx.pid;

events {

worker_connections 1024;

}

http {

client_body_temp_path /tmp/client_body_temp;

proxy_temp_path /tmp/proxy_temp;

fastcgi_temp_path /tmp/fastcgi_temp;

uwsgi_temp_path /tmp/uwsgi_temp;

scgi_temp_path /tmp/scgi_temp;

server {

listen 8080;

listen [::]:8080;

server_name localhost;

root /usr/share/nginx/html;

index index.html index.htm;

include /etc/nginx/mime.types;

gzip on;

gzip_min_length 1000;

gzip_proxied expired no-cache no-store private auth;

gzip_types text/plain text/css application/json application/javascript application/x-javascript text/xml application/xml application/xml+rss text/javascript;

location /devcenter-api-2.0 {

try_files $uri $uri/ /swagger-ui-index.html;

}

location / {

try_files $uri $uri/ /index.html;

}

location = /index.html {

add_header Cache-Control "no-store, no-cache, must-revalidate";

}

}

}

---

# Source: harbor/templates/registry/registry-cm.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: "release-name-harbor-registry"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

config.yml: |+

version: 0.1

log:

level: info

fields:

service: registry

storage:

filesystem:

rootdirectory: /storage

cache:

layerinfo: redis

maintenance:

uploadpurging:

enabled: true

age: 168h

interval: 24h

dryrun: false

delete:

enabled: true

redirect:

disable: false

redis:

addr: release-name-harbor-redis:6379

db: 2

readtimeout: 10s

writetimeout: 10s

dialtimeout: 10s

enableTLS: false

pool:

maxidle: 100

maxactive: 500

idletimeout: 60s

http:

addr: :5000

relativeurls: false

# set via environment variable

# secret: placeholder

debug:

addr: localhost:5001

auth:

htpasswd:

realm: harbor-registry-basic-realm

path: /etc/registry/passwd

validation:

disabled: true

compatibility:

schema1:

enabled: true

ctl-config.yml: |+

---

protocol: "http"

port: 8080

log_level: info

registry_config: "/etc/registry/config.yml"

---

# Source: harbor/templates/registry/registryctl-cm.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: "release-name-harbor-registryctl"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

data:

---

# Source: harbor/templates/jobservice/jobservice-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: release-name-harbor-jobservice
  namespace: "harbor"
  annotations:
    helm.sh/resource-policy: keep
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
    component: jobservice
    app.kubernetes.io/component: jobservice
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs-sc
---
# Source: harbor/templates/registry/registry-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: release-name-harbor-registry
  namespace: "harbor"
  annotations:
    helm.sh/resource-policy: keep
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
    component: registry
    app.kubernetes.io/component: registry
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  storageClassName: nfs-sc
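Both claims above ask for the `nfs-sc` StorageClass, so that class has to exist in the cluster or the PVCs will stay Pending. A minimal sketch for listing the StorageClass names a rendered file refers to is shown below. The helper name `list_storage_classes` and the sample file are assumptions made for this example; in practice you would point it at your own rendered file and compare the result with `kubectl get storageclass`.

```shell
# Print the distinct storageClassName values found in a manifest file.
list_storage_classes() {
  awk '$1 == "storageClassName:" { print $2 }' "$1" | sort -u
}

# Small stand-in for the rendered file, reduced to the relevant keys.
sample=$(mktemp)
cat > "$sample" <<'EOF'
kind: PersistentVolumeClaim
spec:
  storageClassName: nfs-sc
---
kind: PersistentVolumeClaim
spec:
  storageClassName: nfs-sc
EOF

classes=$(list_storage_classes "$sample")
echo "StorageClasses referenced: $classes"
rm -f "$sample"
```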

---

# Source: harbor/templates/core/core-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: release-name-harbor-core
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - name: http-web
      port: 80
      targetPort: 8080
  selector:
    release: release-name
    app: "harbor"
    component: core
---
# Source: harbor/templates/database/database-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: "release-name-harbor-database"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - port: 5432
  selector:
    release: release-name
    app: "harbor"
    component: database
---
# Source: harbor/templates/jobservice/jobservice-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: "release-name-harbor-jobservice"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - name: http-jobservice
      port: 80
      targetPort: 8080
  selector:
    release: release-name
    app: "harbor"
    component: jobservice
---
# Source: harbor/templates/nginx/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: harbor
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 8080
      nodePort: 30002
  selector:
    release: release-name
    app: "harbor"
    component: nginx
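The `nodePort` of this Service and the `EXT_ENDPOINT` value in the core ConfigMap have to agree, because Harbor hands that URL to clients. A small sketch for checking this after you edit either value by hand is shown below. The two values are copied from the manifests in this article, the variable names are only illustrative, and 30000-32767 is the Kubernetes default NodePort range, which your cluster may have changed.

```shell
# Values copied from the rendered manifests.
ext_endpoint='http://192.168.3.160:30002'
node_port=30002

# Strip everything up to the last ':' to get the port part of the URL.
endpoint_port=${ext_endpoint##*:}

if [ "$endpoint_port" = "$node_port" ]; then
  port_check=match
else
  port_check=mismatch
fi

# Default NodePort range; adjust if your API server uses a custom range.
if [ "$node_port" -ge 30000 ] && [ "$node_port" -le 32767 ]; then
  range_check=in-range
else
  range_check=out-of-range
fi

echo "EXT_ENDPOINT port: $endpoint_port ($port_check), nodePort is $range_check"
```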

---

# Source: harbor/templates/portal/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: "release-name-harbor-portal"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - port: 80
      targetPort: 8080
  selector:
    release: release-name
    app: "harbor"
    component: portal
---
# Source: harbor/templates/redis/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: release-name-harbor-redis
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - port: 6379
  selector:
    release: release-name
    app: "harbor"
    component: redis
---
# Source: harbor/templates/registry/registry-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: "release-name-harbor-registry"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - name: http-registry
      port: 5000
    - name: http-controller
      port: 8080
  selector:
    release: release-name
    app: "harbor"
    component: registry
---
# Source: harbor/templates/trivy/trivy-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: "release-name-harbor-trivy"
  namespace: "harbor"
  labels:
    heritage: Helm
    release: release-name
    chart: harbor
    app: "harbor"
    app.kubernetes.io/instance: release-name
    app.kubernetes.io/name: harbor
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: harbor
    app.kubernetes.io/version: "2.13.0"
spec:
  ports:
    - name: http-trivy
      protocol: TCP
      port: 8080
  selector:
    release: release-name
    app: "harbor"
    component: trivy

---

# Source: harbor/templates/core/core-dpl.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: release-name-harbor-core

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: core

app.kubernetes.io/component: core

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

release: release-name

app: "harbor"

component: core

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: core

app.kubernetes.io/component: core

annotations:

checksum/configmap: bf9940a91f31ccd8db1c6b0aa6a6cdbd27483d0ef0400b0478b766dba1e8778f

checksum/secret: 2d768cea8bf3f359707036a5230c42ab503ee35988f494ed8e36cf09fbd7f04b

checksum/secret-jobservice: c3bcac00f13ee5b6d0346fbcbabe8a495318a0b4f860f1d0d594652e0c3cfcdf

spec:

securityContext:

runAsUser: 10000

fsGroup: 10000

automountServiceAccountToken: false

terminationGracePeriodSeconds: 120

containers:

- name: core

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-core:v2.13.0

imagePullPolicy: IfNotPresent

startupProbe:

httpGet:

path: /api/v2.0/ping

scheme: HTTP

port: 8080

failureThreshold: 360

initialDelaySeconds: 10

periodSeconds: 10

livenessProbe:

httpGet:

path: /api/v2.0/ping

scheme: HTTP

port: 8080

failureThreshold: 2

periodSeconds: 10

readinessProbe:

httpGet:

path: /api/v2.0/ping

scheme: HTTP

port: 8080

failureThreshold: 2

periodSeconds: 10

envFrom:

- configMapRef:

name: "release-name-harbor-core"

- secretRef:

name: "release-name-harbor-core"

env:

- name: CORE_SECRET

valueFrom:

secretKeyRef:

name: release-name-harbor-core

key: secret

- name: JOBSERVICE_SECRET

valueFrom:

secretKeyRef:

name: release-name-harbor-jobservice

key: JOBSERVICE_SECRET

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

ports:

- containerPort: 8080

volumeMounts:

- name: config

mountPath: /etc/core/app.conf

subPath: app.conf

- name: secret-key

mountPath: /etc/core/key

subPath: key

- name: token-service-private-key

mountPath: /etc/core/private_key.pem

subPath: tls.key

- name: psc

mountPath: /etc/core/token

volumes:

- name: config

configMap:

name: release-name-harbor-core

items:

- key: app.conf

path: app.conf

- name: secret-key

secret:

secretName: release-name-harbor-core

items:

- key: secretKey

path: key

- name: token-service-private-key

secret:

secretName: release-name-harbor-core

- name: psc

emptyDir: {}
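The `startupProbe` on the core container is very generous, which matters if the pod seems stuck in a not-ready state on a slow cluster. As a rough sketch, the time the kubelet allows before restarting the container is about `initialDelaySeconds + failureThreshold * periodSeconds`. The arithmetic below uses the values from the Deployment above; the variable names are just for illustration.

```shell
# Values copied from the core container's startupProbe.
initial_delay_seconds=10
period_seconds=10
failure_threshold=360

# Approximate upper bound on startup time before the container is restarted.
startup_budget_seconds=$((initial_delay_seconds + failure_threshold * period_seconds))

echo "core startup budget: ${startup_budget_seconds}s (about $((startup_budget_seconds / 60)) min)"
```

So core can take roughly an hour to come up before Kubernetes gives up on it, which is usually far more than needed once the database and Redis are reachable.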

---

# Source: harbor/templates/jobservice/jobservice-dpl.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: "release-name-harbor-jobservice"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: jobservice

app.kubernetes.io/component: jobservice

spec:

replicas: 1

revisionHistoryLimit: 10

strategy:

type: RollingUpdate

selector:

matchLabels:

release: release-name

app: "harbor"

component: jobservice

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: jobservice

app.kubernetes.io/component: jobservice

annotations:

checksum/configmap: 13935e266ee9ce33b7c4d1c769e78a85da9b0519505029a9b6098a497d9a1220

checksum/configmap-env: 9773f3ab781f37f25b82e63ed4e8cad53fbd5b6103a82128e4d793265eed1a1d

checksum/secret: dc0d310c734ec96a18f24f108a124db429517b46f48067f10f45b653925286ea

checksum/secret-core: 515f10ad6f291e46fa3c7e570e5fc27dd32089185e1306e0943e667ad083d334

spec:

securityContext:

runAsUser: 10000

fsGroup: 10000

automountServiceAccountToken: false

terminationGracePeriodSeconds: 120

containers:

- name: jobservice

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-jobservice:v2.13.0

imagePullPolicy: IfNotPresent

livenessProbe:

httpGet:

path: /api/v1/stats

scheme: HTTP

port: 8080

initialDelaySeconds: 300

periodSeconds: 10

readinessProbe:

httpGet:

path: /api/v1/stats

scheme: HTTP

port: 8080

initialDelaySeconds: 20

periodSeconds: 10

env:

- name: CORE_SECRET

valueFrom:

secretKeyRef:

name: release-name-harbor-core

key: secret

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

envFrom:

- configMapRef:

name: "release-name-harbor-jobservice-env"

- secretRef:

name: "release-name-harbor-jobservice"

ports:

- containerPort: 8080

volumeMounts:

- name: jobservice-config

mountPath: /etc/jobservice/config.yml

subPath: config.yml

- name: job-logs

mountPath: /var/log/jobs

subPath:

volumes:

- name: jobservice-config

configMap:

name: "release-name-harbor-jobservice"

- name: job-logs

persistentVolumeClaim:

claimName: release-name-harbor-jobservice

---

# Source: harbor/templates/nginx/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: release-name-harbor-nginx

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: nginx

app.kubernetes.io/component: nginx

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

release: release-name

app: "harbor"

component: nginx

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: nginx

app.kubernetes.io/component: nginx

annotations:

checksum/configmap: a9da1570c68479a856aa8cba7fa5ca3cc7f57eb28fb7180ea8630e1e96fbbcb0

spec:

securityContext:

runAsUser: 10000

fsGroup: 10000

automountServiceAccountToken: false

containers:

- name: nginx

image: "registry.cn-guangzhou.aliyuncs.com/xingcangku/nginx-photon:v2.13.0"

imagePullPolicy: "IfNotPresent"

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8080

initialDelaySeconds: 300

periodSeconds: 10

readinessProbe:

httpGet:

scheme: HTTP

path: /

port: 8080

initialDelaySeconds: 1

periodSeconds: 10

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

ports:

- containerPort: 8080

volumeMounts:

- name: config

mountPath: /etc/nginx/nginx.conf

subPath: nginx.conf

volumes:

- name: config

configMap:

name: release-name-harbor-nginx

---

# Source: harbor/templates/portal/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: "release-name-harbor-portal"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: portal

app.kubernetes.io/component: portal

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

release: release-name

app: "harbor"

component: portal

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: portal

app.kubernetes.io/component: portal

annotations:

checksum/configmap: 92a534063aacac0294c6aefc269663fd4b65e2f3aabd23e05a7485cbb28cdc72

spec:

securityContext:

runAsUser: 10000

fsGroup: 10000

automountServiceAccountToken: false

containers:

- name: portal

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-portal:v2.13.0

imagePullPolicy: IfNotPresent

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

livenessProbe:

httpGet:

path: /

scheme: HTTP

port: 8080

initialDelaySeconds: 300

periodSeconds: 10

readinessProbe:

httpGet:

path: /

scheme: HTTP

port: 8080

initialDelaySeconds: 1

periodSeconds: 10

ports:

- containerPort: 8080

volumeMounts:

- name: portal-config

mountPath: /etc/nginx/nginx.conf

subPath: nginx.conf

volumes:

- name: portal-config

configMap:

name: "release-name-harbor-portal"

---

# Source: harbor/templates/registry/registry-dpl.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: "release-name-harbor-registry"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: registry

app.kubernetes.io/component: registry

spec:

replicas: 1

revisionHistoryLimit: 10

strategy:

type: RollingUpdate

selector:

matchLabels:

release: release-name

app: "harbor"

component: registry

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: registry

app.kubernetes.io/component: registry

annotations:

checksum/configmap: e7cdc1d01e8e65c3e6a380a112730c8030cc0634a401cc2eda63a84d2098d2d0

checksum/secret: 0c29e3fdc2300f19ecf0d055e2d25e75bd856adf5dfb4eda338c647d90bdfca0

checksum/secret-jobservice: 4dd5bbe6b81b66aa7b11a43a7470bf3f04cbc1650f51e2b5ace05c9dc2c81151

checksum/secret-core: 8d2c730b5b3fa7401c1f5e78b4eac13f3b02a968843ce8cb652d795e5e810692

spec:

securityContext:

runAsUser: 10000

fsGroup: 10000

fsGroupChangePolicy: OnRootMismatch

automountServiceAccountToken: false

terminationGracePeriodSeconds: 120

containers:

- name: registry

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/registry-photon:v2.13.0

imagePullPolicy: IfNotPresent

livenessProbe:

httpGet:

path: /

scheme: HTTP

port: 5000

initialDelaySeconds: 300

periodSeconds: 10

readinessProbe:

httpGet:

path: /

scheme: HTTP

port: 5000

initialDelaySeconds: 1

periodSeconds: 10

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

envFrom:

- secretRef:

name: "release-name-harbor-registry"

env:

ports:

- containerPort: 5000

- containerPort: 5001

volumeMounts:

- name: registry-data

mountPath: /storage

subPath:

- name: registry-htpasswd

mountPath: /etc/registry/passwd

subPath: passwd

- name: registry-config

mountPath: /etc/registry/config.yml

subPath: config.yml

- name: registryctl

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-registryctl:v2.13.0

imagePullPolicy: IfNotPresent

livenessProbe:

httpGet:

path: /api/health

scheme: HTTP

port: 8080

initialDelaySeconds: 300

periodSeconds: 10

readinessProbe:

httpGet:

path: /api/health

scheme: HTTP

port: 8080

initialDelaySeconds: 1

periodSeconds: 10

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

envFrom:

- configMapRef:

name: "release-name-harbor-registryctl"

- secretRef:

name: "release-name-harbor-registry"

- secretRef:

name: "release-name-harbor-registryctl"

env:

- name: CORE_SECRET

valueFrom:

secretKeyRef:

name: release-name-harbor-core

key: secret

- name: JOBSERVICE_SECRET

valueFrom:

secretKeyRef:

name: release-name-harbor-jobservice

key: JOBSERVICE_SECRET

ports:

- containerPort: 8080

volumeMounts:

- name: registry-data

mountPath: /storage

subPath:

- name: registry-config

mountPath: /etc/registry/config.yml

subPath: config.yml

- name: registry-config

mountPath: /etc/registryctl/config.yml

subPath: ctl-config.yml

volumes:

- name: registry-htpasswd

secret:

secretName: release-name-harbor-registry-htpasswd

items:

- key: REGISTRY_HTPASSWD

path: passwd

- name: registry-config

configMap:

name: "release-name-harbor-registry"

- name: registry-data

persistentVolumeClaim:

claimName: release-name-harbor-registry

---

# Source: harbor/templates/database/database-ss.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: "release-name-harbor-database"

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: database

app.kubernetes.io/component: database

spec:

replicas: 1

serviceName: "release-name-harbor-database"

selector:

matchLabels:

release: release-name

app: "harbor"

component: database

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: database

app.kubernetes.io/component: database

annotations:

checksum/secret: 4ae67a99eb6eba38dcf86bbd000a763abf20cbb3cd0e2c11d2780167980b7c08

spec:

securityContext:

runAsUser: 999

fsGroup: 999

automountServiceAccountToken: false

terminationGracePeriodSeconds: 120

initContainers:

# with "fsGroup" set, each time a volume is mounted, Kubernetes must recursively chown() and chmod() all the files and directories inside the volume

# this causes the postgresql reports the "data directory /var/lib/postgresql/data/pgdata has group or world access" issue when using some CSIs e.g. Ceph

# use this init container to correct the permission

# as "fsGroup" applied before the init container running, the container has enough permission to execute the command

- name: "data-permissions-ensurer"

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-db:v2.13.0

imagePullPolicy: IfNotPresent

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

command: ["/bin/sh"]

args: ["-c", "chmod -R 700 /var/lib/postgresql/data/pgdata || true"]

volumeMounts:

- name: database-data

mountPath: /var/lib/postgresql/data

subPath:

containers:

- name: database

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-db:v2.13.0

imagePullPolicy: IfNotPresent

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

livenessProbe:

exec:

command:

- /docker-healthcheck.sh

initialDelaySeconds: 300

periodSeconds: 10

timeoutSeconds: 1

readinessProbe:

exec:

command:

- /docker-healthcheck.sh

initialDelaySeconds: 1

periodSeconds: 10

timeoutSeconds: 1

envFrom:

- secretRef:

name: "release-name-harbor-database"

env:

# put the data into a sub directory to avoid the permission issue in k8s with restricted psp enabled

# more detail refer to https://github.com/goharbor/harbor-helm/issues/756

- name: PGDATA

value: "/var/lib/postgresql/data/pgdata"

volumeMounts:

- name: database-data

mountPath: /var/lib/postgresql/data

subPath:

- name: shm-volume

mountPath: /dev/shm

volumes:

- name: shm-volume

emptyDir:

medium: Memory

sizeLimit: 512Mi

volumeClaimTemplates:

- metadata:

name: "database-data"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

annotations:

spec:

accessModes: ["ReadWriteMany"]

storageClassName: "nfs-sc"

resources:

requests:

storage: "1Gi"

---

# Source: harbor/templates/redis/statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: release-name-harbor-redis

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: redis

app.kubernetes.io/component: redis

spec:

replicas: 1

serviceName: release-name-harbor-redis

selector:

matchLabels:

release: release-name

app: "harbor"

component: redis

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: redis

app.kubernetes.io/component: redis

spec:

securityContext:

runAsUser: 999

fsGroup: 999

automountServiceAccountToken: false

terminationGracePeriodSeconds: 120

containers:

- name: redis

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/redis-photon:v2.13.0

imagePullPolicy: IfNotPresent

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

livenessProbe:

tcpSocket:

port: 6379

initialDelaySeconds: 300

periodSeconds: 10

readinessProbe:

tcpSocket:

port: 6379

initialDelaySeconds: 1

periodSeconds: 10

volumeMounts:

- name: data

mountPath: /var/lib/redis

subPath:

volumeClaimTemplates:

- metadata:

name: data

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

annotations:

spec:

accessModes: ["ReadWriteMany"]

storageClassName: "nfs-sc"

resources:

requests:

storage: "1Gi"

---

# Source: harbor/templates/trivy/trivy-sts.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: release-name-harbor-trivy

namespace: "harbor"

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: trivy

app.kubernetes.io/component: trivy

spec:

replicas: 1

serviceName: release-name-harbor-trivy

selector:

matchLabels:

release: release-name

app: "harbor"

component: trivy

template:

metadata:

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

app.kubernetes.io/instance: release-name

app.kubernetes.io/name: harbor

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/part-of: harbor

app.kubernetes.io/version: "2.13.0"

component: trivy

app.kubernetes.io/component: trivy

annotations:

checksum/secret: 44be12495ce86a4d9182302ace8a923cf60e791c072dddc10aab3dc17a54309f

spec:

securityContext:

runAsUser: 10000

fsGroup: 10000

automountServiceAccountToken: false

containers:

- name: trivy

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/trivy-adapter-photon:v2.13.0

imagePullPolicy: IfNotPresent

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

env:

- name: HTTP_PROXY

value: ""

- name: HTTPS_PROXY

value: ""

- name: NO_PROXY

value: "release-name-harbor-core,release-name-harbor-jobservice,release-name-harbor-database,release-name-harbor-registry,release-name-harbor-portal,release-name-harbor-trivy,release-name-harbor-exporter,127.0.0.1,localhost,.local,.internal"

- name: "SCANNER_LOG_LEVEL"

value: "info"

- name: "SCANNER_TRIVY_CACHE_DIR"

value: "/home/scanner/.cache/trivy"

- name: "SCANNER_TRIVY_REPORTS_DIR"

value: "/home/scanner/.cache/reports"

- name: "SCANNER_TRIVY_DEBUG_MODE"

value: "false"

- name: "SCANNER_TRIVY_VULN_TYPE"

value: "os,library"

- name: "SCANNER_TRIVY_TIMEOUT"

value: "5m0s"

- name: "SCANNER_TRIVY_GITHUB_TOKEN"

valueFrom:

secretKeyRef:

name: release-name-harbor-trivy

key: gitHubToken

- name: "SCANNER_TRIVY_SEVERITY"

value: "UNKNOWN,LOW,MEDIUM,HIGH,CRITICAL"

- name: "SCANNER_TRIVY_IGNORE_UNFIXED"

value: "false"

- name: "SCANNER_TRIVY_SKIP_UPDATE"

value: "false"

- name: "SCANNER_TRIVY_SKIP_JAVA_DB_UPDATE"

value: "false"

- name: "SCANNER_TRIVY_OFFLINE_SCAN"

value: "false"

- name: "SCANNER_TRIVY_SECURITY_CHECKS"

value: "vuln"

- name: "SCANNER_TRIVY_INSECURE"

value: "false"

- name: SCANNER_API_SERVER_ADDR

value: ":8080"

- name: "SCANNER_REDIS_URL"

valueFrom:

secretKeyRef:

name: release-name-harbor-trivy

key: redisURL

- name: "SCANNER_STORE_REDIS_URL"

valueFrom:

secretKeyRef:

name: release-name-harbor-trivy

key: redisURL

- name: "SCANNER_JOB_QUEUE_REDIS_URL"

valueFrom:

secretKeyRef:

name: release-name-harbor-trivy

key: redisURL

ports:

- name: api-server

containerPort: 8080

volumeMounts:

- name: data

mountPath: /home/scanner/.cache

subPath:

readOnly: false

livenessProbe:

httpGet:

scheme: HTTP

path: /probe/healthy

port: api-server

initialDelaySeconds: 5

periodSeconds: 10

successThreshold: 1

failureThreshold: 10

readinessProbe:

httpGet:

scheme: HTTP

path: /probe/ready

port: api-server

initialDelaySeconds: 5

periodSeconds: 10

successThreshold: 1

failureThreshold: 3

resources:

limits:

cpu: 1

memory: 1Gi

requests:

cpu: 200m

memory: 512Mi

volumeClaimTemplates:

- metadata:

name: data

labels:

heritage: Helm

release: release-name

chart: harbor

app: "harbor"

annotations:

spec:

accessModes: ["ReadWriteMany"]

storageClassName: "nfs-sc"

resources:

requests:

storage: "5Gi"

root@k8s-master-01:~/harbor# kubectl apply -f test.yaml -n harbor

secret/release-name-harbor-core created

secret/release-name-harbor-database created

secret/release-name-harbor-jobservice created

secret/release-name-harbor-registry created

secret/release-name-harbor-registry-htpasswd created

secret/release-name-harbor-registryctl created

secret/release-name-harbor-trivy created

configmap/release-name-harbor-core created

configmap/release-name-harbor-jobservice-env created

configmap/release-name-harbor-jobservice created

configmap/release-name-harbor-nginx created

configmap/release-name-harbor-portal created

configmap/release-name-harbor-registry created

configmap/release-name-harbor-registryctl created

persistentvolumeclaim/release-name-harbor-jobservice created

persistentvolumeclaim/release-name-harbor-registry created

service/release-name-harbor-core created

service/release-name-harbor-database created

service/release-name-harbor-jobservice created

service/harbor created

service/release-name-harbor-portal created

service/release-name-harbor-redis created

service/release-name-harbor-registry created

service/release-name-harbor-trivy created

deployment.apps/release-name-harbor-core created

deployment.apps/release-name-harbor-jobservice created

deployment.apps/release-name-harbor-nginx created

deployment.apps/release-name-harbor-portal created

deployment.apps/release-name-harbor-registry created

statefulset.apps/release-name-harbor-database created

statefulset.apps/release-name-harbor-redis created

statefulset.apps/release-name-harbor-trivy created
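The workloads created above need some time to become ready. A minimal sketch of waiting for them, assuming the resource names from the apply output; the helper name `wait_for_harbor` and the default 300s timeout are illustrative choices, not part of the original steps:

```shell
# Hypothetical helper: block until every Harbor workload created above has rolled out.
# The namespace and resource names come from the `kubectl apply` output;
# the 300s default timeout is an assumption.
wait_for_harbor() {
  ns="${1:-harbor}"
  timeout="${2:-300s}"
  for workload in \
    deployment/release-name-harbor-core \
    deployment/release-name-harbor-jobservice \
    deployment/release-name-harbor-nginx \
    deployment/release-name-harbor-portal \
    deployment/release-name-harbor-registry \
    statefulset/release-name-harbor-database \
    statefulset/release-name-harbor-redis \
    statefulset/release-name-harbor-trivy
  do
    # `rollout status` exits non-zero if the workload is not ready within the timeout.
    kubectl -n "$ns" rollout status "$workload" --timeout="$timeout" || return 1
  done
}
```

Calling `wait_for_harbor harbor 300s` right after `kubectl apply` returns once all eight workloads report a completed rollout, or fails on the first one that does not.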

root@k8s-master-01:~/harbor# kubectl get all -n harbor

NAME READY STATUS RESTARTS AGE

pod/release-name-harbor-core-849974d76-f4wqp 1/1 Running 0 83s

pod/release-name-harbor-database-0 1/1 Running 0 83s

pod/release-name-harbor-jobservice-75f59fcb64-29sp8 1/1 Running 3 (57s ago) 83s

pod/release-name-harbor-nginx-b67dcbfc6-wlvtv 1/1 Running 0 83s

pod/release-name-harbor-portal-59b9cfd58c-l4wpj 1/1 Running 0 83s

pod/release-name-harbor-redis-0 1/1 Running 0 83s

pod/release-name-harbor-registry-659f59fcb5-wj8zm 2/2 Running 0 83s

pod/release-name-harbor-trivy-0 1/1 Running 0 83s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/harbor NodePort 10.102.128.215 <none> 80:30002/TCP 83s

service/release-name-harbor-core ClusterIP 10.108.245.194 <none> 80/TCP 83s

service/release-name-harbor-database ClusterIP 10.97.69.58 <none> 5432/TCP 83s

service/release-name-harbor-jobservice ClusterIP 10.106.125.16 <none> 80/TCP 83s

service/release-name-harbor-portal ClusterIP 10.101.153.231 <none> 80/TCP 83s

service/release-name-harbor-redis ClusterIP 10.101.144.182 <none> 6379/TCP 83s

service/release-name-harbor-registry ClusterIP 10.97.84.52 <none> 5000/TCP,8080/TCP 83s

service/release-name-harbor-trivy ClusterIP 10.106.250.151 <none> 8080/TCP 83s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/release-name-harbor-core 1/1 1 1 83s

deployment.apps/release-name-harbor-jobservice 1/1 1 1 83s

deployment.apps/release-name-harbor-nginx 1/1 1 1 83s

deployment.apps/release-name-harbor-portal 1/1 1 1 83s

deployment.apps/release-name-harbor-registry 1/1 1 1 83s

NAME DESIRED CURRENT READY AGE

replicaset.apps/release-name-harbor-core-849974d76 1 1 1 83s

replicaset.apps/release-name-harbor-jobservice-75f59fcb64 1 1 1 83s

replicaset.apps/release-name-harbor-nginx-b67dcbfc6 1 1 1 83s

replicaset.apps/release-name-harbor-portal-59b9cfd58c 1 1 1 83s

replicaset.apps/release-name-harbor-registry-659f59fcb5 1 1 1 83s

NAME READY AGE

statefulset.apps/release-name-harbor-database 1/1 83s

statefulset.apps/release-name-harbor-redis 1/1 83s

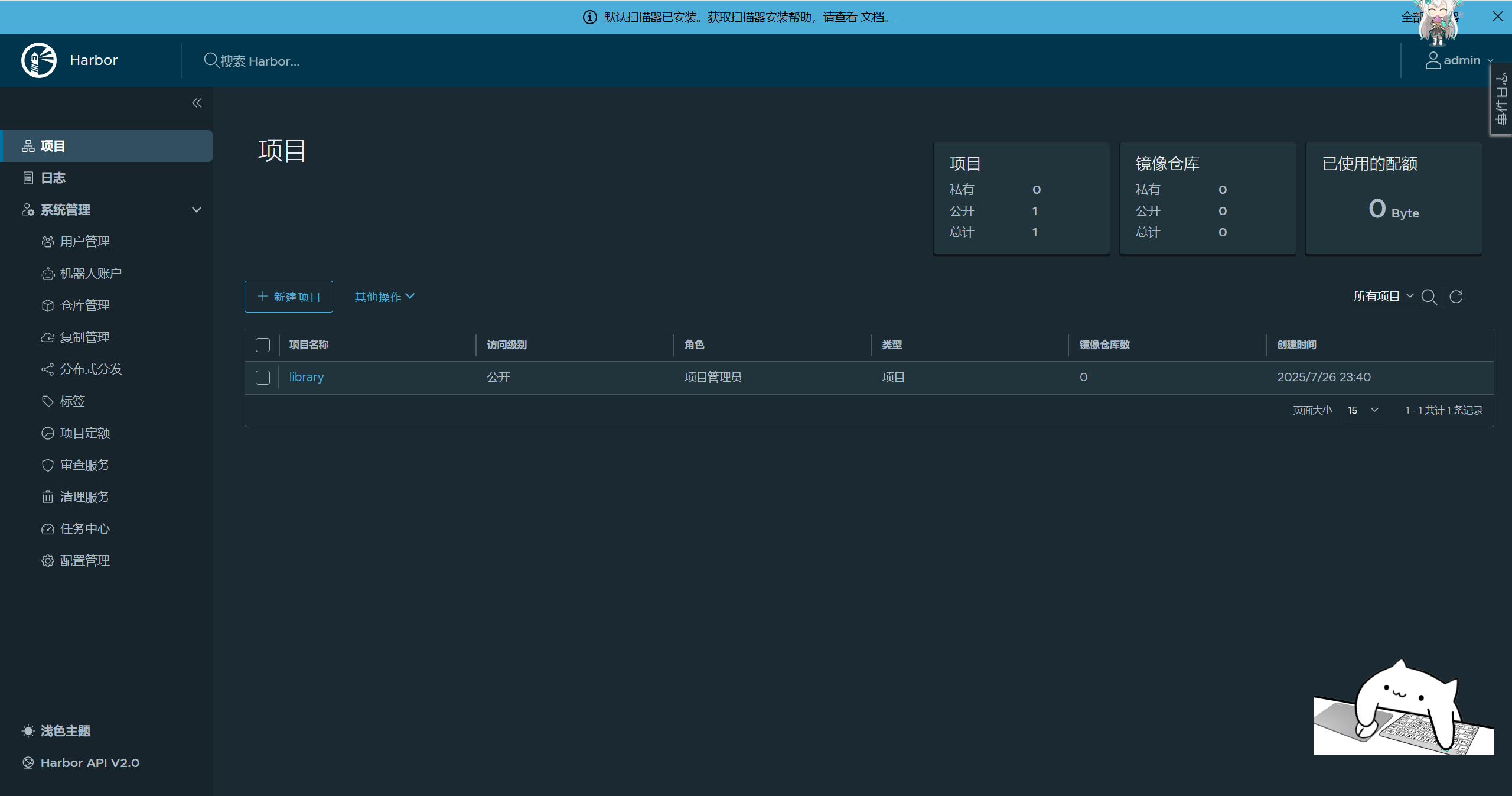

statefulset.apps/release-name-harbor-trivy 1/1 83s账号:admin

密码:Harbor1234
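The `service/harbor` NodePort in the output above maps port 80 to 30002 on each node, so the UI should be reachable at `http://<node-ip>:30002`. A quick reachability check might look like the sketch below. The node IP is a placeholder (the original does not give one), the helper names are hypothetical, and `/api/v2.0/health` is assumed to be Harbor's v2 health endpoint:

```shell
# Build the Harbor base URL from a node IP and the NodePort shown in `kubectl get all`.
# The node IP must be supplied by the caller; 30002 is the NodePort from the output above.
harbor_url() {
  node_ip="$1"
  node_port="${2:-30002}"
  printf 'http://%s:%s' "$node_ip" "$node_port"
}

# Query the health endpoint (the path is an assumption) and print the response body.
# curl -f makes the command fail on HTTP errors instead of printing an error page.
harbor_health() {
  curl -fsS "$(harbor_url "$1" "$2")/api/v2.0/health"
}
```

For example, `harbor_health 192.168.1.10` (with a real node address substituted) should print a JSON status if the nginx and core pods are serving. The web login then uses the username and password listed above.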
