1. Configure a static IP address

# Configure
sudo nmcli connection modify ens160 \
    ipv4.method manual \
    ipv4.addresses 192.168.30.20/24 \
    ipv4.gateway 192.168.30.2 \
    ipv4.dns "8.8.8.8,8.8.4.4"
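Before applying the settings, it can help to confirm that the gateway sits inside the address's subnet. The following is a minimal sketch in plain shell arithmetic; the helper names `ip_to_int` and `same_subnet` are hypothetical and not part of nmcli, and the values are the ones used above.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when GATEWAY lies inside the network of ADDRESS/PREFIX.
same_subnet() {
    cidr=$1
    gateway=$2
    addr=${cidr%/*}
    prefix=${cidr#*/}
    mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$addr") & $mask )) -eq $(( $(ip_to_int "$gateway") & $mask )) ]
}

if same_subnet 192.168.30.20/24 192.168.30.2; then
    echo "gateway is inside the subnet"
else
    echo "gateway is outside the subnet"
fi
```

The check is purely arithmetic, so it can be run on any machine before touching the connection profile.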

# Apply the new configuration
sudo nmcli connection down ens160 && sudo nmcli connection up ens160

2. Configure the yum repositories

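2.1 Back up the existing repository configuration files

#sudo mkdir /etc/yum.repos.d/backup
#sudo mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/

The backup is optional; you can go straight to 2.2.

2.2 Modify the repository configuration files

# Use the configuration method recommended by Aliyun.
# The glob covers both Rocky-*.repo (Rocky Linux 8) and the lowercase rocky*.repo files used by Rocky Linux 9.
sudo sed -e 's!^mirrorlist=!#mirrorlist=!g' \
    -e 's!^#baseurl=http://dl.rockylinux.org/$contentdir!baseurl=https://mirrors.aliyun.com/rockylinux!g' \
    -i /etc/yum.repos.d/[Rr]ocky*.repo

2.3 Clean and rebuild the cache

sudo dnf clean all
sudo dnf makecache

2.4 Test an update

sudo dnf update -y

3. Preparation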

3.1 Set the hostname

# Run exactly one of these on each node, matching that node's name
hostnamectl set-hostname k8s-01
hostnamectl set-hostname k8s-02
hostnamectl set-hostname k8s-03

3.2 Disable some services

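# 1. Disable SELinux (only the SELINUX= setting is changed, not the comments in the file)
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
setenforce 0

# 2. Disable the firewall.
# Leave NetworkManager running: on Rocky Linux 8/9 it manages the interface configured with nmcli above.
# If postfix is installed, it can be disabled the same way.
systemctl disable --now firewalld

# 3. Turn off swap
swapoff -a

# Comment out the swap entry so swap stays off after a reboot
cp /etc/fstab /etc/fstab_bak
sed -ri 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' /etc/fstab

3.3 Optimize sshd (optional)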

# 1. Speed up logins
sed -ri 's@^#?UseDNS .*@UseDNS no@' /etc/ssh/sshd_config
sed -ri 's@^#?GSSAPIAuthentication .*@GSSAPIAuthentication no@' /etc/ssh/sshd_config
grep ^UseDNS /etc/ssh/sshd_config
grep ^GSSAPIAuthentication /etc/ssh/sshd_config
systemctl restart sshd

# 2. Key-based login (run on the master node): makes later remote copy operations more convenient
ssh-keygen
ssh-copy-id root@k8s-01
ssh-copy-id root@k8s-02
ssh-copy-id root@k8s-03
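The ssh-copy-id commands address the nodes by name, which only works if those names resolve. This guide does not show how, so the following is a sketch under assumptions: it prints `/etc/hosts` lines from `name=ip` pairs. The helper name `render_hosts` is hypothetical, and only 192.168.30.20 appears in this guide; mapping it to k8s-01 and the two other addresses are placeholders to replace with your own.

```shell
# Print one /etc/hosts line ("ip name") per "name=ip" argument.
render_hosts() {
    for pair in "$@"; do
        printf '%s %s\n' "${pair#*=}" "${pair%%=*}"
    done
}

# Placeholder addresses: substitute the real IP of each node.
render_hosts k8s-01=192.168.30.20 k8s-02=192.168.30.21 k8s-03=192.168.30.22

# To apply on a node (as root), append the output instead of printing it:
#   render_hosts k8s-01=... k8s-02=... k8s-03=... >> /etc/hosts
```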

# Connection test (sample output)
[root@m01 ~]# ssh 172.16.1.7
Last login: Tue Nov 24 09:02:26 2020 from 10.0.0.1
[root@web01 ~]#

3.4 Raise the file descriptor limit (takes effect in new sessions, so log out and back in)

cat > /etc/security/limits.d/k8s.conf <<EOF

* soft nofile 65535

* hard nofile 131070

EOF

ulimit -Sn
ulimit -Hn

3.5 Configure automatic module loading on all nodes (if this step is skipped, kubeadm init fails immediately)

# Load the modules now. On current kernels the connection-tracking module is named nf_conntrack (ip_conntrack is its old name).
modprobe br_netfilter
modprobe nf_conntrack

# Load them at every boot. systemd reads /etc/modules-load.d/*.conf; /etc/rc.sysinit is not run on systemd-based systems.
cat > /etc/modules-load.d/k8s.conf <<EOF
br_netfilter
nf_conntrack
EOF

lsmod | grep br_netfilter

3.6 Synchronize cluster time

# =====================> chrony server: you can run your own server or use public time servers directly, so deploying a server is up to you

# 1. Install
dnf -y install chrony

# 2. Edit the configuration file
mv /etc/chrony.conf /etc/chrony.conf.bak
cat > /etc/chrony.conf << EOF

server ntp1.aliyun.com iburst minpoll 4 maxpoll 10

server ntp2.aliyun.com iburst minpoll 4 maxpoll 10

server ntp3.aliyun.com iburst minpoll 4 maxpoll 10

server ntp4.aliyun.com iburst minpoll 4 maxpoll 10

server ntp5.aliyun.com iburst minpoll 4 maxpoll 10

server ntp6.aliyun.com iburst minpoll 4 maxpoll 10

server ntp7.aliyun.com iburst minpoll 4 maxpoll 10

driftfile /var/lib/chrony/drift

makestep 10 3

rtcsync

allow 0.0.0.0/0

local stratum 10

keyfile /etc/chrony.keys

logdir /var/log/chrony

stratumweight 0.05

noclientlog

logchange 0.5

EOF

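# 3. Start the chronyd service
systemctl restart chronyd.service # a restart loads the new configuration whether or not the service was already running
systemctl enable chronyd.service
systemctl status chronyd.service

# =====================> chrony client: install on every machine that needs to sync time; once started, it syncs with the server you specify

# The steps below can be pasted into each client in one go
# 1. Install chrony
dnf -y install chrony

# 2. Edit the client configuration file
mv /etc/chrony.conf /etc/chrony.conf.bak
cat > /etc/chrony.conf << EOF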

server 192.168.30.20 iburst

driftfile /var/lib/chrony/drift

makestep 10 3

rtcsync

local stratum 10

keyfile /etc/chrony.keys

logdir /var/log/chrony

stratumweight 0.05

noclientlog

logchange 0.5

EOF

# 3. Start chronyd
systemctl restart chronyd.service
systemctl enable chronyd.service
systemctl status chronyd.service

# 4. Verify
chronyc sources -v

3.7 Install common packages

# ntpdate is left out: it is not in the default Rocky Linux 8/9 repositories, and chrony (configured above) takes its place
dnf -y install expect wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git chrony bind-utils rsync unzip

3.8 Check the kernel version (4.4 or later is required)

[root@localhost ~]# grubby --default-kernel
/boot/vmlinuz-5.14.0-570.30.1.el9_6.x86_64

3.9 Install IPVS on the nodes

# 1. Install ipvsadm and related tools
dnf -y install ipvsadm ipset sysstat conntrack libseccomp

# 2. Configure module loading
mkdir -p /etc/sysconfig/modules
cat > /etc/sysconfig/modules/ipvs.modules <<"EOF"
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    # Load only the modules that this kernel actually provides
    if /sbin/modinfo -F filename "${kernel_module}" > /dev/null 2>&1; then
        /sbin/modprobe "${kernel_module}"
    fi
done
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

3.10 Adjust kernel parameters on every machine

cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
# fs.may_detach_mounts is a RHEL/CentOS 7 kernel setting; uncomment it only there
# fs.may_detach_mounts = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.netfilter.nf_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
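A misspelled key in a sysctl file is easy to miss in the output of the apply step. As a rough pre-check, each key can be mapped to its path under `/proc/sys` (dots become slashes) and tested for existence. The helper name `unknown_sysctl_keys` below is hypothetical, and keys containing dots inside an interface name are not handled. Note that the `net.bridge.*` entries only exist once `br_netfilter` is loaded.

```shell
# Print every key in a sysctl config file that has no matching entry
# under the given root directory (default: /proc/sys), one per line.
unknown_sysctl_keys() {
    conf=$1
    root=${2:-/proc/sys}
    # Drop comments and blank lines, then keep only the text before "=".
    sed -e 's/#.*//' \
        -e '/^[[:space:]]*$/d' \
        -e 's/[[:space:]]*=.*//' \
        -e 's/^[[:space:]]*//' "$conf" |
    while read -r key; do
        path=$root/$(printf '%s' "$key" | tr . /)
        [ -e "$path" ] || printf '%s\n' "$key"
    done
}

# Check the file written above, if it exists on this machine.
if [ -f /etc/sysctl.d/k8s.conf ]; then
    unknown_sysctl_keys /etc/sysctl.d/k8s.conf
fi
```

An empty result means every key was found; any key printed is either misspelled or belongs to a module that is not loaded yet.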

# Apply immediately
sysctl --system

4. Install containerd (on every k8s node)

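Since Kubernetes 1.24, the built-in Docker integration (dockershim) has been removed, so K8S no longer supports Docker natively.

As is well known, containerd originated inside Docker and was later donated by Docker to the Cloud Native Computing Foundation (installing Docker also installs containerd).

Installation:

On CentOS the bundled libseccomp is version 2.3.1, which does not meet containerd's requirements; version 2.4 or later is needed. Version 2.5.1 is deployed here.

# The upstream CentOS site is no longer updated, so download from the Aliyun mirror
# wget http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm
mkdir -p /root/rpms && cd /root/rpms
wget https://mirrors.aliyun.com/centos/8/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm

# Remove the old package, then install the downloaded one
rpm -e libseccomp --nodeps
rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm
# Alternatively: sudo yum localinstall libseccomp-2.5.1-1.el8.x86_64.rpm -y

# Rocky ships 2.5.2 by default, so the commands above are not needed there; just check the version
[root@k8s-01 ~]# rpm -qa | grep libseccomp
libseccomp-2.5.2-2.el9.x86_64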
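The "2.4 or later" requirement can also be checked by script rather than by eye. The sketch below compares versions with `sort -V`; the helper names `version_ge` and `pkg_version` are hypothetical, and the package string is the sample output shown above.

```shell
# Succeed when version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Extract "2.5.2" from a package string such as libseccomp-2.5.2-2.el9.x86_64.
pkg_version() {
    printf '%s\n' "$1" | sed -E 's/^libseccomp-([0-9.]+)-.*/\1/'
}

# On a real node the string would come from: rpm -qa | grep libseccomp
installed=$(pkg_version "libseccomp-2.5.2-2.el9.x86_64")
if version_ge "$installed" 2.4; then
    echo "libseccomp $installed is new enough"
else
    echo "libseccomp $installed is older than 2.4"
fi
```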

Installation method 1 (based on the Aliyun mirror): this is the recommended method; it installs 4

sudo dnf install -y yum-utils device-mapper-persistent-data lvm2
sudo dnf config-manager --set-enabled crb # Rocky Linux 9; on Rocky Linux 8 the repository is named powertools

# 1. Remove any previous installation
dnf remove docker docker-ce containerd docker-common docker-selinux docker-engine -y

# 2. Prepare the repo
sudo tee /etc/yum.repos.d/docker-ce.repo <<-'EOF'
[docker-ce-stable]
name=Docker CE Stable - AliOS
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
EOF

# 3. Install
sudo dnf install -y containerd.io

Configuration

# 1. Generate the configuration
mkdir -pv /etc/containerd
containerd config default > /etc/containerd/config.toml # generate a default configuration file for containerd

# 2. Replace the default pause image address: this step is extremely important
grep sandbox_image /etc/containerd/config.toml
sed -i 's/registry.k8s.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/' /etc/containerd/config.toml
grep sandbox_image /etc/containerd/config.toml

# Make sure the new address is actually reachable. The line should now look like this
# (the pause tag depends on the containerd version):
# sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"

# 3. Use systemd as the cgroup driver for containers
grep SystemdCgroup /etc/containerd/config.toml
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
grep SystemdCgroup /etc/containerd/config.toml
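Because both edits rewrite `config.toml` in place, it may be worth trying them on a sample first. The sketch below applies the same two substitutions as a stdin-to-stdout filter. The function name `patch_containerd_config` is hypothetical, and the two sample lines are a trimmed, illustrative excerpt rather than the full default file.

```shell
# Apply the pause-image and SystemdCgroup edits to a config read from stdin.
patch_containerd_config() {
    sed -e 's#registry\.k8s\.io#registry.cn-hangzhou.aliyuncs.com/google_containers#' \
        -e 's/SystemdCgroup = false/SystemdCgroup = true/'
}

# Illustrative excerpt only; a real config.toml has many more lines.
sample='  sandbox_image = "registry.k8s.io/pause:3.8"
    SystemdCgroup = false'

printf '%s\n' "$sample" | patch_containerd_config
```

The same filter can be pointed at a copy of the real file, for example `patch_containerd_config < /etc/containerd/config.toml | diff /etc/containerd/config.toml -`, to preview the changes.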

# 4. Configure registry mirrors (required; otherwise the CNI network plugin images cannot be pulled from docker.io later)
# Reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration
# Set config_path = "/etc/containerd/certs.d"
sed -i 's#config_path = .*#config_path = "/etc/containerd/certs.d"#' /etc/containerd/config.toml
mkdir -p /etc/containerd/certs.d/docker.io
cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF
server = "https://docker.io"

[host."https://dockerproxy.com"]
  capabilities = ["pull", "resolve"]

[host."https://docker.m.daocloud.io"]
  capabilities = ["pull", "resolve"]

[host."https://docker.chenby.cn"]
  capabilities = ["pull", "resolve"]

[host."https://registry.docker-cn.com"]
  capabilities = ["pull", "resolve"]

[host."http://hub-mirror.c.163.com"]
  capabilities = ["pull", "resolve"]
EOF

# 5. Enable containerd at boot
# 5.1 Start the containerd service and enable it at boot
systemctl daemon-reload && systemctl restart containerd
systemctl enable --now containerd

# 5.2 Check the containerd status
systemctl status containerd

# 5.3 Check the containerd version
ctr version

5. Install k8s

5.1 Prepare the k8s repository

# Create the repo file
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

sudo dnf makecache

# Reference: https://developer.aliyun.com/mirror/kubernetes/
setenforce 0
# The version globs are quoted so the shell passes them to dnf unchanged
dnf install -y 'kubelet-1.27*' 'kubeadm-1.27*' 'kubectl-1.27*'
systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

Install the version-lock plugin

sudo dnf install -y dnf-plugin-versionlock

Lock the versions so that later updates leave them alone

sudo dnf versionlock add 'kubelet-1.27*' 'kubeadm-1.27*' 'kubectl-1.27*' containerd.io

[root@k8s-01 ~]# sudo dnf versionlock list

Last metadata expiration check: 0:35:21 ago on Fri Aug 8 10:40:25 2025.

kubelet-0:1.27.6-0.*

kubeadm-0:1.27.6-0.*

kubectl-0:1.27.6-0.*

containerd.io-0:1.7.27-3.1.el9.*

# From now on, sudo dnf update skips the locked packages

5.2 Load kernel modules

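# Load the br_netfilter module
sudo modprobe br_netfilter

# Enable the kernel parameters.
# Note: this overwrites the /etc/sysctl.d/k8s.conf written in section 3; skip this block if that file is already in place.
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the configuration
sudo sysctl --system

# Stop the firewall for the current boot
sudo systemctl stop firewalld

# Disable the firewall permanently
sudo systemctl disable firewalld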

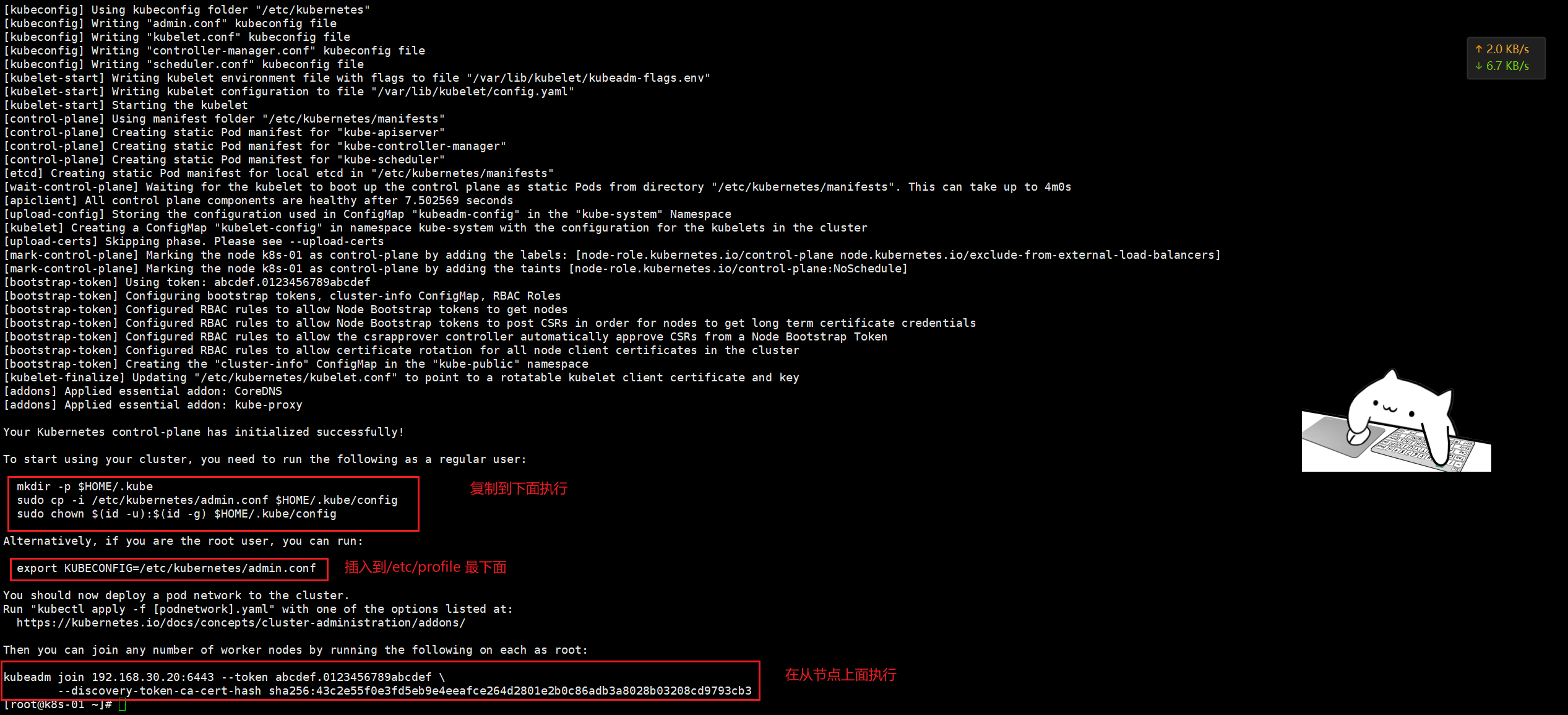

5.3 Control-plane node steps (do not run on worker nodes)

Initialize the master node (run only on the master node)

# The required images can be listed with kubeadm config images list.
# The sample below was captured with a v1.30 kubeadm; the tags follow whichever kubeadm version is installed.
[root@k8s-master-01 ~]# kubeadm config images list
registry.k8s.io/kube-apiserver:v1.30.0
registry.k8s.io/kube-controller-manager:v1.30.0
registry.k8s.io/kube-scheduler:v1.30.0
registry.k8s.io/kube-proxy:v1.30.0
registry.k8s.io/coredns/coredns:v1.11.1
registry.k8s.io/pause:3.9
registry.k8s.io/etcd:3.5.12-0

kubeadm config print init-defaults > kubeadm.yaml
vi kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.110.97 # change this to the control-plane node's IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master-01 # change this to the node's hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # Aliyun registry; create the repository on Aliyun if needed
kind: ClusterConfiguration
kubernetesVersion: 1.27.6 # should match the installed kubeadm/kubelet version (1.27.6 in the version-lock list above)
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # add this line
scheduler: {}
# Append the following at the end
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

Deploy K8S

kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap
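If the init command complains about versions, one thing worth checking is that `kubernetesVersion` in kubeadm.yaml shares its minor version with the installed kubeadm package, since kubeadm is built to deploy control planes of its own release line. The following is a small sketch of that comparison; the helper names `minor_of` and `same_minor` are hypothetical, and the two version strings are example inputs that illustrate a mismatch.

```shell
# Print "major.minor" for versions such as 1.27.6, v1.30.0 or 1.27.6-0.
minor_of() {
    printf '%s\n' "$1" | sed -E 's/^v?([0-9]+\.[0-9]+).*/\1/'
}

# Succeed when both versions share the same major.minor.
same_minor() {
    [ "$(minor_of "$1")" = "$(minor_of "$2")" ]
}

kubeadm_pkg=1.27.6        # example: version of the installed kubeadm package
requested=1.30.3          # example: kubernetesVersion found in a kubeadm.yaml

if same_minor "$kubeadm_pkg" "$requested"; then
    echo "versions agree"
else
    echo "mismatch: kubeadm $(minor_of "$kubeadm_pkg") vs kubernetesVersion $(minor_of "$requested")"
fi
```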

Deploy the network plugin

Download the network plugin manifest

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

[root@k8s-01 ~]# cat kube-flannel.yml

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "EnableNFTables": false,
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock

[root@k8s-01 ~]# grep -i image kube-flannel.yml

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5
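Besides the image references, the value worth comparing is the pod network: flannel's `Network` in net-conf.json is expected to match `podSubnet` in kubeadm.yaml (both are 10.244.0.0/16 in this guide). The sketch below extracts the two values with sed; the helper names `pod_subnet` and `flannel_network` are hypothetical, and the file names assume both files sit in the current directory.

```shell
# Print the podSubnet value from a kubeadm config file.
pod_subnet() {
    sed -n -E 's#^[[:space:]]*podSubnet:[[:space:]]*([0-9./]+).*#\1#p' "$1"
}

# Print the "Network" value from flannel's net-conf.json inside the manifest.
flannel_network() {
    sed -n -E 's#.*"Network":[[:space:]]*"([0-9./]+)".*#\1#p' "$1"
}

# Compare the two only when both files are present.
if [ -f kubeadm.yaml ] && [ -f kube-flannel.yml ]; then
    if [ "$(pod_subnet kubeadm.yaml)" = "$(flannel_network kube-flannel.yml)" ]; then
        echo "podSubnet matches the flannel Network"
    else
        echo "podSubnet and the flannel Network differ"
    fi
fi
```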

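# Apply the manifest from the control-plane node
kubectl apply -f kube-flannel.yml

# On each worker node, run the following (change the IP address to that of your control-plane node)
mkdir -p $HOME/.kube
scp root@192.168.30.135:/etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

Install Docker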

1. Remove old versions (if any)

sudo dnf remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

2. Install dependencies

sudo dnf install -y dnf-plugins-core

3. Add the official Docker repository

sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Or use the Aliyun mirror instead

sudo dnf config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

4. Install Docker Engine

sudo dnf install -y docker-ce docker-ce-cli containerd.io

5. Start Docker and enable it at boot

sudo systemctl start docker
sudo systemctl enable docker

Install docker-compose

1. Copy the docker-compose binary to /usr/local/bin/docker-compose yourself

2. Give it execute permission with chmod +x before using it

[root@k8s-03 harbor]# sudo ./install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 20.10.24

[Step 1]: checking docker-compose is installed ...

Note: docker-compose version: 2.24.5

[Step 2]: preparing environment ...

[Step 3]: preparing harbor configs ...

prepare base dir is set to /root/harbor

Clearing the configuration file: /config/portal/nginx.conf

Clearing the configuration file: /config/log/logrotate.conf

Clearing the configuration file: /config/log/rsyslog_docker.conf

Clearing the configuration file: /config/nginx/nginx.conf

Clearing the configuration file: /config/core/env

Clearing the configuration file: /config/core/app.conf

Clearing the configuration file: /config/registry/passwd

Clearing the configuration file: /config/registry/config.yml

Clearing the configuration file: /config/registryctl/env

Clearing the configuration file: /config/registryctl/config.yml

Clearing the configuration file: /config/db/env

Clearing the configuration file: /config/jobservice/env

Clearing the configuration file: /config/jobservice/config.yml

Generated configuration file: /config/portal/nginx.conf

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

loaded secret from file: /data/secret/keys/secretkey

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

[Step 4]: starting Harbor ...

[+] Running 9/10

⠸ Network harbor_harbor Created 2.3s

✔ Container harbor-log Started 0.4s

✔ Container harbor-db Started 1.3s

✔ Container harbor-portal Started 1.3s

✔ Container redis Started 1.2s

✔ Container registry Started 1.2s

✔ Container registryctl Started 1.3s

✔ Container harbor-core Started 1.6s

✔ Container nginx Started 2.1s

✔ Container harbor-jobservice Started 2.2s

✔ ----Harbor has been installed and started successfully.----

[root@k8s-03 harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

49d3c2bd157f goharbor/nginx-photon:v2.5.0 "nginx -g 'daemon of…" 11 seconds ago Up 8 seconds (health: starting) 0.0.0.0:80->8080/tcp, :::80->8080/tcp, 0.0.0.0:443->8443/tcp, :::443->8443/tcp nginx

60a868e50223 goharbor/harbor-jobservice:v2.5.0 "/harbor/entrypoint.…" 11 seconds ago Up 8 seconds (health: starting) harbor-jobservice

abf5e1d382b1 goharbor/harbor-core:v2.5.0 "/harbor/entrypoint.…" 11 seconds ago Up 8 seconds (health: starting) harbor-core

9f5415aa4086 goharbor/harbor-portal:v2.5.0 "nginx -g 'daemon of…" 11 seconds ago Up 9 seconds (health: starting) harbor-portal

f4c2c38abe04 goharbor/harbor-db:v2.5.0 "/docker-entrypoint.…" 11 seconds ago Up 9 seconds (health: starting) harbor-db

74b6a076b5b2 goharbor/harbor-registryctl:v2.5.0 "/home/harbor/start.…" 11 seconds ago Up 8 seconds (health: starting) registryctl

8c3bead9c56e goharbor/redis-photon:v2.5.0 "redis-server /etc/r…" 11 seconds ago Up 9 seconds (health: starting) redis

d09c4161d411 goharbor/registry-photon:v2.5.0 "/home/harbor/entryp…" 11 seconds ago Up 9 seconds (health: starting) registry

90f8c13f0490 goharbor/harbor-log:v2.5.0 "/bin/sh -c /usr/loc…" 11 seconds ago Up 9 seconds (health: starting) 127.0.0.1:1514->10514/tcp harbor-log

[root@k8s-03 harbor]# sudo wget "https://github.com/docker/compose/releases/download/v2.24.5/docker-compose-$(uname -s)-$(uname -m)" -O /usr/local/bin/docker-compose

Resolving release-assets.githubusercontent.com (release-assets.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.109.133, ...

Connecting to release-assets.githubusercontent.com (release-assets.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 61389086 (59M) [application/octet-stream]

Saving to: ‘/usr/local/bin/docker-compose’

/usr/local/bin/docker-compose 100%[=================================================================================================================================================================>] 58.54M 164KB/s in 2m 49s

2025-08-11 16:15:11 (355 KB/s) - ‘/usr/local/bin/docker-compose’ saved [61389086/61389086]

[root@k8s-03 harbor]# sudo chmod +x /usr/local/bin/docker-compose

[root@k8s-03 harbor]# sudo rm -f /usr/bin/docker-compose

[root@k8s-03 harbor]# sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

[root@k8s-03 harbor]# echo $PATH

/root/.local/bin:/root/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/.local/bin

[root@k8s-03 harbor]# docker-compose version

-bash: /root/.local/bin/docker-compose: No such file or directory

[root@k8s-03 harbor]# export PATH=/usr/local/bin:/usr/bin:/root/.local/bin:$PATH

[root@k8s-03 harbor]# echo 'export PATH=/usr/local/bin:$PATH' | sudo tee -a /root/.bashrc

export PATH=/usr/local/bin:$PATH

[root@k8s-03 harbor]# source /root/.bashrc

[root@k8s-03 harbor]# docker-compose version

Docker Compose version v2.24.5

[root@k8s-03 harbor]#

Obtain the certificates

[root@k8s-03 harbor]# sudo ./t.sh

Certificate request self-signature ok

subject=C=CN, ST=Beijing, L=Beijing, O=example, OU=Personal, CN=harbor.telewave.tech

[root@k8s-03 harbor]# ls

LICENSE common common.sh data docker-compose.yml harbor.v2.5.0.tar harbor.yml harbor.yml.bak harbor.yml.tmpl install.sh prepare t.sh

[root@k8s-03 harbor]# pwd

/root/harbor

[root@k8s-03 harbor]# ls /work/harbor/cert/

ca.crt ca.key ca.srl harbor.telewave.tech.cert harbor.telewave.tech.crt harbor.telewave.tech.csr harbor.telewave.tech.key v3.ext
