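I. Preparation

0. Enable the root user on Ubuntu

sudo passwd root

su - root  # enter the password you just set; after you log out, you can log in as root directly next time

# Disable the firewall

systemctl status ufw.service

systemctl stop ufw.service

systemctl disable ufw.service  # stop alone does not survive a reboot

# If SSH refuses root logins, enable them

Edit /etc/ssh/sshd_config with vi and set:

PermitRootLogin yes

Restart the service:

systemctl restart sshd

# Configure a proxy to speed up downloads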

Make the proxy setting permanent (for login shells):

cat >/etc/profile.d/proxy.sh << 'EOF'

export http_proxy="http://192.168.1.9:7890"

export https_proxy="http://192.168.1.9:7890"

export HTTP_PROXY="$http_proxy"

export HTTPS_PROXY="$https_proxy"

export no_proxy="127.0.0.1,localhost,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.cluster.local,.svc"

export NO_PROXY="$no_proxy"

EOF

source /etc/profile.d/proxy.sh
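The no_proxy list relies on 10.0.0.0/8 to keep cluster-internal traffic off the proxy: the podSubnet (10.244.0.0/16) and serviceSubnet (10.96.0.0/12) set later in kubeadm.yaml both fall inside it. The bash sketch below checks that containment. The function names are made up for illustration, and the check only matters for clients that honor CIDR entries in NO_PROXY (Go programs such as containerd and kubelet do; not every tool does).

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address into a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# cidr_contains OUTER INNER: succeed if network INNER lies entirely inside OUTER.
cidr_contains() {
  local outer_ip=${1%/*} outer_len=${1#*/}
  local inner_ip=${2%/*} inner_len=${2#*/}
  (( inner_len >= outer_len )) || return 1
  # Network mask of OUTER, kept to 32 bits (bash arithmetic is 64-bit).
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  local outer_net inner_net
  outer_net=$(ip_to_int "$outer_ip")
  inner_net=$(ip_to_int "$inner_ip")
  (( (inner_net & mask) == (outer_net & mask) ))
}

# covered_by_no_proxy CIDR LIST: succeed if a CIDR entry of the comma-separated LIST contains CIDR.
# Hostname/domain entries such as localhost or .svc are skipped.
covered_by_no_proxy() {
  local cidr=$1 entry
  local -a entries
  IFS=, read -r -a entries <<< "$2"
  for entry in "${entries[@]}"; do
    [[ $entry == */* ]] || continue
    cidr_contains "$entry" "$cidr" && return 0
  done
  return 1
}

no_proxy_list="127.0.0.1,localhost,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.cluster.local,.svc"
for cidr in 10.244.0.0/16 10.96.0.0/12; do
  if covered_by_no_proxy "$cidr" "$no_proxy_list"; then
    echo "$cidr bypasses the proxy"
  else
    echo "$cidr is NOT covered by no_proxy"
  fi
done
```

If you change podSubnet or serviceSubnet later, rerun the loop with the new ranges before touching the proxy settings.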

# Do the following only after containerd has been installed (section II)

mkdir -p /etc/systemd/system/containerd.service.d

cat >/etc/systemd/system/containerd.service.d/http-proxy.conf << 'EOF'

[Service]

Environment="HTTP_PROXY=http://192.168.1.9:7890"

Environment="HTTPS_PROXY=http://192.168.1.9:7890"

Environment="NO_PROXY=127.0.0.1,localhost,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.cluster.local,.svc"

EOF

systemctl daemon-reload

systemctl restart containerd
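The proxy address now lives in two files. /etc/profile.d/proxy.sh is only sourced by login shells, and systemd services such as containerd never read it, which is why the drop-in is needed as well. Below is a minimal sketch that writes both files from one set of values so they cannot drift apart. `write_proxy_files` is a hypothetical helper, and the ROOT argument lets you try it in a scratch directory before pointing it at `/`.

```bash
#!/usr/bin/env bash
# write_proxy_files ROOT PROXY_URL NO_PROXY_LIST  (hypothetical helper)
# Writes the login-shell profile snippet and the containerd systemd drop-in under ROOT:
# "/" on a real node (as root), a scratch directory for a dry run.
write_proxy_files() {
  local root=$1 proxy=$2 no_proxy=$3
  mkdir -p "$root/etc/profile.d" "$root/etc/systemd/system/containerd.service.d"

  # Sourced by login shells through /etc/profile; \$ keeps the variable references literal.
  cat > "$root/etc/profile.d/proxy.sh" <<EOF
export http_proxy="$proxy"
export https_proxy="$proxy"
export HTTP_PROXY="\$http_proxy"
export HTTPS_PROXY="\$https_proxy"
export no_proxy="$no_proxy"
export NO_PROXY="\$no_proxy"
EOF

  # systemd services never read /etc/profile.d, so containerd gets its own Environment= lines.
  cat > "$root/etc/systemd/system/containerd.service.d/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=$proxy"
Environment="HTTPS_PROXY=$proxy"
Environment="NO_PROXY=$no_proxy"
EOF
}
```

To apply it on a node as root, run `write_proxy_files / http://192.168.1.9:7890 "127.0.0.1,localhost,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.cluster.local,.svc"`, then `systemctl daemon-reload && systemctl restart containerd`. Running `systemctl show containerd --property=Environment` then shows whether the variables reached the service.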

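crictl pull docker.io/library/busybox:latest  # test that images can be pulled through the proxy

1. Open the Netplan configuration file

sudo nano /etc/netplan/00-installer-config.yaml  # adjust to the actual file name

2. Edit the configuration file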

2.1 Dynamic IP (DHCP)

network:
  ethernets:
    ens33:  # NIC name (check with `ip a`)
      dhcp4: true
  version: 2

2.2 Static IP

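network:
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.1.100/24]  # IP/prefix length
      routes:
        - to: default                # default route; older Netplan used gateway4: 192.168.1.1, which 22.04 reports as deprecated
          via: 192.168.1.1           # gateway
      nameservers:
        addresses: [8.8.8.8, 1.1.1.1]  # DNS servers
  version: 2

3. Apply the configuration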

sudo netplan apply

4. Remote SSH login

# Edit /etc/ssh/sshd_config

PermitRootLogin yes

sudo systemctl restart sshd

On all three hosts:

Use the Aliyun apt mirror on Ubuntu 22.04.4 (jammy)

Back up the original file first:

sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak

vi /etc/apt/sources.list

deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

Machine configuration

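# Set the hostname (on each host, with that host's own name)
sudo hostnamectl set-hostname <hostname>
# Reload the hostname without rebooting
sudo hostname -F /etc/hostname

cat >> /etc/hosts << "EOF"
192.168.110.88 k8s-master-01 m1
192.168.110.70 k8s-node-01 n1
192.168.110.176 k8s-node-02 n2
EOF

Cluster communication (passwordless SSH)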

ssh-keygen
ssh-copy-id m1
ssh-copy-id n1
ssh-copy-id n2

Disable swap

Run on every host in the cluster:

sed -ri 's/^([^#].*swap.*)$/#\1/' /etc/fstab && grep swap /etc/fstab && swapoff -a && free -h

Time synchronization

On the master node:

sudo apt install chrony -y
mv /etc/chrony/conf.d /etc/chrony/conf.d.bak && mkdir -p /etc/chrony/conf.d  # back up the old drop-in directory and recreate it empty
cat << 'EOF' > /etc/chrony/conf.d/aliyun.conf
server ntp1.aliyun.com iburst minpoll 4 maxpoll 10
server ntp2.aliyun.com iburst minpoll 4 maxpoll 10
server ntp3.aliyun.com iburst minpoll 4 maxpoll 10
server ntp4.aliyun.com iburst minpoll 4 maxpoll 10
server ntp5.aliyun.com iburst minpoll 4 maxpoll 10
server ntp6.aliyun.com iburst minpoll 4 maxpoll 10
server ntp7.aliyun.com iburst minpoll 4 maxpoll 10
driftfile /var/lib/chrony/drift
makestep 10 3
rtcsync
allow 0.0.0.0/0
local stratum 10
keyfile /etc/chrony/chrony.keys
logdir /var/log/chrony
stratumweight 0.05
noclientlog
logchange 0.5
EOF
systemctl restart chronyd.service  # restart rather than start, so the config is reloaded whether or not the service was already running
systemctl enable chronyd.service
systemctl status chronyd.service

On the worker nodes:

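sudo apt install chrony -y
mv /etc/chrony/conf.d /etc/chrony/conf.d.bak && mkdir -p /etc/chrony/conf.d
cat > /etc/chrony/conf.d/aliyun.conf << EOF
server 192.168.110.88 iburst
driftfile /var/lib/chrony/drift
makestep 10 3
rtcsync
local stratum 10
keyfile /etc/chrony/chrony.keys
logdir /var/log/chrony
stratumweight 0.05
noclientlog
logchange 0.5
EOF
systemctl restart chronyd.service && systemctl enable chronyd.service
chronyc sources  # the master (192.168.110.88) should eventually be marked ^*

Set kernel parameters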

Run on every host in the cluster:

cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
# fs.may_detach_mounts = 1  (RHEL/CentOS-only key; it does not exist on Ubuntu kernels)
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
# net.ipv4.ip_conntrack_max = 65536  (obsolete name; current kernels use net.netfilter.nf_conntrack_max)
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
# Apply immediately (the net.bridge.* keys only exist once br_netfilter is loaded, see step 1 below)
sysctl --system

# 1. Load the required kernel module
sudo modprobe br_netfilter
# 2. Make sure the module is loaded at boot
echo "br_netfilter" | sudo tee /etc/modules-load.d/k8s.conf
# 3. Configure the network parameters (separate file, so the k8s.conf written above is not overwritten)
cat <<EOF | sudo tee /etc/sysctl.d/k8s-net.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
# 4. Apply the configuration
sudo sysctl --system
# 5. Verify
ls /proc/sys/net/bridge/  # should list bridge-nf-call-iptables
cat /proc/sys/net/bridge/bridge-nf-call-iptables  # should print 1

Install common tools

sudo apt update
sudo apt install -y expect wget jq psmisc vim net-tools telnet lvm2 git ntpdate chrony bind9-utils rsync unzip

Install ipvsadm

sudo apt install -y ipvsadm ipset sysstat conntrack
# libseccomp is preinstalled; check with:
dpkg -l | grep libseccomp

On Ubuntu 22.04.4 the /etc/sysconfig/modules/ directory usually does not exist. That directory is a Red Hat/CentOS convention; Ubuntu relies on systemd, whose systemd-modules-load service loads the modules listed in /etc/modules-load.d/*.conf at boot. Ubuntu therefore does not use /etc/sysconfig/modules/ to manage module loading.

If you want the IPVS modules to be loaded automatically at boot, proceed as follows:

Create /etc/modules-load.d/ipvs.conf and list in it every module that should be loaded at boot:

echo "ip_vs" > /etc/modules-load.d/ipvs.conf
echo "ip_vs_lc" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_wlc" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_rr" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_wrr" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_lblc" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_lblcr" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_dh" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_sh" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_fo" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_nq" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_sed" >> /etc/modules-load.d/ipvs.conf
echo "ip_vs_ftp" >> /etc/modules-load.d/ipvs.conf
echo "nf_conntrack" >> /etc/modules-load.d/ipvs.conf

Load the modules: load them by hand with modprobe now, or let the system load them at the next reboot:

sudo modprobe ip_vs
sudo modprobe ip_vs_lc
sudo modprobe ip_vs_wlc
sudo modprobe ip_vs_rr
sudo modprobe ip_vs_wrr
sudo modprobe ip_vs_lblc
sudo modprobe ip_vs_lblcr
sudo modprobe ip_vs_dh
sudo modprobe ip_vs_sh
sudo modprobe ip_vs_fo
sudo modprobe ip_vs_nq
sudo modprobe ip_vs_sed
sudo modprobe ip_vs_ftp
sudo modprobe nf_conntrack

Verify that the modules are loaded with lsmod:

lsmod | grep ip_vs

# 1. Kernel modules
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter
# 2. Required sysctl settings
cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# 3. Optional common tuning (as needed)
cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-extra.conf
fs.inotify.max_user_watches = 524288
fs.inotify.max_user_instances = 8192
fs.file-max = 1000000
EOF
# 4. Apply all sysctl settings
sudo sysctl --system

II. Install containerd (on all three nodes)

# If libseccomp is newer than 2.4 there is no need to install it again
root@k8s-master-01:/etc/modules-load.d# dpkg -l | grep libseccomp
ii  libseccomp2:amd64  2.5.3-2ubuntu2  amd64  high level interface to Linux seccomp filter

Installation

apt install -y containerd
containerd --version  # check the version

Configuration

mkdir -pv /etc/containerd
containerd config default > /etc/containerd/config.toml  # generate a default config file for containerd
vi /etc/containerd/config.toml
# Point sandbox_image at the image you built in your own registry:
sandbox_image = "registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8"
# (kubeadm 1.30 lists pause:3.9, see the image list in section III; recent kubeadm versions warn during init when the runtime's sandbox image differs)

# Use systemd as the container cgroup driver
grep SystemdCgroup /etc/containerd/config.toml
sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml
grep SystemdCgroup /etc/containerd/config.toml

# Configure registry mirrors (required; otherwise the CNI plugin images cannot be pulled from docker.io later)
# Reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration
# Set config_path = "/etc/containerd/certs.d"
sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml
mkdir -p /etc/containerd/certs.d/docker.io
cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF
server = "https://docker.io"
[host."https://dockerproxy.com"]
  capabilities = ["pull", "resolve"]
[host."https://docker.m.daocloud.io"]
  capabilities = ["pull", "resolve"]
[host."https://docker.chenby.cn"]
  capabilities = ["pull", "resolve"]
[host."https://registry.docker-cn.com"]
  capabilities = ["pull", "resolve"]
[host."http://hub-mirror.c.163.com"]
  capabilities = ["pull", "resolve"]
EOF

# Start containerd and enable it at boot
systemctl daemon-reload && systemctl restart containerd
systemctl enable --now containerd
# Check the containerd status
systemctl status containerd
# Check the containerd version
ctr version
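Before moving on to section III, it is worth confirming on each node that the preparation from sections I and II took effect. Below is a read-only bash sketch, assuming the standard /proc layout: swap entries come from /proc/swaps, sysctl values from /proc/sys, and loaded modules from /proc/modules (the file lsmod reads). The function names and the PROC_ROOT override are illustrative. A module compiled into the kernel rather than loaded does not appear in /proc/modules, so treat a FAIL there as a reason to look closer, not as a verdict.

```bash
#!/usr/bin/env bash
# Read-only node checks for the preparation steps in sections I and II.
# PROC_ROOT defaults to /proc; it is only overridden to point the checks at a fake tree.
PROC_ROOT=${PROC_ROOT:-/proc}

# Swap is off when /proc/swaps holds nothing but its header line.
check_swap_off() {
  [ "$(wc -l < "$PROC_ROOT/swaps")" -le 1 ]
}

# check_sysctl KEY EXPECTED: net.ipv4.ip_forward is read from /proc/sys/net/ipv4/ip_forward.
check_sysctl() {
  local path
  path="$PROC_ROOT/sys/$(tr . / <<< "$1")"
  [ -r "$path" ] && [ "$(cat "$path")" = "$2" ]
}

# check_module NAME: loaded modules appear in the first column of /proc/modules.
check_module() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "$PROC_ROOT/modules"
}

# chrony_synced: reads `chronyc sources` output on stdin; "^*" marks the source
# chronyd is currently synchronized to.
chrony_synced() {
  grep -q '^\^\*'
}

run_preflight() {
  local failed=0 key mod
  check_swap_off || { echo "FAIL: swap is still active"; failed=1; }
  for key in net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    check_sysctl "$key" 1 || { echo "FAIL: $key is not 1"; failed=1; }
  done
  for mod in overlay br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    check_module "$mod" || { echo "FAIL: module $mod is not loaded"; failed=1; }
  done
  if [ "$failed" -eq 0 ]; then echo "preflight OK"; fi
  return "$failed"
}
```

Source the file and run `run_preflight` on every node. To check time sync separately, run `chronyc sources | chrony_synced && echo synced`.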

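III. Install kubeadm, kubelet and kubectl (v1.30)

1. Prepare Kubernetes on all three machines

Configure the package repository

apt-get update && apt-get install -y apt-transport-https ca-certificates curl gpg

# 1. Store the ASCII-armored key as-is

sudo mkdir -p /etc/apt/keyrings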

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo tee /etc/apt/keyrings/kubernetes-apt-keyring.asc > /dev/null

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.asc] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# 2. Or store the key in binary (dearmored) form instead; use one of the two methods, not both

sudo mkdir -p /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key \
  | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" \
  | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

sudo apt-get install -y kubeadm=1.30.14-1.1 kubelet=1.30.14-1.1 kubectl=1.30.14-1.1

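sudo apt-mark hold kubelet kubeadm kubectl

2. Master node steps (do not run these on the worker nodes)

Initialize the master node (run on the master only)

# The required images can be listed with kubeadm config images list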

[root@k8s-master-01 ~]# kubeadm config images list

registry.k8s.io/kube-apiserver:v1.30.0

registry.k8s.io/kube-controller-manager:v1.30.0

registry.k8s.io/kube-scheduler:v1.30.0

registry.k8s.io/kube-proxy:v1.30.0

registry.k8s.io/coredns/coredns:v1.11.1

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.12-0

Generate a default configuration, then edit it:

kubeadm config print init-defaults > kubeadm.yaml

The edited file:

root@k8s-master-01:~# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.110.88
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master-01
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.30.3
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

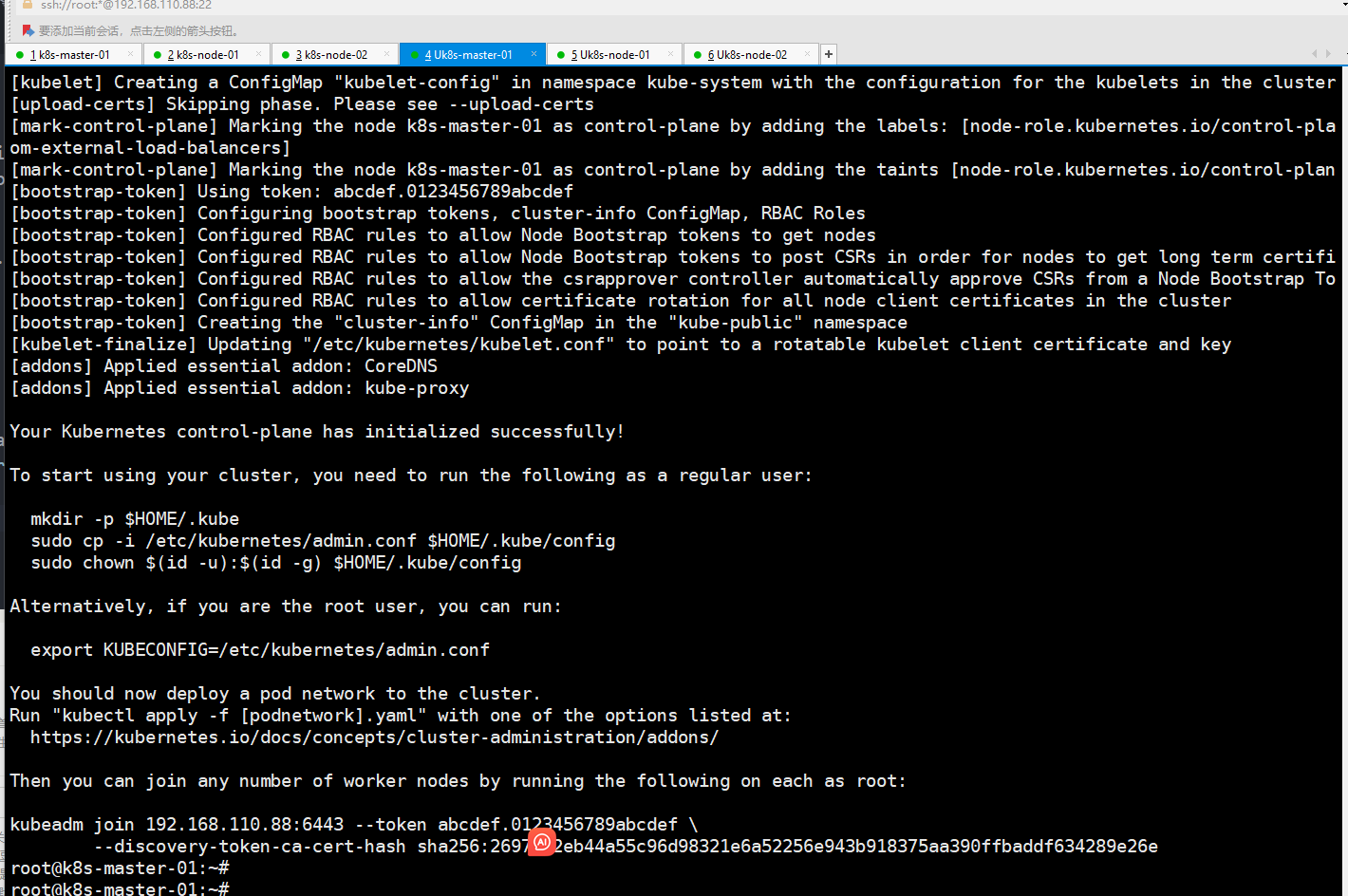

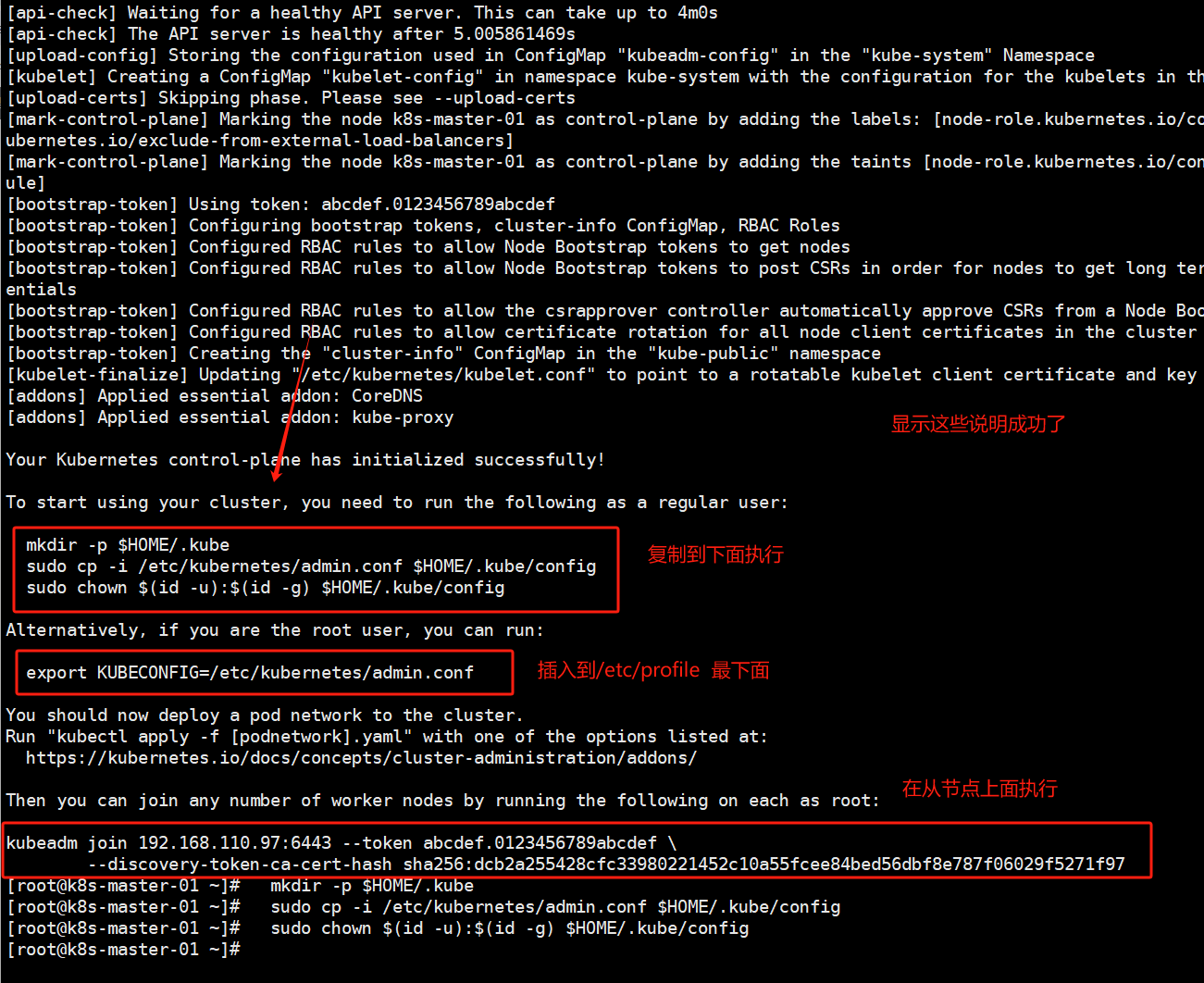
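The kubeadm.yaml above is the output of `kubeadm config print init-defaults` with a few edits: advertiseAddress, the node name, imageRepository, podSubnet (10.244.0.0/16, the network flannel's manifest uses by default), and two extra documents for kube-proxy and the kubelet. Below is a hypothetical sed-based helper that applies the same edits. It assumes the defaults contain the placeholder lines `advertiseAddress: 1.2.3.4` and `name: node`, so check your generated file first. kubernetesVersion is left as printed.

```bash
#!/usr/bin/env bash
# customize_kubeadm_yaml FILE ADVERTISE_IP NODE_NAME  (hypothetical helper)
# Applies the edits shown above to the output of `kubeadm config print init-defaults`.
# Assumes the placeholder lines "advertiseAddress: 1.2.3.4" and "name: node" are present.
customize_kubeadm_yaml() {
  local file=$1 ip=$2 name=$3
  sed -i -E \
    -e "s/^( *advertiseAddress:).*/\1 ${ip}/" \
    -e "s/^( *name:) node$/\1 ${name}/" \
    -e "s#^( *imageRepository:).*#\1 registry.cn-hangzhou.aliyuncs.com/google_containers#" \
    "$file"

  # Add podSubnet right after serviceSubnet, reusing its indentation (only once).
  grep -q 'podSubnet:' "$file" ||
    sed -i -E "s#^( *)serviceSubnet: .*#&\n\1podSubnet: 10.244.0.0/16#" "$file"

  # Append the kube-proxy (IPVS) and kubelet (systemd cgroup driver) documents (only once).
  grep -q 'kind: KubeProxyConfiguration' "$file" || cat >> "$file" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Usage: `customize_kubeadm_yaml kubeadm.yaml 192.168.110.88 k8s-master-01`. Running it a second time does not add podSubnet or the extra documents again. Recent kubeadm versions can also check the result with `kubeadm config validate --config kubeadm.yaml`.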

Deploy Kubernetes

kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap
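On success, kubeadm init finishes by printing the steps that let kubectl talk to the new cluster. Without them, kubectl on the master falls back to localhost:8080, the same error shown further below for a worker node. Below, those steps are wrapped in a hypothetical helper. The chmod 600 is an extra step, since the file holds cluster-admin credentials.

```bash
#!/usr/bin/env bash
# setup_kubeconfig [ADMIN_CONF] [TARGET_HOME]  (hypothetical helper)
# The steps kubeadm init prints on success: copy admin.conf to ~/.kube/config and take ownership.
setup_kubeconfig() {
  local src=${1:-/etc/kubernetes/admin.conf} home=${2:-$HOME}
  mkdir -p "$home/.kube"
  cp "$src" "$home/.kube/config"
  chown "$(id -u):$(id -g)" "$home/.kube/config"
  # Extra step: the file carries cluster-admin credentials, so keep it private.
  chmod 600 "$home/.kube/config"
}
```

Run `setup_kubeconfig` on the master. As root, `export KUBECONFIG=/etc/kubernetes/admin.conf` works too. The workers still have to join the cluster: running `kubeadm token create --print-join-command` on the master prints a ready-made `kubeadm join` command to run on each worker.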

Deploy the network plugin

Download the network plugin manifest

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

[root@k8s-master-01 ~]# grep -i image kube-flannel.yml

image: docker.io/flannel/flannel:v0.25.5

image: docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1

image: docker.io/flannel/flannel:v0.25.5

Change them to the following (the images must first be built or mirrored in your own Aliyun registry):

root@k8s-master-01:~# grep -i image kube-flannel.yml

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1

image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5
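You can script the replacement instead of editing the file by hand. Below is a sketch with a hypothetical `retag_image` helper. The image tags are the ones from the v0.25.5 manifest above. A newer `latest` manifest will likely reference different versions, so adjust the mapping after running the grep.

```bash
#!/usr/bin/env bash
# retag_image FILE OLD_IMAGE NEW_IMAGE  (hypothetical helper)
# Replaces every occurrence of OLD_IMAGE in FILE with NEW_IMAGE.
retag_image() {
  local file=$1 old=$2 new=$3 old_re
  # Escape the dots so sed matches them literally instead of "any character".
  old_re=$(sed 's/[.]/\\./g' <<< "$old")
  sed -i "s#${old_re}#${new}#g" "$file"
}

# The mapping used above: upstream flannel images -> images mirrored to your own Aliyun registry.
flannel_to_mirror() {
  local file=${1:-kube-flannel.yml}
  retag_image "$file" docker.io/flannel/flannel:v0.25.5 \
    registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5
  retag_image "$file" docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1 \
    registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1
}
```

Usage: `flannel_to_mirror kube-flannel.yml && grep -i image kube-flannel.yml`. The first mapping does not touch the cni-plugin line, because that image name continues with `-cni-plugin` instead of `:v0.25.5`.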

Applying it on the master alone is enough

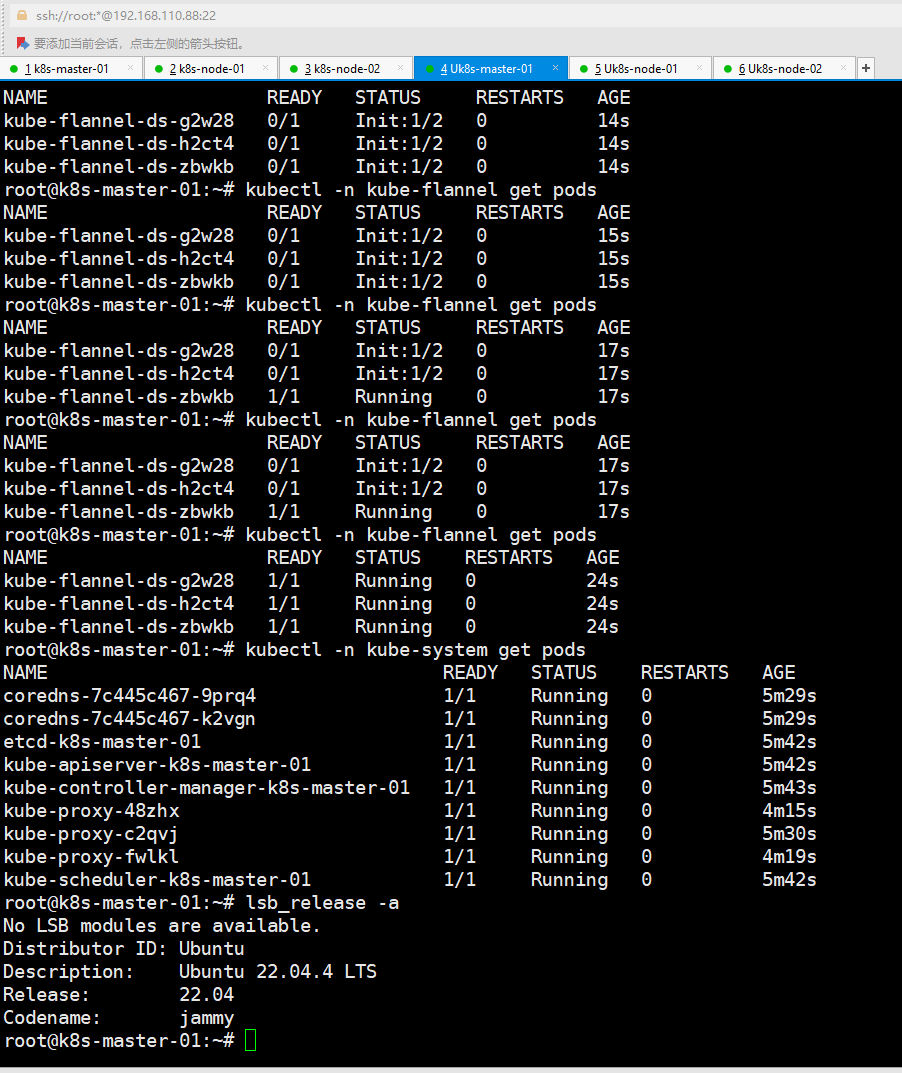

kubectl apply -f kube-flannel.yml

kubectl delete -f kube-flannel.yml  # removes the network plugin again (only if you need to undo it)

Check the status

kubectl -n kube-flannel get pods

kubectl -n kube-flannel get pods -w

[root@k8s-master-01 ~]# kubectl get nodes  # all nodes should be Ready

[root@k8s-master-01 ~]# kubectl -n kube-system get pods  # both coredns pods should be Ready as well

Set up kubectl command completion (run on all nodes)

apt install -y bash-completion

mkdir -p ~/.kube

kubectl completion bash > ~/.kube/completion.bash.inc

echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bashrc  # Ubuntu's interactive shells read ~/.bashrc

source $HOME/.bashrc

If kubectl on a worker node fails like this:

root@k8s-node-01:~# kubectl get node

E0720 07:32:10.289542 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0720 07:32:10.290237 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0720 07:32:10.292469 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0720 07:32:10.292759 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0720 07:32:10.294655 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# On the worker node, copy the kubeconfig from the master (replace the IP with your master's address)

mkdir -p $HOME/.kube

scp root@192.168.30.135:/etc/kubernetes/admin.conf $HOME/.kube/config

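chown $(id -u):$(id -g) $HOME/.kube/config

Re-upload the control-plane certificates (key step)

After the control plane has been initialized successfully with kubeadm init, run the following again; the uploaded certificates and their key are only valid for two hours:

root@k8s-01:~# sudo kubeadm init phase upload-certs --upload-certs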

I0807 05:49:38.988834 143146 version.go:256] remote version is much newer: v1.33.3; falling back to: stable-1.27

W0807 05:49:48.990339 143146 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.27.txt": Get "https://cdn.dl.k8s.io/release/stable-1.27.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W0807 05:49:48.990372 143146 version.go:105] falling back to the local client version: v1.27.6

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

52cb628f88aefbb45cccb94f09bb4e27f9dc77aff464e7bc60af0a9843f41a3f

Then join the additional control-plane node, passing the printed key as <KEY>:

kubeadm join <MASTER_IP>:6443 --token <TOKEN> \
  --discovery-token-ca-cert-hash sha256:<HASH> \
  --control-plane --certificate-key <KEY>
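The `<HASH>` placeholder is the SHA-256 digest of the cluster CA's public key. The openssl pipeline below is the one given in the kubeadm join documentation, wrapped in a hypothetical function. It assumes an RSA CA key, which is what kubeadm generates by default.

```bash
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT]  (hypothetical helper)
# Prints the hex digest for --discovery-token-ca-cert-hash (prefix it with "sha256:").
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master, `ca_cert_hash` prints the value to use as `sha256:<HASH>`. Alternatively, `kubeadm token create --print-join-command` prints a complete join command with a fresh token and the hash already filled in. For a control-plane node, append `--control-plane --certificate-key <KEY>` to it.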
