
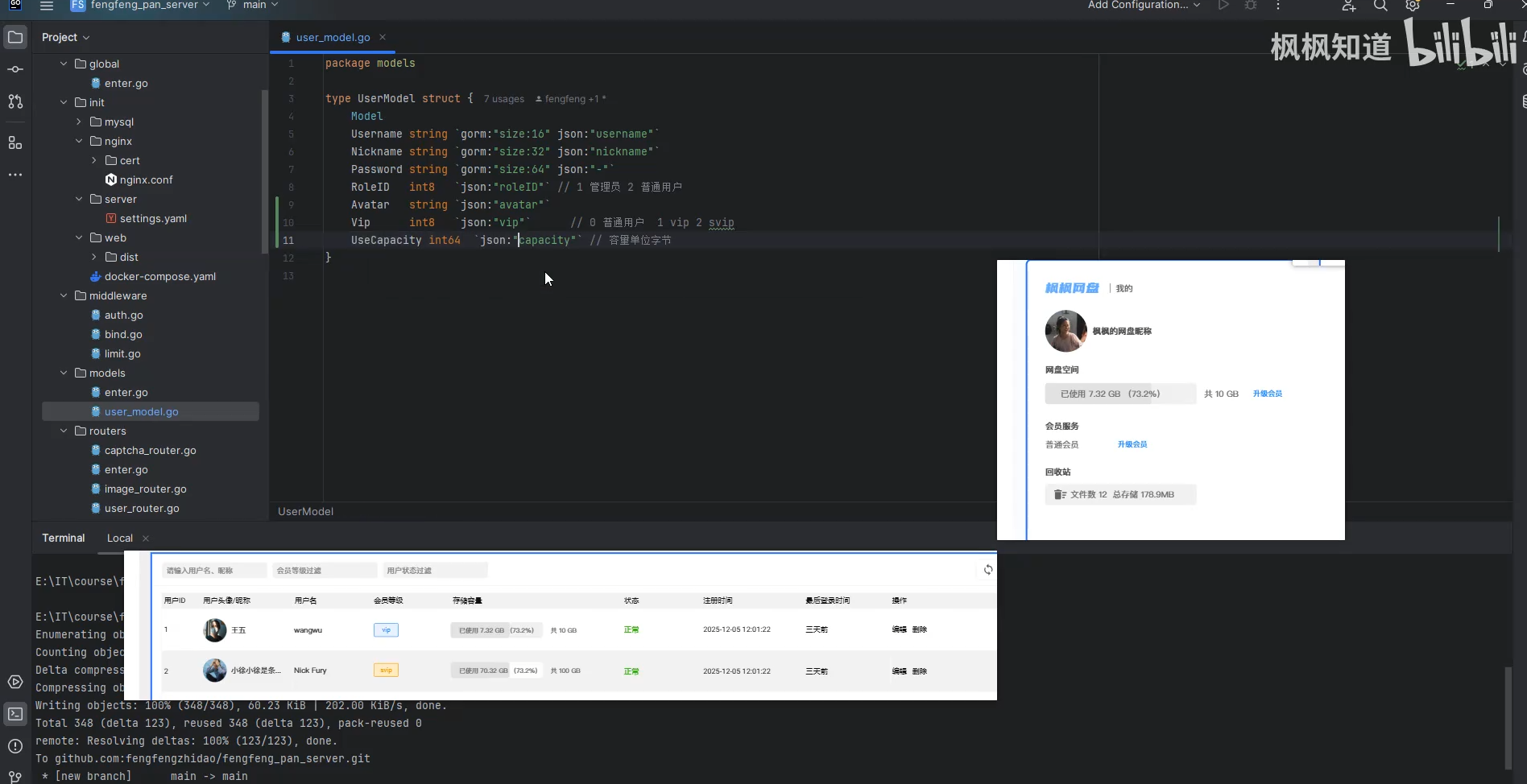

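Cloud drive project

1) "Struct ↔ database table"

In general:

- One `type Xxx struct { ... }` (a model struct) ↔ one table in the database
- A field in the struct ↔ a column in the table

For example, after migration your `UserModel` usually maps to a table named something like `user_models` or `users` (the exact table name depends on your naming strategy).

2) "Tag ↔ column rules"

The tags written on a field:

- `gorm:"size:16"`: affects the database column's type, length, and so on
- `json:"username"`: sets the JSON field name returned by the API
- `json:"-"`: the API never returns this field (for example, a password)

3) When is a table actually created?

The code has to do something like this (example): `db.AutoMigrate(&models.UserModel{}, &models.OtherModel{})`

Only the models passed to `AutoMigrate` (or handled by a migration script you write yourself) are created or updated as tables. So it is not "every file under the models directory becomes a table", but "the model structs you register when migrating become tables".

4) What does "the content becomes the content of the table" mean?

- The struct definition (fields and tags) determines the table structure (column names, types, lengths, indexes, and so on).
- The data held in struct values (what you create or query) is the row data in the table.

In other words, the struct is the "blueprint of the table"; data is only written or read when you call `Create` / `Find`.

What is the difference between LastLoginAt and LastLoginTime?

Both express "the time of the last login", but they store it differently.

A. `LastLoginTime int64`

Usually a Unix timestamp in seconds or milliseconds, for example 1700000000 (seconds) or 1700000000000 (milliseconds).

Pros:

- Simple to store, easy to use across languages, small footprint

Cons:

- Not human-readable; it has to be converted
- Easy to mix up the unit (seconds vs. milliseconds)
- Time functions and range queries at the database level are less convenient than with a datetime column (depends on the database)

B. `LastLoginAt time.Time` / `*time.Time`

A real time type: `time.Time` in Go, usually mapped to a datetime/timestamp column in the database.

Pros:

- Clear semantics and good readability
- Time range queries, sorting, and time functions work naturally in the database

Cons:

- It is a string in JSON output (for example RFC 3339), so the front end has to parse a string
- Time zones and the serialization format need attention

One more key difference, `time.Time` vs `*time.Time`:

- `time.Time`: always has a value (the zero value is 0001-01-01...), so it is hard to express "never logged in"
- `*time.Time`: can be nil, the database column can be NULL, which suits the "never logged in" case better

Which one should you choose? Most projects are better off with:

- `LastLoginAt *time.Time` (NULL allowed)
- Or, if you want simpler JSON, an int64 timestamp, but with one unit used everywhere (pick either seconds or milliseconds and state it in a comment)

Your comment currently says "unit is seconds". If you keep int64, keep seconds; if you switch to milliseconds, update the comment and make sure the whole project is consistent.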


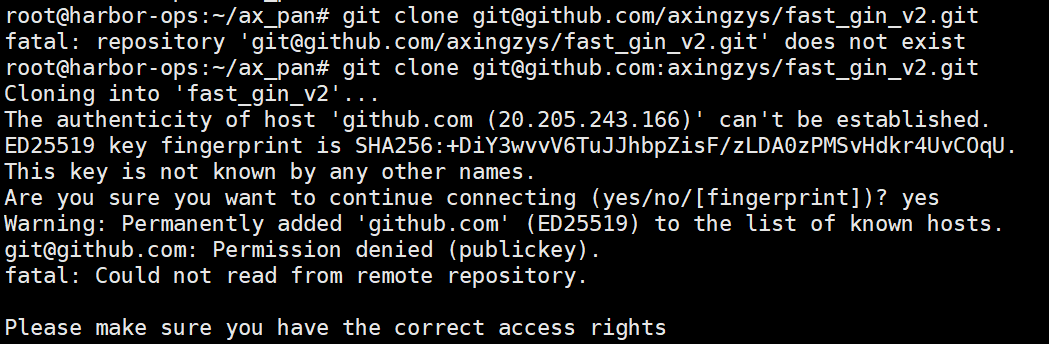

Using GitHub

GitHub does not yet have this node's public key, so authentication fails:

    root@harbor-ops:~/ax_pan# ssh -T git@github.com
    git@github.com: Permission denied (publickey).

Fix: add the node's public key to GitHub.

    root@harbor-ops:~/ax_pan# ls -al ~/.ssh
    total 28
    drwx------  2 root root 4096 Feb  5 11:11 .
    drwx------ 20 root root 4096 Feb 12 17:04 ..
    -rw-------  1 root root    0 Nov 16 22:23 authorized_keys
    -rw-r--r--  1 root root  115 Dec 13 17:16 config
    -rw-------  1 root root  399 Dec 13 17:06 id_ed25519
    -rw-r--r--  1 root root   88 Dec 13 17:06 id_ed25519.pub
    -rw-------  1 root root 4054 Feb 12 17:05 known_hosts
    -rw-------  1 root root 3076 Feb  5 11:11 known_hosts.old
    root@harbor-ops:~/ax_pan# cat /root/.ssh/id_ed25519.pub
    ssh-ed25519 XXXX/ gitlab

After the key is added, the check succeeds:

    root@harbor-ops:~/ax_pan# ssh -T git@github.com
    Hi axingzys! You've successfully authenticated, but GitHub does not provide shell access.

Remove the old remote:

    root@harbor-ops:~/ax_pan/ax_pan_server# git remote -v
    origin https://github.com/axingzys/fast_gin_v2.git (fetch)
    origin https://github.com/axingzys/fast_gin_v2.git (push)
    root@harbor-ops:~/ax_pan/ax_pan_server# git remote remove origin
    root@harbor-ops:~/ax_pan/ax_pan_server# git remote -v
    root@harbor-ops:~/ax_pan/ax_pan_server#

Push to the newly created repository. The current branch is already main, so `git branch -M main` is not needed:

    root@harbor-ops:~/ax_pan/ax_pan_server# git branch
    * main

The following commands push the code to the new repository:

    root@harbor-ops:~/ax_pan/ax_pan_server# git remote add origin https://github.com/axingzys/axpan_server.git
    git push -u origin main
    Enumerating objects: 348, done.
    Counting objects: 100% (348/348), done.
    Delta compression using up to 4 threads
    Compressing objects: 100% (181/181), done.
    Writing objects: 100% (348/348), 60.23 KiB | 60.23 MiB/s, done.
    Total 348 (delta 123), reused 348 (delta 123), pack-reused 0 (from 0)
    remote: Resolving deltas: 100% (123/123), done.
    To https://github.com/axingzys/axpan_server.git
     * [new branch]      main -> main
    branch 'main' set up to track 'origin/main'.

Note that the remote added here is an HTTPS URL, so this push authenticates over HTTPS rather than with the SSH key verified above. To push over SSH, the remote would use the `git@github.com:axingzys/axpan_server.git` form instead.


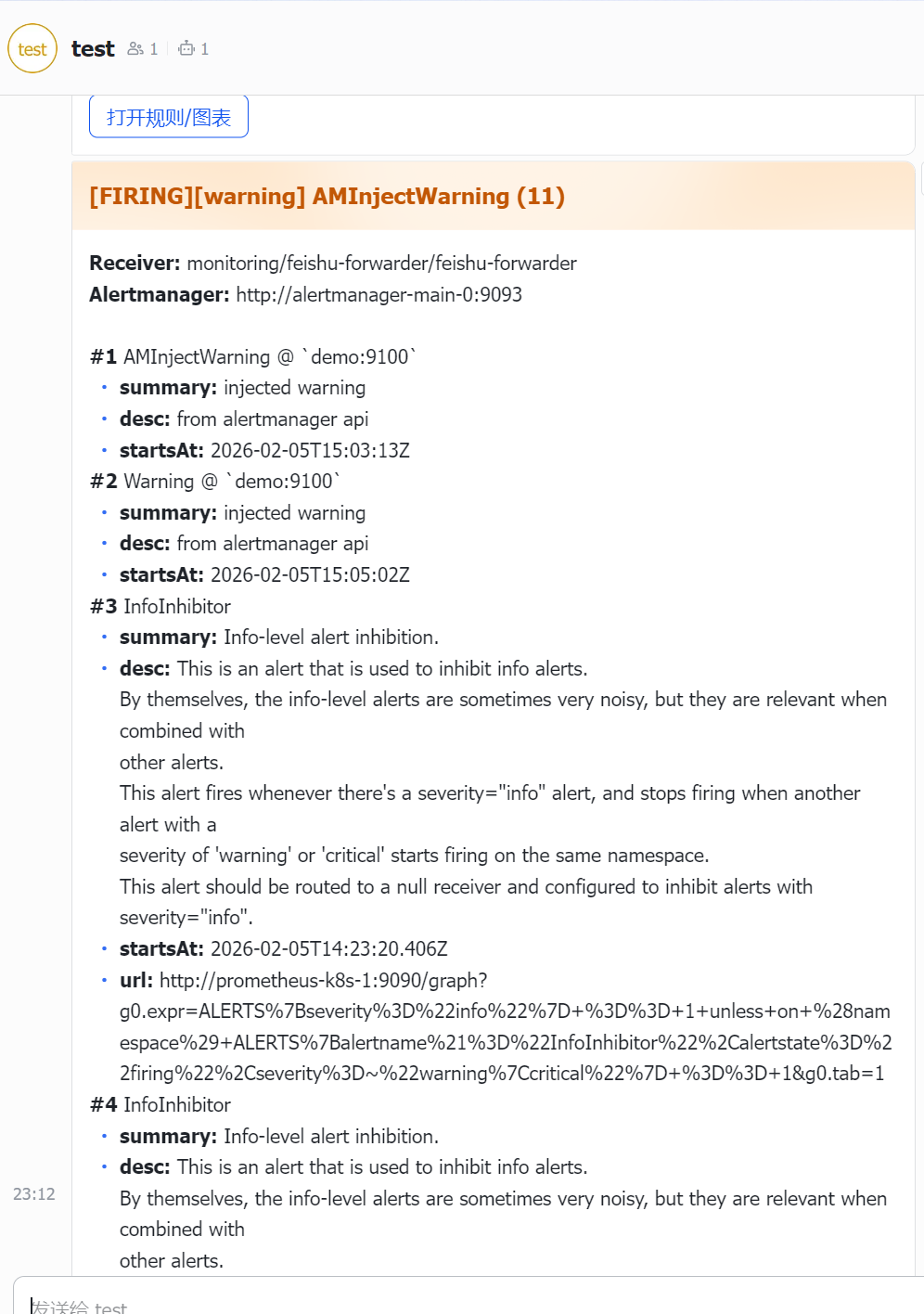

prometheus-operator explained

I. prometheus-operator

    root@k8s-01:/woke/prometheus/feishu# kubectl get pod -n monitoring
    NAME                                   READY   STATUS    RESTARTS       AGE
    alertmanager-main-0                    2/2     Running   0              7d23h
    alertmanager-main-1                    2/2     Running   0              7d23h
    alertmanager-main-2                    2/2     Running   0              7d23h
    blackbox-exporter-7fcbd888d-zv6z6      3/3     Running   0              16d
    feishu-forwarder-646d54f7cc-ks92j      1/1     Running   0              4m11s
    grafana-7ff454c477-l9x2k               1/1     Running   0              16d
    kube-state-metrics-78f95f79bb-wpcln    3/3     Running   0              16d
    node-exporter-622pm                    2/2     Running   24 (36d ago)   40d
    node-exporter-mp2vg                    2/2     Running   0              11h
    node-exporter-rl67z                    2/2     Running   22 (36d ago)   40d
    prometheus-adapter-585d9c5dd5-bfsxw    1/1     Running   0              8d
    prometheus-adapter-585d9c5dd5-pcrnd    1/1     Running   0              8d
    prometheus-k8s-0                       2/2     Running   0              7d23h
    prometheus-k8s-1                       2/2     Running   0              7d23h
    prometheus-operator-78967669c9-5pk25   2/2     Running   0              7d23h

- prometheus-operator: the controller / housekeeper. It watches the custom resources in the cluster (ServiceMonitor/PodMonitor/PrometheusRule/Prometheus/Alertmanager/...) and generates and maintains what actually runs: the Prometheus and Alertmanager StatefulSets, their configuration Secrets, and so on.
- prometheus-k8s-0/1: the actual Prometheus instances (scraping + storage + alert evaluation). They do three things:
  - scrape /metrics from the various targets
  - store the samples in their own TSDB (local time-series database)
  - continuously evaluate alert expressions according to the rules (PrometheusRule) and send alerts to Alertmanager once they fire
- alertmanager-main-0/1/2: the alert "control center" (grouping, routing, inhibition, deduplication, silencing). Prometheus only works out whether an alert has fired; Alertmanager decides who receives it, how alerts are merged, how they are inhibited, and how often they are sent.
- grafana: graphs and dashboards. Grafana does not collect data; it queries Prometheus (PromQL) and draws the result.
- node-exporter: collects node OS metrics (CPU/memory/disk/network) and exposes /metrics for Prometheus to scrape.
- kube-state-metrics: collects the state of K8s resource objects (Deployment replica counts, Pod status, Job success/failure, and so on) and likewise exposes /metrics for Prometheus to scrape.
- blackbox-exporter: probing (HTTP/TCP/ICMP). Prometheus asks blackbox-exporter to probe a target and stores the result as metrics.
- prometheus-adapter: converts Prometheus metrics into custom/external metrics that the K8s HPA can use (not part of the alerting chain discussed here, but a component of the stack).

1.1 How does Prometheus know which targets to scrape?

This is the key point of prometheus-operator: CRDs describe "whom to scrape".

Common CRDs:

- ServiceMonitor: selects certain Services by label, then scrapes their endpoints
- PodMonitor: selects Pods directly by label and scrapes them
- Probe: probe objects for blackbox-exporter (used in some stacks)

Put simply: ServiceMonitor / PodMonitor = "list of scrape targets + how to scrape them".
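Prometheus performs service discovery through the Kubernetes API and then scrapes whatever these CRDs select.

1.2 Where are thresholds (alert rules) configured, and how is "firing" computed?

A threshold is essentially a PromQL expression plus a duration. In the operator stack, alert rules usually live in the PrometheusRule CRD. A rule in a PrometheusRule generally looks like this (sketch):

- `expr`: the alert expression (PromQL)
- `for`: how long the condition must hold before the alert really fires (debouncing)
- `labels`: labels attached to the alert (for example severity)
- `annotations`: summary/description

InfoInhibitor is a rule commonly shipped with kube-prometheus. It is a helper alert used to inhibit info-level alerts: when it fires, it indicates that some info alert is currently firing.

1.3 How does Grafana draw its graphs?

Grafana's data source is usually configured as a Prometheus DataSource (pointing at something like http://prometheus-k8s.monitoring.svc:9090). Each panel of each dashboard is then one or more PromQL queries, for example:

- CPU usage graph: `rate(node_cpu_seconds_total{mode!="idle"}[5m])`
- Pod restart count: `increase(kube_pod_container_status_restarts_total[1h])`
- Business QPS: `sum(rate(http_requests_total[1m])) by (service)`

Grafana neither stores nor collects data; it only queries Prometheus and visualizes the result.

1.4 How does Alertmanager receive alerts from Prometheus, and how does it send them to feishu-forwarder?

Prometheus → Alertmanager

At runtime Prometheus does two things:

- At a short, regular interval it evaluates the alert rules from the PrometheusRule objects.
- When a rule's condition is met the alert first becomes pending; once it has held for the `for` duration it becomes firing, and Prometheus sends the firing alert instances to Alertmanager over HTTP (the Prometheus configuration has `alerting.alertmanagers` pointing at alertmanager-main).

In other words, Prometheus works out the alerts and POSTs the alert events to Alertmanager.

What does Alertmanager do internally?

After receiving alerts, Alertmanager will:

- group: merge similar alerts according to `group_by` (which is why a single notification can arrive with "(9)" alerts)
- dedup: avoid sending the same alert over and over
- inhibit: apply inhibition rules (for example, suppress info alerts while a warning/critical alert is present)
- route: use matchers to decide which receiver (which channel) gets the alert

Alertmanager → feishu-forwarder

When a route matches your webhook receiver, Alertmanager sends an HTTP POST to your forwarder (this is the AMPayload received by the /alertmanager handler in your code).

A typical receiver looks like this (sketch): a receiver named `feishu-forwarder` with a `webhook_configs` entry whose `url` is `http://feishu-forwarder.monitoring.svc:8080/alertmanager`.

The "Receiver: monitoring/feishu-forwarder/feishu-forwarder" shown in the Feishu notification comes from this same chain.

feishu-forwarder → Feishu

The forwarder does the following:

- parses the JSON of the Alertmanager webhook (AMPayload)
- routes to different Feishu group webhooks according to severity
- builds the Feishu message (text/card, or the collapsible-panel card v2 you want now)
- POSTs it to the Feishu bot webhook (with a signature)

In the end the message arrives in the Feishu group.

1.5 The whole chain

- Collection chain: Exporter (/metrics) → Prometheus scrape → TSDB
- Dashboard chain: Grafana → Prometheus PromQL query → graphs
- Alerting chain: PrometheusRule (threshold/expression) → Prometheus evaluates and fires → POST to Alertmanager → Alertmanager (grouping/routing/inhibition) → webhook POST to feishu-forwarder → feishu-forwarder converts to a Feishu card → Feishu bot webhook → group notification

1.6 Verifying each link of the chain

Are the Prometheus scrape targets healthy?

    kubectl -n monitoring port-forward pod/prometheus-k8s-0 9090:9090
    # open http://127.0.0.1:9090/targets in a browser

Do the alert rules exist, and are any currently firing?

    # Prometheus UI:
    # http://127.0.0.1:9090/rules
    # http://127.0.0.1:9090/alerts

Did Alertmanager receive the alerts, and which receiver were they routed to?

    kubectl -n monitoring port-forward pod/alertmanager-main-0 9093:9093
    # open http://127.0.0.1:9093

Did feishu-forwarder receive the webhook?

    kubectl logs -n monitoring deploy/feishu-forwarder -f

II. Monitoring rules in detail

List all rule objects (all namespaces):

    root@k8s-01:/woke/prometheus/feishu# kubectl get prometheusrules -A
    NAMESPACE    NAME                              AGE
    monitoring   alertmanager-main-rules           7d18h
    monitoring   grafana-rules                     7d18h
    monitoring   kube-prometheus-rules             7d18h
    monitoring   kube-state-metrics-rules          7d18h
    monitoring   kubernetes-monitoring-rules       7d18h
    monitoring   node-exporter-rules               7d18h
    monitoring   prometheus-k8s-prometheus-rules   7d18h
    monitoring   prometheus-operator-rules         7d18h

node-exporter-rules

- Source metrics: job="node-exporter" (host/node metrics exported by node_exporter)
- Coverage: CPU, memory, disk, inodes, network, system load, read-only filesystems, clock skew, and so on
- Common metric prefixes: node_cpu_*, node_memory_*, node_filesystem_*, node_network_*, node_load*

kube-state-metrics-rules

- Source metrics: job="kube-state-metrics" (turns K8s resource state into metrics)
- Coverage: Deployment/StatefulSet/DaemonSet replica mismatches, Pod CrashLoopBackOff, Job failures, HPA anomalies, Node conditions (NotReady/Pressure), and so on
- Common metric prefixes: kube_pod_*, kube_deployment_*, kube_statefulset_*, kube_daemonset_*, kube_node_*, kube_job_*

kubernetes-monitoring-rules

- Source metrics: Kubernetes control-plane and node components (varies slightly between clusters)
- Typical jobs: kube-apiserver, kubelet, coredns, kube-controller-manager, kube-scheduler, and so on
- Coverage: API Server error rate/latency, kubelet/cadvisor anomalies, CoreDNS errors, unreachable control-plane components, and so on
- Common metrics: apiserver_*, kubelet_*, coredns_*, scheduler_*, workqueue_*, and so on

kube-prometheus-rules

This is the "general rule pack" that ships with kube-prometheus. It tends to apply to the monitoring stack or the cluster as a whole:

- some general recording rules (aggregating raw metrics into series that are easier to use)
- some general cross-component alerts (rule names change between versions)
- mixed sources: node-exporter, kube-state-metrics, apiserver, kubelet, and others are all used

You can think of it as the "shared recipes that come with this monitoring setup", not tied to any single exporter.

prometheus-k8s-prometheus-rules

- Source metrics: job="prometheus-k8s" (Prometheus's own /metrics)
- Coverage: scrape failures, rule evaluation failures, remote write failures, TSDB problems, disk about to fill up, sample ingestion anomalies, failures sending alerts to Alertmanager, and so on
- Common metric prefixes: prometheus_*, prometheus_tsdb_*, prometheus_rule_*

prometheus-operator-rules

- Source metrics: job="prometheus-operator"
- Coverage: operator reconcile failures/error rate, resource sync problems, configuration reload problems, and so on
- Common metric prefixes: prometheus_operator_*

alertmanager-main-rules

- Source metrics: job="alertmanager-main" (Alertmanager's own /metrics)
- Coverage: notification delivery failures, cluster peer sync problems, silence and notification-queue anomalies, and so on
- Common metric prefixes: alertmanager_*

grafana-rules

- Source metrics: job="grafana" (Grafana /metrics, if enabled)
- Coverage: Grafana's own availability and HTTP errors (some environments also add data-source connectivity alerts)
- Common metric prefixes: grafana_*

View the detailed rule definitions:

    kubectl get prometheusrule node-exporter-rules -n monitoring -o yaml
    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |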
{"apiVersion":"monitoring.coreos.com/v1","kind":"PrometheusRule","metadata":{"annotations":{},"labels":{"app.kubernetes.io/component":"exporter","app.kubernetes.io/name":"node-exporter","app.kubernetes.io/part-of":"kube-prometheus","app.kubernetes.io/version":"1.8.2","prometheus":"k8s","role":"alert-rules"},"name":"node-exporter-rules","namespace":"monitoring"},"spec":{"groups":[{"name":"node-exporter","rules":[{"alert":"NodeFilesystemSpaceFillingUp","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left and is filling up.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemspacefillingup","summary":"Filesystem is predicted to run out of space within the next 24 hours."},"expr":"(\n node_filesystem_avail_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_size_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 15\nand\n predict_linear(node_filesystem_avail_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"}[6h], 24*60*60) \u003c 0\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"1h","labels":{"severity":"warning"}},{"alert":"NodeFilesystemSpaceFillingUp","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left and is filling up fast.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemspacefillingup","summary":"Filesystem is predicted to run out of space within the next 4 hours."},"expr":"(\n node_filesystem_avail_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_size_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 10\nand\n 
predict_linear(node_filesystem_avail_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"}[6h], 4*60*60) \u003c 0\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"1h","labels":{"severity":"critical"}},{"alert":"NodeFilesystemAlmostOutOfSpace","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutofspace","summary":"Filesystem has less than 5% space left."},"expr":"(\n node_filesystem_avail_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_size_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 5\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"30m","labels":{"severity":"warning"}},{"alert":"NodeFilesystemAlmostOutOfSpace","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutofspace","summary":"Filesystem has less than 3% space left."},"expr":"(\n node_filesystem_avail_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_size_bytes{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 3\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"30m","labels":{"severity":"critical"}},{"alert":"NodeFilesystemFilesFillingUp","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left and is filling 
up.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemfilesfillingup","summary":"Filesystem is predicted to run out of inodes within the next 24 hours."},"expr":"(\n node_filesystem_files_free{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_files{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 40\nand\n predict_linear(node_filesystem_files_free{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"}[6h], 24*60*60) \u003c 0\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"1h","labels":{"severity":"warning"}},{"alert":"NodeFilesystemFilesFillingUp","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left and is filling up fast.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemfilesfillingup","summary":"Filesystem is predicted to run out of inodes within the next 4 hours."},"expr":"(\n node_filesystem_files_free{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_files{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 20\nand\n predict_linear(node_filesystem_files_free{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"}[6h], 4*60*60) \u003c 0\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"1h","labels":{"severity":"critical"}},{"alert":"NodeFilesystemAlmostOutOfFiles","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutoffiles","summary":"Filesystem has less than 5% inodes left."},"expr":"(\n 
node_filesystem_files_free{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_files{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 5\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"1h","labels":{"severity":"warning"}},{"alert":"NodeFilesystemAlmostOutOfFiles","annotations":{"description":"Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutoffiles","summary":"Filesystem has less than 3% inodes left."},"expr":"(\n node_filesystem_files_free{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} / node_filesystem_files{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} * 100 \u003c 3\nand\n node_filesystem_readonly{job=\"node-exporter\",fstype!=\"\",mountpoint!=\"\"} == 0\n)\n","for":"1h","labels":{"severity":"critical"}},{"alert":"NodeNetworkReceiveErrs","annotations":{"description":"{{ $labels.instance }} interface {{ $labels.device }} has encountered {{ printf \"%.0f\" $value }} receive errors in the last two minutes.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodenetworkreceiveerrs","summary":"Network interface is reporting many receive errors."},"expr":"rate(node_network_receive_errs_total{job=\"node-exporter\"}[2m]) / rate(node_network_receive_packets_total{job=\"node-exporter\"}[2m]) \u003e 0.01\n","for":"1h","labels":{"severity":"warning"}},{"alert":"NodeNetworkTransmitErrs","annotations":{"description":"{{ $labels.instance }} interface {{ $labels.device }} has encountered {{ printf \"%.0f\" $value }} transmit errors in the last two minutes.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodenetworktransmiterrs","summary":"Network interface is reporting many transmit 
errors."},"expr":"rate(node_network_transmit_errs_total{job=\"node-exporter\"}[2m]) / rate(node_network_transmit_packets_total{job=\"node-exporter\"}[2m]) \u003e 0.01\n","for":"1h","labels":{"severity":"warning"}},{"alert":"NodeHighNumberConntrackEntriesUsed","annotations":{"description":"{{ $value | humanizePercentage }} of conntrack entries are used.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodehighnumberconntrackentriesused","summary":"Number of conntrack are getting close to the limit."},"expr":"(node_nf_conntrack_entries{job=\"node-exporter\"} / node_nf_conntrack_entries_limit) \u003e 0.75\n","labels":{"severity":"warning"}},{"alert":"NodeTextFileCollectorScrapeError","annotations":{"description":"Node Exporter text file collector on {{ $labels.instance }} failed to scrape.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodetextfilecollectorscrapeerror","summary":"Node Exporter text file collector failed to scrape."},"expr":"node_textfile_scrape_error{job=\"node-exporter\"} == 1\n","labels":{"severity":"warning"}},{"alert":"NodeClockSkewDetected","annotations":{"description":"Clock at {{ $labels.instance }} is out of sync by more than 0.05s. Ensure NTP is configured correctly on this host.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodeclockskewdetected","summary":"Clock skew detected."},"expr":"(\n node_timex_offset_seconds{job=\"node-exporter\"} \u003e 0.05\nand\n deriv(node_timex_offset_seconds{job=\"node-exporter\"}[5m]) \u003e= 0\n)\nor\n(\n node_timex_offset_seconds{job=\"node-exporter\"} \u003c -0.05\nand\n deriv(node_timex_offset_seconds{job=\"node-exporter\"}[5m]) \u003c= 0\n)\n","for":"10m","labels":{"severity":"warning"}},{"alert":"NodeClockNotSynchronising","annotations":{"description":"Clock at {{ $labels.instance }} is not synchronising. 
Ensure NTP is configured on this host.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodeclocknotsynchronising","summary":"Clock not synchronising."},"expr":"min_over_time(node_timex_sync_status{job=\"node-exporter\"}[5m]) == 0\nand\nnode_timex_maxerror_seconds{job=\"node-exporter\"} \u003e= 16\n","for":"10m","labels":{"severity":"warning"}},{"alert":"NodeRAIDDegraded","annotations":{"description":"RAID array '{{ $labels.device }}' at {{ $labels.instance }} is in degraded state due to one or more disks failures. Number of spare drives is insufficient to fix issue automatically.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/noderaiddegraded","summary":"RAID Array is degraded."},"expr":"node_md_disks_required{job=\"node-exporter\",device=~\"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)\"} - ignoring (state) (node_md_disks{state=\"active\",job=\"node-exporter\",device=~\"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)\"}) \u003e 0\n","for":"15m","labels":{"severity":"critical"}},{"alert":"NodeRAIDDiskFailure","annotations":{"description":"At least one device in RAID array at {{ $labels.instance }} failed. 
Array '{{ $labels.device }}' needs attention and possibly a disk swap.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/noderaiddiskfailure","summary":"Failed device in RAID array."},"expr":"node_md_disks{state=\"failed\",job=\"node-exporter\",device=~\"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)\"} \u003e 0\n","labels":{"severity":"warning"}},{"alert":"NodeFileDescriptorLimit","annotations":{"description":"File descriptors limit at {{ $labels.instance }} is currently at {{ printf \"%.2f\" $value }}%.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefiledescriptorlimit","summary":"Kernel is predicted to exhaust file descriptors limit soon."},"expr":"(\n node_filefd_allocated{job=\"node-exporter\"} * 100 / node_filefd_maximum{job=\"node-exporter\"} \u003e 70\n)\n","for":"15m","labels":{"severity":"warning"}},{"alert":"NodeFileDescriptorLimit","annotations":{"description":"File descriptors limit at {{ $labels.instance }} is currently at {{ printf \"%.2f\" $value }}%.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodefiledescriptorlimit","summary":"Kernel is predicted to exhaust file descriptors limit soon."},"expr":"(\n node_filefd_allocated{job=\"node-exporter\"} * 100 / node_filefd_maximum{job=\"node-exporter\"} \u003e 90\n)\n","for":"15m","labels":{"severity":"critical"}},{"alert":"NodeCPUHighUsage","annotations":{"description":"CPU usage at {{ $labels.instance }} has been above 90% for the last 15 minutes, is currently at {{ printf \"%.2f\" $value }}%.\n","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodecpuhighusage","summary":"High CPU usage."},"expr":"sum without(mode) (avg without (cpu) (rate(node_cpu_seconds_total{job=\"node-exporter\", mode!=\"idle\"}[2m]))) * 100 \u003e 90\n","for":"15m","labels":{"severity":"info"}},{"alert":"NodeSystemSaturation","annotations":{"description":"System load per core at {{ $labels.instance }} has been 
above 2 for the last 15 minutes, is currently at {{ printf \"%.2f\" $value }}.\nThis might indicate this instance resources saturation and can cause it becoming unresponsive.\n","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodesystemsaturation","summary":"System saturated, load per core is very high."},"expr":"node_load1{job=\"node-exporter\"}\n/ count without (cpu, mode) (node_cpu_seconds_total{job=\"node-exporter\", mode=\"idle\"}) \u003e 2\n","for":"15m","labels":{"severity":"warning"}},{"alert":"NodeMemoryMajorPagesFaults","annotations":{"description":"Memory major pages are occurring at very high rate at {{ $labels.instance }}, 500 major page faults per second for the last 15 minutes, is currently at {{ printf \"%.2f\" $value }}.\nPlease check that there is enough memory available at this instance.\n","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodememorymajorpagesfaults","summary":"Memory major page faults are occurring at very high rate."},"expr":"rate(node_vmstat_pgmajfault{job=\"node-exporter\"}[5m]) \u003e 500\n","for":"15m","labels":{"severity":"warning"}},{"alert":"NodeMemoryHighUtilization","annotations":{"description":"Memory is filling up at {{ $labels.instance }}, has been above 90% for the last 15 minutes, is currently at {{ printf \"%.2f\" $value }}%.\n","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodememoryhighutilization","summary":"Host is running out of memory."},"expr":"100 - (node_memory_MemAvailable_bytes{job=\"node-exporter\"} / node_memory_MemTotal_bytes{job=\"node-exporter\"} * 100) \u003e 90\n","for":"15m","labels":{"severity":"warning"}},{"alert":"NodeDiskIOSaturation","annotations":{"description":"Disk IO queue (aqu-sq) is high on {{ $labels.device }} at {{ $labels.instance }}, has been above 10 for the last 30 minutes, is currently at {{ printf \"%.2f\" $value }}.\nThis symptom might indicate disk 
saturation.\n","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodediskiosaturation","summary":"Disk IO queue is high."},"expr":"rate(node_disk_io_time_weighted_seconds_total{job=\"node-exporter\", device=~\"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)\"}[5m]) \u003e 10\n","for":"30m","labels":{"severity":"warning"}},{"alert":"NodeSystemdServiceFailed","annotations":{"description":"Systemd service {{ $labels.name }} has entered failed state at {{ $labels.instance }}","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodesystemdservicefailed","summary":"Systemd service has entered failed state."},"expr":"node_systemd_unit_state{job=\"node-exporter\", state=\"failed\"} == 1\n","for":"5m","labels":{"severity":"warning"}},{"alert":"NodeBondingDegraded","annotations":{"description":"Bonding interface {{ $labels.master }} on {{ $labels.instance }} is in degraded state due to one or more slave failures.","runbook_url":"https://runbooks.prometheus-operator.dev/runbooks/node/nodebondingdegraded","summary":"Bonding interface is degraded"},"expr":"(node_bonding_slaves - node_bonding_active) != 0\n","for":"5m","labels":{"severity":"warning"}}]},{"name":"node-exporter.rules","rules":[{"expr":"count without (cpu, mode) (\n node_cpu_seconds_total{job=\"node-exporter\",mode=\"idle\"}\n)\n","record":"instance:node_num_cpu:sum"},{"expr":"1 - avg without (cpu) (\n sum without (mode) (rate(node_cpu_seconds_total{job=\"node-exporter\", mode=~\"idle|iowait|steal\"}[5m]))\n)\n","record":"instance:node_cpu_utilisation:rate5m"},{"expr":"(\n node_load1{job=\"node-exporter\"}\n/\n instance:node_num_cpu:sum{job=\"node-exporter\"}\n)\n","record":"instance:node_load1_per_cpu:ratio"},{"expr":"1 - (\n (\n node_memory_MemAvailable_bytes{job=\"node-exporter\"}\n or\n (\n node_memory_Buffers_bytes{job=\"node-exporter\"}\n +\n node_memory_Cached_bytes{job=\"node-exporter\"}\n +\n node_memory_MemFree_bytes{job=\"node-exporter\"}\n +\n 
node_memory_Slab_bytes{job=\"node-exporter\"}\n )\n )\n/\n node_memory_MemTotal_bytes{job=\"node-exporter\"}\n)\n","record":"instance:node_memory_utilisation:ratio"},{"expr":"rate(node_vmstat_pgmajfault{job=\"node-exporter\"}[5m])\n","record":"instance:node_vmstat_pgmajfault:rate5m"},{"expr":"rate(node_disk_io_time_seconds_total{job=\"node-exporter\", device=~\"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)\"}[5m])\n","record":"instance_device:node_disk_io_time_seconds:rate5m"},{"expr":"rate(node_disk_io_time_weighted_seconds_total{job=\"node-exporter\", device=~\"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)\"}[5m])\n","record":"instance_device:node_disk_io_time_weighted_seconds:rate5m"},{"expr":"sum without (device) (\n rate(node_network_receive_bytes_total{job=\"node-exporter\", device!=\"lo\"}[5m])\n)\n","record":"instance:node_network_receive_bytes_excluding_lo:rate5m"},{"expr":"sum without (device) (\n rate(node_network_transmit_bytes_total{job=\"node-exporter\", device!=\"lo\"}[5m])\n)\n","record":"instance:node_network_transmit_bytes_excluding_lo:rate5m"},{"expr":"sum without (device) (\n rate(node_network_receive_drop_total{job=\"node-exporter\", device!=\"lo\"}[5m])\n)\n","record":"instance:node_network_receive_drop_excluding_lo:rate5m"},{"expr":"sum without (device) (\n rate(node_network_transmit_drop_total{job=\"node-exporter\", device!=\"lo\"}[5m])\n)\n","record":"instance:node_network_transmit_drop_excluding_lo:rate5m"}]}]}} creationTimestamp: "2026-01-29T13:10:34Z" generation: 1 labels: app.kubernetes.io/component: exporter app.kubernetes.io/name: node-exporter app.kubernetes.io/part-of: kube-prometheus app.kubernetes.io/version: 1.8.2 prometheus: k8s role: alert-rules name: node-exporter-rules namespace: monitoring resourceVersion: "17509681" uid: 8f17f249-40fd-4bef-839b-d9389947b19d spec: groups: - name: node-exporter rules: - alert: NodeFilesystemSpaceFillingUp annotations: description: Filesystem on {{ 
$labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available space left and is filling up.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemspacefillingup
        summary: Filesystem is predicted to run out of space within the next 24 hours.
      expr: |
        (
          node_filesystem_avail_bytes{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_size_bytes{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 15
        and
          predict_linear(node_filesystem_avail_bytes{job="node-exporter",fstype!="",mountpoint!=""}[6h], 24*60*60) < 0
        and
          node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0
        )
      for: 1h
      labels:
        severity: warning
    - alert: NodeFilesystemSpaceFillingUp
      annotations:
        description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available space left and is filling up fast.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemspacefillingup
        summary: Filesystem is predicted to run out of space within the next 4 hours.
      expr: |
        (
          node_filesystem_avail_bytes{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_size_bytes{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 10
        and
          predict_linear(node_filesystem_avail_bytes{job="node-exporter",fstype!="",mountpoint!=""}[6h], 4*60*60) < 0
        and
          node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0
        )
      for: 1h
      labels:
        severity: critical
    - alert: NodeFilesystemAlmostOutOfSpace
      annotations:
        description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available space left.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutofspace
        summary: Filesystem has less than 5% space left.
      expr: |
        (
          node_filesystem_avail_bytes{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_size_bytes{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 5
        and
          node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0
        )
      for: 30m
      labels:
        severity: warning
    - alert: NodeFilesystemAlmostOutOfSpace
      annotations:
        description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available space left.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutofspace
        summary: Filesystem has less than 3% space left.
      expr: |
        (
          node_filesystem_avail_bytes{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_size_bytes{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 3
        and
          node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0
        )
      for: 30m
      labels:
        severity: critical
    - alert: NodeFilesystemFilesFillingUp
      annotations:
        description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available inodes left and is filling up.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemfilesfillingup
        summary: Filesystem is predicted to run out of inodes within the next 24 hours.
      expr: |
        (
          node_filesystem_files_free{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_files{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 40
        and
          predict_linear(node_filesystem_files_free{job="node-exporter",fstype!="",mountpoint!=""}[6h], 24*60*60) < 0
        and
          node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0
        )
      for: 1h
      labels:
        severity: warning
    - alert: NodeFilesystemFilesFillingUp
      annotations:
        description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available inodes left and is filling up fast.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemfilesfillingup
        summary: Filesystem is predicted to run out of inodes within the next 4 hours.
      expr: |
        (
          node_filesystem_files_free{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_files{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 20
        and
          predict_linear(node_filesystem_files_free{job="node-exporter",fstype!="",mountpoint!=""}[6h], 4*60*60) < 0
        and
          node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0
        )
      for: 1h
      labels:
        severity: critical
    - alert: NodeFilesystemAlmostOutOfFiles
      annotations:
        description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available inodes left.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutoffiles
        summary: Filesystem has less than 5% inodes left.
expr: | ( node_filesystem_files_free{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_files{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 5 and node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0 ) for: 1h labels: severity: warning - alert: NodeFilesystemAlmostOutOfFiles annotations: description: Filesystem on {{ $labels.device }}, mounted on {{ $labels.mountpoint }}, at {{ $labels.instance }} has only {{ printf "%.2f" $value }}% available inodes left. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefilesystemalmostoutoffiles summary: Filesystem has less than 3% inodes left. expr: | ( node_filesystem_files_free{job="node-exporter",fstype!="",mountpoint!=""} / node_filesystem_files{job="node-exporter",fstype!="",mountpoint!=""} * 100 < 3 and node_filesystem_readonly{job="node-exporter",fstype!="",mountpoint!=""} == 0 ) for: 1h labels: severity: critical - alert: NodeNetworkReceiveErrs annotations: description: '{{ $labels.instance }} interface {{ $labels.device }} has encountered {{ printf "%.0f" $value }} receive errors in the last two minutes.' runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodenetworkreceiveerrs summary: Network interface is reporting many receive errors. expr: | rate(node_network_receive_errs_total{job="node-exporter"}[2m]) / rate(node_network_receive_packets_total{job="node-exporter"}[2m]) > 0.01 for: 1h labels: severity: warning - alert: NodeNetworkTransmitErrs annotations: description: '{{ $labels.instance }} interface {{ $labels.device }} has encountered {{ printf "%.0f" $value }} transmit errors in the last two minutes.' runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodenetworktransmiterrs summary: Network interface is reporting many transmit errors. 
expr: | rate(node_network_transmit_errs_total{job="node-exporter"}[2m]) / rate(node_network_transmit_packets_total{job="node-exporter"}[2m]) > 0.01 for: 1h labels: severity: warning - alert: NodeHighNumberConntrackEntriesUsed annotations: description: '{{ $value | humanizePercentage }} of conntrack entries are used.' runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodehighnumberconntrackentriesused summary: Number of conntrack are getting close to the limit. expr: | (node_nf_conntrack_entries{job="node-exporter"} / node_nf_conntrack_entries_limit) > 0.75 labels: severity: warning - alert: NodeTextFileCollectorScrapeError annotations: description: Node Exporter text file collector on {{ $labels.instance }} failed to scrape. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodetextfilecollectorscrapeerror summary: Node Exporter text file collector failed to scrape. expr: | node_textfile_scrape_error{job="node-exporter"} == 1 labels: severity: warning - alert: NodeClockSkewDetected annotations: description: Clock at {{ $labels.instance }} is out of sync by more than 0.05s. Ensure NTP is configured correctly on this host. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodeclockskewdetected summary: Clock skew detected. expr: | ( node_timex_offset_seconds{job="node-exporter"} > 0.05 and deriv(node_timex_offset_seconds{job="node-exporter"}[5m]) >= 0 ) or ( node_timex_offset_seconds{job="node-exporter"} < -0.05 and deriv(node_timex_offset_seconds{job="node-exporter"}[5m]) <= 0 ) for: 10m labels: severity: warning - alert: NodeClockNotSynchronising annotations: description: Clock at {{ $labels.instance }} is not synchronising. Ensure NTP is configured on this host. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodeclocknotsynchronising summary: Clock not synchronising. 
expr: | min_over_time(node_timex_sync_status{job="node-exporter"}[5m]) == 0 and node_timex_maxerror_seconds{job="node-exporter"} >= 16 for: 10m labels: severity: warning - alert: NodeRAIDDegraded annotations: description: RAID array '{{ $labels.device }}' at {{ $labels.instance }} is in degraded state due to one or more disks failures. Number of spare drives is insufficient to fix issue automatically. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/noderaiddegraded summary: RAID Array is degraded. expr: | node_md_disks_required{job="node-exporter",device=~"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)"} - ignoring (state) (node_md_disks{state="active",job="node-exporter",device=~"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)"}) > 0 for: 15m labels: severity: critical - alert: NodeRAIDDiskFailure annotations: description: At least one device in RAID array at {{ $labels.instance }} failed. Array '{{ $labels.device }}' needs attention and possibly a disk swap. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/noderaiddiskfailure summary: Failed device in RAID array. expr: | node_md_disks{state="failed",job="node-exporter",device=~"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)"} > 0 labels: severity: warning - alert: NodeFileDescriptorLimit annotations: description: File descriptors limit at {{ $labels.instance }} is currently at {{ printf "%.2f" $value }}%. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefiledescriptorlimit summary: Kernel is predicted to exhaust file descriptors limit soon. expr: | ( node_filefd_allocated{job="node-exporter"} * 100 / node_filefd_maximum{job="node-exporter"} > 70 ) for: 15m labels: severity: warning - alert: NodeFileDescriptorLimit annotations: description: File descriptors limit at {{ $labels.instance }} is currently at {{ printf "%.2f" $value }}%. 
runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodefiledescriptorlimit summary: Kernel is predicted to exhaust file descriptors limit soon. expr: | ( node_filefd_allocated{job="node-exporter"} * 100 / node_filefd_maximum{job="node-exporter"} > 90 ) for: 15m labels: severity: critical - alert: NodeCPUHighUsage annotations: description: | CPU usage at {{ $labels.instance }} has been above 90% for the last 15 minutes, is currently at {{ printf "%.2f" $value }}%. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodecpuhighusage summary: High CPU usage. expr: | sum without(mode) (avg without (cpu) (rate(node_cpu_seconds_total{job="node-exporter", mode!="idle"}[2m]))) * 100 > 90 for: 15m labels: severity: info - alert: NodeSystemSaturation annotations: description: | System load per core at {{ $labels.instance }} has been above 2 for the last 15 minutes, is currently at {{ printf "%.2f" $value }}. This might indicate this instance resources saturation and can cause it becoming unresponsive. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodesystemsaturation summary: System saturated, load per core is very high. expr: | node_load1{job="node-exporter"} / count without (cpu, mode) (node_cpu_seconds_total{job="node-exporter", mode="idle"}) > 2 for: 15m labels: severity: warning - alert: NodeMemoryMajorPagesFaults annotations: description: | Memory major pages are occurring at very high rate at {{ $labels.instance }}, 500 major page faults per second for the last 15 minutes, is currently at {{ printf "%.2f" $value }}. Please check that there is enough memory available at this instance. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodememorymajorpagesfaults summary: Memory major page faults are occurring at very high rate. 
expr: | rate(node_vmstat_pgmajfault{job="node-exporter"}[5m]) > 500 for: 15m labels: severity: warning - alert: NodeMemoryHighUtilization annotations: description: | Memory is filling up at {{ $labels.instance }}, has been above 90% for the last 15 minutes, is currently at {{ printf "%.2f" $value }}%. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodememoryhighutilization summary: Host is running out of memory. expr: | 100 - (node_memory_MemAvailable_bytes{job="node-exporter"} / node_memory_MemTotal_bytes{job="node-exporter"} * 100) > 90 for: 15m labels: severity: warning - alert: NodeDiskIOSaturation annotations: description: | Disk IO queue (aqu-sq) is high on {{ $labels.device }} at {{ $labels.instance }}, has been above 10 for the last 30 minutes, is currently at {{ printf "%.2f" $value }}. This symptom might indicate disk saturation. runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodediskiosaturation summary: Disk IO queue is high. expr: | rate(node_disk_io_time_weighted_seconds_total{job="node-exporter", device=~"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)"}[5m]) > 10 for: 30m labels: severity: warning - alert: NodeSystemdServiceFailed annotations: description: Systemd service {{ $labels.name }} has entered failed state at {{ $labels.instance }} runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodesystemdservicefailed summary: Systemd service has entered failed state. expr: | node_systemd_unit_state{job="node-exporter", state="failed"} == 1 for: 5m labels: severity: warning - alert: NodeBondingDegraded annotations: description: Bonding interface {{ $labels.master }} on {{ $labels.instance }} is in degraded state due to one or more slave failures. 
runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodebondingdegraded summary: Bonding interface is degraded expr: | (node_bonding_slaves - node_bonding_active) != 0 for: 5m labels: severity: warning - name: node-exporter.rules rules: - expr: | count without (cpu, mode) ( node_cpu_seconds_total{job="node-exporter",mode="idle"} ) record: instance:node_num_cpu:sum - expr: | 1 - avg without (cpu) ( sum without (mode) (rate(node_cpu_seconds_total{job="node-exporter", mode=~"idle|iowait|steal"}[5m])) ) record: instance:node_cpu_utilisation:rate5m - expr: | ( node_load1{job="node-exporter"} / instance:node_num_cpu:sum{job="node-exporter"} ) record: instance:node_load1_per_cpu:ratio - expr: | 1 - ( ( node_memory_MemAvailable_bytes{job="node-exporter"} or ( node_memory_Buffers_bytes{job="node-exporter"} + node_memory_Cached_bytes{job="node-exporter"} + node_memory_MemFree_bytes{job="node-exporter"} + node_memory_Slab_bytes{job="node-exporter"} ) ) / node_memory_MemTotal_bytes{job="node-exporter"} ) record: instance:node_memory_utilisation:ratio - expr: | rate(node_vmstat_pgmajfault{job="node-exporter"}[5m]) record: instance:node_vmstat_pgmajfault:rate5m - expr: | rate(node_disk_io_time_seconds_total{job="node-exporter", device=~"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)"}[5m]) record: instance_device:node_disk_io_time_seconds:rate5m - expr: | rate(node_disk_io_time_weighted_seconds_total{job="node-exporter", device=~"(/dev/)?(mmcblk.p.+|nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|md.+|dasd.+)"}[5m]) record: instance_device:node_disk_io_time_weighted_seconds:rate5m - expr: | sum without (device) ( rate(node_network_receive_bytes_total{job="node-exporter", device!="lo"}[5m]) ) record: instance:node_network_receive_bytes_excluding_lo:rate5m - expr: | sum without (device) ( rate(node_network_transmit_bytes_total{job="node-exporter", device!="lo"}[5m]) ) record: instance:node_network_transmit_bytes_excluding_lo:rate5m - expr: | sum without 
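        (device) (
          rate(node_network_receive_drop_total{job="node-exporter", device!="lo"}[5m])
        )
      record: instance:node_network_receive_drop_excluding_lo:rate5m
    - expr: |
        sum without (device) (
          rate(node_network_transmit_drop_total{job="node-exporter", device!="lo"}[5m])
        )
      record: instance:node_network_transmit_drop_excluding_lo:rate5m

# kubectl edit prometheusrule node-exporter-rules -n monitoring lets you modify the rule in place

    - alert: NodeMemoryHighUtilization
      annotations:
        description: |
          Memory is filling up at {{ $labels.instance }}, has been above 90% for the last 15 minutes, is currently at {{ printf "%.2f" $value }}%.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/node/nodememoryhighutilization
        summary: Host is running out of memory.
      expr: |
        100 - (node_memory_MemAvailable_bytes{job="node-exporter"} / node_memory_MemTotal_bytes{job="node-exporter"} * 100) > 90
      for: 15m
      labels:
        severity: warning

annotations:
Annotations are descriptive, display-only information. They take no part in the alert matching logic, but they do appear in the alert details and in notification content. They are commonly used for a description, a summary, a troubleshooting link, an owner, and so on.

alert: NodeMemoryHighUtilization
Meaning: the name of the alerting rule (alert name), exposed as the alertname label. It is the name shown in the alert list; Alertmanager routing, grouping, inhibition, and silences often match on it; downstream notifications (DingTalk, Feishu, Slack, email) carry it as well.

description: |
The | marks a multi-line string (line breaks are preserved).
Content: Memory is filling up at {{ $labels.instance }}, has been above 90% for the last 15 minutes, is currently at {{ printf "%.2f" $value }}%.
These are template variables in Go template style. They are expanded by Prometheus itself, not by Alertmanager:
{{ $labels.instance }} is replaced with the label value of the time series that triggered the alert, for example 10.0.0.12:9100. The label is attached to every series scraped from a target, so node-exporter metrics normally carry it.
{{ $value }} is the value the expression evaluated to for that series (here, the memory usage percentage).
printf "%.2f" formats the number with two decimal places (for example 91.23; the trailing % in the text is a literal character).
Note: Prometheus renders the template each time it evaluates the rule while the alert is active, before the alert is sent to Alertmanager. Rendering has no effect on how expr is computed.

runbook_url: ...
Meaning: a link to the runbook (troubleshooting guide).
Purpose: when the on-call engineer receives the alert, the link leads to:
common causes;
troubleshooting steps;
mitigation and fix;
who to escalate to.
Here the link points to the official prometheus-operator runbook: nodememoryhighutilization.

summary: Host is running out of memory.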
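Meaning: a one-sentence summary.
Purpose: many notification channels show summary first, so it should be short and specific.

expr: |
expr is the PromQL expression that decides when the alert triggers.
Expression: 100 - (node_memory_MemAvailable_bytes{job="node-exporter"} / node_memory_MemTotal_bytes{job="node-exporter"} * 100) > 90

node_memory_MemAvailable_bytes
Source: node-exporter (Linux hosts).
Meaning: the number of bytes of "available" memory, roughly the memory that can be allocated immediately without seriously hurting performance, including reclaimable page cache.
It is more useful than MemFree: MemFree counts only completely free memory and ignores the reclaimable part of cache and buffers, so it easily leads to false conclusions.

node_memory_MemTotal_bytes
Meaning: total memory in bytes.

node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100
Computes: the percentage of memory that is available.

100 - (...)
Computes: the percentage of memory in use. Usage = 100% - available percentage.

> 90
Threshold: the condition holds only when usage exceeds 90%.

{job="node-exporter"}
Label matcher: selects only the time series whose job label is node-exporter.
Why it is needed: if metrics with the same name come from several scrape jobs, the matcher keeps them from being mixed and reduces accidental matches.
Adjusting it: if the job name in your Prometheus is not node-exporter, change it here; otherwise the expression returns nothing and the alert never fires.

for: 15m
Meaning: the expression must hold continuously for 15 minutes before the alert actually fires (it moves from Pending to Firing).
Purpose:
suppress flapping (for example a short memory spike or a momentary load peak);
avoid frequent notifications.
It matches the wording of the alert: description says "above 90% for the last 15 minutes", which corresponds to for: 15m.
Note: if the metric disappears, a scrape fails, or the value drops below the threshold and then rises again, the timer resets.

labels:
labels are the alert's labels. They take part in Alertmanager routing, grouping, and deduplication.

severity: warning
Meaning: the alert level.
Purpose:
Alertmanager can route alerts to different channels by severity (for example warning to a group chat, critical to phone or SMS).
It can also drive inhibition rules (for example a warning suppressed while the matching critical is firing).
Common convention: info / warning / critical (a team can define its own levels, but they must be used consistently).

# Alternatively, edit the YAML file and apply it. I did not deploy with Helm; I applied the manifests directly: https://axzys.cn/index.php/archives/423/

root@k8s-01:/woke/prometheus/kube-prometheus/manifests# grep -R "NodeMemoryHighUtilization"
nodeExporter-prometheusRule.yaml: - alert: NodeMemoryHighUtilization
root@k8s-01:/woke/prometheus/kube-prometheus/manifests#

After changing the file, applying it directly works as well.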
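
To make the expr and for: 15m semantics of NodeMemoryHighUtilization more concrete, here is a minimal Python sketch. It is only an illustration under simplifying assumptions: the names memory_usage_percent, AlertState, and render_description are hypothetical helpers invented for this example, not part of Prometheus, and real Prometheus tracks this state per time series at its own rule evaluation interval.

```python
from dataclasses import dataclass
from typing import Optional


def memory_usage_percent(mem_available_bytes: float, mem_total_bytes: float) -> float:
    """Same arithmetic as the rule: 100 - (MemAvailable / MemTotal * 100)."""
    return 100 - (mem_available_bytes / mem_total_bytes * 100)


def render_description(instance: str, usage: float) -> str:
    """Rough equivalent of the description template, with printf "%.2f"."""
    return (
        "Memory is filling up at %s, has been above 90%% for the last "
        "15 minutes, is currently at %.2f%%." % (instance, usage)
    )


@dataclass
class AlertState:
    """Hypothetical, simplified model of the Pending -> Firing transition."""

    threshold: float = 90.0          # the "> 90" part of expr
    for_seconds: int = 15 * 60       # the "for: 15m" part of the rule
    active_since: Optional[int] = None

    def evaluate(self, now: int, usage: Optional[float]) -> str:
        # A missing sample (failed scrape) or a value at or below the
        # threshold makes the condition false and resets the timer.
        if usage is None or usage <= self.threshold:
            self.active_since = None
            return "inactive"
        if self.active_since is None:
            self.active_since = now
        if now - self.active_since >= self.for_seconds:
            return "firing"
        return "pending"


if __name__ == "__main__":
    # Assumed sample values: 16 GB total, 1.4 GB available.
    usage = memory_usage_percent(1_400_000_000, 16_000_000_000)
    state = AlertState()
    for t in (0, 300, 600, 900):
        print(t, state.evaluate(t, usage))
    print(render_description("10.0.0.12:9100", usage))
```

The sketch shows why a short dip below the threshold delays the notification: evaluate clears active_since whenever the condition is false, so the 15 minutes start over on the next sample above 90.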