
Istio traffic analysis
1. Traffic path
NodePort → ingressgateway Service → ingressgateway Pod (Envoy) → Gateway filtering → VirtualService routing → productpage Service:9080 → productpage Pod
# If the ingress gateway Service is not a NodePort, expose it with a port-forward instead:
kubectl port-forward -n istio-system --address 0.0.0.0 svc/istio-ingressgateway 8080:80
The one thing to remember is that a Service only forwards traffic to its pods. Matching against the Gateway and the VirtualService routes is done by istio-ingressgateway.
2. The corresponding YAML files
cat gateway.yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: bookinfo-gateway
  namespace: default
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
root@k8s-01:/woke/istio# kubectl get virtualservice bookinfo -n default -o yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"networking.istio.io/v1beta1","kind":"VirtualService","metadata":{"annotations":{},"name":"bookinfo","namespace":"default"},"spec":{"gateways":["bookinfo-gateway"],"hosts":["*"],"http":[{"match":[{"uri":{"prefix":"/"}}],"route":[{"destination":{"host":"productpage","port":{"number":9080}}}]}]}}
  creationTimestamp: "2025-12-31T05:30:04Z"
  generation: 1
  name: bookinfo
  namespace: default
  resourceVersion: "8121204"
  uid: b0fd2c20-35ab-475c-8c1a-d6dc36749ce8
spec:
  gateways:
  - bookinfo-gateway
  hosts:
  - '*'
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: productpage
        port:
          number: 9080
3. The corresponding Services and pods
root@k8s-01:/woke/istio# kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
bookinfo-gateway-istio-6c4c8d9b74-z7c4j   1/1     Running   0          18d
details-v1-7b88fb8889-5qt26               2/2     Running   0          33d
nginx-5fd58574d-2c9z9                     2/2     Running   0          13d
nginx-test-78b8db46d6-cfd98               2/2     Running   0          13d
productpage-v1-79d79d6-mktpd              2/2     Running   0          33d
ratings-v1-5b89cf4bbf-c9xdg               2/2     Running   0          33d
reviews-v1-ffd57b847-w9dkr                2/2     Running   0          33d
reviews-v2-8cc6fd8dc-q9bd9                2/2     Running   0          33d
reviews-v3-cf48455-znts5                  2/2     Running   0          33d
traefik-gw-istio-7867b4f544-hkknj         1/1     Running   0          18d
root@k8s-01:/woke/istio# kubectl get svc
NAME                     TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                          AGE
bookinfo-gateway-istio   ClusterIP      10.105.38.228    <none>        15021/TCP,80/TCP                 18d
details                  ClusterIP      10.100.97.40     <none>        9080/TCP                         35d
kubernetes               ClusterIP      10.96.0.1        <none>        443/TCP                          78d
nginx                    ClusterIP      10.110.220.61    <none>        80/TCP                           60d
nginx-svc                NodePort       10.108.4.45      <none>        80:32574/TCP                     77d
productpage              ClusterIP      10.111.145.105   <none>        9080/TCP                         35d
ratings                  ClusterIP      10.98.94.10      <none>        9080/TCP                         35d
reviews                  ClusterIP      10.97.19.38      <none>        9080/TCP                         35d
traefik-gw-istio         LoadBalancer   10.103.179.128   <pending>     15021:31055/TCP,8000:30447/TCP   18d
root@k8s-01:/woke/istio# kubectl get pod -n istio-system
NAME                                    READY   STATUS      RESTARTS      AGE
istio-egressgateway-596f455c4f-7qh6w    1/1     Running     0             13d
istio-ingressgateway-796f5cf647-tcpph   1/1     Running     0             13d
istiod-5c84f8c79d-ddxkz                 1/1     Running     0             13d
kiali-68f9949bf5-8n4tk                  1/1     Running     6 (13d ago)   13d
prometheus-7f6bc65664-29c7c             0/2     Completed   0             18d
prometheus-7f6bc65664-llp2q             2/2     Running     0             5d
root@k8s-01:/woke/istio# kubectl get svc -n istio-system
NAME                          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                      AGE
istio-egressgateway           ClusterIP   10.106.183.187   <none>        80/TCP,443/TCP                                                               35d
istio-ingressgateway          NodePort    10.99.189.246    <none>        15021:31689/TCP,80:32241/TCP,443:30394/TCP,31400:31664/TCP,15443:32466/TCP   35d
istiod                        ClusterIP   10.104.189.5     <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP                                        35d
istiod-revision-tag-default   ClusterIP   10.102.152.242   <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP                                        35d
kiali                         NodePort    10.103.234.52    <none>        20001:30318/TCP,9090:30682/TCP                                               34d
prometheus                    ClusterIP   10.100.183.52    <none>        9090/TCP                                                                     34d
root@k8s-01:/woke/istio#
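The division of labour described above can be made concrete with a simplified Python model of what the ingress gateway does for this Bookinfo setup. This is a sketch under stated assumptions, not Envoy's real matching algorithm. The NodePort table is copied from the `kubectl get svc -n istio-system` output (for example `80:32241`). Istio host wildcards are approximated with `fnmatchcase`. Only the single Gateway server and the single `prefix: /` route shown above are modelled. The names `NODEPORT_TO_SERVICE_PORT`, `host_matches` and `route` are hypothetical.

```python
from fnmatch import fnmatchcase

# istio-ingressgateway NodePort -> Service port, copied from `kubectl get svc -n istio-system`
NODEPORT_TO_SERVICE_PORT = {31689: 15021, 32241: 80, 30394: 443, 31664: 31400, 32466: 15443}

# bookinfo-gateway: a single HTTP server on port 80 that accepts any Host
GATEWAY = {"name": "bookinfo-gateway", "port": 80, "hosts": ["*"]}

# VirtualService "bookinfo": bound to bookinfo-gateway, prefix "/" -> productpage:9080
VIRTUAL_SERVICE = {
    "gateways": ["bookinfo-gateway"],
    "hosts": ["*"],
    "http": [{"prefix": "/", "destination": ("productpage", 9080)}],
}


def host_matches(patterns, host):
    """Approximate Istio host wildcards ("*", "*.example.com") with shell-style matching."""
    host = host.split(":", 1)[0].lower()  # drop a :port suffix from an IPv4/DNS Host header
    return any(fnmatchcase(host, p) for p in patterns)


def route(node_port, host, path):
    """Return the (service, port) the gateway would forward to, or None if nothing matches."""
    # Step 1: the NodePort Service only forwards packets; it does no host/path matching.
    service_port = NODEPORT_TO_SERVICE_PORT.get(node_port)

    # Step 2: Gateway filtering in the gateway pod: listener port and Host must match.
    if service_port != GATEWAY["port"] or not host_matches(GATEWAY["hosts"], host):
        return None

    # Step 3: the VirtualService must be bound to this Gateway and accept the host.
    vs = VIRTUAL_SERVICE
    if GATEWAY["name"] not in vs["gateways"] or not host_matches(vs["hosts"], host):
        return None

    # Step 4: HTTP routes are evaluated in order; the first matching URI prefix wins.
    for rule in vs["http"]:
        if path.startswith(rule["prefix"]):
            return rule["destination"]
    return None


print(route(32241, "192.168.1.10:32241", "/productpage"))  # ('productpage', 9080)
print(route(31689, "192.168.1.10", "/"))                  # None: 15021 has no Gateway server
```

Only requests that pass the Gateway and VirtualService checks reach `productpage:9080`. This is why a request to the wrong port or host fails at the gateway, even though every Service involved is healthy.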


Issues
1. Recovering the Grafana admin password
root@k8s-01:~# kubectl -n monitoring get pod | grep grafana
grafana-7ff454c477-l9x2k   1/1   Running   0   8d
root@k8s-01:~# kubectl -n monitoring exec -it grafana-7ff454c477-l9x2k -- sh
/usr/share/grafana $ grafana-cli admin reset-admin-password '33070595Abc'
Deprecation warning: The standalone 'grafana-cli' program is deprecated and will be removed in the future. Please update all uses of 'grafana-cli' to 'grafana cli'
INFO [01-29|12:45:37] Starting Grafana logger=settings version= commit= branch= compiled=1970-01-01T00:00:00Z
INFO [01-29|12:45:37] Config loaded from logger=settings file=/usr/share/grafana/conf/defaults.ini
INFO [01-29|12:45:37] Config overridden from Environment variable logger=settings var="GF_PATHS_DATA=/var/lib/grafana"
INFO [01-29|12:45:37] Config overridden from Environment variable logger=settings var="GF_PATHS_LOGS=/var/log/grafana"
INFO [01-29|12:45:37] Config overridden from Environment variable logger=settings var="GF_PATHS_PLUGINS=/var/lib/grafana/plugins"
INFO [01-29|12:45:37] Config overridden from Environment variable logger=settings var="GF_PATHS_PROVISIONING=/etc/grafana/provisioning"
INFO [01-29|12:45:37] Target logger=settings target=[all]
INFO [01-29|12:45:37] Path Home logger=settings path=/usr/share/grafana
INFO [01-29|12:45:37] Path Data logger=settings path=/var/lib/grafana
INFO [01-29|12:45:37] Path Logs logger=settings path=/var/log/grafana
INFO [01-29|12:45:37] Path Plugins logger=settings path=/var/lib/grafana/plugins
INFO [01-29|12:45:37] Path Provisioning logger=settings path=/etc/grafana/provisioning
INFO [01-29|12:45:37] App mode production logger=settings
INFO [01-29|12:45:37] FeatureToggles logger=featuremgmt recoveryThreshold=true panelMonitoring=true lokiQuerySplitting=true nestedFolders=true logsContextDatasourceUi=true cloudWatchNewLabelParsing=true logRowsPopoverMenu=true kubernetesPlaylists=true dataplaneFrontendFallback=true recordedQueriesMulti=true transformationsVariableSupport=true addFieldFromCalculationStatFunctions=true cloudWatchCrossAccountQuerying=true prometheusAzureOverrideAudience=true lokiQueryHints=true logsExploreTableVisualisation=true annotationPermissionUpdate=true lokiMetricDataplane=true prometheusMetricEncyclopedia=true lokiStructuredMetadata=true topnav=true alertingInsights=true exploreMetrics=true formatString=true ssoSettingsApi=true autoMigrateXYChartPanel=true tlsMemcached=true prometheusConfigOverhaulAuth=true logsInfiniteScrolling=true alertingSimplifiedRouting=true awsAsyncQueryCaching=true managedPluginsInstall=true cloudWatchRoundUpEndTime=true transformationsRedesign=true alertingNoDataErrorExecution=true dashgpt=true influxdbBackendMigration=true prometheusDataplane=true groupToNestedTableTransformation=true correlations=true publicDashboards=true angularDeprecationUI=true
INFO [01-29|12:45:37] Connecting to DB logger=sqlstore dbtype=sqlite3
INFO [01-29|12:45:37] Locking database logger=migrator
INFO [01-29|12:45:37] Starting DB migrations logger=migrator
INFO [01-29|12:45:37] migrations completed logger=migrator performed=0 skipped=594 duration=359.617µs
INFO [01-29|12:45:37] Unlocking database logger=migrator
INFO [01-29|12:45:37] Envelope encryption state logger=secrets enabled=true current provider=secretKey.v1
Admin password changed successfully ✔
/usr/share/grafana $ exit
root@k8s-01:~# cat <<'EOF' | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-traefik-to-grafana
  namespace: monitoring
spec:
  podSelector:
    matchLabels:
      app.kubernetes.io/component: grafana
      app.kubernetes.io/name: grafana
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes: ["Ingress"]
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: traefik
    ports:
    - protocol: TCP
      port: 3000
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-traefik-to-prometheus
  namespace: monitoring
spec:
  podSelector:
    matchLabels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes: ["Ingress"]
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: traefik
    ports:
    - protocol: TCP
      port: 9090
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-traefik-to-alertmanager
  namespace: monitoring
spec:
  podSelector:
    matchLabels:
      app.kubernetes.io/component: alert-router
      app.kubernetes.io/instance: main
      app.kubernetes.io/name: alertmanager
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes: ["Ingress"]
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: traefik
    ports:
    - protocol: TCP
      port: 9093
EOF
These allow rules are needed because the existing NetworkPolicies in the monitoring namespace did not admit traffic from traefik. Everything Traefik forwarded to grafana/prometheus/alertmanager was therefore dropped, which showed up as 504s and timeouts.
# Add external nodes: create the Secret for additionalScrapeConfigs, listing the external nodes as targets
# I use job_name: node-exporter here so the existing Node Exporter / Nodes dashboards can be reused as-is (many panels filter by job).
cat <<'EOF' | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: prometheus-additional-scrape-configs
  namespace: monitoring
type: Opaque
stringData:
  additional-scrape-configs.yaml: |
    - job_name: node-exporter
      static_configs:
        - targets:
            - 192.168.1.12:9100
            - 192.168.1.15:9100
            - 192.168.1.30:9100
          labels:
            origin: external
      relabel_configs:
        - source_labels: [__address__]
          target_label: instance
          regex: '([^:]+):\d+'
          replacement: '$1'
EOF
# Attach the Secret to the Prometheus custom resource. In kube-prometheus that resource is normally named k8s (prometheus-k8s is the name of its StatefulSet and Service); confirm with: kubectl -n monitoring get prometheus
kubectl -n monitoring patch prometheus k8s --type merge -p '
{
  "spec": {
    "additionalScrapeConfigs": {
      "name": "prometheus-additional-scrape-configs",
      "key": "additional-scrape-configs.yaml"
    }
  }
}'
# Verify: port-forward, then open http://localhost:9090/targets and check that the node-exporter job lists the three external targets
kubectl -n monitoring port-forward svc/prometheus-k8s 9090:9090
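The two mechanisms in this note can be checked offline with two small Python sketches. These are simplified models, not the real Kubernetes or Prometheus code.

The first sketch models the NetworkPolicy rule behind the 504s. Once any Ingress policy selects a pod, only connections matching at least one of the selecting policies are admitted. Policies are additive, so `allow-traefik-to-grafana` widens access instead of overriding anything. The pre-existing policy used here, which allows only the monitoring namespace, is hypothetical. The model also ignores `podSelector` peers, `ipBlock`, named ports and empty `from`/`ports` lists.

The second sketch replays the `relabel_configs` rule with Python's `re`. Prometheus anchors relabel regexes at both ends and writes capture groups as `$1`. Those correspond to `re.fullmatch` and `\1` here. The class and function names are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class IngressPolicy:
    """Simplified NetworkPolicy: podSelector.matchLabels plus namespaceSelector peers."""
    name: str
    pod_labels: dict        # spec.podSelector.matchLabels
    from_namespaces: list   # namespaceSelector.matchLabels dicts allowed as sources
    ports: list             # allowed TCP port numbers


def selector_matches(match_labels, labels):
    # matchLabels semantics: every key/value pair must be present on the object
    return all(labels.get(k) == v for k, v in match_labels.items())


def ingress_allowed(policies, pod_labels, src_namespace_labels, port):
    selecting = [p for p in policies if selector_matches(p.pod_labels, pod_labels)]
    if not selecting:
        return True  # no Ingress policy selects the pod, so it is not isolated
    # Policies are additive: allowed if ANY selecting policy admits this source and port
    return any(
        port in p.ports
        and any(selector_matches(ns, src_namespace_labels) for ns in p.from_namespaces)
        for p in selecting
    )


GRAFANA_POD = {
    "app.kubernetes.io/component": "grafana",
    "app.kubernetes.io/name": "grafana",
    "app.kubernetes.io/part-of": "kube-prometheus",
}
TRAEFIK_NS = {"kubernetes.io/metadata.name": "traefik"}

# Hypothetical pre-existing policy: Grafana reachable only from the monitoring namespace
existing = [IngressPolicy("existing-grafana", {"app.kubernetes.io/name": "grafana"},
                          [{"kubernetes.io/metadata.name": "monitoring"}], [3000])]
allow_traefik = IngressPolicy("allow-traefik-to-grafana", GRAFANA_POD, [TRAEFIK_NS], [3000])

print(ingress_allowed(existing, GRAFANA_POD, TRAEFIK_NS, 3000))                    # False -> 504/timeout
print(ingress_allowed(existing + [allow_traefik], GRAFANA_POD, TRAEFIK_NS, 3000))  # True


def relabel_instance(address, regex=r"([^:]+):\d+", replacement=r"\1"):
    """Mimic the 'replace' relabel rule: copy __address__ into instance without the port."""
    m = re.fullmatch(regex, address)  # Prometheus anchors the regex like ^(?:...)$
    # No match: the rule does nothing and instance keeps its default (__address__)
    return m.expand(replacement) if m else address


print(relabel_instance("192.168.1.12:9100"))  # 192.168.1.12
```

With the instance label reduced to the bare IP, the external nodes show up in the dashboards under the same kind of names you would type by hand.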


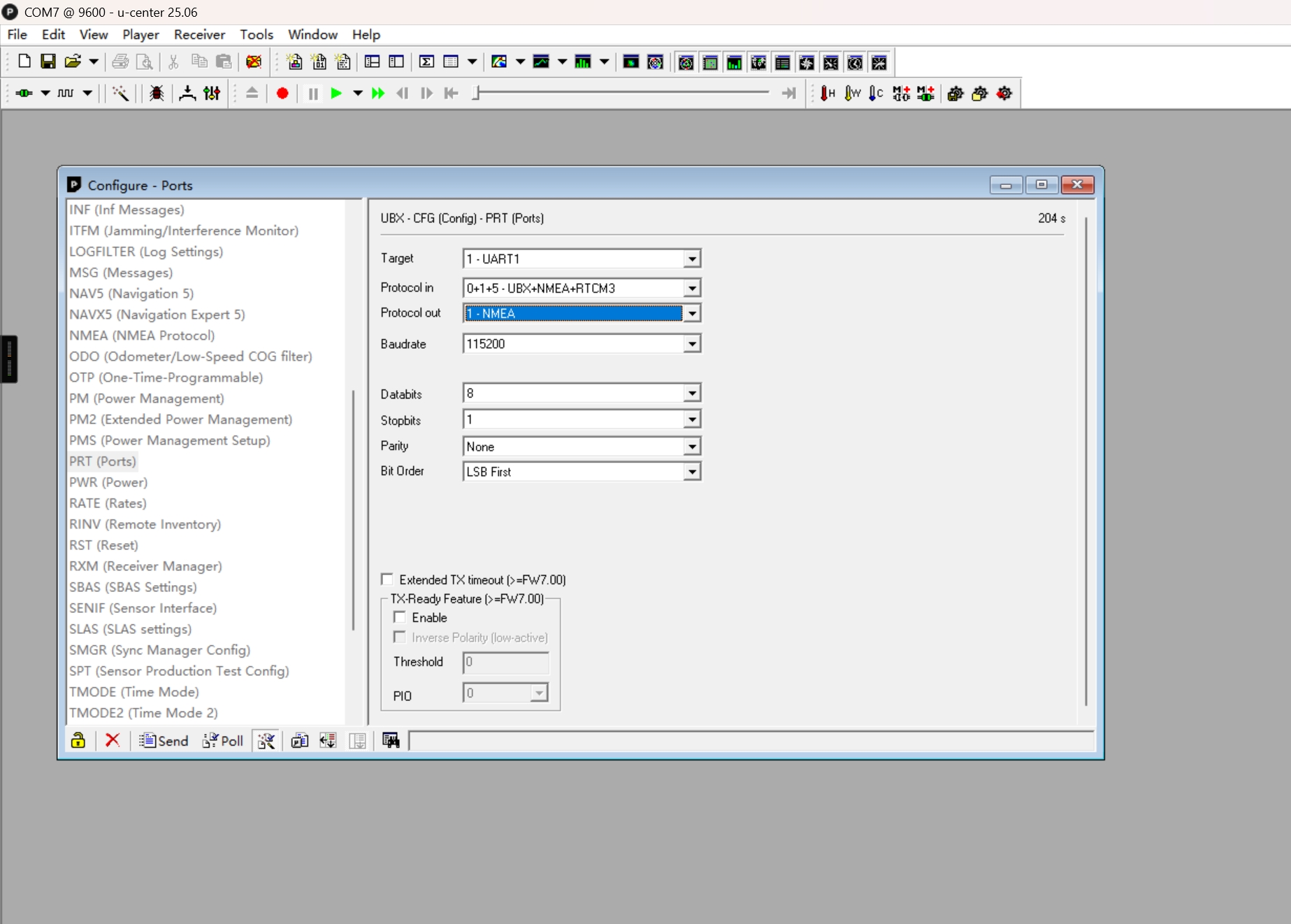

F9P and DETA configuration
1. F9P wiring
F9P TX1 → DETA R3
F9P GND → DETA G
# Data
05:39:17 $GNRMC,053917.00,A,2232.90388,N,11356.18681,E,0.101,,270126,,,A,V*10
05:39:17 $GNVTG,,T,,M,0.101,N,0.187,K,A*33
05:39:17 $GNGGA,053917.00,2232.90388,N,11356.18681,E,1,10,1.84,37.4,M,-2.7,M,,*62
05:39:17 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:17 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:17 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:17 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:17 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,25,1*6D
05:39:17 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,19,1*62
05:39:17 $GPGSV,3,3,12,16,12,088,19,17,12,243,27,27,14,044,19,30,31,318,,1*6D
05:39:17 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,18,6*61
05:39:17 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:17 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:17 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:17 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,17,1*75
05:39:17 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D
05:39:17 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:17 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,13,15,32,176,28,7*78
05:39:17 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76
05:39:17 $GAGSV,3,3,09,36,23,048,24,7*44
05:39:17 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:17 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79
05:39:17 $GAGSV,3,3,09,36,23,048,,2*47
05:39:17 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:17 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:17 $GNGLL,2232.90388,N,11356.18681,E,053917.00,A,A*73
05:39:18 $GNRMC,053918.00,A,2232.90388,N,11356.18681,E,0.060,,270126,,,A,V*19
05:39:18 $GNVTG,,T,,M,0.060,N,0.111,K,A*3A
05:39:18 $GNGGA,053918.00,2232.90388,N,11356.18681,E,1,10,1.84,37.4,M,-2.7,M,,*6D
05:39:18 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:18 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:18 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:18 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:18 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,24,1*6C
05:39:18 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,17,1*6C
05:39:18 $GPGSV,3,3,12,16,12,088,19,17,12,243,27,27,14,044,18,30,31,318,,1*6C
05:39:18 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,18,6*61
05:39:18 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:18 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:18 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:18 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74
05:39:18 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E
05:39:18 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78
05:39:18 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,13,15,32,176,28,7*78
05:39:18 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76
05:39:18 $GAGSV,3,3,09,36,23,048,24,7*44
05:39:18 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:18 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79
05:39:18 $GAGSV,3,3,09,36,23,048,,2*47
05:39:18 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:18 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:18 $GNGLL,2232.90388,N,11356.18681,E,053918.00,A,A*7C
05:39:19 $GNRMC,053919.00,A,2232.90387,N,11356.18682,E,0.055,,270126,,,A,V*12
05:39:19 $GNVTG,,T,,M,0.055,N,0.101,K,A*3D
05:39:19 $GNGGA,053919.00,2232.90387,N,11356.18682,E,1,11,1.84,37.4,M,-2.7,M,,*61
05:39:19 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:19 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:19 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:19 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:19 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,25,1*6D
05:39:19 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,16,1*6D
05:39:19 $GPGSV,3,3,12,16,12,088,20,17,12,243,,27,14,044,18,30,31,318,,1*63
05:39:19 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:19 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:19 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:19 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:19 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74
05:39:19 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D
05:39:19 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78
05:39:19 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,14,15,32,176,28,7*7F
05:39:19 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76
05:39:19 $GAGSV,3,3,09,36,23,048,24,7*44
05:39:19 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:19 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79
05:39:19 $GAGSV,3,3,09,36,23,048,,2*47
05:39:19 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:19 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:19 $GNGLL,2232.90387,N,11356.18682,E,053919.00,A,A*71
05:39:20 $GNRMC,053920.00,A,2232.90385,N,11356.18683,E,0.025,,270126,,,A,V*1C
05:39:20 $GNVTG,,T,,M,0.025,N,0.045,K,A*3B
05:39:20 $GNGGA,053920.00,2232.90385,N,11356.18683,E,1,11,1.84,37.4,M,-2.7,M,,*68
05:39:20 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:20 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:20 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:20 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:20 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,25,1*6D
05:39:20 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,15,1*6E
05:39:20 $GPGSV,3,3,12,16,12,088,20,17,12,243,,27,14,044,18,30,31,318,,1*63
05:39:20 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:20 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:20 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:20 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79
05:39:20 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,14,1*76
05:39:20 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D
05:39:20 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78
05:39:20 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,15,15,32,176,28,7*7D
05:39:20 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76
05:39:20 $GAGSV,3,3,09,36,23,048,24,7*44
05:39:20 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,32,2*72
05:39:20 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79
05:39:20 $GAGSV,3,3,09,36,23,048,,2*47
05:39:20 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:20 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:20 $GNGLL,2232.90385,N,11356.18683,E,053920.00,A,A*78
05:39:21 $GNRMC,053921.00,A,2232.90383,N,11356.18684,E,0.031,,270126,,,A,V*19
05:39:21 $GNVTG,,T,,M,0.031,N,0.057,K,A*3D
05:39:21 $GNGGA,053921.00,2232.90383,N,11356.18684,E,1,11,1.84,37.4,M,-2.7,M,,*68
05:39:21 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:21 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:21 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:21 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:21 $GPGSV,3,1,12,01,50,161,45,02,65,092,39,03,01,168,,04,12,190,26,1*60
05:39:21 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,22,14,09,301,14,1*6F
05:39:21 $GPGSV,3,3,12,16,12,088,20,17,12,243,,27,14,044,18,30,31,318,,1*63
05:39:21 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:21 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:21 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:21 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79
05:39:21 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,15,1*77
05:39:21 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D
05:39:21 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78
05:39:21 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,28,7*7E
05:39:21 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76
05:39:21 $GAGSV,3,3,09,36,23,048,24,7*44
05:39:21 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:21 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79
05:39:21 $GAGSV,3,3,09,36,23,048,,2*47
05:39:21 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:21 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:21 $GNGLL,2232.90383,N,11356.18684,E,053921.00,A,A*78
05:39:22 $GNRMC,053922.00,A,2232.90381,N,11356.18685,E,0.024,,270126,,,A,V*1D
05:39:22 $GNVTG,,T,,M,0.024,N,0.044,K,A*3B
05:39:22 $GNGGA,053922.00,2232.90381,N,11356.18685,E,1,11,1.84,37.5,M,-2.7,M,,*69
05:39:22 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:22 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:22 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:22 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:22 $GPGSV,3,1,12,01,50,161,45,02,65,092,39,03,01,168,,04,12,190,26,1*60
05:39:22 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,13,1*68
05:39:22 $GPGSV,3,3,12,16,12,088,20,17,12,243,29,27,14,044,18,30,31,318,,1*68
05:39:22 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:22 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:22 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:22 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79
05:39:22 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74
05:39:22 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D
05:39:22 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78
05:39:22 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,17,15,32,176,29,7*7E
05:39:22 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76
05:39:22 $GAGSV,3,3,09,36,23,048,24,7*44
05:39:22 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:22 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79
05:39:22 $GAGSV,3,3,09,36,23,048,,2*47
05:39:22 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:22 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:22 $GNGLL,2232.90381,N,11356.18685,E,053922.00,A,A*78
05:39:23 $GNRMC,053923.00,A,2232.90378,N,11356.18687,E,0.026,,270126,,,A,V*1A
05:39:23 $GNVTG,,T,,M,0.026,N,0.048,K,A*35
05:39:23 $GNGGA,053923.00,2232.90378,N,11356.18687,E,1,11,1.84,37.6,M,-2.7,M,,*6F
05:39:23 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:23 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:23 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:23 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:23 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:23 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,17,1*6C
05:39:23 $GPGSV,3,3,12,16,12,088,21,17,12,243,28,27,14,044,17,30,31,318,,1*67
05:39:23 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:23 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:23 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:23 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79
05:39:23 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74
05:39:23 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E
05:39:23 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78
05:39:23 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F
05:39:23 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:23 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:23 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:23 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:23 $GAGSV,3,3,09,36,23,048,,2*47
05:39:23 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:23 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:23 $GNGLL,2232.90378,N,11356.18687,E,053923.00,A,A*7D
05:39:24 $GNRMC,053924.00,A,2232.90375,N,11356.18690,E,0.037,,270126,,,A,V*16
05:39:24 $GNVTG,,T,,M,0.037,N,0.068,K,A*37
05:39:24 $GNGGA,053924.00,2232.90375,N,11356.18690,E,1,11,1.84,37.8,M,-2.7,M,,*6D
05:39:24 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:24 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:24 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:24 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:24 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:24 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,19,1*62
05:39:24 $GPGSV,3,3,12,16,12,088,21,17,12,243,,27,14,044,17,30,31,318,,1*6D
05:39:24 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,16,6*6F
05:39:24 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:24 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:24 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:24 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,15,1*77
05:39:24 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E
05:39:24 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:24 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,16,15,32,176,29,7*7C
05:39:24 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:24 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:24 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,32,2*72
05:39:24 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:24 $GAGSV,3,3,09,36,23,048,,2*47
05:39:24 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:24 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:24 $GNGLL,2232.90375,N,11356.18690,E,053924.00,A,A*71
05:39:25 $GNRMC,053925.00,A,2232.90374,N,11356.18691,E,0.048,,270126,,,A,V*1F
05:39:25 $GNVTG,,T,,M,0.048,N,0.090,K,A*38
05:39:25 $GNGGA,053925.00,2232.90374,N,11356.18691,E,1,11,1.84,37.8,M,-2.7,M,,*6C
05:39:25 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:25 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:25 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:25 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:25 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:25 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,19,1*62
05:39:25 $GPGSV,3,3,12,16,12,088,21,17,12,243,30,27,14,044,16,30,31,318,,1*6F
05:39:25 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:25 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:25 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:25 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:25 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,14,1*76
05:39:25 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F
05:39:25 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:25 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F
05:39:25 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:25 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:25 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:25 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:25 $GAGSV,3,3,09,36,23,048,,2*47
05:39:25 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:25 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:25 $GNGLL,2232.90374,N,11356.18691,E,053925.00,A,A*70
05:39:26 $GNRMC,053926.00,A,2232.90371,N,11356.18693,E,0.024,,270126,,,A,V*11
05:39:26 $GNVTG,,T,,M,0.024,N,0.045,K,A*3A
05:39:26 $GNGGA,053926.00,2232.90371,N,11356.18693,E,1,11,1.84,37.9,M,-2.7,M,,*69
05:39:26 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:26 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:26 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:26 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:26 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:26 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,18,1*63
05:39:26 $GPGSV,3,3,12,16,12,088,21,17,12,243,,27,14,044,14,30,31,318,,1*6E
05:39:26 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:26 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:26 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:26 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:26 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,13,1*71
05:39:26 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F
05:39:26 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:26 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F
05:39:26 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:26 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:26 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:26 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:26 $GAGSV,3,3,09,36,23,048,,2*47
05:39:26 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:26 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:26 $GNGLL,2232.90371,N,11356.18693,E,053926.00,A,A*74
05:39:27 $GNRMC,053927.00,A,2232.90370,N,11356.18692,E,0.048,,270126,,,A,V*1A
05:39:27 $GNVTG,,T,,M,0.048,N,0.089,K,A*30
05:39:27 $GNGGA,053927.00,2232.90370,N,11356.18692,E,1,11,1.84,37.9,M,-2.7,M,,*68
05:39:27 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:27 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:27 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:27 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:27 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:27
$GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,17,1*6C 05:39:27 $GPGSV,3,3,12,16,12,088,21,17,12,243,27,27,14,044,13,30,31,318,,1*6C 05:39:27 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:27 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:27 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:27 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:27 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,13,1*71 05:39:27 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F 05:39:27 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:27 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F 05:39:27 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:27 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:27 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:27 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:27 $GAGSV,3,3,09,36,23,048,,2*47 05:39:27 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:27 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:27 $GNGLL,2232.90370,N,11356.18692,E,053927.00,A,A*75 05:39:28 $GNRMC,053928.00,A,2232.90366,N,11356.18693,E,0.029,,270126,,,A,V*14 05:39:28 $GNVTG,,T,,M,0.029,N,0.054,K,A*37 05:39:28 $GNGGA,053928.00,2232.90366,N,11356.18693,E,1,11,1.84,38.0,M,-2.7,M,,*67 05:39:28 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:28 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:28 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:28 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:28 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:28 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,13,1*68 05:39:28 $GPGSV,3,3,12,16,12,088,21,17,12,243,30,27,14,044,12,30,31,318,,1*6B 05:39:28 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,16,6*6F 05:39:28 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 
05:39:28 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:28 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:28 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,14,1*76 05:39:28 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F 05:39:28 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:28 $GAGSV,3,1,09,02,35,061,23,07,32,265,,08,27,205,16,15,32,176,29,7*7E 05:39:28 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:28 $GAGSV,3,3,09,36,23,048,22,7*42 05:39:28 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:28 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:28 $GAGSV,3,3,09,36,23,048,,2*47 05:39:28 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:28 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:28 $GNGLL,2232.90366,N,11356.18693,E,053928.00,A,A*7C 05:39:29 $GNRMC,053929.00,A,2232.90363,N,11356.18695,E,0.049,,270126,,,A,V*10 05:39:29 $GNVTG,,T,,M,0.049,N,0.091,K,A*38 05:39:29 $GNGGA,053929.00,2232.90363,N,11356.18695,E,1,11,1.84,38.1,M,-2.7,M,,*64 05:39:29 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:29 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:29 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:29 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:29 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,24,1*63 05:39:29 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,1*6A 05:39:29 $GPGSV,3,3,12,16,12,088,21,17,12,243,,27,14,044,13,30,31,318,,1*69 05:39:29 $GPGSV,3,1,12,01,50,161,32,02,65,092,,03,01,168,,04,12,190,17,6*6F 05:39:29 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:29 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:29 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:29 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,13,1*71 05:39:29 
$GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E 05:39:29 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:29 $GAGSV,3,1,09,02,35,061,23,07,32,265,,08,27,205,17,15,32,176,29,7*7F 05:39:29 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:29 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:29 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:29 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:29 $GAGSV,3,3,09,36,23,048,,2*47 05:39:29 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:29 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:29 $GNGLL,2232.90363,N,11356.18695,E,053929.00,A,A*7E 05:39:30 $GNRMC,053930.00,A,2232.90362,N,11356.18695,E,0.037,,270126,,,A,V*10 05:39:30 $GNVTG,,T,,M,0.037,N,0.068,K,A*37 05:39:30 $GNGGA,053930.00,2232.90362,N,11356.18695,E,1,11,1.84,38.1,M,-2.7,M,,*6D 05:39:30 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:30 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:30 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:30 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:30 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:30 $GPGSV,3,2,12,07,61,311,,08,42,027,20,09,21,220,,14,09,301,,1*68 05:39:30 $GPGSV,3,3,12,16,12,088,22,17,12,243,27,27,14,044,14,30,31,318,,1*68 05:39:30 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:30 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:30 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:30 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:30 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,10,1*72 05:39:30 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E 05:39:30 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:30 $GAGSV,3,1,09,02,35,061,23,07,32,265,,08,27,205,17,15,32,176,29,7*7F 05:39:30 
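$GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:30 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:30 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:30 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:30 $GAGSV,3,3,09,36,23,048,,2*47 05:39:30 $GBGSV,1,1,01,16,83,126,40,1*4A 05:39:30 $GBGSV,1,1,01,16,83,126,34,3*4B 05:39:30 $GNGLL,2232.90362,N,11356.18695,E,053930.00,A,A*77

Time: 053917.00 ~ 053930.00 is UTC (NMEA timestamps are UTC by default). In Beijing time / China Standard Time (UTC+8) this is about 13:39:17 ~ 13:39:30.
Date: 270126 is ddmmyy, i.e. 27 January 2026.
Fix status: A (valid fix).
Fix type: GNGGA ...,1,... → Fix Quality = 1 (standard single-point fix, no differential or RTK corrections).
Speed: 0.02~0.10 knot (≈ 0.04~0.19 km/h) → essentially stationary; the nonzero values are just noise.
Position in decimal degrees (degrees + minutes/60):
Latitude 2232.90388N → 22 + 32.90388/60 = 22.548398°N
Longitude 11356.18681E → 113 + 56.18681/60 = 113.9364468°E
Drift over 13 seconds: from the first fix to the last, the position moves about 0.54 m. This looks like normal jitter/multipath for a stationary receiver. Because the setup was static, such a small drift indicates a stable, consistent fix.

A. $GNRMC (Recommended Minimum: minimum navigation data)
Example:
$GNRMC,053917.00,A,2232.90388,N,11356.18681,E,0.101,,270126,,,A,V*10
Key fields:
053917.00: UTC time, hhmmss.ss
A: fix valid (V = invalid)
2232.90388,N / 11356.18681,E: latitude/longitude (ddmm.mmmmm for latitude, dddmm.mmmmm for longitude)
0.101: speed over ground, in knots
Course: empty here; many receivers do not output a course when the speed is this low
270126: date, ddmmyy
Second-to-last A: usually Mode = Autonomous (standalone fix, no differential corrections)
Final V: the navigational status field added in newer NMEA versions (4.10 and later). The F9P often reports V here, which usually means "no navigational status/integrity information available". This does not affect the A (valid fix) conclusion above. It only indicates that this output is not a navigation status with integrity monitoring/certification.

B. $GNVTG (Course/Speed: course and speed over ground)
Example: $GNVTG,,T,,M,0.101,N,0.187,K,A*33
True course and magnetic course are both empty (again because the receiver is barely moving)
0.101,N: 0.101 knot
0.187,K: 0.187 km/h
A: mode, Autonomous

C. $GNGGA (Fix Data: fix quality, satellite count, altitude, etc.)
Example: $GNGGA,053917.00,...,1,10,1.84,37.4,M,-2.7,M,,*62
Key fields:
1: Fix Quality = 1, single-point fix (not RTK)
10/11: number of satellites used in the solution (it goes from 10 to 11 in this capture)
1.84: HDOP (horizontal dilution of precision; lower is better. 1.84 is acceptable but not great)
37.4,M: altitude, normally the height above the geoid (mean sea level, MSL)
-2.7,M: geoid separation, N ≈ -2.7 m
Ellipsoidal height is therefore roughly h = H + N ≈ 37.4 + (-2.7) = 34.7 m (about 34.7~35.4 m ellipsoidal height across this capture)
Age of differential data / differential station ID are empty: no differential corrections are being received
Over these 13 seconds the altitude moves from 37.4 → 38.1 m. For a stationary receiver, a 0.7 m swing like this is common (multipath, changing satellite geometry, filtering).

D. $GNGSA (DOP + Used SVs: satellites used in the solution + PDOP/HDOP/VDOP)
There are several GSA sentences, one per constellation, which is common for F9P multi-constellation output. The digit before the checksum is the system ID:
... 01,02,04,17 ... (GPS satellites used, system ID 1)
... 84,85,73 ... (GLONASS satellites used, system ID 2)
... 15,34,08 ... (Galileo satellites used, system ID 3)
... 16 ... (BeiDou satellites used, system ID 4)
The three values at the end are the key ones:
PDOP 2.70
HDOP 1.84
VDOP 1.97
Interpretation:
PDOP 2.70: the overall geometry is mediocre. Under open sky it often reaches 1.x or lower. Here there may be obstructions or a high elevation mask, or the solution may not be using more of the visible satellites.
HDOP matches the 1.84 reported in GGA (GGA does not carry VDOP).

E. $GxGSV (Satellites in View: visible satellites, elevation, azimuth, signal strength)
This capture contains:
$GPGSV: GPS (12 satellites in view)
$GLGSV: GLONASS (8)
$GAGSV: Galileo (9)
$GBGSV: BeiDou (1)
For each satellite, GSV gives:
PRN (satellite number)
Elevation (0~90°)
Azimuth (0~359°)
SNR/C/N0 (carrier-to-noise density in dB-Hz; higher is stronger; an empty value usually means no measurement is available for that signal at the moment)
Each constellation's GSV set also appears twice, with an extra trailing field before the checksum, such as ...,1*xx or ...,6*xx:
This field is usually the Signal ID (frequency band/signal type).
The F9P is a dual-frequency multi-constellation receiver, so it often reports the C/N0 of the same satellite on different signals as two separate sets (e.g. GPS L1 vs L2, Galileo E1 vs E5b, BeiDou B1 vs B2). In this capture the signal IDs are 1/6 for GPS, 1/3 for GLONASS, 7/2 for Galileo, and 1/3 for BeiDou.
From the captured C/N0 values, the stronger signals include:
GPS: PRN01 (45) and PRN02 (40), good
GLONASS: PRN84 (39), fairly strong
Galileo: PRN34 (45), very strong
BeiDou: PRN16 (40) at 83° elevation (almost directly overhead), very stable

F. $GNGLL (Geographic Position: latitude/longitude, short form)
Example: $GNGLL,2232.90388,N,11356.18681,E,053917.00,A,A*73
This is essentially the latitude/longitude from RMC/GGA output again in a more compact form.
A,A: the first is the data status (valid), the second is the mode (usually Autonomous).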
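The field breakdown above can also be checked in code. The following is a minimal Python sketch using only the standard library. It does three things:

- verifies the NMEA checksum (the XOR of every character between `$` and `*`);
- parses the RMC sentence from this log;
- converts ddmm.mmmmm / dddmm.mmmmm to decimal degrees and UTC to Beijing time.

The function names (`nmea_checksum_ok`, `dm_to_decimal`, `parse_rmc`) are hypothetical helpers, not from any library. The parser assumes an NMEA 4.10-style RMC with the trailing nav-status field, as the F9P outputs here.

```python
from datetime import datetime, timedelta, timezone
from functools import reduce


def nmea_checksum_ok(sentence: str) -> bool:
    """XOR every character between '$' and '*' and compare with the hex checksum."""
    body, sep, given = sentence.strip().lstrip("$").partition("*")
    if not sep or len(given) < 2:
        return False  # no checksum present
    calc = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    return calc == int(given[:2], 16)


def dm_to_decimal(value: str, hemisphere: str) -> float:
    """Convert ddmm.mmmmm (lat) or dddmm.mmmmm (lon) to signed decimal degrees."""
    dot = value.index(".")
    degrees = int(value[: dot - 2])    # everything before the 2-digit minutes
    minutes = float(value[dot - 2:])   # mm.mmmmm
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_rmc(sentence: str) -> dict:
    """Parse the RMC fields discussed above (hypothetical helper, NMEA 4.10 layout)."""
    if not nmea_checksum_ok(sentence):
        raise ValueError("checksum mismatch")
    f = sentence.strip().lstrip("$").split("*")[0].split(",")
    # f[1]=time, f[2]=status, f[3..6]=lat/lon, f[7]=speed (kn), f[8]=course,
    # f[9]=date ddmmyy, f[12]=mode, f[13]=nav status (NMEA 4.10+)
    utc = datetime.strptime(f[9] + f[1], "%d%m%y%H%M%S.%f").replace(tzinfo=timezone.utc)
    return {
        "utc": utc,
        "beijing": utc.astimezone(timezone(timedelta(hours=8))),  # UTC+8
        "valid": f[2] == "A",
        "lat": dm_to_decimal(f[3], f[4]),
        "lon": dm_to_decimal(f[5], f[6]),
        "speed_kn": float(f[7]) if f[7] else 0.0,
        "course": float(f[8]) if f[8] else None,  # empty when nearly stationary
        "mode": f[12],
        "nav_status": f[13] if len(f) > 13 else None,
    }


rmc = "$GNRMC,053917.00,A,2232.90388,N,11356.18681,E,0.101,,270126,,,A,V*10"
info = parse_rmc(rmc)
print(info["beijing"].strftime("%Y-%m-%d %H:%M:%S"), round(info["lat"], 6), round(info["lon"], 7))
# prints: 2026-01-27 13:39:17 22.548398 113.9364468
```

Keeping the checksum check separate from field parsing means the same function can filter every sentence type in the log (GGA, GSA, GSV, ...), not just RMC.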

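The summary figures in the F9P analysis can be reproduced with a little arithmetic: roughly 0.54 m of drift over 13 s, h = H + N, and knots to km/h. This Python sketch assumes a spherical Earth (mean radius 6,371 km) and a local equirectangular approximation. That is adequate for sub-metre offsets, but it is not a geodesic calculation. The coordinates are the first (05:39:17) and last (05:39:30) fixes in the log; the helper names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth assumption)
KNOT_TO_KMH = 1.852           # 1 international knot = 1.852 km/h


def dm_to_deg(dm: float) -> float:
    """ddmm.mmmmm / dddmm.mmmmm given as a number -> decimal degrees (N/E positive)."""
    degrees = int(dm // 100)
    return degrees + (dm - degrees * 100) / 60.0


def local_distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Equirectangular approximation: fine for the sub-metre offsets in this log."""
    mean_lat = math.radians((lat1 + lat2) / 2)
    dx = math.radians(lon2 - lon1) * math.cos(mean_lat) * EARTH_RADIUS_M  # east
    dy = math.radians(lat2 - lat1) * EARTH_RADIUS_M                       # north
    return math.hypot(dx, dy)


# First and last fixes of the capture (05:39:17 and 05:39:30 UTC)
first = (dm_to_deg(2232.90388), dm_to_deg(11356.18681))
last = (dm_to_deg(2232.90362), dm_to_deg(11356.18695))
drift = local_distance_m(*first, *last)

# GGA: height above MSL (H) plus geoid separation (N) gives ellipsoidal height (h)
H, N = 37.4, -2.7
h = H + N

print(f"drift ~ {drift:.2f} m, h ~ {h:.1f} m, 0.101 kn ~ {0.101 * KNOT_TO_KMH:.3f} km/h")
# prints: drift ~ 0.54 m, h ~ 34.7 m, 0.101 kn ~ 0.187 km/h
```

The equirectangular shortcut is used instead of a full geodesic formula because the offsets here are well under a metre. For kilometre-scale tracks a proper geodesic calculation would be the safer choice.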
F9P and DETA configuration
1. F9P wiring
F9P TX1 → DETA R3
F9P GND → DETA G
#Data
05:39:17 $GNRMC,053917.00,A,2232.90388,N,11356.18681,E,0.101,,270126,,,A,V*10 05:39:17 $GNVTG,,T,,M,0.101,N,0.187,K,A*33 05:39:17 $GNGGA,053917.00,2232.90388,N,11356.18681,E,1,10,1.84,37.4,M,-2.7,M,,*62 05:39:17 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:17 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:17 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:17 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:17 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,25,1*6D 05:39:17 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,19,1*62 05:39:17 $GPGSV,3,3,12,16,12,088,19,17,12,243,27,27,14,044,19,30,31,318,,1*6D 05:39:17 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,18,6*61 05:39:17 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:17 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:17 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:17 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,17,1*75 05:39:17 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D 05:39:17 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:17 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,13,15,32,176,28,7*78 05:39:17 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76 05:39:17 $GAGSV,3,3,09,36,23,048,24,7*44 05:39:17 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:17 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79 05:39:17 $GAGSV,3,3,09,36,23,048,,2*47 05:39:17 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:17 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:17 $GNGLL,2232.90388,N,11356.18681,E,053917.00,A,A*73 05:39:18 $GNRMC,053918.00,A,2232.90388,N,11356.18681,E,0.060,,270126,,,A,V*19 05:39:18 $GNVTG,,T,,M,0.060,N,0.111,K,A*3A 05:39:18 $GNGGA,053918.00,2232.90388,N,11356.18681,E,1,10,1.84,37.4,M,-2.7,M,,*6D 05:39:18 
$GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:18 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:18 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:18 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:18 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,24,1*6C 05:39:18 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,17,1*6C 05:39:18 $GPGSV,3,3,12,16,12,088,19,17,12,243,27,27,14,044,18,30,31,318,,1*6C 05:39:18 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,18,6*61 05:39:18 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:18 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:18 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:18 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74 05:39:18 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E 05:39:18 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78 05:39:18 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,13,15,32,176,28,7*78 05:39:18 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76 05:39:18 $GAGSV,3,3,09,36,23,048,24,7*44 05:39:18 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:18 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79 05:39:18 $GAGSV,3,3,09,36,23,048,,2*47 05:39:18 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:18 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:18 $GNGLL,2232.90388,N,11356.18681,E,053918.00,A,A*7C 05:39:19 $GNRMC,053919.00,A,2232.90387,N,11356.18682,E,0.055,,270126,,,A,V*12 05:39:19 $GNVTG,,T,,M,0.055,N,0.101,K,A*3D 05:39:19 $GNGGA,053919.00,2232.90387,N,11356.18682,E,1,11,1.84,37.4,M,-2.7,M,,*61 05:39:19 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:19 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:19 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:19 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:19 
$GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,25,1*6D 05:39:19 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,16,1*6D 05:39:19 $GPGSV,3,3,12,16,12,088,20,17,12,243,,27,14,044,18,30,31,318,,1*63 05:39:19 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:19 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:19 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:19 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:19 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74 05:39:19 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D 05:39:19 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78 05:39:19 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,14,15,32,176,28,7*7F 05:39:19 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76 05:39:19 $GAGSV,3,3,09,36,23,048,24,7*44 05:39:19 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:19 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79 05:39:19 $GAGSV,3,3,09,36,23,048,,2*47 05:39:19 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:19 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:19 $GNGLL,2232.90387,N,11356.18682,E,053919.00,A,A*71 05:39:20 $GNRMC,053920.00,A,2232.90385,N,11356.18683,E,0.025,,270126,,,A,V*1C 05:39:20 $GNVTG,,T,,M,0.025,N,0.045,K,A*3B 05:39:20 $GNGGA,053920.00,2232.90385,N,11356.18683,E,1,11,1.84,37.4,M,-2.7,M,,*68 05:39:20 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:20 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:20 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:20 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:20 $GPGSV,3,1,12,01,50,161,45,02,65,092,40,03,01,168,,04,12,190,25,1*6D 05:39:20 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,15,1*6E 05:39:20 $GPGSV,3,3,12,16,12,088,20,17,12,243,,27,14,044,18,30,31,318,,1*63 05:39:20 
$GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:20 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:20 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:20 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79 05:39:20 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,14,1*76 05:39:20 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D 05:39:20 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78 05:39:20 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,15,15,32,176,28,7*7D 05:39:20 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76 05:39:20 $GAGSV,3,3,09,36,23,048,24,7*44 05:39:20 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,32,2*72 05:39:20 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79 05:39:20 $GAGSV,3,3,09,36,23,048,,2*47 05:39:20 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:20 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:20 $GNGLL,2232.90385,N,11356.18683,E,053920.00,A,A*78 05:39:21 $GNRMC,053921.00,A,2232.90383,N,11356.18684,E,0.031,,270126,,,A,V*19 05:39:21 $GNVTG,,T,,M,0.031,N,0.057,K,A*3D 05:39:21 $GNGGA,053921.00,2232.90383,N,11356.18684,E,1,11,1.84,37.4,M,-2.7,M,,*68 05:39:21 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:21 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:21 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:21 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:21 $GPGSV,3,1,12,01,50,161,45,02,65,092,39,03,01,168,,04,12,190,26,1*60 05:39:21 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,22,14,09,301,14,1*6F 05:39:21 $GPGSV,3,3,12,16,12,088,20,17,12,243,,27,14,044,18,30,31,318,,1*63 05:39:21 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:21 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:21 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68 05:39:21 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79 
05:39:21 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,15,1*77 05:39:21 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D 05:39:21 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78 05:39:21 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,28,7*7E 05:39:21 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76 05:39:21 $GAGSV,3,3,09,36,23,048,24,7*44 05:39:21 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:21 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79 05:39:21 $GAGSV,3,3,09,36,23,048,,2*47 05:39:21 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:21 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:21 $GNGLL,2232.90383,N,11356.18684,E,053921.00,A,A*78 05:39:22 $GNRMC,053922.00,A,2232.90381,N,11356.18685,E,0.024,,270126,,,A,V*1D 05:39:22 $GNVTG,,T,,M,0.024,N,0.044,K,A*3B 05:39:22 $GNGGA,053922.00,2232.90381,N,11356.18685,E,1,11,1.84,37.5,M,-2.7,M,,*69 05:39:22 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:22 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:22 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:22 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:22 $GPGSV,3,1,12,01,50,161,45,02,65,092,39,03,01,168,,04,12,190,26,1*60 05:39:22 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,13,1*68 05:39:22 $GPGSV,3,3,12,16,12,088,20,17,12,243,29,27,14,044,18,30,31,318,,1*68 05:39:22 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:22 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:22 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:22 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79 05:39:22 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74 05:39:22 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,21,74,35,346,,3*7D 05:39:22 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78 05:39:22 
$GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,17,15,32,176,29,7*7E 05:39:22 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,45,7*76 05:39:22 $GAGSV,3,3,09,36,23,048,24,7*44 05:39:22 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:22 $GAGSV,3,2,09,27,17,299,,29,07,312,,30,48,348,,34,54,106,38,2*79 05:39:22 $GAGSV,3,3,09,36,23,048,,2*47 05:39:22 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:22 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:22 $GNGLL,2232.90381,N,11356.18685,E,053922.00,A,A*78 05:39:23 $GNRMC,053923.00,A,2232.90378,N,11356.18687,E,0.026,,270126,,,A,V*1A 05:39:23 $GNVTG,,T,,M,0.026,N,0.048,K,A*35 05:39:23 $GNGGA,053923.00,2232.90378,N,11356.18687,E,1,11,1.84,37.6,M,-2.7,M,,*6F 05:39:23 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:23 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:23 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:23 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:23 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:23 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,17,1*6C 05:39:23 $GPGSV,3,3,12,16,12,088,21,17,12,243,28,27,14,044,17,30,31,318,,1*67 05:39:23 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:23 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:23 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:23 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,27,74,35,346,,1*79 05:39:23 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,16,1*74 05:39:23 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E 05:39:23 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,20,3*78 05:39:23 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F 05:39:23 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:23 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:23 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:23 
$GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:23 $GAGSV,3,3,09,36,23,048,,2*47 05:39:23 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:23 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:23 $GNGLL,2232.90378,N,11356.18687,E,053923.00,A,A*7D 05:39:24 $GNRMC,053924.00,A,2232.90375,N,11356.18690,E,0.037,,270126,,,A,V*16 05:39:24 $GNVTG,,T,,M,0.037,N,0.068,K,A*37 05:39:24 $GNGGA,053924.00,2232.90375,N,11356.18690,E,1,11,1.84,37.8,M,-2.7,M,,*6D 05:39:24 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:24 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:24 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:24 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:24 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:24 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,19,1*62 05:39:24 $GPGSV,3,3,12,16,12,088,21,17,12,243,,27,14,044,17,30,31,318,,1*6D 05:39:24 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,16,6*6F 05:39:24 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:24 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:24 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:24 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,15,1*77 05:39:24 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E 05:39:24 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:24 $GAGSV,3,1,09,02,35,061,21,07,32,265,,08,27,205,16,15,32,176,29,7*7C 05:39:24 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:24 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:24 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,32,2*72 05:39:24 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:24 $GAGSV,3,3,09,36,23,048,,2*47 05:39:24 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:24 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:24 $GNGLL,2232.90375,N,11356.18690,E,053924.00,A,A*71 05:39:25 
$GNRMC,053925.00,A,2232.90374,N,11356.18691,E,0.048,,270126,,,A,V*1F 05:39:25 $GNVTG,,T,,M,0.048,N,0.090,K,A*38 05:39:25 $GNGGA,053925.00,2232.90374,N,11356.18691,E,1,11,1.84,37.8,M,-2.7,M,,*6C 05:39:25 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:25 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:25 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:25 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:25 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:25 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,19,1*62 05:39:25 $GPGSV,3,3,12,16,12,088,21,17,12,243,30,27,14,044,16,30,31,318,,1*6F 05:39:25 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:25 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:25 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:25 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:25 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,14,1*76 05:39:25 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F 05:39:25 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:25 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F 05:39:25 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:25 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:25 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:25 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:25 $GAGSV,3,3,09,36,23,048,,2*47 05:39:25 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:25 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:25 $GNGLL,2232.90374,N,11356.18691,E,053925.00,A,A*70 05:39:26 $GNRMC,053926.00,A,2232.90371,N,11356.18693,E,0.024,,270126,,,A,V*11 05:39:26 $GNVTG,,T,,M,0.024,N,0.045,K,A*3A 05:39:26 $GNGGA,053926.00,2232.90371,N,11356.18693,E,1,11,1.84,37.9,M,-2.7,M,,*69 05:39:26 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:26 
$GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:26 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:26 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:26 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:26 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,18,1*63 05:39:26 $GPGSV,3,3,12,16,12,088,21,17,12,243,,27,14,044,14,30,31,318,,1*6E 05:39:26 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E 05:39:26 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D 05:39:26 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B 05:39:26 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78 05:39:26 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,13,1*71 05:39:26 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F 05:39:26 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72 05:39:26 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F 05:39:26 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77 05:39:26 $GAGSV,3,3,09,36,23,048,23,7*43 05:39:26 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71 05:39:26 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78 05:39:26 $GAGSV,3,3,09,36,23,048,,2*47 05:39:26 $GBGSV,1,1,01,16,83,123,40,1*4F 05:39:26 $GBGSV,1,1,01,16,83,123,34,3*4E 05:39:26 $GNGLL,2232.90371,N,11356.18693,E,053926.00,A,A*74 05:39:27 $GNRMC,053927.00,A,2232.90370,N,11356.18692,E,0.048,,270126,,,A,V*1A 05:39:27 $GNVTG,,T,,M,0.048,N,0.089,K,A*30 05:39:27 $GNGGA,053927.00,2232.90370,N,11356.18692,E,1,11,1.84,37.9,M,-2.7,M,,*68 05:39:27 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07 05:39:27 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00 05:39:27 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F 05:39:27 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04 05:39:27 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62 05:39:27 
$GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,17,1*6C
05:39:27 $GPGSV,3,3,12,16,12,088,21,17,12,243,27,27,14,044,13,30,31,318,,1*6C
05:39:27 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:27 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:27 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:27 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:27 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,13,1*71
05:39:27 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F
05:39:27 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:27 $GAGSV,3,1,09,02,35,061,22,07,32,265,,08,27,205,16,15,32,176,29,7*7F
05:39:27 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:27 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:27 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:27 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:27 $GAGSV,3,3,09,36,23,048,,2*47
05:39:27 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:27 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:27 $GNGLL,2232.90370,N,11356.18692,E,053927.00,A,A*75
05:39:28 $GNRMC,053928.00,A,2232.90366,N,11356.18693,E,0.029,,270126,,,A,V*14
05:39:28 $GNVTG,,T,,M,0.029,N,0.054,K,A*37
05:39:28 $GNGGA,053928.00,2232.90366,N,11356.18693,E,1,11,1.84,38.0,M,-2.7,M,,*67
05:39:28 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:28 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:28 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:28 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:28 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:28 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,13,1*68
05:39:28 $GPGSV,3,3,12,16,12,088,21,17,12,243,30,27,14,044,12,30,31,318,,1*6B
05:39:28 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,16,6*6F
05:39:28 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:28 $GPGSV,3,3,12,16,12,088,,17,12,243,26,27,14,044,,30,31,318,,6*6B
05:39:28 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:28 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,14,1*76
05:39:28 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,23,74,35,346,,3*7F
05:39:28 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:28 $GAGSV,3,1,09,02,35,061,23,07,32,265,,08,27,205,16,15,32,176,29,7*7E
05:39:28 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:28 $GAGSV,3,3,09,36,23,048,22,7*42
05:39:28 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:28 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:28 $GAGSV,3,3,09,36,23,048,,2*47
05:39:28 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:28 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:28 $GNGLL,2232.90366,N,11356.18693,E,053928.00,A,A*7C
05:39:29 $GNRMC,053929.00,A,2232.90363,N,11356.18695,E,0.049,,270126,,,A,V*10
05:39:29 $GNVTG,,T,,M,0.049,N,0.091,K,A*38
05:39:29 $GNGGA,053929.00,2232.90363,N,11356.18695,E,1,11,1.84,38.1,M,-2.7,M,,*64
05:39:29 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:29 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:29 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:29 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:29 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,24,1*63
05:39:29 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,1*6A
05:39:29 $GPGSV,3,3,12,16,12,088,21,17,12,243,,27,14,044,13,30,31,318,,1*69
05:39:29 $GPGSV,3,1,12,01,50,161,32,02,65,092,,03,01,168,,04,12,190,17,6*6F
05:39:29 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:29 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:29 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:29 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,13,1*71
05:39:29 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E
05:39:29 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:29 $GAGSV,3,1,09,02,35,061,23,07,32,265,,08,27,205,17,15,32,176,29,7*7F
05:39:29 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:29 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:29 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:29 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:29 $GAGSV,3,3,09,36,23,048,,2*47
05:39:29 $GBGSV,1,1,01,16,83,123,40,1*4F
05:39:29 $GBGSV,1,1,01,16,83,123,34,3*4E
05:39:29 $GNGLL,2232.90363,N,11356.18695,E,053929.00,A,A*7E
05:39:30 $GNRMC,053930.00,A,2232.90362,N,11356.18695,E,0.037,,270126,,,A,V*10
05:39:30 $GNVTG,,T,,M,0.037,N,0.068,K,A*37
05:39:30 $GNGGA,053930.00,2232.90362,N,11356.18695,E,1,11,1.84,38.1,M,-2.7,M,,*6D
05:39:30 $GNGSA,A,3,01,02,04,17,,,,,,,,,2.70,1.84,1.97,1*07
05:39:30 $GNGSA,A,3,84,85,73,,,,,,,,,,2.70,1.84,1.97,2*00
05:39:30 $GNGSA,A,3,15,34,08,,,,,,,,,,2.70,1.84,1.97,3*0F
05:39:30 $GNGSA,A,3,16,,,,,,,,,,,,2.70,1.84,1.97,4*04
05:39:30 $GPGSV,3,1,12,01,50,161,44,02,65,092,39,03,01,168,,04,12,190,25,1*62
05:39:30 $GPGSV,3,2,12,07,61,311,,08,42,027,20,09,21,220,,14,09,301,,1*68
05:39:30 $GPGSV,3,3,12,16,12,088,22,17,12,243,27,27,14,044,14,30,31,318,,1*68
05:39:30 $GPGSV,3,1,12,01,50,161,33,02,65,092,,03,01,168,,04,12,190,17,6*6E
05:39:30 $GPGSV,3,2,12,07,61,311,,08,42,027,,09,21,220,,14,09,301,,6*6D
05:39:30 $GPGSV,3,3,12,16,12,088,,17,12,243,25,27,14,044,,30,31,318,,6*68
05:39:30 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,26,74,35,346,,1*78
05:39:30 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,39,85,34,202,10,1*72
05:39:30 $GLGSV,2,1,08,71,04,228,,72,06,275,,73,53,055,22,74,35,346,,3*7E
05:39:30 $GLGSV,2,2,08,80,16,110,,83,36,031,,84,82,143,32,85,34,202,19,3*72
05:39:30 $GAGSV,3,1,09,02,35,061,23,07,32,265,,08,27,205,17,15,32,176,29,7*7F
05:39:30 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,45,7*77
05:39:30 $GAGSV,3,3,09,36,23,048,23,7*43
05:39:30 $GAGSV,3,1,09,02,35,061,,07,32,265,,08,27,205,27,15,32,176,31,2*71
05:39:30 $GAGSV,3,2,09,27,17,300,,29,07,312,,30,48,348,,34,54,106,38,2*78
05:39:30 $GAGSV,3,3,09,36,23,048,,2*47
05:39:30 $GBGSV,1,1,01,16,83,126,40,1*4A
05:39:30 $GBGSV,1,1,01,16,83,126,34,3*4B
05:39:30 $GNGLL,2232.90362,N,11356.18695,E,053930.00,A,A*77

Time: 053917.00 to 053930.00 is UTC (NMEA timestamps are in UTC). In Beijing time (China, UTC+8) that is 13:39:17 to 13:39:30.
Date: 270126 is ddmmyy, i.e. 27 January 2026.
Fix status: A (valid fix)
Fix type: the quality field in GNGGA (... ,1, ...) → Fix Quality = 1 (standard standalone fix)
Speed: 0.02 to 0.10 knot (≈ 0.04 to 0.19 km/h) → essentially stationary; the non-zero values are just noise.
Position (decimal degrees):
Latitude 2232.90388N → 22.548398°N
Longitude 11356.18681E → 113.9364468°E
Drift over 13 seconds: from the first fix to the last, the position moves by about 0.54 m, which looks like normal jitter/multipath for a stationary receiver.
Because the setup was static, this sub-metre scatter shows a stable standalone fix. Note that this measures precision; checking absolute accuracy would require a surveyed reference point.

A. $GNRMC (Recommended Minimum: minimum navigation data)
Example: $GNRMC,053917.00,A,2232.90388,N,11356.18681,E,0.101,,270126,,,A,V*10
Key fields:
053917.00: UTC time, hhmmss.ss
A: fix valid (V = invalid)
2232.90388,N / 11356.18681,E: latitude/longitude in ddmm.mmmmm / dddmm.mmmmm format
0.101: speed over ground, in knots
Course: empty here; many receivers leave the course blank when the speed is this low
270126: date, ddmmyy
Second-to-last A: mode indicator, usually Autonomous (standalone, no differential corrections)
Final V: the navigational status field added in newer NMEA versions (4.10 and later). The F9P often reports V here, which generally means it is not providing navigational status/integrity information. This does not change the earlier A (valid fix) conclusion, but it does indicate that the output is not integrity-monitored or certified navigation data.

B. $GNVTG (Course/Speed: course over ground and ground speed)
Example: $GNVTG,,T,,M,0.101,N,0.187,K,A*33
True and magnetic course are both empty (again because the receiver is hardly moving)
0.101,N: 0.101 knot
0.187,K: 0.187 km/h
A: mode, Autonomous

C. $GNGGA (Fix Data: fix quality, satellite count, altitude, etc.)
Example: $GNGGA,053917.00,...,1,10,1.84,37.4,M,-2.7,M,,*62
Key fields:
1: Fix Quality = 1, standalone fix (not RTK)
10/11: number of satellites used in the solution (it changes from 10 to 11 during this capture)
1.84: HDOP (horizontal dilution of precision; lower is better, and 1.84 is acceptable but not great)
37.4,M: altitude, normally the height above mean sea level (MSL, i.e. the geoid)
-2.7,M: geoid separation N ≈ -2.7 m
So the ellipsoidal height is roughly h = H + N ≈ 37.4 + (-2.7) = 34.7 m (about 34.7 to 35.4 m over this capture).
Differential age and station ID are empty: no differential corrections are being applied.
Over these 13 seconds the altitude moves from 37.4 to 38.1 m. For a static receiver, vertical wander of about 0.7 m like this is common (multipath, changing satellite geometry, filtering).

D. $GNGSA (DOP and active satellites: the satellites used in the solution plus PDOP/HDOP/VDOP)
There are several GSA sentences, one per constellation (common for F9P multi-constellation output). The last field before the checksum is the system ID (1 = GPS, 2 = GLONASS, 3 = Galileo, 4 = BeiDou). For example:
... 01,02,04,17 ... (GPS satellites used)
... 84,85,73 ... (GLONASS satellites used)
... 15,34,08 ... (Galileo satellites used)
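... 16 ... (BeiDou satellite used)
Together these lists contain 4 + 3 + 3 + 1 = 11 satellites, matching the 11 satellites in GGA.
The three values at the end are the key ones:
PDOP 2.70
HDOP 1.84
VDOP 1.97
Interpretation:
PDOP 2.70: overall geometry is only average. Under open sky it is often 1.x or lower. Here there may be obstructions, a high elevation mask, or the solution may be leaving some satellites out.
HDOP 1.84 matches the value in GGA (GGA carries no VDOP). The three values are also mutually consistent, since PDOP² = HDOP² + VDOP²: sqrt(1.84² + 1.97²) ≈ 2.70.

E. $GxGSV (Satellites in View: visible satellites, elevation, azimuth, signal strength)
This capture contains:
$GPGSV: GPS (12 satellites in view)
$GLGSV: GLONASS (8)
$GAGSV: Galileo (9)
$GBGSV: BeiDou (1)
For each satellite, GSV gives:
PRN (satellite number)
Elevation (0 to 90°)
Azimuth (0 to 359°)
SNR/CN0 (carrier-to-noise density in dB-Hz; higher is stronger; an empty value usually means that signal is not reported or not available at that moment)
Each constellation's GSV sentences appear in two sets. Each set has an extra field just before the checksum, such as ...,1*xx or ...,6*xx:
This is usually the Signal ID (frequency band / signal type).
The F9P is a dual-frequency, multi-constellation receiver, so it often reports the CN0 of the same satellite on different signals as separate sets (for example GPS L1 vs L2, Galileo E1 vs E5b, BeiDou B1 vs B2).
Among the CN0 values in this capture, the strong signals include:
GPS: PRN01 (45) and PRN02 (40), good
GLONASS: PRN84 (39), fairly strong
Galileo: PRN34 (45), very strong
BeiDou: PRN16 (40) at 83° elevation (nearly overhead), very stable

F. $GNGLL (Geographic Position: compact latitude/longitude)
Example: $GNGLL,2232.90388,N,11356.18681,E,053917.00,A,A*73
This essentially repeats the latitude/longitude from RMC/GGA in a more compact form, plus the UTC time.
A,A: the first is the data status (valid), the second is the mode indicator (usually Autonomous).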
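Every sentence in the log ends in *hh. Under the NMEA 0183 rule, this is the XOR of every character between $ and *, written as two hex digits. Below is a minimal Python sketch for checking lines like the ones above. The helper name nmea_checksum_ok is illustrative and does not come from any library. It skips a leading log timestamp such as "05:39:28 ".

```python
def nmea_checksum_ok(sentence: str) -> bool:
    """Return True if the '*hh' checksum of an NMEA 0183 sentence matches.

    The checksum is the XOR of every character between '$' and '*'.
    Anything before '$' (e.g. a log timestamp) and after the two hex digits
    (e.g. '\\r\\n') is ignored.
    """
    start = sentence.find("$")
    star = sentence.rfind("*")
    if start < 0 or star < start or len(sentence) < star + 3:
        return False
    calc = 0
    for ch in sentence[start + 1:star]:
        calc ^= ord(ch)  # running XOR over the sentence body
    try:
        given = int(sentence[star + 1:star + 3], 16)
    except ValueError:
        return False  # checksum field is not valid hex
    return calc == given
```

For example, nmea_checksum_ok("05:39:28 $GNVTG,,T,,M,0.029,N,0.054,K,A*37") returns True. Changing any single character in the body makes it return False.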
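The RMC fields in section A can be sketched in Python together with the conversions from the summary: ddmm.mmmmm to decimal degrees, knots to km/h, and UTC to UTC+8. The function names are hypothetical. The checksum is stripped but not checked. The parser assumes a sentence that actually contains a fix and a time with fractional seconds, as the F9P output here does.

```python
from datetime import datetime, timedelta, timezone

KNOT_TO_KMH = 1.852                  # 1 knot = 1.852 km/h (exact)
CST = timezone(timedelta(hours=8))   # China Standard Time, UTC+8

def ddmm_to_deg(value: str, hemi: str) -> float:
    """Convert NMEA (d)ddmm.mmmmm plus N/S/E/W into signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)        # digits left of the two minute digits
    minutes = raw - degrees * 100    # mm.mmmmm
    result = degrees + minutes / 60.0
    return -result if hemi in ("S", "W") else result

def parse_rmc(sentence: str) -> dict:
    """Parse a $--RMC sentence (checksum is stripped, not verified)."""
    body = sentence[sentence.index("$") + 1:].split("*", 1)[0]
    f = body.split(",")
    if not f[0].endswith("RMC"):
        raise ValueError("not an RMC sentence: " + f[0])
    # f[9] is the date (ddmmyy), f[1] the UTC time (hhmmss.ss)
    utc = datetime.strptime(f[9] + f[1], "%d%m%y%H%M%S.%f").replace(tzinfo=timezone.utc)
    speed_kn = float(f[7]) if f[7] else 0.0
    return {
        "utc": utc,
        "local": utc.astimezone(CST),
        "valid": f[2] == "A",                          # A = valid, V = invalid
        "lat": ddmm_to_deg(f[3], f[4]),
        "lon": ddmm_to_deg(f[5], f[6]),
        "speed_kn": speed_kn,
        "speed_kmh": speed_kn * KNOT_TO_KMH,
        "course": float(f[8]) if f[8] else None,       # empty when nearly stationary
        "mode": f[12] if len(f) > 12 else None,        # A = autonomous
        "nav_status": f[13] if len(f) > 13 else None,  # NMEA 4.10+ field
    }
```

Run on the section A example, this gives 22.548398°N, 113.9364468°E, about 0.187 km/h, no course, and a local time of 2026-01-27 13:39:17 (UTC+8).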
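The roughly 0.54 m drift in the summary can be reproduced with the haversine formula. It compares the first fix (053917.00, from the section A example) with the last one (053930.00, from the log). This sketch assumes a spherical Earth with a mean radius of 6,371 km, which is more than accurate enough at this scale. The simplified converter handles northern/eastern hemisphere coordinates only.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

def ddmm_to_deg(value: str) -> float:
    """(d)ddmm.mmmmm -> decimal degrees; N/E hemisphere assumed (no sign handling)."""
    raw = float(value)
    d = int(raw // 100)
    return d + (raw - d * 100) / 60.0

def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

first = (ddmm_to_deg("2232.90388"), ddmm_to_deg("11356.18681"))  # 053917.00
last = (ddmm_to_deg("2232.90362"), ddmm_to_deg("11356.18695"))   # 053930.00
drift = haversine_m(*first, *last)
print(f"drift: {drift:.2f} m")  # prints "drift: 0.54 m"
```

Most of the drift is north-south: 0.00026 minutes of latitude is about 0.48 m, while the east-west part is about 0.24 m.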
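The GGA fields in section C and the ellipsoidal-height relation h = H + N can be sketched as follows. The parser is illustrative. The quality table lists the common NMEA fix-quality codes, not every value a receiver may emit.

```python
GGA_QUALITY = {
    0: "invalid",
    1: "standalone GNSS fix",
    2: "differential (DGNSS)",
    4: "RTK fixed",
    5: "RTK float",
    6: "dead reckoning",
}

def parse_gga(sentence: str) -> dict:
    """Parse fix quality, satellite count, HDOP and heights from a $--GGA sentence.

    The checksum is stripped, not verified.
    """
    body = sentence[sentence.index("$") + 1:].split("*", 1)[0]
    f = body.split(",")
    if not f[0].endswith("GGA"):
        raise ValueError("not a GGA sentence: " + f[0])
    quality = int(f[6])
    msl_alt = float(f[9])     # H: height above mean sea level (geoid), metres
    geoid_sep = float(f[11])  # N: geoid height relative to the WGS84 ellipsoid, metres
    return {
        "quality": quality,
        "quality_text": GGA_QUALITY.get(quality, "other"),
        "num_sv": int(f[7]),
        "hdop": float(f[8]),
        "msl_alt": msl_alt,
        "geoid_sep": geoid_sep,
        "ellipsoid_height": msl_alt + geoid_sep,  # h = H + N
        "diff_age": f[13] or None,                # empty: no differential corrections
    }
```

For the 05:39:28 GGA sentence in the log (38.0 m MSL, -2.7 m separation), it returns quality 1, 11 satellites, and an ellipsoidal height of 35.3 m.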
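The per-constellation GSA sentences in section D can be parsed the same way. This also allows counting the satellites used across the four lists (compare with the satellite count in GGA). It also allows checking that the three DOP values satisfy PDOP² = HDOP² + VDOP². In the sketch below, the system-ID table covers only the four IDs that appear in this log. The 0.02 tolerance is an assumed value chosen to absorb the two-decimal rounding in NMEA.

```python
import math

# NMEA 4.10 GSA system IDs seen in this log
GSA_SYSTEM = {"1": "GPS", "2": "GLONASS", "3": "Galileo", "4": "BeiDou"}

def parse_gsa(sentence: str) -> dict:
    """Parse a $--GSA sentence: used satellite IDs, PDOP/HDOP/VDOP and system ID."""
    body = sentence[sentence.index("$") + 1:].split("*", 1)[0]
    f = body.split(",")
    if not f[0].endswith("GSA") or len(f) < 18:
        raise ValueError("not a complete GSA sentence")
    return {
        "fix_mode": int(f[2]),                   # 1 = no fix, 2 = 2D, 3 = 3D
        "used": [int(s) for s in f[3:15] if s],  # 12 satellite-ID slots
        "pdop": float(f[15]),
        "hdop": float(f[16]),
        "vdop": float(f[17]),
        "system": GSA_SYSTEM.get(f[18], f[18]) if len(f) > 18 else None,
    }

def dop_consistent(gsa: dict, tol: float = 0.02) -> bool:
    """PDOP^2 should equal HDOP^2 + VDOP^2, up to NMEA's 2-decimal rounding."""
    return abs(math.hypot(gsa["hdop"], gsa["vdop"]) - gsa["pdop"]) <= tol
```

Applied to the four GSA lines at 05:39:28, this yields GPS, GLONASS, Galileo and BeiDou with 4 + 3 + 3 + 1 satellites. Each line passes the DOP check, since hypot(1.84, 1.97) ≈ 2.696.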
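Finally, here is a sketch of a GSV parser that handles the trailing signal-ID field from section E. It also picks the strongest CN0 per constellation and signal. It treats a single leftover field after the groups of four as the signal ID, which matches NMEA 4.10-style output like this log. The function names and the talker table are hypothetical helpers, not part of any receiver API.

```python
TALKER = {"GP": "GPS", "GL": "GLONASS", "GA": "Galileo", "GB": "BeiDou"}

def parse_gsv(sentence: str) -> dict:
    """Parse one $--GSV sentence, including the optional trailing signal ID."""
    body = sentence[sentence.index("$") + 1:].split("*", 1)[0]
    f = body.split(",")
    if not f[0].endswith("GSV"):
        raise ValueError("not a GSV sentence: " + f[0])
    rest = f[4:]
    # 4 fields per satellite; one extra trailing field means a signal ID is present
    signal_id = rest.pop() if len(rest) % 4 == 1 else None
    sats = []
    for i in range(0, len(rest) - 3, 4):
        prn, elev, azim, cn0 = rest[i:i + 4]
        sats.append({
            "prn": int(prn),
            "elev": int(elev) if elev else None,
            "azim": int(azim) if azim else None,
            "cn0": int(cn0) if cn0 else None,  # dB-Hz; empty = not reported on this signal
        })
    return {
        "system": TALKER.get(f[0][:2], f[0][:2]),
        "msg_total": int(f[1]),
        "msg_num": int(f[2]),
        "in_view": int(f[3]),
        "signal_id": signal_id,
        "sats": sats,
    }

def strongest(sentences):
    """Return {(system, signal_id): (prn, cn0)} for the highest CN0 seen."""
    best = {}
    for s in sentences:
        g = parse_gsv(s)
        key = (g["system"], g["signal_id"])
        for sat in g["sats"]:
            if sat["cn0"] is None:
                continue
            if key not in best or sat["cn0"] > best[key][1]:
                best[key] = (sat["prn"], sat["cn0"])
    return best
```

Given the 05:39:30 GLONASS signal-1 and Galileo signal-7 sentences, it picks PRN84 at 39 dB-Hz and PRN34 at 45 dB-Hz, the same satellites highlighted in section E.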

-