
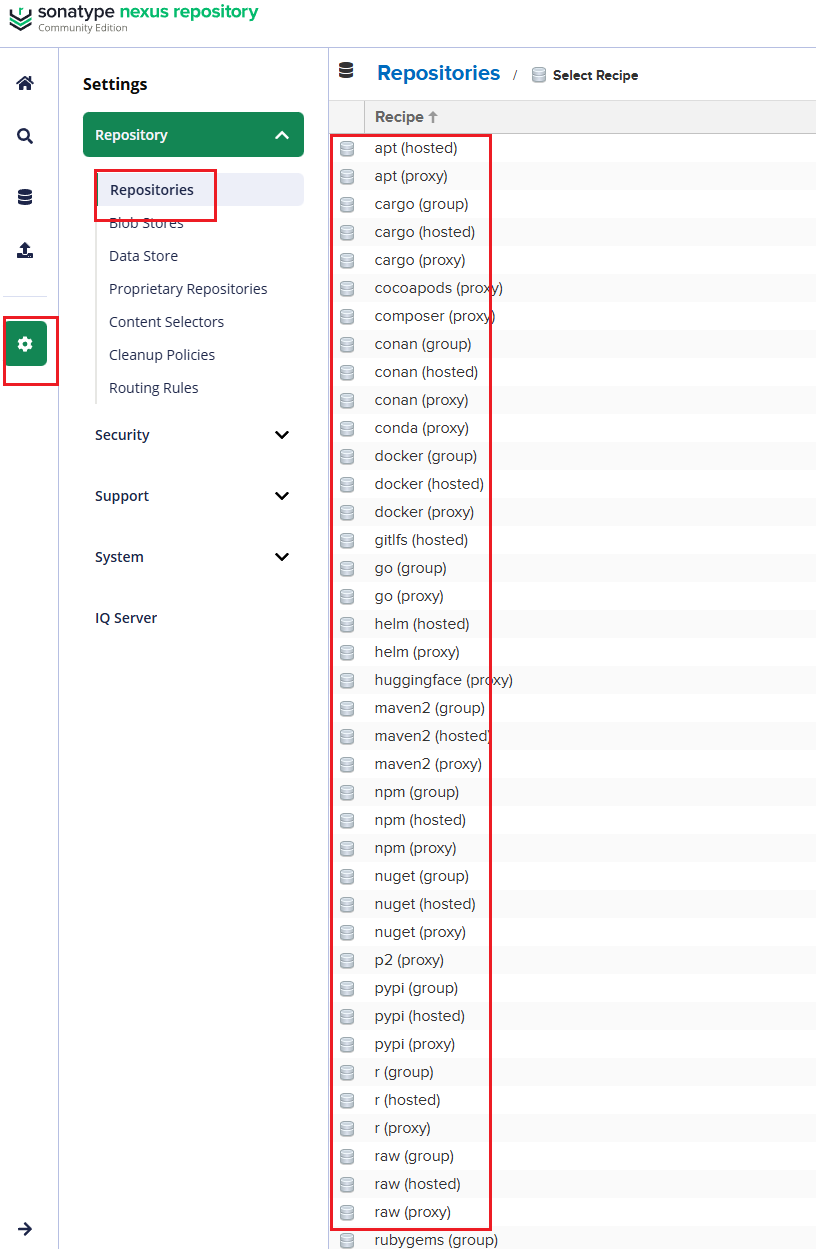

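Nexus deployment

I. What is Nexus, and what can it do?

What is Nexus? Like Harbor, Nexus is a "repository", but Nexus focuses on language packages and build artifacts (Maven, npm, PyPI, ...), while Harbor focuses on Docker/OCI images. In operations work, Nexus is typically used as a private Maven repository, a private npm registry, a Python package index, and even as a Docker or Helm repository.

What can it do?

- Speed up dependency downloads (proxy public repositories and cache the results locally)
- Host private packages (for example, your team's internal Java packages or a private npm registry)
- Provide a single egress point (every build machine fetches dependencies through Nexus, which makes control and auditing easier)
- Fit into CI/CD: build artifacts are uploaded to Nexus, and deployment environments pull only from Nexus.

II. Core concepts in Nexus

2.1 The three repository types (important)

Whether it is Maven, npm, or Docker, Nexus has only three kinds of repository:

1. Hosted (private repository)
   - Stores the packages you publish yourselves.
   - Examples: maven-releases, npm-private, docker-private.
2. Proxy
   - Proxies a public source and caches everything downloaded through it locally.
   - Examples:
     - maven-central proxies https://repo.maven.apache.org/maven2
     - npm-proxy proxies https://registry.npmjs.org
     - docker-hub proxies https://registry-1.docker.io
3. Group
   - Combines several Hosted and Proxy repositories into one virtual repository exposed under a single URL.
   - Example: maven-public = maven-releases + maven-snapshots + maven-central
   - Developers and CI only configure maven-public and never need to know how many repositories sit behind it.

2.2 Format

When creating a repository you choose a format, for example:

- maven2, npm, pypi, docker, helm, etc.
- The format determines the URL path layout, how clients access the repository, and which features are supported.

2.3 Blob store (storage)

- Nexus keeps the actual files in a storage location called a blob store (by default under /nexus-data).
- General recommendations:
  - On Kubernetes, use a dedicated disk or a PV.
  - Size it generously; every package and image ends up there.
- Here Nexus is deployed standalone with Docker; give the container plenty of memory and the mounted data directory plenty of disk space. The docker-compose.yml is shown in Section III.

2.4 Users, roles, and realms

- Users, roles, and privileges control who can read and who can publish.
- To integrate LDAP/AD, enable the corresponding realm under Security -> Realms.

III. Deploying Nexus (most common: Docker)

Notes:

- The web UI is at http://<server-IP>:8081 by default.
- All data lives in /nexus-data inside the container, bind-mounted from ./nexus-data next to docker-compose.yml (here /work/nexus/nexus-data). Persistence is critical; don't lose it.
- The initial admin password is in /nexus-data/admin.password (on the host: ./nexus-data/admin.password).
- For production:
  - Put a reverse proxy in front (Nginx / Ingress)
  - Configure HTTPS
  - Back up the data directory (/nexus-data, i.e. ./nexus-data on the host) regularly.

The compose file used here, /work/nexus/docker-compose.yml:

```yaml
version: '3.8'   # obsolete in Docker Compose v2 (informational only); can be removed
services:
  nexus:
    image: sonatype/nexus3
    container_name: nexus
    restart: always
    ports:
      - "8081:8081"
    volumes:
      # The image runs as UID 200, so the host directory must be writable by it:
      #   mkdir -p ./nexus-data && chown -R 200 ./nexus-data
      - ./nexus-data:/nexus-data
    # Limit memory if needed, for example:
    # deploy:
    #   resources:
    #     limits:
    #       memory: 4g
```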
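
The point of a Group repository (Section 2.1) is that clients only need one URL. As a minimal sketch of what that looks like on the Maven side, the function below writes a `settings.xml` whose single mirror sends every repository request (`<mirrorOf>*</mirrorOf>`) to the `maven-public` group. It assumes Nexus 3's usual `<base-url>/repository/<repo-name>/` URL layout; the function name `write_maven_mirror`, the mirror id `nexus`, and any server address you pass in are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: point Maven at a Nexus group repository through a mirror in settings.xml.
# write_maven_mirror is a hypothetical helper, not part of Nexus or Maven.
set -euo pipefail

# Usage: write_maven_mirror <nexus-base-url> <group-repo-name> <output-file>
write_maven_mirror() {
  local base="${1%/}"   # drop a trailing slash so the URL has no "//"
  local repo="$2"
  local out="$3"
  mkdir -p "$(dirname "$out")"
  cat > "$out" <<EOF
<settings>
  <mirrors>
    <mirror>
      <!-- Route every repository request (Maven Central included) to the Nexus group -->
      <id>nexus</id>
      <mirrorOf>*</mirrorOf>
      <url>${base}/repository/${repo}/</url>
    </mirror>
  </mirrors>
</settings>
EOF
}
```

For example, `write_maven_mirror http://<server-IP>:8081 maven-public ~/.m2/settings.xml`. The function overwrites the target file, so if you already have a `settings.xml`, merge the `<mirror>` entry in by hand instead.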

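
On first start Nexus can take a while before the UI answers, and `admin.password` normally exists only until the initial setup wizard has replaced the default password. A small sketch that polls `/service/rest/v1/status` (the Nexus 3 REST status endpoint, which returns HTTP 200 once the node can serve read requests) and then prints the password from the host side of the `./nexus-data` bind mount. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: wait for Nexus to come up, then show the initial admin password.
# wait_for_nexus and show_admin_password are hypothetical helper names.
set -euo pipefail

# Usage: wait_for_nexus <base-url> <timeout-seconds>
# Returns 0 once GET <base-url>/service/rest/v1/status answers HTTP 200, 1 on timeout.
wait_for_nexus() {
  local url="${1%/}/service/rest/v1/status"
  local deadline=$(( SECONDS + $2 ))
  while (( SECONDS < deadline )); do
    # -f: treat HTTP errors as failure; -s: silent; --max-time bounds each attempt
    if curl -fs --max-time 3 -o /dev/null "$url"; then
      return 0
    fi
    sleep 2
  done
  return 1
}

# Usage: show_admin_password <host-data-dir>
# <host-data-dir> is the host side of the /nexus-data mount (./nexus-data in the compose file).
show_admin_password() {
  local f="$1/admin.password"
  if [[ -f "$f" ]]; then
    cat "$f"; echo
  else
    echo "no admin.password in $1 (already removed after the initial setup?)" >&2
    return 1
  fi
}
```

Usage: `wait_for_nexus http://localhost:8081 300 && show_admin_password /work/nexus/nexus-data`. If the host user cannot read the file (it is owned by UID 200), `docker exec nexus cat /nexus-data/admin.password` reads it from inside the container instead.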

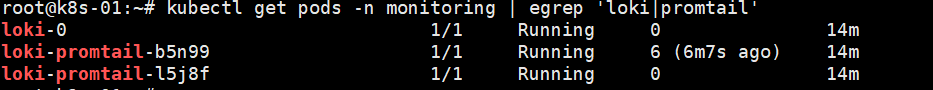

Loki deployment and image-pull proxy

I. Deployment

1.1 Add the Grafana Helm repository

```bash
helm repo add grafana https://grafana.github.io/helm-charts
helm repo update
```

1.2 Install Loki Stack (Loki + Promtail only; Prometheus and Grafana are not installed a second time)

If you need a proxy, set it for the current shell only:

```bash
export http_proxy="http://192.168.3.135:7890"
export https_proxy="http://192.168.3.135:7890"
export HTTP_PROXY="$http_proxy"
export HTTPS_PROXY="$https_proxy"
# Internal networks and the k8s Service range usually should not go through the proxy
export no_proxy="localhost,127.0.0.1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
export NO_PROXY="$no_proxy"
```

To make it permanent system-wide, write it to /etc/environment or /etc/profile:

```bash
# /etc/environment is not a shell script: plain KEY=value lines only, no $var expansion.
# It is read at login, so open a new session afterwards.
sudo tee -a /etc/environment >/dev/null <<EOF
HTTP_PROXY=http://192.168.3.135:7890
HTTPS_PROXY=http://192.168.3.135:7890
http_proxy=http://192.168.3.135:7890
https_proxy=http://192.168.3.135:7890
NO_PROXY=localhost,127.0.0.1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local
no_proxy=localhost,127.0.0.1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local
EOF
```

Services started by systemd, such as dockerd and containerd, do not read these login variables, so the container runtime that pulls images needs its own systemd drop-in.

With the Docker runtime, do this on every node. 10.96.0.0/12 is the default Service CIDR of many kubeadm clusters; adjust it to your cluster.

```bash
sudo mkdir -p /etc/systemd/system/docker.service.d
sudo tee /etc/systemd/system/docker.service.d/http-proxy.conf >/dev/null <<EOF
[Service]
Environment="HTTP_PROXY=http://192.168.3.135:7890"
Environment="HTTPS_PROXY=http://192.168.3.135:7890"
Environment="NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
# Verify that the daemon picked up the variables
sudo systemctl show --property=Environment docker
```

With the containerd runtime, do this on every node. Again, 10.96.0.0/12 is the kubeadm default Service CIDR; adjust it to your cluster.

```bash
sudo mkdir -p /etc/systemd/system/containerd.service.d
sudo tee /etc/systemd/system/containerd.service.d/http-proxy.conf >/dev/null <<EOF
[Service]
Environment="HTTP_PROXY=http://192.168.3.135:7890"
Environment="HTTPS_PROXY=http://192.168.3.135:7890"
Environment="NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
EOF
sudo systemctl daemon-reload
sudo systemctl restart containerd
# Verify that the daemon picked up the variables
sudo systemctl show --property=Environment containerd
```

Create the release (and its pods):

```bash
helm install loki grafana/loki-stack \
  -n monitoring --create-namespace \
  --set grafana.enabled=false \
  --set prometheus.enabled=false
```

II. Configuration

2.1 If Loki and Prometheus are in the same namespace

2.2 Usage
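
The Docker and containerd drop-ins in 1.2 differ only in the service name. A minimal sketch that writes either one from a single function; the optional fourth argument lets you dry-run into a scratch directory instead of `/etc/systemd/system`. `write_proxy_dropin` is a hypothetical helper, and the file name `http-proxy.conf` simply follows the steps above.

```bash
#!/usr/bin/env bash
# Sketch: write the same systemd proxy drop-in for docker or containerd.
# write_proxy_dropin is a hypothetical helper name.
set -euo pipefail

# Usage: write_proxy_dropin <service> <proxy-url> <no-proxy-list> [systemd-dir]
# systemd-dir defaults to /etc/systemd/system; pass a temp dir to dry-run.
# Prints the path of the file it wrote.
write_proxy_dropin() {
  local svc="$1" proxy="$2" no_proxy="$3"
  local dir="${4:-/etc/systemd/system}/${svc}.service.d"
  mkdir -p "$dir"
  cat > "$dir/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=${proxy}"
Environment="HTTPS_PROXY=${proxy}"
Environment="NO_PROXY=${no_proxy}"
EOF
  echo "$dir/http-proxy.conf"
}
```

On each node, as root: `write_proxy_dropin containerd http://192.168.3.135:7890 "localhost,127.0.0.1,10.96.0.0/12,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"`, followed by `systemctl daemon-reload`, `systemctl restart containerd`, and the `systemctl show --property=Environment containerd` check.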

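
Whether a request from the proxy setup in 1.2 skips the proxy depends on the NO_PROXY list, and a common mistake is leaving the Service or pod network out. The sketch below checks a host against a NO_PROXY-style list using simplified rules: `a.b.c.d/n` is an IPv4 CIDR, a leading `.` is a domain suffix, anything else is an exact match. It is an approximation only: each client (dockerd, containerd, curl, ...) parses NO_PROXY in its own way, and not every client accepts CIDR entries. All function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: would a host bypass the proxy under a NO_PROXY-style list?
# Simplified rules (an approximation; real clients differ):
#   a.b.c.d/n -> IPv4 CIDR match
#   .suffix   -> domain-suffix match
#   other     -> exact match
set -euo pipefail

is_ipv4() {
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  [[ $1 =~ $re ]]
}

# Dotted IPv4 -> 32-bit integer (10# stops octets like "010" being read as octal).
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Return 0 if IPv4 address $1 lies inside CIDR $2, e.g. 10.96.0.0/12.
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}"
  is_ipv4 "$1" && is_ipv4 "$net" || return 1
  [[ $bits =~ ^[0-9]+$ ]] && (( 10#$bits <= 32 )) || return 1
  local mask=$(( (0xFFFFFFFF << (32 - 10#$bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Return 0 if host $1 matches any entry of the comma-separated list $2.
bypasses_proxy() {
  local host="$1" entry
  local -a entries
  IFS=, read -r -a entries <<< "$2"
  for entry in "${entries[@]}"; do
    entry="${entry// /}"   # tolerate spaces after commas
    if [[ -z $entry ]]; then
      continue
    elif [[ $entry == */* ]]; then
      ip_in_cidr "$host" "$entry" && return 0
    elif [[ $entry == .* ]]; then
      [[ $host == *"$entry" ]] && return 0
    elif [[ $host == "$entry" ]]; then
      return 0
    fi
  done
  return 1
}
```

For example, `bypasses_proxy 10.96.0.1 "$NO_PROXY" && echo bypass || echo proxied`. With the list from 1.2, an address in the kubeadm default Service CIDR 10.96.0.0/12 is already covered by the broader 10.0.0.0/8 entry.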