搜索到

5

篇与

的结果

-

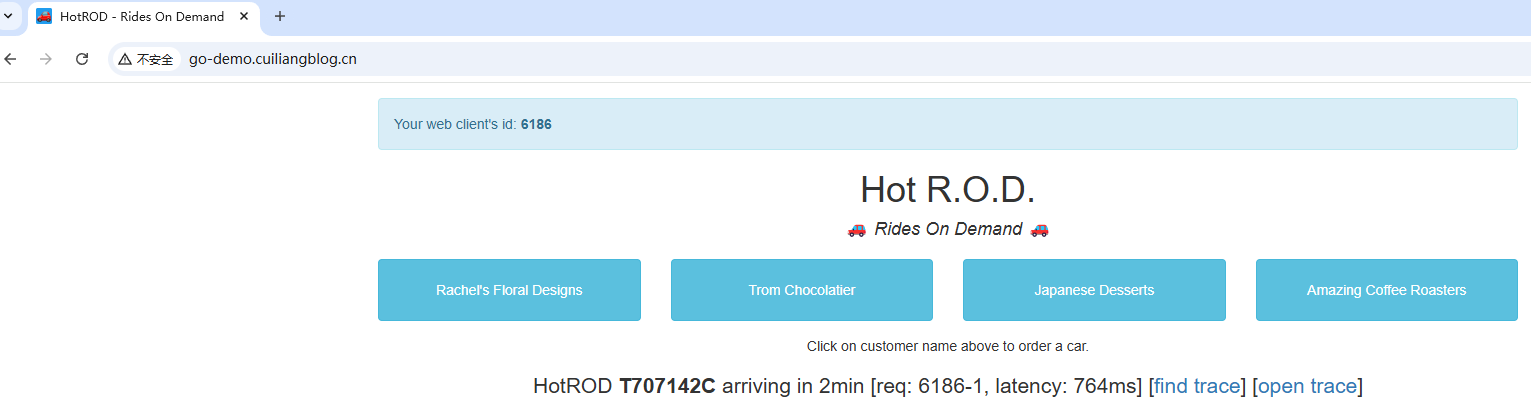

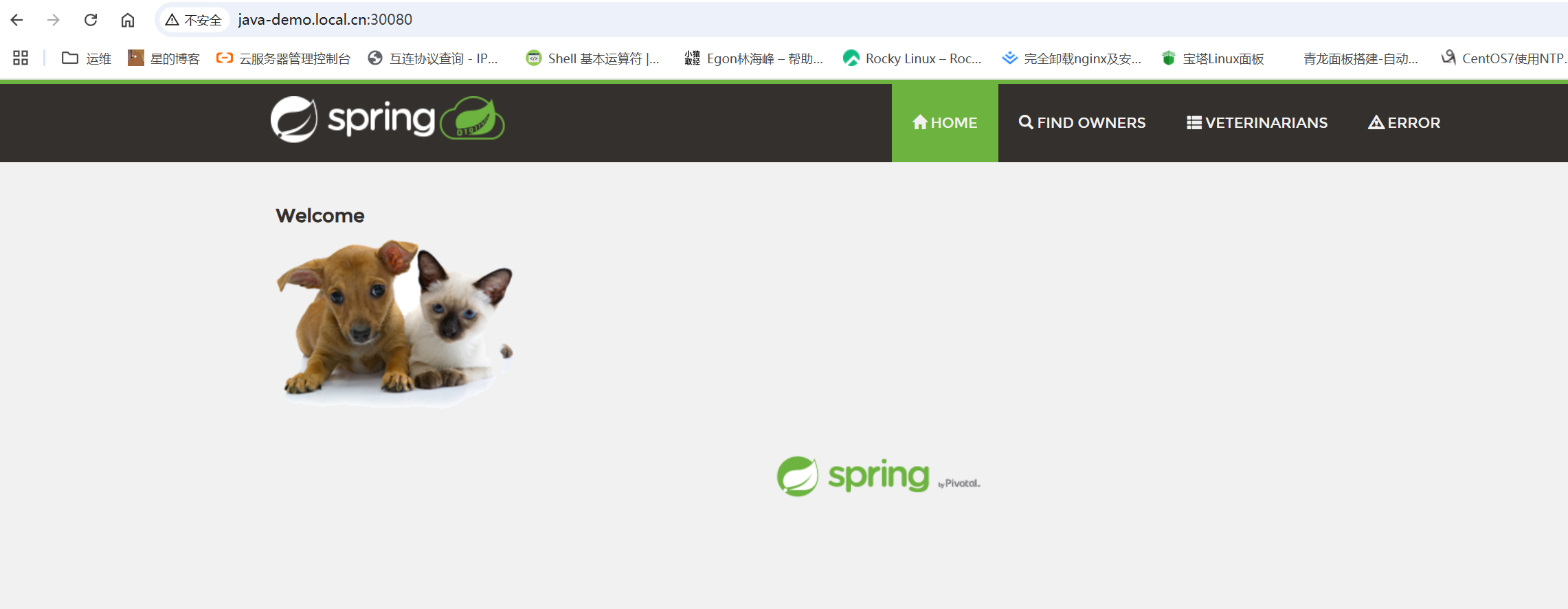

基于OpenTelemetry+Grafana可观测性实践 一、方案介绍OpenTelemetry + Prometheus + Loki + Tempo + Grafana 是一套现代化、云备份的可安装性解决方案组合,涵盖Trace(追踪追踪)、Log(日志)、Metrics(指标)三大核心维度,为微服务架构中的应用提供统一的可安装性平台。二、组件介绍三、系统架构四、部署示例应用4.1 应用介绍https://opentelemetry.io/docs/demo/kubernetes-deployment/ 官方为大家写了一个opentelemetry-demo。 这个项目模拟了一个微服务版本的电子商城,主要包含了以下一些项目:4.2 部署应用4.2.1获取图表包# helm repo open-telemetry https://open-telemetry.github.io 添加/opentelemetry-helm-charts # helm pull open-telemetry/opentelemetry-demo --untar # cd opentelemetry-demo # ls Chart.lock Chart.yaml 示例 grafana-dashboards README.md UPGRADING.md values.yaml 图表 ci flagd 产品模板values.schema.json4.2.2 自定义图表包,默认图表包集成了opentelemetry-collector、prometheus、grafana、opensearch、jaeger组件,我们先将其取消# vim 值.yaml 默认: # 评估所有组件的环境变量列表 环境: -名称:OTEL_COLLECTOR_NAME 值:center-collector.opentelemetry.svc opentelemetry-收集器: 已启用:false 耶格尔: 已启用:false 普罗米修斯: 已启用:false 格拉法纳: 已启用:false 开放搜索: 已启用:false4.2.3安装示例应用# helm install demo .-f values.yaml -所有服务渴望通过前置代理获得:http://localhost:8080 通过运行以下命令: kubectl --namespace 默认端口转发 svc/frontend-proxy 8080 :8080 通过端口转发暴露frontend-proxy服务后,这些路径上可以使用以下服务: 网上商店 http://localhost:8080/ Jaeger 用户界面 http://localhost:8080/jaeger/ui/ Grafana http://localhost:8080/grafana/ 负载生成器 UI http://localhost:8080/loadgen/ 功能标志UI http://localhost:8080/feature/ # kubectl 获取 pod 名称 就绪状态 重启时间 Accounting-79cdcf89df-h8nnc 1 /1 运动 0 2分15秒 ad-dc6768b6-lvzcq 1 /1 跑步 0 2分14秒 cart-65c89fcdd7-8tcwp 1 /1 运动 0 2分15秒 checkout-7c45459f67-xvft2 1 /1 运动 0 2分13秒 currency-65dd8c8f6-pxxbb 1 /1 跑步 0 2分15秒 email-5659b8d84f-9ljr9 1 /1 运动 0 2分15秒 flagd-57fdd95655-xrmsk 2 /2 运动 0 2分14秒 欺诈检测-7db9cbbd4d-znxq6 1 /1 运动 0 2分15秒 frontend-6bd764b6b9-gmstv 1 /1 跑步 0 2分15秒 frontend-proxy-56977d5ddb-cl87k 1 /1 跑步 0 2分15秒 image-provider-54b56c68b8-gdgnv 1 /1 跑步 0 2分15秒 kafka-976bc899f-79vd7 1 /1 运动 0 2分14秒 load-generator-79dd9d8d58-hcw8c 1 /1 运行 0 2分15秒 payment-6d9748df64-46zwt 1/1 正在播放 0 2分15秒 产品目录-658d99b4d4-xpczv 1/1 运行 0 2m13s quote-5dfbb544f5-6r8gr 1/1 播放 0 2分14秒 推荐-764b6c5cf8-lnkm6 1/1 播放 0 2分14秒 Shipping-5f65469746-zdr2g 1/1 运行 0 2分15秒 valkey-cart-85ccb5db-kr74s 1/1 运动 0 2分15秒 # kubectl 获取服务 名称类型 供应商 IP 外部 IP 端口年龄 广告 ClusterIP 10.103.72.85 <无> 8080/TCP 2分19秒 购物车 ClusterIP 10.106.118.178 <无> 8080/TCP 2分19秒 检出 ClusterIP 10.109.56.238 <无> 8080/TCP 2m19s 货币 ClusterIP 10.96.112.137 <无> 8080/TCP 2m19s 电子邮件 ClusterIP 10.103.214.222 <无> 8080/TCP 2分19秒 flagd ClusterIP 10.101.48.231 <无> 8013/TCP,8016/TCP,4000/TCP 2分19秒 前 ClusterIP 10.103.70.199 <无> 8080/TCP 2m19s 增强代理 ClusterIP 10.106.13.80 <无> 8080/TCP 2分19秒 镜像提供者 ClusterIP 10.109.69.146 <无> 8081/TCP 2m19s kafka ClusterIP 10.104.9.210 <无> 9092/TCP,9093/TCP 2分19秒 kubernetes ClusterIP 10.96.0.1 <无> 443/TCP 176d 负载生成器 ClusterIP 10.106.97.167 <none> 8089/TCP 2m19s 付款 ClusterIP 10.102.143.196 <无> 8080/TCP 2m19s 产品目录 ClusterIP 10.109.219.138 <无> 8080/TCP 2m19s 引用 ClusterIP 10.111.139.80 <无> 8080/TCP 2m19s 建议 ClusterIP 10.97.118.12 <无> 8080/TCP 2m19s 货物运输IP 10.107.102.160 <无> 8080/TCP 2m19s valkey-cart ClusterIP 10.104.34.233 <无> 6379/TCP 2分19秒4.2.4 接下来创建 ingress 资源,引入 frontend-proxy 服务 8080 端口api版本:traefik.io/v1alpha1 种类:IngressRoute 元数据: 名称: 练习 规格: 入口点: - 网络 路线: - 匹配:主持人(`demo.cuiliangblog.cn`) 种类:规则 服务: - 名称:前置代理 端口:80804.2.5创建完成ingress资源后添加主机解析并访问验证。4.3配置Ingress输出以 ingress 为例,从 Traefik v2.6 开始,Traefik 初步支持使用 OpenTelemetry 协议导出数据追踪(traces),这使得你可以将 Traefik 的数据发送到兼容 OTel 的湖南。Traefik 部署可参考文档:https://www.cuiliangblog.cn/detail/section/140101250, 访问配置参考文档:https://doc.traefik.io/traefik/observability/access-logs/#opentelemetry# vim 值.yaml 实验性:#实验性功能配置 otlpLogs: true # 日志导出otlp格式 extraArguments: # 自定义启动参数 —“--experimental.otlpLogs=true” —“--accesslog.otlp=true” -“--accesslog.otlp.grpc=true” “--accesslog.otlp.grpc.endpoint=center-collector.opentelemetry.svc:4317” –“--accesslog.otlp.grpc.insecure=true” 指标: # 指标 addInternals: true # 追踪内部流量 otlp: enabled: true #导出otlp格式 grpc: # 使用grpc协议 端点:“center-collector.opentelemetry.svc:4317”#OpenTelemetry地址 insecure: true # 跳过证书 追踪:#仓库追踪 addInternals: true # 追踪内部流量(如重定向) otlp: enabled: true #导出otlp格式 grpc: # 使用grpc协议 端点:“center-collector.opentelemetry.svc:4317”#OpenTelemetry地址 insecure: true # 跳过证书五、MinIO部署5.1配置MinIO对象存储5.1.1配置minIO[root@k8s-master minio]# cat > minio.yaml << EOF kind: PersistentVolumeClaim apiVersion: v1 metadata: name: minio-pvc namespace: minio spec: storageClassName: nfs-client accessModes: - ReadWriteOnce resources: requests: storage: 50Gi --- apiVersion: apps/v1 kind: Deployment metadata: labels: app: minio name: minio namespace: minio spec: selector: matchLabels: app: minio template: metadata: labels: app: minio spec: containers: - name: minio image: quay.io/minio/minio:latest command: - /bin/bash - -c args: - minio server /data --console-address :9090 volumeMounts: - mountPath: /data name: data ports: - containerPort: 9090 name: console - containerPort: 9000 name: api env: - name: MINIO_ROOT_USER # 指定用户名 value: "admin" - name: MINIO_ROOT_PASSWORD # 指定密码,最少8位置 value: "minioadmin" volumes: - name: data persistentVolumeClaim: claimName: minio-pvc --- apiVersion: v1 kind: Service metadata: name: minio-service namespace: minio spec: type: NodePort selector: app: minio ports: - name: console port: 9090 protocol: TCP targetPort: 9090 nodePort: 30300 - name: api port: 9000 protocol: TCP targetPort: 9000 nodePort: 30200 EOF [root@k8s-master minio]# kubectl apply -f minio.yaml deployment.apps/minio created service/minio-service created5.1.2使用NodePort方式访问网页[root@k8s-master minio]# kubectl get pod -n minio NAME READY STATUS RESTARTS AGE minio-86577f8755-l65mf 1/1 Running 0 11m [root@k8s-master minio]# kubectl get svc -n minio NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE minio-service NodePort 10.102.223.132 <none> 9090:30300/TCP,9000:30200/TCP 10m访问k8s节点ip:30300,默认用户名密码都是admin5.1.3使用ingress方式访问[root@k8s-master minio]# cat minio-ingress.yaml apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: minio-console namespace: minio spec: entryPoints: - web routes: - match: Host(`minio.test.com`) # 域名 kind: Rule services: - name: minio-service # 与svc的name一致 port: 9090 # 与svc的port一致 --- apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: minio-api namespace: minio spec: entryPoints: - web routes: - match: Host(`minio-api.test.com`) # 域名 kind: Rule services: - name: minio-service # 与svc的name一致 port: 9000 # 与svc的port一致 [root@k8s-master minio]# kubectl apply -f minio-ingress.yaml ingressroute.traefik.containo.us/minio-console created ingressroute.traefik.containo.us/minio-api created添加hosts记录192.168.10.10 minio.test.com访问域名即可5.2helmminIO 部署集群minIO 集群方式部署使用operator或者helm。如果是一套 k8s 集群部署方式 minio 推荐 shiyonghelm 方式部署,operator 更适合多套 minio 集群多机场场景使用。 helmminIO部署参考文档:https://artifacthub.io/packages/helm/bitnami/minio。5.2.1资源角色规划使用分散方式部署高可用的minIO负载时,驱动器总数至少是4个,以保证纠错码。我们可以在k8s-work1和k8s-work2上的data1和data2路径存放minIO数据,使用本地pv方式持久化数据。# 创建数据存放路径 [root@k8s-work1 ~]# mkdir -p /data1/minio [root@k8s-work1 ~]# mkdir -p /data2/minio [root@k8s-work2 ~]# mkdir -p /data1/minio [root@k8s-work2 ~]# mkdir -p /data2/minio5.2.2下载helm包[root@k8s-master ~]# helm repo add bitnami https://charts.bitnami.com/bitnami [root@k8s-master ~]# helm search repo minio NAME CHART VERSION APP VERSION DESCRIPTION bitnami/minio 14.1.4 2024.3.30 MinIO(R) is an object storage server, compatibl... [root@k8s-master ~]# helm pull bitnami/minio --untar [root@k8s-master ~]# cd minio root@k8s01:~/helm/minio/minio-demo# ls minio minio-17.0.5.tgz root@k8s01:~/helm/minio/minio-demo# cd minio/ root@k8s01:~/helm/minio/minio-demo/minio# ls Chart.lock Chart.yaml ingress.yaml pv.yaml storageClass.yaml values.yaml charts demo.yaml pvc.yaml README.md templates values.yaml.bak 5.2.3创建scprovisioner 字段定义为 no-provisioner,这是尚不支持动态预配置动态生成 PV,所以我们需要提前手动创建 PV。volumeBindingMode 因为关系 定义为 WaitForFirstConsumer,是本地持久卷里一个非常重要的特性,即:延迟绑定。延迟绑定就是在我们提交 PVC 文件时,StorageClass 为我们延迟绑定 PV 与 PVC 的对应。root@k8s01:~/helm/minio/minio-demo/minio# cat storageClass.yaml apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: name: local-storage provisioner: kubernetes.io/no-provisioner volumeBindingMode: WaitForFirstConsumer5.2.4创建pvroot@k8s01:~/helm/minio/minio-demo/minio# cat pv.yaml apiVersion: v1 kind: PersistentVolume metadata: name: minio-pv1 labels: app: minio-0 spec: capacity: storage: 10Gi volumeMode: Filesystem accessModes: - ReadWriteOnce storageClassName: local-storage # storageClass名称,与前面创建的storageClass保持一致 local: path: /data1/minio # 本地存储路径 nodeAffinity: # 调度至work1节点 required: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/hostname operator: In values: - k8s01 --- apiVersion: v1 kind: PersistentVolume metadata: name: minio-pv2 labels: app: minio-1 spec: capacity: storage: 10Gi volumeMode: Filesystem accessModes: - ReadWriteOnce storageClassName: local-storage local: path: /data2/minio nodeAffinity: required: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/hostname operator: In values: - k8s01 --- apiVersion: v1 kind: PersistentVolume metadata: name: minio-pv3 labels: app: minio-2 spec: capacity: storage: 10Gi volumeMode: Filesystem accessModes: - ReadWriteOnce storageClassName: local-storage local: path: /data1/minio nodeAffinity: required: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/hostname operator: In values: - k8s02 --- apiVersion: v1 kind: PersistentVolume metadata: name: minio-pv4 labels: app: minio-3 spec: capacity: storage: 10Gi volumeMode: Filesystem accessModes: - ReadWriteOnce storageClassName: local-storage local: path: /data2/minio nodeAffinity: required: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/hostname operator: In values: - k8s02 root@k8s01:~/helm/minio/minio-demo/minio# kubectl get pv | grep minio minio-pv1 10Gi RWO Retain Bound minio/data-0-minio-demo-1 local-storage 10d minio-pv2 10Gi RWO Retain Bound minio/data-1-minio-demo-1 local-storage 10d minio-pv3 10Gi RWO Retain Bound minio/data-0-minio-demo-0 local-storage 10d minio-pv4 10Gi RWO Retain Bound minio/data-1-minio-demo-0 local-storage 10d5.2.5创建pvc创建的时候注意pvc的名字的构成:pvc的名字 = volume_name-statefulset_name-序号,然后通过selector标签选择,强制将pvc与pv绑定。root@k8s01:~/helm/minio/minio-demo/minio# cat pvc.yaml apiVersion: v1 kind: PersistentVolumeClaim metadata: name: data-minio-0 namespace: minio spec: accessModes: - ReadWriteOnce resources: requests: storage: 10Gi storageClassName: local-storage selector: matchLabels: app: minio-0 --- apiVersion: v1 kind: PersistentVolumeClaim metadata: name: data-minio-1 namespace: minio spec: accessModes: - ReadWriteOnce resources: requests: storage: 10Gi storageClassName: local-storage selector: matchLabels: app: minio-1 --- apiVersion: v1 kind: PersistentVolumeClaim metadata: name: data-minio-2 namespace: minio spec: accessModes: - ReadWriteOnce resources: requests: storage: 10Gi storageClassName: local-storage selector: matchLabels: app: minio-2 --- apiVersion: v1 kind: PersistentVolumeClaim metadata: name: data-minio-3 namespace: minio spec: accessModes: - ReadWriteOnce resources: requests: storage: 10Gi storageClassName: local-storage selector: matchLabels: app: minio-3root@k8s01:~/helm/minio/minio-demo/minio# kubectl get pvc -n minio NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE data-0-minio-demo-0 Bound minio-pv3 10Gi RWO local-storage 10d data-0-minio-demo-1 Bound minio-pv1 10Gi RWO local-storage 10d data-1-minio-demo-0 Bound minio-pv4 10Gi RWO local-storage 10d data-1-minio-demo-1 Bound minio-pv2 10Gi RWO local-storage 10d data-minio-0 Pending local-storage 10d 5.2.6 修改配置68 image: 69 registry: docker.io 70 repository: bitnami/minio 71 tag: 2024.3.30-debian-12-r0 104 mode: distributed # 集群模式,单节点为standalone,分布式集群为distributed 197 statefulset: 215 replicaCount: 2 # 节点数 218 zones: 1 # 区域数,1个即可 221 drivesPerNode: 2 # 每个节点数据目录数.2节点×2目录组成4节点的mimio集群 558 #podAnnotations: {} # 导出Prometheus指标 559 podAnnotations: 560 prometheus.io/scrape: "true" 561 prometheus.io/path: "/minio/v2/metrics/cluster" 562 prometheus.io/port: "9000" 1049 persistence: 1052 enabled: true 1060 storageClass: "local-storage" 1063 mountPath: /bitnami/minio/data 1066 accessModes: 1067 - ReadWriteOnce 1070 size: 10Gi 1073 annotations: {} 1076 existingClaim: ""5.2.7 部署miniOkubectl create ns minioroot@k8s01:~/helm/minio/minio-demo/minio# cat demo.yaml --- # Source: minio/templates/console/networkpolicy.yaml kind: NetworkPolicy apiVersion: networking.k8s.io/v1 metadata: name: minio-demo-console namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2.0.1 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: console app.kubernetes.io/part-of: minio spec: podSelector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: console app.kubernetes.io/part-of: minio policyTypes: - Ingress - Egress egress: - {} ingress: # Allow inbound connections - ports: - port: 9090 --- # Source: minio/templates/networkpolicy.yaml kind: NetworkPolicy apiVersion: networking.k8s.io/v1 metadata: name: minio-demo namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio spec: podSelector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio policyTypes: - Ingress - Egress egress: - {} ingress: # Allow inbound connections - ports: - port: 9000 --- # Source: minio/templates/console/pdb.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: minio-demo-console namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2.0.1 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: console app.kubernetes.io/part-of: minio spec: maxUnavailable: 1 selector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: console app.kubernetes.io/part-of: minio --- # Source: minio/templates/pdb.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: minio-demo namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio spec: maxUnavailable: 1 selector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio --- # Source: minio/templates/serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: name: minio-demo namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/part-of: minio automountServiceAccountToken: false secrets: - name: minio-demo --- # Source: minio/templates/secrets.yaml apiVersion: v1 kind: Secret metadata: name: minio-demo namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio type: Opaque data: root-user: "YWRtaW4=" root-password: "OGZHWWlrY3lpNA==" --- # Source: minio/templates/console/service.yaml apiVersion: v1 kind: Service metadata: name: minio-demo-console namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2.0.1 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: console app.kubernetes.io/part-of: minio spec: type: ClusterIP ports: - name: http port: 9090 targetPort: http nodePort: null selector: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: console app.kubernetes.io/part-of: minio --- # Source: minio/templates/headless-svc.yaml apiVersion: v1 kind: Service metadata: name: minio-demo-headless namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio spec: type: ClusterIP clusterIP: None ports: - name: tcp-api port: 9000 targetPort: api publishNotReadyAddresses: true selector: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio --- # Source: minio/templates/service.yaml apiVersion: v1 kind: Service metadata: name: minio-demo namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio spec: type: ClusterIP ports: - name: tcp-api port: 9000 targetPort: api nodePort: null selector: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio --- # Source: minio/templates/console/deployment.yaml apiVersion: apps/v1 kind: Deployment metadata: name: minio-demo-console namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2.0.1 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: console app.kubernetes.io/part-of: minio spec: replicas: 1 strategy: type: RollingUpdate selector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: console app.kubernetes.io/part-of: minio template: metadata: labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: console app.kubernetes.io/part-of: minio spec: serviceAccountName: minio-demo automountServiceAccountToken: false affinity: podAffinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - podAffinityTerm: labelSelector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: console topologyKey: kubernetes.io/hostname weight: 1 nodeAffinity: securityContext: fsGroup: 1001 fsGroupChangePolicy: Always supplementalGroups: [] sysctls: [] containers: - name: console image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-bitnami-minio-object-browser:2.0.1-debian-12-r2 imagePullPolicy: IfNotPresent securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL privileged: false readOnlyRootFilesystem: true runAsGroup: 1001 runAsNonRoot: true runAsUser: 1001 seLinuxOptions: {} seccompProfile: type: RuntimeDefault args: - server - --host - "0.0.0.0" - --port - "9090" env: - name: CONSOLE_MINIO_SERVER value: "http://minio-demo:9000" resources: limits: cpu: 150m ephemeral-storage: 2Gi memory: 192Mi requests: cpu: 100m ephemeral-storage: 50Mi memory: 128Mi ports: - name: http containerPort: 9090 livenessProbe: failureThreshold: 5 initialDelaySeconds: 5 periodSeconds: 5 successThreshold: 1 timeoutSeconds: 5 tcpSocket: port: http readinessProbe: failureThreshold: 5 initialDelaySeconds: 5 periodSeconds: 5 successThreshold: 1 timeoutSeconds: 5 httpGet: path: /minio port: http volumeMounts: - name: empty-dir mountPath: /tmp subPath: tmp-dir - name: empty-dir mountPath: /.console subPath: app-console-dir volumes: - name: empty-dir emptyDir: {} --- # Source: minio/templates/application.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: minio-demo namespace: "minio" labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio spec: selector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio podManagementPolicy: Parallel replicas: 2 serviceName: minio-demo-headless updateStrategy: type: RollingUpdate template: metadata: labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: minio app.kubernetes.io/version: 2025.5.24 helm.sh/chart: minio-17.0.5 app.kubernetes.io/component: minio app.kubernetes.io/part-of: minio annotations: checksum/credentials-secret: b06d639ea8d96eecf600100351306b11b3607d0ae288f01fe3489b67b6cc4873 prometheus.io/path: /minio/v2/metrics/cluster prometheus.io/port: "9000" prometheus.io/scrape: "true" spec: serviceAccountName: minio-demo affinity: podAffinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - podAffinityTerm: labelSelector: matchLabels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio app.kubernetes.io/component: minio topologyKey: kubernetes.io/hostname weight: 1 nodeAffinity: automountServiceAccountToken: false securityContext: fsGroup: 1001 fsGroupChangePolicy: OnRootMismatch supplementalGroups: [] sysctls: [] initContainers: containers: - name: minio image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-bitnami-minio:2025.5.24-debian-12-r6 imagePullPolicy: "IfNotPresent" securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL privileged: false readOnlyRootFilesystem: true runAsGroup: 1001 runAsNonRoot: true runAsUser: 1001 seLinuxOptions: {} seccompProfile: type: RuntimeDefault env: - name: BITNAMI_DEBUG value: "false" - name: MINIO_DISTRIBUTED_MODE_ENABLED value: "yes" - name: MINIO_DISTRIBUTED_NODES value: "minio-demo-{0...1}.minio-demo-headless.minio.svc.cluster.local:9000/bitnami/minio/data-{0...1}" - name: MINIO_SCHEME value: "http" - name: MINIO_FORCE_NEW_KEYS value: "no" - name: MINIO_ROOT_USER_FILE value: /opt/bitnami/minio/secrets/root-user - name: MINIO_ROOT_PASSWORD_FILE value: /opt/bitnami/minio/secrets/root-password - name: MINIO_SKIP_CLIENT value: "yes" - name: MINIO_API_PORT_NUMBER value: "9000" - name: MINIO_BROWSER value: "off" - name: MINIO_PROMETHEUS_AUTH_TYPE value: "public" - name: MINIO_DATA_DIR value: "/bitnami/minio/data-0" ports: - name: api containerPort: 9000 livenessProbe: httpGet: path: /minio/health/live port: api scheme: "HTTP" initialDelaySeconds: 5 periodSeconds: 5 timeoutSeconds: 5 successThreshold: 1 failureThreshold: 5 readinessProbe: tcpSocket: port: api initialDelaySeconds: 5 periodSeconds: 5 timeoutSeconds: 1 successThreshold: 1 failureThreshold: 5 resources: limits: cpu: 375m ephemeral-storage: 2Gi memory: 384Mi requests: cpu: 250m ephemeral-storage: 50Mi memory: 256Mi volumeMounts: - name: empty-dir mountPath: /tmp subPath: tmp-dir - name: empty-dir mountPath: /opt/bitnami/minio/tmp subPath: app-tmp-dir - name: empty-dir mountPath: /.mc subPath: app-mc-dir - name: minio-credentials mountPath: /opt/bitnami/minio/secrets/ - name: data-0 mountPath: /bitnami/minio/data-0 - name: data-1 mountPath: /bitnami/minio/data-1 volumes: - name: empty-dir emptyDir: {} - name: minio-credentials secret: secretName: minio-demo volumeClaimTemplates: - metadata: name: data-0 labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio spec: accessModes: - "ReadWriteOnce" resources: requests: storage: "10Gi" storageClassName: local-storage - metadata: name: data-1 labels: app.kubernetes.io/instance: minio-demo app.kubernetes.io/name: minio spec: accessModes: - "ReadWriteOnce" resources: requests: storage: "10Gi" storageClassName: local-storage 5.2.8查看资源信息root@k8s01:~/helm/minio/minio-demo/minio# kubectl get all -n minio NAME READY STATUS RESTARTS AGE pod/minio-demo-0 1/1 Running 10 (5h27m ago) 10d pod/minio-demo-1 1/1 Running 10 (5h27m ago) 27h pod/minio-demo-console-7b586c5f9c-l8hnc 1/1 Running 9 (5h27m ago) 10d NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/minio-demo ClusterIP 10.97.92.61 <none> 9000/TCP 10d service/minio-demo-console ClusterIP 10.101.127.112 <none> 9090/TCP 10d service/minio-demo-headless ClusterIP None <none> 9000/TCP 10d NAME READY UP-TO-DATE AVAILABLE AGE deployment.apps/minio-demo-console 1/1 1 1 10d NAME DESIRED CURRENT READY AGE replicaset.apps/minio-demo-console-7b586c5f9c 1 1 1 10d NAME READY AGE statefulset.apps/minio-demo 2/2 10d 5.2.9创建ingress资源#以ingrss-nginx为例: # cat > ingress.yaml << EOF apiVersion: networking.k8s.io/v1 kind: Ingress metadata: name: minio-ingreess namespace: minio annotations: nginx.ingress.kubernetes.io/rewrite-target: / spec: ingressClassName: nginx rules: - host: minio.local.com http: paths: - path: / pathType: Prefix backend: service: name: minio port: number: 9001 EOF#以traefik为例: root@k8s01:~/helm/minio/minio-demo/minio# cat ingress.yaml apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: minio-console namespace: minio spec: entryPoints: - web routes: - match: Host(`minio.local.com`) kind: Rule services: - name: minio-demo-console # 修正为 Console Service 名称 port: 9090 # 修正为 Console 端口 --- apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: minio-api namespace: minio spec: entryPoints: - web routes: - match: Host(`minio-api.local.com`) kind: Rule services: - name: minio-demo # 保持 API Service 名称 port: 9000 # 保持 API 端口5.2.10获取用户名密码# 获取用户名和密码 [root@k8s-master minio]# kubectl get secret --namespace minio minio -o jsonpath="{.data.root-user}" | base64 -d admin [root@k8s-master minio]# kubectl get secret --namespace minio minio -o jsonpath="{.data.root-password}" | base64 -d HWLLGMhgkp5.2.11访问web管理页5.3operator部署minIO企业版需要收费六、部署 Prometheus如果已安装metrics-server需要先卸载,否则冲突https://axzys.cn/index.php/archives/423/七、部署Thanos监控[可选]Thanos 很好的弥补了 Prometheus 在持久化存储和 多个 prometheus 集群之间跨集群查询方面的不足的问题。具体可参考文档https://thanos.io/, 部署参考文档:https://github.com/thanos-io/kube-thanos,本实例使用 receive 模式部署。 如果需要使用 sidecar 模式部署,可参考文档:https://github.com/prometheus-operator/prometheus-operator/blob/main/Documentation/platform/thanos.mdhttps://www.cuiliangblog.cn/detail/section/215968508八、部署 Grafanahttps://axzys.cn/index.php/archives/423/九、部署 OpenTelemetryhttps://www.cuiliangblog.cn/detail/section/215947486root@k8s01:~/helm/opentelemetry/cert-manager# cat new-center-collector.yaml apiVersion: opentelemetry.io/v1beta1 kind: OpenTelemetryCollector # 元数据定义部分 metadata: name: center # Collector 的名称为 center namespace: opentelemetry # 具体的配置内容 spec: replicas: 1 # 设置副本数量为1 # image: otel/opentelemetry-collector-contrib:latest # 使用支持 elasticsearch 的镜像 image: registry.cn-guangzhou.aliyuncs.com/xingcangku/otel-opentelemetry-collector-contrib-latest:latest config: # 定义 Collector 配置 receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs) otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器 protocols: # 启用哪些协议来接收数据 grpc: endpoint: 0.0.0.0:4317 # 启用 gRPC 协议 http: endpoint: 0.0.0.0:4318 # 启用 HTTP 协议 processors: # 处理器,用于处理收集到的数据 batch: {} # 批处理器,用于将数据分批发送,提高效率 exporters: # 导出器,用于将处理后的数据发送到后端系统 debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试) otlp: # 数据发送到tempo的grpc端口 endpoint: "tempo:4317" tls: # 跳过证书验证 insecure: true prometheus: endpoint: "0.0.0.0:9464" # prometheus指标暴露端口 loki: endpoint: http://loki-gateway.loki.svc/loki/api/v1/push headers: X-Scope-OrgID: "fake" # 与Grafana配置一致 labels: attributes: # 从日志属性提取 k8s.pod.name: "pod" k8s.container.name: "container" k8s.namespace.name: "namespace" app: "application" # 映射应用中设置的标签 resource: # 从SDK资源属性提取 service.name: "service" service: # 服务配置部分 telemetry: logs: level: "debug" # 设置 Collector 自身日志等级为 debug(方便观察日志) pipelines: # 定义处理管道 traces: # 定义 trace 类型的管道 receivers: [otlp] # 接收器为 OTLP processors: [batch] # 使用批处理器 exporters: [otlp] # 将数据导出到OTLP metrics: # 定义 metrics 类型的管道 receivers: [otlp] # 接收器为 OTLP processors: [batch] # 使用批处理器 exporters: [prometheus] # 将数据导出到prometheus logs: receivers: [otlp] processors: [batch] # 使用批处理器 exporters: [loki] 十、部署 Tempo 10.1Tempo 介绍Grafana Tempo是一个开源、易于使用的大规模分布式跟踪后端。Tempo具有成本效益,仅需要对象存储即可运行,并且与Grafana,Prometheus和Loki深度集成,Tempo可以与任何开源跟踪协议一起使用,包括Jaeger、Zipkin和OpenTelemetry。它仅支持键/值查找,并且旨在与用于发现的日志和度量标准(示例性)协同工作。https://axzys.cn/index.php/archives/418/十一、部署Loki日志收集 11.1 loki 介绍 11.1.1组件功能Loki架构十分简单,由以下三个部分组成: Loki 是主服务器,负责存储日志和处理查询 。 promtail 是代理,负责收集日志并将其发送给 loki 。 Grafana 用于 UI 展示。 只要在应用程序服务器上安装promtail来收集日志然后发送给Loki存储,就可以在Grafana UI界面通过添加Loki为数据源进行日志查询11.1.2系统架构Distributor(接收日志入口):负责接收客户端发送的日志,进行标签解析、预处理、分片计算,转发给 Ingester。 Ingester(日志暂存处理):处理 Distributor 发送的日志,缓存到内存,定期刷写到对象存储或本地。支持查询时返回缓存数据。 Querier(日志查询器):负责处理来自 Grafana 或其他客户端的查询请求,并从 Ingester 和 Store 中读取数据。 Index:boltdb-shipper 模式的 Index 提供者 在分布式部署中,读取和缓存 index 数据,避免 S3 等远程存储频繁请求。 Chunks 是Loki 中一种核心的数据结构和存储形式,主要由 ingester 负责生成和管理。它不是像 distributor、querier 那样的可部署服务,但在 Loki 架构和存储中极其关键。11.1.3 部署 lokiloki 也分为整体式 、微服务式、可扩展式三种部署模式,具体可参考文档https://grafana.com/docs/loki/latest/setup/install/helm/concepts/,此处以可扩展式为例: loki 使用 minio 对象存储配置可参考文档:https://blog.min.io/how-to-grafana-loki-minio/# helm repo add grafana https://grafana.github.io/helm-charts "grafana" has been added to your repositories # helm pull grafana/loki --untar # ls charts Chart.yaml README.md requirements.lock requirements.yaml templates values.yaml--- # Source: loki/templates/backend/poddisruptionbudget-backend.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: loki-backend namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: backend spec: selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend maxUnavailable: 1 --- # Source: loki/templates/chunks-cache/poddisruptionbudget-chunks-cache.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: loki-memcached-chunks-cache namespace: loki labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: memcached-chunks-cache spec: selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: memcached-chunks-cache maxUnavailable: 1 --- # Source: loki/templates/read/poddisruptionbudget-read.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: loki-read namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: read spec: selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: read maxUnavailable: 1 --- # Source: loki/templates/results-cache/poddisruptionbudget-results-cache.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: loki-memcached-results-cache namespace: loki labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: memcached-results-cache spec: selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: memcached-results-cache maxUnavailable: 1 --- # Source: loki/templates/write/poddisruptionbudget-write.yaml apiVersion: policy/v1 kind: PodDisruptionBudget metadata: name: loki-write namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: write spec: selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: write maxUnavailable: 1 --- # Source: loki/templates/loki-canary/serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: name: loki-canary namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: canary automountServiceAccountToken: true --- # Source: loki/templates/serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: name: loki namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" automountServiceAccountToken: true --- # Source: loki/templates/config.yaml apiVersion: v1 kind: ConfigMap metadata: name: loki namespace: loki data: config.yaml: | auth_enabled: true bloom_build: builder: planner_address: loki-backend-headless.loki.svc.cluster.local:9095 enabled: false bloom_gateway: client: addresses: dnssrvnoa+_grpc._tcp.loki-backend-headless.loki.svc.cluster.local enabled: false chunk_store_config: chunk_cache_config: background: writeback_buffer: 500000 writeback_goroutines: 1 writeback_size_limit: 500MB memcached: batch_size: 4 parallelism: 5 memcached_client: addresses: dnssrvnoa+_memcached-client._tcp.loki-chunks-cache.loki.svc consistent_hash: true max_idle_conns: 72 timeout: 2000ms common: compactor_address: 'http://loki-backend:3100' path_prefix: /var/loki replication_factor: 3 frontend: scheduler_address: "" tail_proxy_url: "" frontend_worker: scheduler_address: "" index_gateway: mode: simple limits_config: max_cache_freshness_per_query: 10m query_timeout: 300s reject_old_samples: true reject_old_samples_max_age: 168h split_queries_by_interval: 15m volume_enabled: true memberlist: join_members: - loki-memberlist pattern_ingester: enabled: false query_range: align_queries_with_step: true cache_results: true results_cache: cache: background: writeback_buffer: 500000 writeback_goroutines: 1 writeback_size_limit: 500MB memcached_client: addresses: dnssrvnoa+_memcached-client._tcp.loki-results-cache.loki.svc consistent_hash: true timeout: 500ms update_interval: 1m ruler: storage: s3: access_key_id: admin bucketnames: null endpoint: minio-demo.minio.svc:9000 insecure: true s3: s3://admin:8fGYikcyi4@minio-demo.minio.svc:9000/loki s3forcepathstyle: true secret_access_key: 8fGYikcyi4 type: s3 wal: dir: /var/loki/ruler-wal runtime_config: file: /etc/loki/runtime-config/runtime-config.yaml schema_config: configs: - from: "2024-04-01" index: period: 24h prefix: index_ object_store: s3 schema: v13 store: tsdb server: grpc_listen_port: 9095 http_listen_port: 3100 http_server_read_timeout: 600s http_server_write_timeout: 600s storage_config: aws: access_key_id: admin secret_access_key: 8fGYikcyi4 region: "" endpoint: minio-demo.minio.svc:9000 insecure: true s3forcepathstyle: true bucketnames: loki bloom_shipper: working_directory: /var/loki/data/bloomshipper boltdb_shipper: index_gateway_client: server_address: dns+loki-backend-headless.loki.svc.cluster.local:9095 hedging: at: 250ms max_per_second: 20 up_to: 3 tsdb_shipper: index_gateway_client: server_address: dns+loki-backend-headless.loki.svc.cluster.local:9095 tracing: enabled: false --- # Source: loki/templates/gateway/configmap-gateway.yaml apiVersion: v1 kind: ConfigMap metadata: name: loki-gateway namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: gateway data: nginx.conf: | worker_processes 5; ## loki: 1 error_log /dev/stderr; pid /tmp/nginx.pid; worker_rlimit_nofile 8192; events { worker_connections 4096; ## loki: 1024 } http { client_body_temp_path /tmp/client_temp; proxy_temp_path /tmp/proxy_temp_path; fastcgi_temp_path /tmp/fastcgi_temp; uwsgi_temp_path /tmp/uwsgi_temp; scgi_temp_path /tmp/scgi_temp; client_max_body_size 4M; proxy_read_timeout 600; ## 10 minutes proxy_send_timeout 600; proxy_connect_timeout 600; proxy_http_version 1.1; #loki_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] $status ' '"$request" $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /dev/stderr main; sendfile on; tcp_nopush on; resolver kube-dns.kube-system.svc.cluster.local.; server { listen 8080; listen [::]:8080; location = / { return 200 'OK'; auth_basic off; } ######################################################## # Configure backend targets location ^~ /ui { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } # Distributor location = /api/prom/push { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } location = /loki/api/v1/push { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } location = /distributor/ring { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } location = /otlp/v1/logs { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } # Ingester location = /flush { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } location ^~ /ingester/ { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } location = /ingester { internal; # to suppress 301 } # Ring location = /ring { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } # MemberListKV location = /memberlist { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } # Ruler location = /ruler/ring { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location = /api/prom/rules { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location ^~ /api/prom/rules/ { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location = /loki/api/v1/rules { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location ^~ /loki/api/v1/rules/ { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location = /prometheus/api/v1/alerts { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location = /prometheus/api/v1/rules { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } # Compactor location = /compactor/ring { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location = /loki/api/v1/delete { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } location = /loki/api/v1/cache/generation_numbers { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } # IndexGateway location = /indexgateway/ring { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } # QueryScheduler location = /scheduler/ring { proxy_pass http://loki-backend.loki.svc.cluster.local:3100$request_uri; } # Config location = /config { proxy_pass http://loki-write.loki.svc.cluster.local:3100$request_uri; } # QueryFrontend, Querier location = /api/prom/tail { proxy_pass http://loki-read.loki.svc.cluster.local:3100$request_uri; proxy_set_header Upgrade $http_upgrade; proxy_set_header Connection "upgrade"; } location = /loki/api/v1/tail { proxy_pass http://loki-read.loki.svc.cluster.local:3100$request_uri; proxy_set_header Upgrade $http_upgrade; proxy_set_header Connection "upgrade"; } location ^~ /api/prom/ { proxy_pass http://loki-read.loki.svc.cluster.local:3100$request_uri; } location = /api/prom { internal; # to suppress 301 } # if the X-Query-Tags header is empty, set a noop= without a value as empty values are not logged set $query_tags $http_x_query_tags; if ($query_tags !~* '') { set $query_tags "noop="; } location ^~ /loki/api/v1/ { # pass custom headers set by Grafana as X-Query-Tags which are logged as key/value pairs in metrics.go log messages proxy_set_header X-Query-Tags "${query_tags},user=${http_x_grafana_user},dashboard_id=${http_x_dashboard_uid},dashboard_title=${http_x_dashboard_title},panel_id=${http_x_panel_id},panel_title=${http_x_panel_title},source_rule_uid=${http_x_rule_uid},rule_name=${http_x_rule_name},rule_folder=${http_x_rule_folder},rule_version=${http_x_rule_version},rule_source=${http_x_rule_source},rule_type=${http_x_rule_type}"; proxy_pass http://loki-read.loki.svc.cluster.local:3100$request_uri; } location = /loki/api/v1 { internal; # to suppress 301 } } } --- # Source: loki/templates/runtime-configmap.yaml apiVersion: v1 kind: ConfigMap metadata: name: loki-runtime namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" data: runtime-config.yaml: | {} --- # Source: loki/templates/backend/clusterrole.yaml kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" name: loki-clusterrole rules: - apiGroups: [""] # "" indicates the core API group resources: ["configmaps", "secrets"] verbs: ["get", "watch", "list"] --- # Source: loki/templates/backend/clusterrolebinding.yaml kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: loki-clusterrolebinding labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" subjects: - kind: ServiceAccount name: loki namespace: loki roleRef: kind: ClusterRole name: loki-clusterrole apiGroup: rbac.authorization.k8s.io --- # Source: loki/templates/backend/query-scheduler-discovery.yaml apiVersion: v1 kind: Service metadata: name: loki-query-scheduler-discovery namespace: loki labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend prometheus.io/service-monitor: "false" annotations: spec: type: ClusterIP clusterIP: None publishNotReadyAddresses: true ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend --- # Source: loki/templates/backend/service-backend-headless.yaml apiVersion: v1 kind: Service metadata: name: loki-backend-headless namespace: loki labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend variant: headless prometheus.io/service-monitor: "false" annotations: spec: type: ClusterIP clusterIP: None ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP appProtocol: tcp selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend --- # Source: loki/templates/backend/service-backend.yaml apiVersion: v1 kind: Service metadata: name: loki-backend namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: backend annotations: spec: type: ClusterIP ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend --- # Source: loki/templates/chunks-cache/service-chunks-cache-headless.yaml apiVersion: v1 kind: Service metadata: name: loki-chunks-cache labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: "memcached-chunks-cache" annotations: {} namespace: "loki" spec: type: ClusterIP clusterIP: None ports: - name: memcached-client port: 11211 targetPort: 11211 - name: http-metrics port: 9150 targetPort: 9150 selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: "memcached-chunks-cache" --- # Source: loki/templates/gateway/service-gateway.yaml apiVersion: v1 kind: Service metadata: name: loki-gateway namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: gateway prometheus.io/service-monitor: "false" annotations: spec: type: ClusterIP ports: - name: http-metrics port: 80 targetPort: http-metrics protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: gateway --- # Source: loki/templates/loki-canary/service.yaml apiVersion: v1 kind: Service metadata: name: loki-canary namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: canary annotations: spec: type: ClusterIP ports: - name: http-metrics port: 3500 targetPort: http-metrics protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: canary --- # Source: loki/templates/read/service-read-headless.yaml apiVersion: v1 kind: Service metadata: name: loki-read-headless namespace: loki labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: read variant: headless prometheus.io/service-monitor: "false" annotations: spec: type: ClusterIP clusterIP: None ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP appProtocol: tcp selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: read --- # Source: loki/templates/read/service-read.yaml apiVersion: v1 kind: Service metadata: name: loki-read namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: read annotations: spec: type: ClusterIP ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: read --- # Source: loki/templates/results-cache/service-results-cache-headless.yaml apiVersion: v1 kind: Service metadata: name: loki-results-cache labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: "memcached-results-cache" annotations: {} namespace: "loki" spec: type: ClusterIP clusterIP: None ports: - name: memcached-client port: 11211 targetPort: 11211 - name: http-metrics port: 9150 targetPort: 9150 selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: "memcached-results-cache" --- # Source: loki/templates/service-memberlist.yaml apiVersion: v1 kind: Service metadata: name: loki-memberlist namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" annotations: spec: type: ClusterIP clusterIP: None ports: - name: tcp port: 7946 targetPort: http-memberlist protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/part-of: memberlist --- # Source: loki/templates/write/service-write-headless.yaml apiVersion: v1 kind: Service metadata: name: loki-write-headless namespace: loki labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: write variant: headless prometheus.io/service-monitor: "false" annotations: spec: type: ClusterIP clusterIP: None ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP appProtocol: tcp selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: write --- # Source: loki/templates/write/service-write.yaml apiVersion: v1 kind: Service metadata: name: loki-write namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: write annotations: spec: type: ClusterIP ports: - name: http-metrics port: 3100 targetPort: http-metrics protocol: TCP - name: grpc port: 9095 targetPort: grpc protocol: TCP selector: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: write --- # Source: loki/templates/loki-canary/daemonset.yaml apiVersion: apps/v1 kind: DaemonSet metadata: name: loki-canary namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: canary spec: selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: canary updateStrategy: rollingUpdate: maxUnavailable: 1 type: RollingUpdate template: metadata: labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: canary spec: serviceAccountName: loki-canary securityContext: fsGroup: 10001 runAsGroup: 10001 runAsNonRoot: true runAsUser: 10001 containers: - name: loki-canary image: registry.cn-guangzhou.aliyuncs.com/xingcangku/grafana-loki-canary-3.5.0:3.5.0 imagePullPolicy: IfNotPresent args: - -addr=loki-gateway.loki.svc.cluster.local.:80 - -labelname=pod - -labelvalue=$(POD_NAME) - -user=self-monitoring - -tenant-id=self-monitoring - -pass= - -push=true securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true volumeMounts: ports: - name: http-metrics containerPort: 3500 protocol: TCP env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name readinessProbe: httpGet: path: /metrics port: http-metrics initialDelaySeconds: 15 timeoutSeconds: 1 volumes: --- # Source: loki/templates/gateway/deployment-gateway-nginx.yaml apiVersion: apps/v1 kind: Deployment metadata: name: loki-gateway namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: gateway spec: replicas: 1 strategy: type: RollingUpdate revisionHistoryLimit: 10 selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: gateway template: metadata: annotations: checksum/config: 440a9cd2e87de46e0aad42617818d58f1e2daacb1ae594bad1663931faa44ebc labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: gateway spec: serviceAccountName: loki enableServiceLinks: true securityContext: fsGroup: 101 runAsGroup: 101 runAsNonRoot: true runAsUser: 101 terminationGracePeriodSeconds: 30 containers: - name: nginx image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-nginxinc-nginx-unprivileged-1.28-alpine:1.28-alpine imagePullPolicy: IfNotPresent ports: - name: http-metrics containerPort: 8080 protocol: TCP readinessProbe: httpGet: path: / port: http-metrics initialDelaySeconds: 15 timeoutSeconds: 1 securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true volumeMounts: - name: config mountPath: /etc/nginx - name: tmp mountPath: /tmp - name: docker-entrypoint-d-override mountPath: /docker-entrypoint.d resources: {} affinity: podAntiAffinity: requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: matchLabels: app.kubernetes.io/component: gateway topologyKey: kubernetes.io/hostname volumes: - name: config configMap: name: loki-gateway - name: tmp emptyDir: {} - name: docker-entrypoint-d-override emptyDir: {} --- # Source: loki/templates/read/deployment-read.yaml apiVersion: apps/v1 kind: Deployment metadata: name: loki-read namespace: loki labels: app.kubernetes.io/part-of: memberlist helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: read spec: replicas: 3 strategy: rollingUpdate: maxSurge: 0 maxUnavailable: 1 revisionHistoryLimit: 10 selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: read template: metadata: annotations: checksum/config: 1616415aaf41d5dec62fea8a013eab1aa2a559579f5f72299f7041e5cd6ea4c7 labels: app.kubernetes.io/part-of: memberlist app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: read spec: serviceAccountName: loki automountServiceAccountToken: true securityContext: fsGroup: 10001 runAsGroup: 10001 runAsNonRoot: true runAsUser: 10001 terminationGracePeriodSeconds: 30 containers: - name: loki image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-grafana-loki-3.5.0:3.5.0 imagePullPolicy: IfNotPresent args: - -config.file=/etc/loki/config/config.yaml - -target=read - -legacy-read-mode=false - -common.compactor-grpc-address=loki-backend.loki.svc.cluster.local:9095 ports: - name: http-metrics containerPort: 3100 protocol: TCP - name: grpc containerPort: 9095 protocol: TCP - name: http-memberlist containerPort: 7946 protocol: TCP securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true readinessProbe: httpGet: path: /ready port: http-metrics initialDelaySeconds: 30 timeoutSeconds: 1 volumeMounts: - name: config mountPath: /etc/loki/config - name: runtime-config mountPath: /etc/loki/runtime-config - name: tmp mountPath: /tmp - name: data mountPath: /var/loki resources: {} affinity: podAntiAffinity: requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: matchLabels: app.kubernetes.io/component: read topologyKey: kubernetes.io/hostname volumes: - name: tmp emptyDir: {} - name: data emptyDir: {} - name: config configMap: name: loki items: - key: "config.yaml" path: "config.yaml" - name: runtime-config configMap: name: loki-runtime --- # Source: loki/templates/backend/statefulset-backend.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: loki-backend namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: backend app.kubernetes.io/part-of: memberlist spec: replicas: 3 podManagementPolicy: Parallel updateStrategy: rollingUpdate: partition: 0 serviceName: loki-backend-headless revisionHistoryLimit: 10 persistentVolumeClaimRetentionPolicy: whenDeleted: Delete whenScaled: Delete selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: backend template: metadata: annotations: checksum/config: 1616415aaf41d5dec62fea8a013eab1aa2a559579f5f72299f7041e5cd6ea4c7 labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: backend app.kubernetes.io/part-of: memberlist spec: serviceAccountName: loki automountServiceAccountToken: true securityContext: fsGroup: 10001 runAsGroup: 10001 runAsNonRoot: true runAsUser: 10001 terminationGracePeriodSeconds: 300 containers: - name: loki-sc-rules image: "registry.cn-guangzhou.aliyuncs.com/xingcangku/kiwigrid-k8s-sidecar-1.30.3:1.30.3" imagePullPolicy: IfNotPresent env: - name: METHOD value: WATCH - name: LABEL value: "loki_rule" - name: FOLDER value: "/rules" - name: RESOURCE value: "both" - name: WATCH_SERVER_TIMEOUT value: "60" - name: WATCH_CLIENT_TIMEOUT value: "60" - name: LOG_LEVEL value: "INFO" securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true volumeMounts: - name: sc-rules-volume mountPath: "/rules" - name: loki image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-grafana-loki-3.5.0:3.5.0 imagePullPolicy: IfNotPresent args: - -config.file=/etc/loki/config/config.yaml - -target=backend - -legacy-read-mode=false ports: - name: http-metrics containerPort: 3100 protocol: TCP - name: grpc containerPort: 9095 protocol: TCP - name: http-memberlist containerPort: 7946 protocol: TCP securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true readinessProbe: httpGet: path: /ready port: http-metrics initialDelaySeconds: 30 timeoutSeconds: 1 volumeMounts: - name: config mountPath: /etc/loki/config - name: runtime-config mountPath: /etc/loki/runtime-config - name: tmp mountPath: /tmp - name: data mountPath: /var/loki - name: sc-rules-volume mountPath: "/rules" resources: {} affinity: podAntiAffinity: requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: matchLabels: app.kubernetes.io/component: backend topologyKey: kubernetes.io/hostname volumes: - name: tmp emptyDir: {} - name: config configMap: name: loki items: - key: "config.yaml" path: "config.yaml" - name: runtime-config configMap: name: loki-runtime - name: sc-rules-volume emptyDir: {} volumeClaimTemplates: - metadata: name: data spec: storageClassName: "ceph-cephfs" # 显式指定存储类 accessModes: - ReadWriteOnce resources: requests: storage: 10Gi --- # Source: loki/templates/chunks-cache/statefulset-chunks-cache.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: loki-chunks-cache labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: "memcached-chunks-cache" name: "memcached-chunks-cache" annotations: {} namespace: "loki" spec: podManagementPolicy: Parallel replicas: 1 selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: "memcached-chunks-cache" name: "memcached-chunks-cache" updateStrategy: type: RollingUpdate serviceName: loki-chunks-cache template: metadata: labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: "memcached-chunks-cache" name: "memcached-chunks-cache" annotations: spec: serviceAccountName: loki securityContext: fsGroup: 11211 runAsGroup: 11211 runAsNonRoot: true runAsUser: 11211 initContainers: [] nodeSelector: {} affinity: {} topologySpreadConstraints: [] tolerations: [] terminationGracePeriodSeconds: 60 containers: - name: memcached image: registry.cn-guangzhou.aliyuncs.com/xingcangku/memcached-1.6.38-alpine:1.6.38-alpine imagePullPolicy: IfNotPresent resources: limits: memory: 4096Mi requests: cpu: 500m memory: 2048Mi ports: - containerPort: 11211 name: client args: - -m 4096 - --extended=modern,track_sizes - -I 5m - -c 16384 - -v - -u 11211 env: envFrom: securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true - name: exporter image: registry.cn-guangzhou.aliyuncs.com/xingcangku/prom-memcached-exporter-v0.15.2:v0.15.2 imagePullPolicy: IfNotPresent ports: - containerPort: 9150 name: http-metrics args: - "--memcached.address=localhost:11211" - "--web.listen-address=0.0.0.0:9150" resources: limits: {} requests: {} securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true --- # Source: loki/templates/results-cache/statefulset-results-cache.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: loki-results-cache labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: "memcached-results-cache" name: "memcached-results-cache" annotations: {} namespace: "loki" spec: podManagementPolicy: Parallel replicas: 1 selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: "memcached-results-cache" name: "memcached-results-cache" updateStrategy: type: RollingUpdate serviceName: loki-results-cache template: metadata: labels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: "memcached-results-cache" name: "memcached-results-cache" annotations: spec: serviceAccountName: loki securityContext: fsGroup: 11211 runAsGroup: 11211 runAsNonRoot: true runAsUser: 11211 initContainers: [] nodeSelector: {} affinity: {} topologySpreadConstraints: [] tolerations: [] terminationGracePeriodSeconds: 60 containers: - name: memcached image: registry.cn-guangzhou.aliyuncs.com/xingcangku/memcached-1.6.38-alpine:1.6.38-alpine imagePullPolicy: IfNotPresent resources: limits: memory: 1229Mi requests: cpu: 500m memory: 1229Mi ports: - containerPort: 11211 name: client args: - -m 1024 - --extended=modern,track_sizes - -I 5m - -c 16384 - -v - -u 11211 env: envFrom: securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true - name: exporter image: registry.cn-guangzhou.aliyuncs.com/xingcangku/prom-memcached-exporter-v0.15.2:v0.15.2 imagePullPolicy: IfNotPresent ports: - containerPort: 9150 name: http-metrics args: - "--memcached.address=localhost:11211" - "--web.listen-address=0.0.0.0:9150" resources: limits: {} requests: {} securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true --- # Source: loki/templates/write/statefulset-write.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: loki-write namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: write app.kubernetes.io/part-of: memberlist spec: replicas: 3 podManagementPolicy: Parallel updateStrategy: rollingUpdate: partition: 0 serviceName: loki-write-headless revisionHistoryLimit: 10 selector: matchLabels: app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/component: write template: metadata: annotations: checksum/config: 1616415aaf41d5dec62fea8a013eab1aa2a559579f5f72299f7041e5cd6ea4c7 labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: write app.kubernetes.io/part-of: memberlist spec: serviceAccountName: loki automountServiceAccountToken: true enableServiceLinks: true securityContext: fsGroup: 10001 runAsGroup: 10001 runAsNonRoot: true runAsUser: 10001 terminationGracePeriodSeconds: 300 containers: - name: loki image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-grafana-loki-3.5.0:3.5.0 imagePullPolicy: IfNotPresent args: - -config.file=/etc/loki/config/config.yaml - -target=write ports: - name: http-metrics containerPort: 3100 protocol: TCP - name: grpc containerPort: 9095 protocol: TCP - name: http-memberlist containerPort: 7946 protocol: TCP securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true readinessProbe: httpGet: path: /ready port: http-metrics initialDelaySeconds: 30 timeoutSeconds: 1 volumeMounts: - name: config mountPath: /etc/loki/config - name: runtime-config mountPath: /etc/loki/runtime-config - name: data mountPath: /var/loki resources: {} affinity: podAntiAffinity: requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: matchLabels: app.kubernetes.io/component: write topologyKey: kubernetes.io/hostname volumes: - name: config configMap: name: loki items: - key: "config.yaml" path: "config.yaml" - name: runtime-config configMap: name: loki-runtime volumeClaimTemplates: - apiVersion: v1 kind: PersistentVolumeClaim metadata: name: data spec: accessModes: - ReadWriteOnce resources: requests: storage: "10Gi" --- # Source: loki/templates/tests/test-canary.yaml apiVersion: v1 kind: Pod metadata: name: "loki-helm-test" namespace: loki labels: helm.sh/chart: loki-6.30.1 app.kubernetes.io/name: loki app.kubernetes.io/instance: loki app.kubernetes.io/version: "3.5.0" app.kubernetes.io/component: helm-test annotations: "helm.sh/hook": test spec: containers: - name: loki-helm-test image: registry.cn-guangzhou.aliyuncs.com/xingcangku/docker.io-grafana-loki-helm-test-ewelch-distributed-helm-chart-1:ewelch-distributed-helm-chart-17db5ee env: - name: CANARY_SERVICE_ADDRESS value: "http://loki-canary:3500/metrics" - name: CANARY_PROMETHEUS_ADDRESS value: "" - name: CANARY_TEST_TIMEOUT value: "1m" args: - -test.v restartPolicy: Never root@k8s01:~/helm/loki/loki# kubectl get pod -n loki NAME READY STATUS RESTARTS AGE loki-backend-0 2/2 Running 2 (6h13m ago) 30h loki-backend-1 2/2 Running 2 (6h13m ago) 30h loki-backend-2 2/2 Running 2 (6h13m ago) 30h loki-canary-62z48 1/1 Running 1 (6h13m ago) 30h loki-canary-lg62j 1/1 Running 1 (6h13m ago) 30h loki-canary-nrph4 1/1 Running 1 (6h13m ago) 30h loki-chunks-cache-0 2/2 Running 0 6h12m loki-gateway-75d8cf9754-nwpdw 1/1 Running 13 (6h12m ago) 30h loki-read-dc7bdc98-8kzwk 1/1 Running 1 (6h13m ago) 30h loki-read-dc7bdc98-lmzcd 1/1 Running 1 (6h13m ago) 30h loki-read-dc7bdc98-nrz5h 1/1 Running 1 (6h13m ago) 30h loki-results-cache-0 2/2 Running 2 (6h13m ago) 30h loki-write-0 1/1 Running 1 (6h13m ago) 30h loki-write-1 1/1 Running 1 (6h13m ago) 30h loki-write-2 1/1 Running 1 (6h13m ago) 30h root@k8s01:~/helm/loki/loki# kubectl get svc -n loki NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE loki-backend ClusterIP 10.101.131.151 <none> 3100/TCP,9095/TCP 30h loki-backend-headless ClusterIP None <none> 3100/TCP,9095/TCP 30h loki-canary ClusterIP 10.109.131.175 <none> 3500/TCP 30h loki-chunks-cache ClusterIP None <none> 11211/TCP,9150/TCP 30h loki-gateway ClusterIP 10.98.126.160 <none> 80/TCP 30h loki-memberlist ClusterIP None <none> 7946/TCP 30h loki-query-scheduler-discovery ClusterIP None <none> 3100/TCP,9095/TCP 30h loki-read ClusterIP 10.103.248.164 <none> 3100/TCP,9095/TCP 30h loki-read-headless ClusterIP None <none> 3100/TCP,9095/TCP 30h loki-results-cache ClusterIP None <none> 11211/TCP,9150/TCP 30h loki-write ClusterIP 10.108.223.18 <none> 3100/TCP,9095/TCP 30h loki-write-headless ClusterIP None <none> 3100/TCP,9095/TCP 30h code here...