
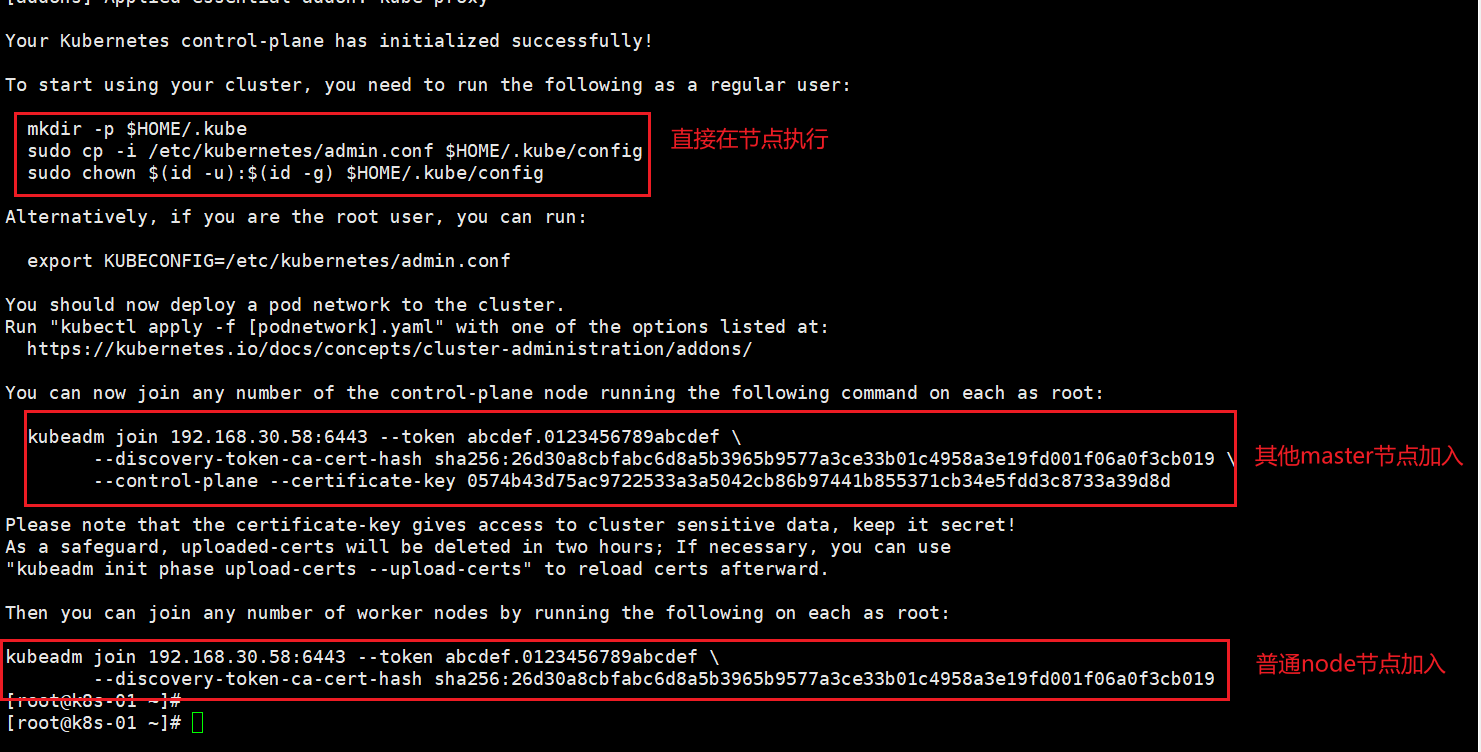

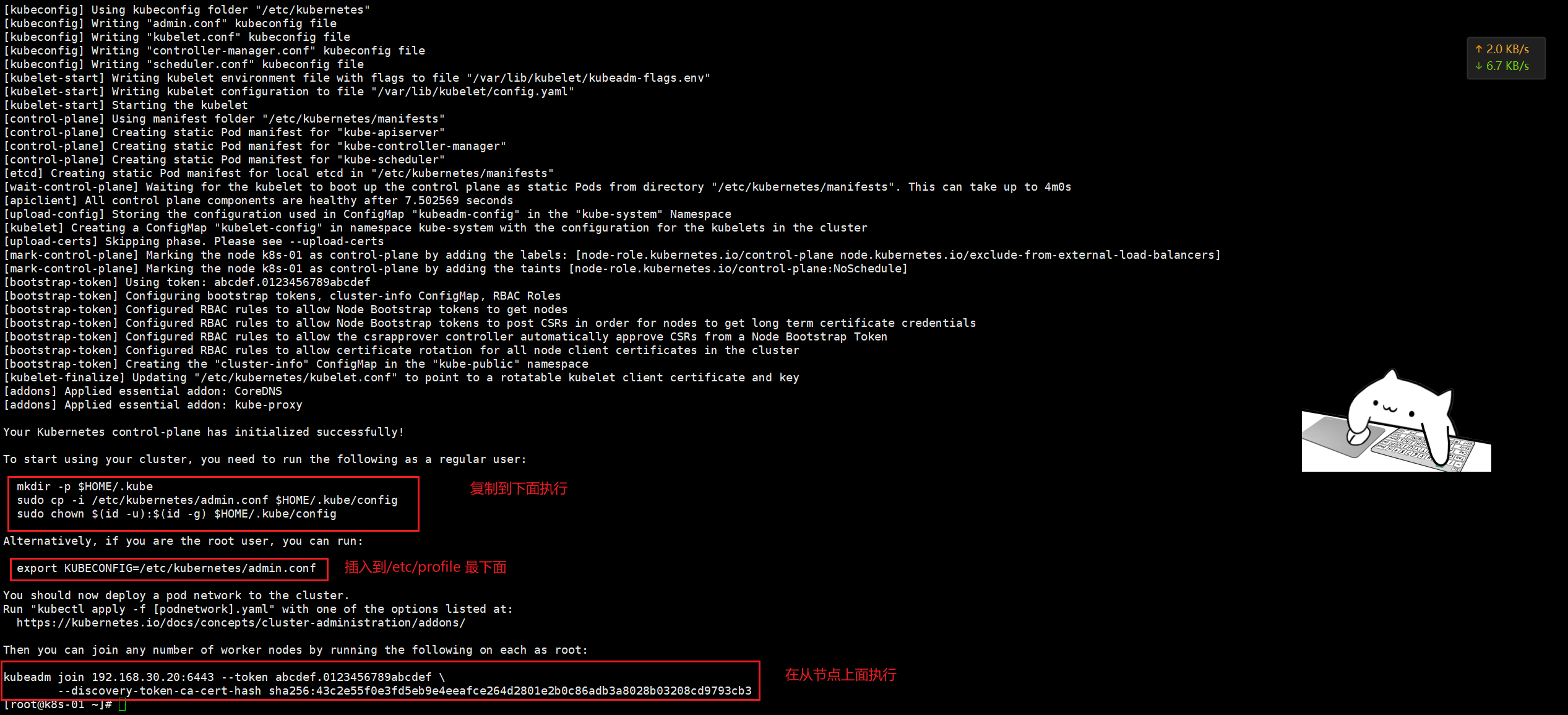

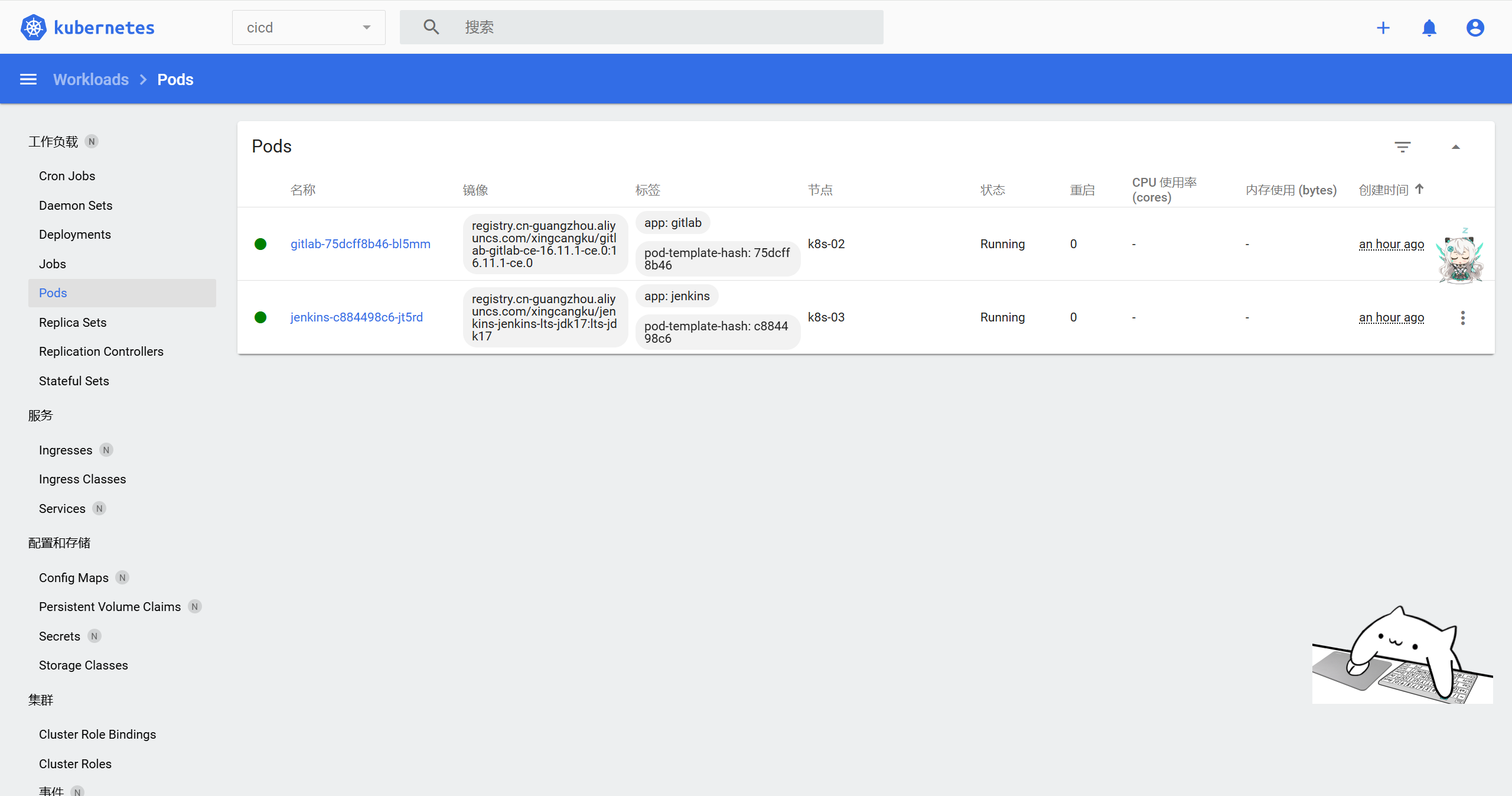

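Installing a multi-master architecture on Rocky Linux 9

1. Set a static IP address

    # Configure
    sudo nmcli connection modify ens160 \
      ipv4.method manual \
      ipv4.addresses 192.168.30.50/24 \
      ipv4.gateway 192.168.30.2 \
      ipv4.dns "8.8.8.8,8.8.4.4"
    # Apply the configuration
    sudo nmcli connection down ens160 && sudo nmcli connection up ens160

2. Preparation

2.0 Set the hostnames

    # One per node
    hostnamectl set-hostname k8s-01
    hostnamectl set-hostname k8s-02
    hostnamectl set-hostname k8s-03
    # Add the VIP address in advance; do this on all three nodes
    cat >>/etc/hosts <<'EOF'
    192.168.30.50 k8s-01
    192.168.30.51 k8s-02
    192.168.30.52 k8s-03
    192.168.30.58 k8s-vip
    EOF

2.1 Configure the yum repositories

    # sudo mkdir /etc/yum.repos.d/backup
    # sudo mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/
    # Run the following directly
    # Use the method recommended by Alibaba Cloud
    # (on Rocky 9 the repo files are usually named rocky*.repo; adjust the glob if Rocky-*.repo matches nothing)
    sudo sed -e 's!^mirrorlist=!#mirrorlist=!g' \
      -e 's!^#baseurl=http://dl.rockylinux.org/$contentdir!baseurl=https://mirrors.aliyun.com/rockylinux!g' \
      -i /etc/yum.repos.d/Rocky-*.repo
    # Clean and rebuild the cache
    sudo dnf clean all
    sudo dnf makecache
    # Test with an update
    sudo dnf -y update
    sudo dnf -y install wget curl vim tar gzip

2.2 Set the time zone

    # Show the current time zone settings
    timedatectl
    # Set the time zone to China (Shanghai time)
    sudo timedatectl set-timezone Asia/Shanghai

2.3 Set the time

    # Install and configure Chrony (recommended)
    # RHEL/CentOS/Alma/Rocky
    sudo dnf -y install chrony || sudo yum -y install chrony
    sudo systemctl enable --now chronyd
    # Edit the configuration file
    sudo vi /etc/chrony.conf

    # Comment out the default pool/server lines (they are useless without internet access),
    # then add the following (or confirm it is already present):
    # Use 192.168.30.50 as the local time source; it serves time on its own when no upstream is available
    local stratum 10
    # Allow clients on this subnet
    allow 192.168.30.0/24
    # Bind the listener to this interface (optional, but recommended)
    bindaddress 192.168.30.50
    # Allow the clock to be stepped quickly when the initial offset is large
    makestep 1 3
    # Keep the hardware clock in sync with system time (more accurate after a power loss)
    rtcsync

    # Save and restart
    sudo systemctl restart chronyd
    # Allow NTP through the firewall (firewalld, RHEL family)
    sudo firewall-cmd --add-service=ntp --permanent
    sudo firewall-cmd --reload
    # Verify the server state
    # Show the chrony source and its own status
    chronyc tracking
    # Show connected clients (visible after the service has run for a while)
    chronyc clients
    # Confirm it listens on 123/udp
    sudo ss -lunp | grep :123

    # Client installation
    # RHEL family
    sudo dnf -y install chrony || sudo yum -y install chrony
    # Debian/Ubuntu
    sudo apt -y install chrony
    # Configuration (RHEL: /etc/chrony.conf; Ubuntu/Debian: /etc/chrony/chrony.conf)
    # Comment out the original pool/server lines and add:
    server 192.168.30.50 iburst
    # Restart and check
    sudo systemctl restart chronyd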
    chronyc sources -v
    chronyc tracking

2.4 Disable swap

    sudo swapoff -a
    sudo sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab

2.5 Disable firewalld and configure SELinux

    sudo systemctl disable --now firewalld
    # Recommended: keep SELinux in Enforcing mode (Kubernetes + containerd support it on the RHEL 9 family) and install the policy package:
    sudo dnf -y install container-selinux
    getenforce   # Enforcing is the expected output
    # The easy way (less secure): switch to Permissive:
    sudo setenforce 0
    sudo sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

2.6 Kernel modules and sysctl (all nodes)

    # Load the required kernel modules and make them persistent
    cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF
    sudo modprobe overlay
    sudo modprobe br_netfilter
    # Required kernel parameters (forwarding and bridging)
    cat <<'EOF' | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    sudo sysctl --system
    # Note: RHEL 9 / Rocky 9 default to cgroup v2, which Kubernetes + containerd fully support; no change is needed.

2.7 File descriptors (fd/ulimit) and process limits

    # System-wide maximum number of open files (run as root)
    cat > /etc/security/limits.d/k8s.conf <<EOF
    * soft nofile 65535
    * hard nofile 131070
    EOF
    ulimit -Sn
    ulimit -Hn

2.8 IPVS mode for kube-proxy

    # Install
    sudo dnf -y install ipset ipvsadm
    cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    # The modules below are only needed when IPVS is enabled:
    ip_vs
    ip_vs_rr
    ip_vs_wrr
    ip_vs_sh
    nf_conntrack
    EOF
    # Load immediately
    sudo modprobe overlay
    sudo modprobe br_netfilter
    # If IPVS is going to be used, also run:
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do sudo modprobe $m; done
    # Verify the modules
    lsmod | grep -E 'br_netfilter|ip_vs|nf_conntrack'

3. Install containerd (on all k8s nodes)

3.1 Use the Alibaba Cloud repository

    # Rocky Linux 9 names this repository crb (it was powertools on Rocky Linux 8)
    sudo dnf config-manager --set-enabled crb
    sudo dnf install -y yum-utils device-mapper-persistent-data lvm2
    # 1. Remove any previous installation
    dnf remove docker docker-ce containerd docker-common docker-selinux docker-engine -y
    # 2. Prepare the repo
    sudo tee /etc/yum.repos.d/docker-ce.repo <<-'EOF'
    [docker-ce-stable]
    name=Docker CE Stable - AliOS
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    EOF
    # 3. Install
    sudo dnf install -y containerd.io
    sudo dnf install containerd* -y

3.2 Configuration

    # 1. Generate the default configuration file for containerd
    mkdir -pv /etc/containerd
    containerd config default > /etc/containerd/config.toml
    # 2. Replace the default pause image address: this step is critically important
    grep sandbox_image /etc/containerd/config.toml
    sudo sed -i 's|registry.k8s.io/pause:3.8|registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8|g' /etc/containerd/config.toml
    grep sandbox_image /etc/containerd/config.toml
    # Make sure the new address is actually reachable; the second grep should print:
    # sandbox_image = "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8"
    # 3. Use systemd as the cgroup driver for containers
    grep SystemdCgroup /etc/containerd/config.toml
    sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml
    grep SystemdCgroup /etc/containerd/config.toml
    # 4. Configure registry mirrors (required; otherwise images cannot be pulled from docker.io when the CNI plugin is installed later)
    # Reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration
    # Set config_path="/etc/containerd/certs.d"
    sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml
    mkdir -p /etc/containerd/certs.d/docker.io
    cat>/etc/containerd/certs.d/docker.io/hosts.toml << EOF
    server = "https://docker.io"
    [host."https://dockerproxy.com"]
      capabilities = ["pull","resolve"]
    [host."https://docker.m.daocloud.io"]
      capabilities = ["pull","resolve"]
    [host."https://docker.chenby.cn"]
      capabilities = ["pull","resolve"]
    [host."https://registry.docker-cn.com"]
      capabilities = ["pull","resolve"]
    [host."http://hub-mirror.c.163.com"]
      capabilities = ["pull","resolve"]
    EOF
    # 5. Start containerd and enable it at boot
    # 5.1 Start the containerd service and enable it at boot
    systemctl daemon-reload && systemctl restart containerd
    systemctl enable --now containerd
    # 5.2 Check the containerd status
    systemctl status containerd
    # 5.3 Check the containerd version
    ctr version

4. Install nginx + keepalived

    # Install and enable
    dnf install -y nginx keepalived curl
    dnf install -y nginx-mod-stream
    systemctl enable nginx keepalived

    # Configure Nginx (on both masters)
    # Goal: listen on 0.0.0.0:16443 locally and forward to the kube-apiserver on the two backends (50:6443, 51:6443)
    # Edit /etc/nginx/nginx.conf (keeping the http block is fine; the key point is to add a top-level stream block; nginx on Rocky 9 supports dynamic modules):

    # /etc/nginx/nginx.conf
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    # Use the dynamic module configuration shipped with the system (the stream module loads automatically if installed)
    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 10240;
    }

    # Layer-4 forwarding to the two apiservers
    stream {
        upstream k8s_apiserver {
            server 192.168.30.50:6443 max_fails=3 fail_timeout=10s;
            server 192.168.30.51:6443 max_fails=3 fail_timeout=10s;
        }
        server {
            listen 0.0.0.0:16443;
            proxy_connect_timeout 5s;
            proxy_timeout 30s;
            proxy_pass k8s_apiserver;
        }
    }

    http {
        # The default nginx http configuration can stay here; keeping or removing it both work.
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        server {
            listen 81;
            return 200 "ok\n";
        }
    }

    # Configure Keepalived (on both masters)
    # Create the health-check script /etc/keepalived/check_nginx_kube.sh:
    cat >/etc/keepalived/check_nginx_kube.sh <<'EOF'
    #!/usr/bin/env bash
    # Probe the K8s apiserver through the local Nginx forwarding port (unauthenticated /readyz; HTTP 200 means healthy)
    curl -fsSk --connect-timeout 2 https://127.0.0.1:16443/readyz >/dev/null
    EOF
    chmod +x /etc/keepalived/check_nginx_kube.sh

    # /etc/keepalived/keepalived.conf on Master1 (192.168.30.50):
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_K8S_50
        # vrrp_strict   # can cause problems on some virtualized/container networks; leave it commented out if so
    }
    vrrp_script chk_nginx_kube {
        script "/etc/keepalived/check_nginx_kube.sh"
        interval 3
        timeout 2
        fall 2
        rise 2
        weight -20   # a failed check lowers the priority by 20; 150 - 20 = 130 is still above 100, so use a magnitude above 50 if a failed check should move the VIP
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160          # change to your network interface
        virtual_router_id 58      # any value from 1 to 255, identical on both nodes; 58 is used here
        priority 150              # Master1 has the higher priority
        advert_int 1
        # Unicast, to avoid environments where layer-2 multicast is restricted (strongly recommended)
        unicast_src_ip 192.168.30.50
        unicast_peer {
            192.168.30.51
        }
        authentication {
            auth_type PASS
            auth_pass 9c9c58
        }
        virtual_ipaddress {
            192.168.30.58/24 dev ens160
        }
        track_script {
            chk_nginx_kube
        }
    }

    # /etc/keepalived/keepalived.conf on Master2 (192.168.30.51):
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_K8S_51
        # vrrp_strict
    }
    vrrp_script chk_nginx_kube {
        script "/etc/keepalived/check_nginx_kube.sh"
        interval 3
        timeout 2
        fall 2
        rise 2
        weight -20
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160
        virtual_router_id 58
        priority 100              # lower priority
        advert_int 1
        unicast_src_ip 192.168.30.51
        unicast_peer {
            192.168.30.50
        }
        authentication {
            auth_type PASS
            auth_pass 9c9c58
        }
        virtual_ipaddress {
            192.168.30.58/24 dev ens160
        }
        track_script {
            chk_nginx_kube
        }
    }

    # Start (nginx was only enabled above, so start it here as well)
    systemctl restart nginx keepalived
    ip a | grep 192.168.30.58
    # Stop keepalived on Master1 (systemctl stop keepalived); the VIP should appear on Master2. After verifying, run systemctl start keepalived again.

5. Install k8s

5.1 Prepare the k8s repository

    # Create the repo file
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    sudo dnf makecache
    # Reference: https://developer.aliyun.com/mirror/kubernetes/setenforce
    dnf install -y kubelet-1.27* kubeadm-1.27* kubectl-1.27*
    systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet
    # Install the version-lock plugin
    sudo dnf install -y dnf-plugin-versionlock
    # Lock the versions so that later updates do not change them
    sudo dnf versionlock add kubelet-1.27* kubeadm-1.27* kubectl-1.27* containerd.io
    [root@k8s-01 ~]# sudo dnf versionlock list
    Last metadata expiration check: 0:35:21 ago on Fri Aug 8 10:40:25 2025.
    kubelet-0:1.27.6-0.*
    kubeadm-0:1.27.6-0.*
    kubectl-0:1.27.6-0.*
    containerd.io-0:1.7.27-3.1.el9.*
    # sudo dnf update now skips the locked packages

5.2 Control-plane node steps (do not run on worker nodes)

    [root@k8s-01 ~]# kubeadm config images list
    I0906 16:16:30.198629 49023 version.go:256] remote version is much newer: v1.34.0; falling back to: stable-1.27
    registry.k8s.io/kube-apiserver:v1.27.16
    registry.k8s.io/kube-controller-manager:v1.27.16
    registry.k8s.io/kube-scheduler:v1.27.16
    registry.k8s.io/kube-proxy:v1.27.16
    registry.k8s.io/pause:3.9
    registry.k8s.io/etcd:3.5.7-0
    registry.k8s.io/coredns/coredns:v1.10.1

    kubeadm config print init-defaults > kubeadm.yaml

    [root@k8s-01 ~]# cat kubeadm.yaml
    # kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: InitConfiguration
    bootstrapTokens:
      - token: abcdef.0123456789abcdef
        ttl: 24h0m0s
        usages: ["signing","authentication"]
        groups: ["system:bootstrappers:kubeadm:default-node-token"]
    localAPIEndpoint:
      # This must be the real IP of the machine on which kubeadm init is run (Master1)
      advertiseAddress: 192.168.30.50
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      # Do not hard-code name here; it defaults to the host's hostname, which avoids errors when this file is reused
      taints: null
    ---
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: ClusterConfiguration
    clusterName: kubernetes
    kubernetesVersion: v1.27.16
    controlPlaneEndpoint: "192.168.30.58:16443"   # points at the Nginx + Keepalived VIP:PORT
    certificatesDir: /etc/kubernetes/pki
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16   # used by Calico; this range can be left unchanged
    apiServer:
      timeoutForControlPlane: 4m0s
      certSANs:
        # Put the VIP plus the IPs and hostnames of both masters into the SANs so the certificate is trusted
        - "192.168.30.58"   # VIP
        - "192.168.30.50"
        - "192.168.30.51"
        - "k8s-01"          # change to the actual hostname if yours differs
        - "k8s-02"
        - "127.0.0.1"
        - "localhost"
        - "kubernetes"
        - "kubernetes.default"
        - "kubernetes.default.svc"
        - "kubernetes.default.svc.cluster.local"
    controllerManager: {}
    scheduler: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: "ipvs"
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: "systemd"

    [root@k8s-01 ~]# kubeadm init --config kubeadm.yaml --upload-certs
    [init] Using Kubernetes version: v1.27.16
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
    W0906 17:26:53.821977 54526 checks.go:835] detected that the sandbox image "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [k8s-01 k8s-02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost] and IPs [10.96.0.1 192.168.30.50 192.168.30.58 192.168.30.51 127.0.0.1]
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Starting the kubelet
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 12.002658 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key: 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
    [bootstrap-token] Using token: abcdef.0123456789abcdef
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019 \
        --control-plane --certificate-key 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

      kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019

    # If initialization fails, reset with: kubeadm reset -f
    [root@k8s-01 ~]# kubeadm reset -f
    [preflight] Running pre-flight checks
    W0906 17:08:03.892290 53705 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory
    [reset] Deleted contents of the etcd data directory: /var/lib/etcd
    [reset] Stopping the kubelet service
    [reset] Unmounting mounted directories in "/var/lib/kubelet"
    W0906 17:08:03.899240 53705 cleanupnode.go:134] [reset] Failed to evaluate the "/var/lib/kubelet" directory. Skipping its unmount and cleanup: lstat /var/lib/kubelet: no such file or directory
    [reset] Deleting contents of directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
    [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

    The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

    The reset process does not reset or clean up iptables rules or IPVS tables.
    If you wish to reset iptables, you must do so manually by using the "iptables" command.

    If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
    to reset your systems IPVS tables.

    The reset process does not clean your kubeconfig files and you must remove them manually.
    Please, check the contents of the $HOME/.kube/config file.

    # These also need to be removed manually
    rm -rf $HOME/.kube/config
    systemctl restart containerd
    rm -rf ~/.kube /etc/kubernetes/pki/* /etc/kubernetes/manifests/*

    # Install a CNI plugin (choose one)
    # Flannel (simple)
    kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/v0.25.5/Documentation/kube-flannel.yml
    # Calico (more features)
    kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/calico.yaml

    [root@k8s-02 ~]# kubectl get pod -A
    NAMESPACE     NAME                                       READY   STATUS    RESTARTS        AGE
    kube-system   calico-kube-controllers-59765c79db-rvqm5   1/1     Running   0               8m3s
    kube-system   calico-node-4jlgw                          1/1     Running   0               8m3s
    kube-system   calico-node-lvzgx                          1/1     Running   0               8m3s
    kube-system   calico-node-qdrmn                          1/1     Running   0               8m3s
    kube-system   coredns-65dcc469f7-gktmx                   1/1     Running   0               51m
    kube-system   coredns-65dcc469f7-wmppd                   1/1     Running   0               51m
    kube-system   etcd-k8s-01                                1/1     Running   0               51m
    kube-system   etcd-k8s-02                                1/1     Running   0               20m
    kube-system   kube-apiserver-k8s-01                      1/1     Running   0               51m
    kube-system   kube-apiserver-k8s-02                      1/1     Running   0               19m
    kube-system   kube-controller-manager-k8s-01             1/1     Running   1 (20m ago)     51m
    kube-system   kube-controller-manager-k8s-02             1/1     Running   0               19m
    kube-system   kube-proxy-k7z9v                           1/1     Running   0               22m
    kube-system   kube-proxy-sgrln                           1/1     Running   0               51m
    kube-system   kube-proxy-wpkjb                           1/1     Running   0               20m
    kube-system   kube-scheduler-k8s-01                      1/1     Running   1 (19m ago)     51m
    kube-system   kube-scheduler-k8s-02                      1/1     Running   0               19m

    # Test failover
    # On the host that currently holds the VIP, run:
    systemctl stop keepalived
    # Check whether the other host has taken over the VIP:
    ip a | grep 192.168.30.58
    # Query again; a healthy API server returns ok
    # (6443 reaches the kube-apiserver on the VIP holder directly; use 16443 to go through Nginx)
    curl -k https://192.168.30.58:6443/readyz
    # Restore; the VIP floats back automatically
    systemctl start keepalived

    # Confirm kubectl works
    [root@k8s-01 ~]# kubectl get cs 2>/dev/null || \
      kubectl get --raw='/readyz?verbose' | head
    NAME                 STATUS    MESSAGE   ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy
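
It is worth checking how `weight -20` in the Keepalived configuration of section 4 interacts with the priorities 150 and 100. The following is a small arithmetic sketch, not Keepalived code. It assumes the usual behaviour for a negative `weight`: a failing `vrrp_script` adds the weight to the base priority, and the node with the higher effective priority holds the VIP. The helper names `effective_priority` and `vip_holder` are hypothetical, and Master2's check is assumed to pass.

```shell
#!/usr/bin/env bash
# Sketch only: models VRRP priority arithmetic, assuming a negative weight is
# added to the base priority while the tracked script is failing.

# effective_priority BASE WEIGHT CHECK_OK   (CHECK_OK is 1 or 0)
effective_priority() {
  local base=$1 weight=$2 check_ok=$3
  if [ "$check_ok" -eq 1 ]; then
    echo "$base"
  else
    echo $(( base + weight ))
  fi
}

# vip_holder M1_BASE M2_BASE WEIGHT M1_CHECK_OK
# Prints which node would hold the VIP, assuming Master2's check passes.
vip_holder() {
  local m1 m2
  m1=$(effective_priority "$1" "$3" "$4")
  m2=$(effective_priority "$2" "$3" 1)
  if [ "$m1" -gt "$m2" ]; then echo master1; else echo master2; fi
}

vip_holder 150 100 -20 0   # check fails on Master1 with weight -20
vip_holder 150 100 -60 0   # check fails on Master1 with weight -60
```

Under these assumptions the first call prints `master1` (150 - 20 = 130 is still above 100) and the second prints `master2` (150 - 60 = 90). If a failed Nginx/apiserver check is supposed to move the VIP, the weight's magnitude probably needs to exceed the 50-point gap between the two priorities.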
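
The kernel parameters written in section 2.6 can be double-checked before moving on to kubeadm. The sketch below is one possible way to do that and is not part of the original procedure: `conf_value` and `check_conf` are hypothetical helper names, and the demo runs against a temporary file rather than the real /etc/sysctl.d/99-kubernetes-cri.conf.

```shell
#!/usr/bin/env bash
# Sketch only: parse a "key = value" file (sysctl.d style) and compare values.

# conf_value FILE KEY: print the last value assigned to KEY, ignoring comment lines.
conf_value() {
  awk -F'=' -v key="$2" '
    /^[[:space:]]*#/ { next }
    {
      k = $1; v = $2
      gsub(/[[:space:]]/, "", k)
      gsub(/[[:space:]]/, "", v)
      if (k == key) val = v
    }
    END { print val }' "$1"
}

# check_conf FILE KEY EXPECTED: succeed when FILE sets KEY to EXPECTED.
check_conf() {
  [ "$(conf_value "$1" "$2")" = "$3" ]
}

# Demo against a temporary copy of the settings from section 2.6.
demo=$(mktemp)
cat > "$demo" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
for key in net.bridge.bridge-nf-call-iptables \
           net.bridge.bridge-nf-call-ip6tables \
           net.ipv4.ip_forward; do
  if check_conf "$demo" "$key" 1; then echo "$key ok"; else echo "$key MISSING"; fi
done
rm -f "$demo"
```

Pointing `check_conf` at the real file on each node only confirms what was written to disk; `sysctl net.ipv4.ip_forward` and similar queries remain the way to confirm the values the running kernel actually uses.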

rocky linux 9 安装 多主架构 一、固定IP地址#配置 sudo nmcli connection modify ens160 \ ipv4.method manual \ ipv4.addresses 192.168.30.50/24 \ ipv4.gateway 192.168.30.2 \ ipv4.dns "8.8.8.8,8.8.4.4" #更新配置 sudo nmcli connection down ens160 && sudo nmcli connection up ens160二、准备工作 2.0 修改主机名#每个节点对应一个 hostnamectl set-hostname k8s-01 hostnamectl set-hostname k8s-02 hostnamectl set-hostname k8s-03#提前配好vip ip 三个节点都要做 cat >>/etc/hosts <<'EOF' 192.168.30.50 k8s-01 192.168.30.51 k8s-02 192.168.30.52 k8s-03 192.168.30.58 k8s-vip EOF2.1 配置yum源#sudo mkdir /etc/yum.repos.d/backup #sudo mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/ 直接执行下面的 # 使用阿里云推荐的配置方法 sudo sed -e 's!^mirrorlist=!#mirrorlist=!g' \ -e 's!^#baseurl=http://dl.rockylinux.org/$contentdir!baseurl=https://mirrors.aliyun.com/rockylinux!g' \ -i /etc/yum.repos.d/Rocky-*.repo #清理并重建缓存 sudo dnf clean all sudo dnf makecache #测试更新 sudo dnf -y update sudo dnf -y install wget curl vim tar gzip2.2设置时区#查看当前时区设置 timedatectl #设置时区为中国时区(上海时间) sudo timedatectl set-timezone Asia/Shanghai2.3设置时间#安装并配置 Chrony(推荐) # RHEL/CentOS/Alma/Rocky sudo dnf -y install chrony || sudo yum -y install chrony sudo systemctl enable --now chronyd # 编辑配置文件 sudo vi /etc/chrony.conf #把默认的 pool/server 行注释掉(没外网也无用),然后加入(或确认存在)以下内容: # 把 30.50 作为“本地时间源”,无外部上游时自成一体 local stratum 10 # 允许本网段客户端访问 allow 192.168.30.0/24 # 绑定监听到这块网卡(可选,但建议写上) bindaddress 192.168.30.50 # 客户端第一次偏差大时允许快速步进校时 makestep 1 3 # 用系统时钟做源,且把系统时间同步到硬件时钟(断电后也较准) rtcsync #保存重启 sudo systemctl restart chronyd #防火墙放行 # firewalld(RHEL系) sudo firewall-cmd --add-service=ntp --permanent sudo firewall-cmd --reload #验证服务器状态 # 查看 chrony 源与自我状态 chronyc tracking # 查看已连接的客户端(执行一会儿后能看到) chronyc clients # 确认监听 123/udp sudo ss -lunp | grep :123# 客户端安装 # RHEL系 sudo dnf -y install chrony || sudo yum -y install chrony # Debian/Ubuntu sudo apt -y install chrony # 配置(RHEL: /etc/chrony.conf;Ubuntu/Debian: /etc/chrony/chrony.conf) # 注释掉原来的 pool/server 行,新增: server 192.168.30.50 iburst # 重启并查看 sudo systemctl restart chronyd 
chronyc sources -v chronyc tracking2.4关闭swap分区sudo swapoff -a sudo sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab2.5关闭selinuxsudo systemctl disable --now firewalld #推荐:保持 Enforcing(Kubernetes + containerd 在 RHEL9 系已支持),同时安装策略包: sudo dnf -y install container-selinux getenforce # 看到 Enforcing 即可 #图省事(不太安全):设为 Permissive: sudo setenforce 0 sudo sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config2.6内核模块与 sysctl(所有节点)# 加载并持久化必须内核模块 cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf overlay br_netfilter EOF sudo modprobe overlay sudo modprobe br_netfilter # 必备内核参数(转发与桥接) cat <<'EOF' | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf net.bridge.bridge-nf-call-iptables = 1 net.bridge.bridge-nf-call-ip6tables = 1 net.ipv4.ip_forward = 1 EOF sudo sysctl --system #说明:RHEL9/ Rocky9 默认 cgroup v2,Kubernetes + containerd 完全支持,无需改动。2.7文件描述符(fd/ulimit)与进程数# 系统级最大打开文件数 cat > /etc/security/limits.d/k8s.conf <<EOF * soft nofile 65535 * hard nofile 131070 EOF ulimit -Sn ulimit -Hn2.8kube-proxy 的 IPVS 模式#安装 sudo dnf -y install ipset ipvsadm cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf overlay br_netfilter # 如启用 IPVS,取消以下行的注释: ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack EOF # 立即加载 sudo modprobe overlay sudo modprobe br_netfilter # 如果要用 IPVS,再执行: for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do sudo modprobe $m; done #验证模块 lsmod | egrep 'br_netfilter|ip_vs|nf_conntrack'三、安装containerd(所有k8s节点都要做) 3.1 使用阿里云的源sudo dnf config-manager --set-enabled powertools # Rocky Linux 8/9需启用PowerTools仓库 sudo dnf install -y yum-utils device-mapper-persistent-data lvm2 #1、卸载之前的 dnf remove docker docker-ce containerd docker-common docker-selinux docker-engine -y #2、准备repo sudo tee /etc/yum.repos.d/docker-ce.repo <<-'EOF' [docker-ce-stable] name=Docker CE Stable - AliOS baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable enabled=1 gpgcheck=1 gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg EOF # 3、安装 sudo dnf install -y containerd.io 
sudo dnf install containerd* -y3.2配置# 1、配置 mkdir -pv /etc/containerd containerd config default > /etc/containerd/config.toml #为containerd生成配置文件 #2、替换默认pause镜像地址:这一步非常非常非常非常重要 grep sandbox_image /etc/containerd/config.toml sudo sed -i 's|registry.k8s.io/pause:3.8|registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8|g' /etc/containerd/config.toml grep sandbox_image /etc/containerd/config.toml #请务必确认新地址是可用的: sandbox_image = "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8" #3、配置systemd作为容器的cgroup driver grep SystemdCgroup /etc/containerd/config.toml sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml grep SystemdCgroup /etc/containerd/config.toml # 4、配置加速器(必须配置,否则后续安装cni网络插件时无法从docker.io里下载镜像) #参考:https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration #添加 config_path="/etc/containerd/certs.d" sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.tomlmkdir -p /etc/containerd/certs.d/docker.io cat>/etc/containerd/certs.d/docker.io/hosts.toml << EOF server ="https://docker.io" [host."https ://dockerproxy.com"] capabilities = ["pull","resolve"] [host."https://docker.m.daocloud.io"] capabilities = ["pull","resolve"] [host."https://docker.chenby.cn"] capabilities = ["pull","resolve"] [host."https://registry.docker-cn.com"] capabilities = ["pull","resolve" ] [host."http://hub-mirror.c.163.com"] capabilities = ["pull","resolve" ] EOF#5、配置containerd开机自启动 #5.1 启动containerd服务并配置开机自启动 systemctl daemon-reload && systemctl restart containerd systemctl enable --now containerd #5.2 查看containerd状态 systemctl status containerd #5.3查看containerd的版本 ctr version四、安装nginx+keepalived#安装与开启 dnf install -y nginx keepalived curl dnf install -y nginx-mod-stream systemctl enable nginx keepalived #配置 Nginx(两台 Master 都要配) #目标:在本机 0.0.0.0:16443 监听,转发到两个后端的 kube-apiserver(50:16443、51:16443) #编辑 /etc/nginx/nginx.conf(保留 http 段也没关系,关键是顶层加上 stream 
段;Rocky9 的 nginx 支持动态模块): # /etc/nginx/nginx.conf user nginx; worker_processes auto; error_log /var/log/nginx/error.log; pid /run/nginx.pid; # 使用系统提供的动态模块配置(若已安装将自动加载 stream 模块) include /usr/share/nginx/modules/*.conf; events { worker_connections 10240; } # 四层转发到两台 apiserver stream { upstream k8s_apiserver { server 192.168.30.50:6443 max_fails=3 fail_timeout=10s; server 192.168.30.51:6443 max_fails=3 fail_timeout=10s; } server { listen 0.0.0.0:16443; proxy_connect_timeout 5s; proxy_timeout 30s; proxy_pass k8s_apiserver; } } http { # 这里保持nginx默认 http 配置即可,删与不删均可。 include /etc/nginx/mime.types; default_type application/octet-stream; sendfile on; keepalive_timeout 65; server { listen 81; return 200 "ok\n"; } } #配置 Keepalived(两台 Master) #创建健康检查脚本 /etc/keepalived/check_nginx_kube.sh: cat >/etc/keepalived/check_nginx_kube.sh <<'EOF' #!/usr/bin/env bash # 通过本地Nginx转发口探活K8s apiserver(无认证的 /readyz,HTTP 200 即通过) curl -fsSk --connect-timeout 2 https://127.0.0.1:16443/readyz >/dev/null EOF chmod +x /etc/keepalived/check_nginx_kube.sh #Master1(192.168.30.50) 的 /etc/keepalived/keepalived.conf: ! Configuration File for keepalived global_defs { router_id LVS_K8S_50 # vrrp_strict # 若使用部分虚拟化/容器网络会引发问题,可注释掉 } vrrp_script chk_nginx_kube { script "/etc/keepalived/check_nginx_kube.sh" interval 3 timeout 2 fall 2 rise 2 weight -20 } vrrp_instance VI_1 { state BACKUP interface ens160 # 改为你的网卡 virtual_router_id 58 # 1-255 任意一致值,这里取 58 priority 150 # Master1 高优先 advert_int 1 # 单播,避免二层组播受限环境(强烈推荐) unicast_src_ip 192.168.30.50 unicast_peer { 192.168.30.51 } authentication { auth_type PASS auth_pass 9c9c58 } virtual_ipaddress { 192.168.30.58/24 dev ens160 } track_script { chk_nginx_kube } } #Master2(192.168.30.151) 的 /etc/keepalived/keepalived.conf: ! 
Configuration File for keepalived global_defs { router_id LVS_K8S_51 # vrrp_strict } vrrp_script chk_nginx_kube { script "/etc/keepalived/check_nginx_kube.sh" interval 3 timeout 2 fall 2 rise 2 weight -20 } vrrp_instance VI_1 { state BACKUP interface ens160 virtual_router_id 58 priority 100 # 次优先 advert_int 1 unicast_src_ip 192.168.30.51 unicast_peer { 192.168.30.50 } authentication { auth_type PASS auth_pass 9c9c58 } virtual_ipaddress { 192.168.30.58/24 dev ens160 } track_script { chk_nginx_kube } } #启动 systemctl restart keepalived ip a | grep 192.168.30.58 #停掉 Master1 的 keepalived:systemctl stop keepalived,VIP 应在 Master2 出现,验证完再 systemctl start keepalived。五、安装k8s 5.1 准备k8s源# 创建repo文件 cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=1 repo_gpgcheck=1 gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg EOF sudo dnf makecache #参考:https://developer.aliyun.com/mirror/kubernetes/setenforce dnf install -y kubelet-1.27* kubeadm-1.27* kubectl-1.27* systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet 安装锁定版本的插件 sudo dnf install -y dnf-plugin-versionlock 锁定版本不让后续更新sudo dnf versionlock add kubelet-1.27* kubeadm-1.27* kubectl-1.27* containerd.io [root@k8s-01 ~]# sudo dnf versionlock list Last metadata expiration check: 0:35:21 ago on Fri Aug 8 10:40:25 2025. 
kubelet-0:1.27.6-0.* kubeadm-0:1.27.6-0.* kubectl-0:1.27.6-0.* containerd.io-0:1.7.27-3.1.el9.* #sudo dnf update就会排除锁定的应用5.2 主节点操作(node节点不执行)[root@k8s-01 ~]# kubeadm config images list I0906 16:16:30.198629 49023 version.go:256] remote version is much newer: v1.34.0; falling back to: stable-1.27 registry.k8s.io/kube-apiserver:v1.27.16 registry.k8s.io/kube-controller-manager:v1.27.16 registry.k8s.io/kube-scheduler:v1.27.16 registry.k8s.io/kube-proxy:v1.27.16 registry.k8s.io/pause:3.9 registry.k8s.io/etcd:3.5.7-0 registry.k8s.io/coredns/coredns:v1.10.1 kubeadm config print init-defaults > kubeadm.yaml[root@k8s-01 ~]# cat kubeadm.yaml # kubeadm-config.yaml apiVersion: kubeadm.k8s.io/v1beta3 kind: InitConfiguration bootstrapTokens: - token: abcdef.0123456789abcdef ttl: 24h0m0s usages: ["signing","authentication"] groups: ["system:bootstrappers:kubeadm:default-node-token"] localAPIEndpoint: # 这里必须是你要执行 kubeadm init 的这台机器的真实IP(Master1) advertiseAddress: 192.168.30.50 bindPort: 6443 nodeRegistration: criSocket: unix:///run/containerd/containerd.sock imagePullPolicy: IfNotPresent # 不要在这里硬编码 name,默认会用主机的 hostname,避免复用此文件时出错 taints: null --- apiVersion: kubeadm.k8s.io/v1beta3 kind: ClusterConfiguration clusterName: kubernetes kubernetesVersion: v1.27.16 controlPlaneEndpoint: "192.168.30.58:16443" # 指向 Nginx+Keepalived 的 VIP:PORT certificatesDir: /etc/kubernetes/pki imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers networking: dnsDomain: cluster.local serviceSubnet: 10.96.0.0/12 podSubnet: 10.244.0.0/16 # 供 Calico 使用,此网段可保持不变 apiServer: timeoutForControlPlane: 4m0s certSANs: # 建议把 VIP、两台 Master 的 IP 和主机名都放进 SAN,避免证书不信任 - "192.168.30.58" # VIP - "192.168.30.50" - "192.168.30.51" - "k8s-01" # 如你的主机名不同,请改成实际 hostname - "k8s-02" - "127.0.0.1" - "localhost" - "kubernetes" - "kubernetes.default" - "kubernetes.default.svc" - "kubernetes.default.svc.cluster.local" controllerManager: {} scheduler: {} etcd: local: dataDir: /var/lib/etcd --- apiVersion: 
kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: "ipvs" --- apiVersion: kubelet.config.k8s.io/v1beta1 kind: KubeletConfiguration cgroupDriver: "systemd"root@k8s-01 ~]# kubeadm init --config kubeadm.yaml --upload-certs [init] Using Kubernetes version: v1.27.16 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' W0906 17:26:53.821977 54526 checks.go:835] detected that the sandbox image "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image. [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8s-01 k8s-02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost] and IPs [10.96.0.1 192.168.30.50 192.168.30.58 192.168.30.51 127.0.0.1] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key 
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 12.002658 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d
[mark-control-plane] Marking the node k8s-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node k8s-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019 \
    --control-plane --certificate-key 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019

# If initialization fails, reset the node and try again
kubeadm reset -f

[root@k8s-01 ~]# kubeadm reset -f
[preflight] Running pre-flight checks
W0906 17:08:03.892290 53705 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory
[reset] Deleted contents of the etcd data directory: /var/lib/etcd
[reset] Stopping the kubelet service
[reset] Unmounting mounted directories in "/var/lib/kubelet"
W0906 17:08:03.899240 53705 cleanupnode.go:134] [reset] Failed to evaluate the "/var/lib/kubelet" directory. Skipping its unmount and cleanup: lstat /var/lib/kubelet: no such file or directory
[reset] Deleting contents of directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.
If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
to reset your systems IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.
Please, check the contents of the $HOME/.kube/config file.

# The following still has to be cleaned up by hand
rm -rf $HOME/.kube/config
systemctl restart containerd
rm -rf ~/.kube /etc/kubernetes/pki/* /etc/kubernetes/manifests/*

# Install a CNI plugin (choose one; the pod listing below comes from a Calico install)
# Flannel (simple)
kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/v0.25.5/Documentation/kube-flannel.yml
# Calico (more features)
kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/calico.yaml

[root@k8s-02 ~]# kubectl get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS      AGE
kube-system   calico-kube-controllers-59765c79db-rvqm5   1/1     Running   0             8m3s
kube-system   calico-node-4jlgw                          1/1     Running   0             8m3s
kube-system   calico-node-lvzgx                          1/1     Running   0             8m3s
kube-system   calico-node-qdrmn                          1/1     Running   0             8m3s
kube-system   coredns-65dcc469f7-gktmx                   1/1     Running   0             51m
kube-system   coredns-65dcc469f7-wmppd                   1/1     Running   0             51m
kube-system   etcd-k8s-01                                1/1     Running   0             51m
kube-system   etcd-k8s-02                                1/1     Running   0             20m
kube-system   kube-apiserver-k8s-01                      1/1     Running   0             51m
kube-system   kube-apiserver-k8s-02                      1/1     Running   0             19m
kube-system   kube-controller-manager-k8s-01             1/1     Running   1 (20m ago)   51m
kube-system   kube-controller-manager-k8s-02             1/1     Running   0             19m
kube-system   kube-proxy-k7z9v                           1/1     Running   0             22m
kube-system   kube-proxy-sgrln                           1/1     Running   0             51m
kube-system   kube-proxy-wpkjb                           1/1     Running   0             20m
kube-system   kube-scheduler-k8s-01                      1/1     Running   1 (19m ago)   51m
kube-system   kube-scheduler-k8s-02                      1/1     Running   0             19m

# Failover test
# On the host that currently holds the VIP:
systemctl stop keepalived
# On the other host, check whether it has taken over the VIP:
ip a | grep 192.168.30.58
# Query the API server through the VIP again; it should return ok:
curl -k https://192.168.30.58:6443/readyz
# Restore keepalived; the VIP moves back automatically
systemctl start keepalived

# kubectl works as expected
[root@k8s-01 ~]# kubectl get cs 2>/dev/null || \
  kubectl get --raw='/readyz?verbose' | head
NAME                 STATUS    MESSAGE   ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   -
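
The pod listing above can also be checked mechanically rather than by eye. The following is a minimal sketch and not part of the original walkthrough: `not_ready_pods` is a hypothetical helper name, and it assumes the default column layout of `kubectl get pod -A` (NAMESPACE, NAME, READY, STATUS, ...). It reports every pod whose READY counts differ or whose STATUS is not Running, which means completed Job pods would be reported too.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an illustration, not from the original article).
# Reads `kubectl get pod -A` style text on stdin and prints "namespace/name"
# for each pod that is not fully ready.
not_ready_pods() {
  awk '
    $1 == "NAMESPACE" { next }        # skip the header row
    NF < 4            { next }        # skip blank or malformed lines
    {
      split($3, ready, "/")           # READY column looks like "1/1"
      if (ready[1] != ready[2] || $4 != "Running")
        print $1 "/" $2
    }
  '
}

# Example use on a control-plane node (needs a working kubeconfig):
#   kubectl get pod -A | not_ready_pods
```

An empty result means every listed pod is Running with all containers ready, which is a reasonable point at which to start the keepalived failover test.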

rocky-linux-9 offline installation of k8s 1.27

I. Stage A: build the offline bundle on an internet-connected packaging host

The packaging host should ideally also run Rocky 9, although any x86_64 Linux works. The steps below assume dnf for packages and either ctr or docker for pulling images.

1.1 Directories and variables

export K8S_VER="1.27.16"
export K8S_MINOR="v1.27"
export WORK="/opt/k8s-offline-${K8S_VER}"
sudo mkdir -p $WORK/{rpms,images,cni,calico,tools}

1.2 Configure the Kubernetes 1.27 RPM repository (temporary, packaging host only)

# /etc/yum.repos.d/kubernetes-1.27.repo
[kubernetes-1.27]
name=Kubernetes 1.27
baseurl=https://pkgs.k8s.io/core:/stable:/v1.27/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.27/rpm/repodata/repomd.xml.key

Since 2023 Kubernetes has published its packages through per-minor-version repositories on pkgs.k8s.io; the one above is dedicated to 1.27.

1.3 Download the RPMs (with dependencies, for installation on the offline nodes)

sudo dnf -y install dnf-plugins-core
# containerd / runc / common dependencies
sudo dnf -y download --resolve --destdir=$WORK/rpms \
  containerd runc conntrack-tools iptables iproute-tc ethtool socat \
  tar openssl curl bash-completion

# Rocky's default repositories have no package named containerd, so the dnf download
# above exits immediately in strict mode. Add the Docker CE repository as shown here;
# it ships the runtime as containerd.io (which bundles runc), so use that package name
# when rerunning the download.
# Install the dnf plugins and add the Docker CE repository (works on RHEL/EL9)
sudo dnf -y install dnf-plugins-core
sudo dnf config-manager --add-repo https://download.docker.com/linux/rhel/docker-ce.repo
# Refresh the metadata
sudo dnf clean all && sudo dnf makecache

# kube components (pinned to 1.27.16)
#sudo dnf -y download --resolve --destdir=$WORK/rpms \
#kubelet-${K8S_VER} kubeadm-${K8S_VER} kubectl-${K8S_VER} \
#kubernetes-cni cri-tools
# Do not use the commented-out command above.
# Download only, without resolving dependencies
sudo dnf -y download --destdir="$WORK/rpms" \
  kubelet-${K8S_VER} kubeadm-${K8S_VER} kubectl-${K8S_VER} \
  kubernetes-cni cri-tools

1.4 Download the CNI plugins and the crictl tool

# CNI plugins (official binary release, to be unpacked into /opt/cni/bin)
curl -L -o $WORK/cni/cni-plugins-linux-amd64-v1.3.0.tgz \
  https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz
# crictl (from cri-tools)
CRICTL_VER="v1.27.0"   # any version compatible with the cluster will do
curl -L -o $WORK/tools/crictl-${CRICTL_VER}-linux-amd64.tar.gz \
  https://github.com/kubernetes-sigs/cri-tools/releases/download/${CRICTL_VER}/crictl-${CRICTL_VER}-linux-amd64.tar.gz

1.5 Download the Calico manifest and images

curl -L -o $WORK/calico/calico-v3.26.4.yaml \
  https://raw.githubusercontent.com/projectcalico/calico/v3.26.4/manifests/calico.yaml
# Extract the image names (they can also be listed by hand)
grep -E "image: .*calico" $WORK/calico/calico-v3.26.4.yaml | awk '{print $2}' | sort -u > $WORK/images/calico-images.txt

[root@localhost ~]# cat $WORK/images/calico-images.txt
docker.io/calico/cni:v3.26.4
docker.io/calico/kube-controllers:v3.26.4
docker.io/calico/node:v3.26.4

1.6 Generate the list of images kubeadm needs (pinned to v1.27.16)

# Temporarily install kubeadm on this host (or run it in a container) to print the image list
sudo dnf -y install kubeadm-${K8S_VER}
kubeadm config images list --kubernetes-version v${K8S_VER} > $WORK/images/k8s-images.txt
# kubeadm config images list is the officially recommended way to obtain the image list
# for an offline install; it also accepts --config to point at a custom registry.

1.7 Pull and archive the images (pick one: Docker or containerd)

# Option A: Docker
while read -r img; do docker pull "$img"; done < $WORK/images/k8s-images.txt
while read -r img; do docker pull "$img"; done < $WORK/images/calico-images.txt
docker save $(cat $WORK/images/k8s-images.txt $WORK/images/calico-images.txt) \
  -o $WORK/images/k8s-${K8S_VER}-and-calico-v3.26.4.tar

# Option B: containerd (ctr)
sudo systemctl enable --now containerd || true
while read -r img; do sudo ctr -n k8s.io i pull "$img"; done < $WORK/images/k8s-images.txt
while read -r img; do sudo ctr -n k8s.io i pull "$img"; done < $WORK/images/calico-images.txt
sudo ctr -n k8s.io i export $WORK/images/k8s-${K8S_VER}-and-calico-v3.26.4.tar $(cat $WORK/images/k8s-images.txt $WORK/images/calico-images.txt)

1.8 Build the final bundle

cd $(dirname $WORK)
sudo tar czf k8s-offline-${K8S_VER}-rocky9.tar.gz $(basename $WORK)
# Copy this tar.gz to every offline node (control plane and workers)

II. Offline package for Docker

2.1 Build the offline package on the online machine

# 0) Variables
export WORK="/opt/docker-offline-$(date +%F)"
sudo mkdir -p "$WORK"/{rpms,images,scripts}
ARCH=$(uname -m)   # usually x86_64; aarch64 on ARM64

# 1) Add the official Docker repository (common to the RHEL/EL family, works on Rocky 9)
sudo dnf -y install dnf-plugins-core
sudo dnf config-manager --add-repo https://download.docker.com/linux/rhel/docker-ce.repo
sudo dnf clean all && sudo dnf makecache

# 2) Download the RPMs needed for the full feature set (with dependencies)
PKGS="docker-ce docker-ce-cli containerd.io docker-buildx-plugin
docker-compose-plugin docker-ce-rootless-extras"
# --resolve pulls in all dependencies; strict=0 skips a package that is temporarily unavailable instead of aborting
sudo dnf -y download --resolve --setopt=strict=0 \
  --destdir="$WORK/rpms" --arch="$ARCH" $PKGS
# Also bundle the usual rootless dependencies (in case they were not pulled in above)
sudo dnf -y download --resolve --setopt=strict=0 \
  --destdir="$WORK/rpms" --arch="$ARCH" \
  slirp4netns fuse-overlayfs container-selinux

# 3) (Optional) archive a few basic test images
docker pull hello-world:latest
docker pull alpine:latest
docker pull busybox:stable
docker save hello-world:latest alpine:latest busybox:stable -o "$WORK/images/docker-base-images.tar"

# 4) Generate local repository metadata and the install script
sudo dnf -y install createrepo_c
createrepo_c "$WORK/rpms"
cat > "$WORK/scripts/install-offline.sh" <<"EOF"
#!/usr/bin/env bash
set -euo pipefail
DIR="$(cd "$(dirname "$0")"/.. && pwd)"

# Install through a temporary local repository (the more robust method)
sudo dnf -y install createrepo_c || true
sudo createrepo_c "$DIR/rpms"
sudo tee /etc/yum.repos.d/docker-offline.repo >/dev/null <<REPO
[docker-offline]
name=Docker Offline
baseurl=file://$DIR/rpms
enabled=1
gpgcheck=0
REPO

# Install
sudo dnf -y install docker-ce docker-ce-cli containerd.io \
  docker-buildx-plugin docker-compose-plugin docker-ce-rootless-extras

# Start now and enable at boot
sudo systemctl enable --now docker

# Optional: add the invoking user to the docker group (takes effect after logging in again).
# ${SUDO_USER:-} keeps `set -u` from aborting when the script is not run through sudo.
if [ -n "${SUDO_USER:-}" ] && id -u "$SUDO_USER" &>/dev/null; then
  sudo usermod -aG docker "$SUDO_USER" || true
fi

# Load the base images (if present)
if [ -f "$DIR/images/docker-base-images.tar" ]; then
  sudo docker load -i "$DIR/images/docker-base-images.tar"
fi

echo "Done.
Check: docker version && docker compose version && docker buildx version"
EOF
chmod +x "$WORK/scripts/install-offline.sh"

# 5) Build the final bundle
sudo tar -C "$(dirname "$WORK")" -czf "${WORK}.tar.gz" "$(basename "$WORK")"
echo "Offline bundle created: ${WORK}.tar.gz"

2.2 Install on the offline machine

# Copy ${WORK}.tar.gz to the offline host, extract it, and run the script:
sudo tar -C /opt -xzf /path/to/docker-offline-*.tar.gz
cd /opt/docker-offline-*/scripts
#sudo ./install-offline.sh
sudo dnf -y --disablerepo='*' --nogpgcheck install \
  /opt/docker-offline-2025-09-01/rpms/*.rpm

# Log in again, then verify
docker version

[root@localhost opt]# docker version
Client: Docker Engine - Community
 Version:           28.3.3
 API version:       1.51
 Go version:        go1.24.5
 Git commit:        980b856
 Built:             Fri Jul 25 11:36:28 2025
 OS/Arch:           linux/amd64
 Context:           default

Server: Docker Engine - Community
 Engine:
  Version:          28.3.3
  API version:      1.51 (minimum version 1.24)
  Go version:       go1.24.5
  Git commit:       bea959c
  Built:            Fri Jul 25 11:33:28 2025
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.7.27
  GitCommit:        05044ec0a9a75232cad458027ca83437aae3f4da
 runc:
  Version:          1.2.5
  GitCommit:        v1.2.5-0-g59923ef
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0

docker compose version   # note: this is docker compose (the v2 plugin), not the old docker-compose
docker run --rm hello-world

III. Stage B: install and initialize on the offline nodes

3.1 System preparation (all nodes)

# The bundle's top-level directory is k8s-offline-1.27.16, so extract it under /opt to match $OFF
sudo tar xzf k8s-offline-1.27.16-rocky9.tar.gz -C /opt
OFF="/opt/k8s-offline-1.27.16"

hostnamectl set-hostname k8s-01
echo "192.168.30.150 k8s-01" >> /etc/hosts
ping -c1 k8s-01

swapoff -a
sed -ri 's/^\s*([^#].*\sswap\s)/#\1/' /etc/fstab

cat >/etc/sysctl.d/k8s.conf <<'EOF'
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
EOF
sysctl --system

# Load the IPVS kernel modules first
cat >/etc/modules-load.d/ipvs.conf <<'EOF'
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
EOF
for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do modprobe $m; done

3.1.1 Disable swap (including zram)

# Rocky 9 may have zram-backed swap enabled; kubelet requires swap to be off:
sudo swapoff -a
# Permanently: remove the zram generator or disable its unit
sudo dnf -y remove zram-generator-defaults || true
# If /etc/fstab has swap entries, comment them out; then confirm:
lsblk | grep -E 'SWAP|zram' || true
# On RHEL 9 and other systemd-based distributions, zram swap is usually provided by
# zram-generator; disabling or removing it is one of the recommended approaches.

3.1.2 Kernel modules and sysctl (bridge/overlay/IP forwarding)

# /etc/modules-load.d/k8s.conf
echo -e "overlay\nbr_netfilter" | sudo tee /etc/modules-load.d/k8s.conf
sudo modprobe overlay && sudo modprobe br_netfilter

# /etc/sysctl.d/k8s.conf
cat <<'EOF' | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
sudo sysctl --system
# (These settings are explicitly required by the official Kubernetes documentation and the Fedora/Rocky guides.)

3.1.3 SELinux and the firewall

1. Keeping SELinux in Enforcing mode is recommended (if you hit container labeling problems, switch to Permissive first and then troubleshoot).
2. Either open the required firewall ports or disable the firewall temporarily; the full list is in the official "Ports and Protocols" page. At minimum:
   Control plane: 6443/TCP (API), 2379-2380/TCP (etcd), 10250/10257/10259/TCP
   All nodes: 10250/TCP; CNI ports (for example Calico VXLAN, 4789/UDP by default) as described in the CNI documentation.

3.2 Install the RPMs (directly from the offline directory)

cd $OFF/rpms
sudo dnf -y --disablerepo='*' install ./*.rpm
sudo systemctl enable --now containerd
# (--disablerepo='*' keeps dnf from fetching online metadata, which is what you want offline)

3.2.1 Install CNI and crictl

sudo mkdir -p /opt/cni/bin
sudo tar -xzf $OFF/cni/cni-plugins-linux-amd64-v1.3.0.tgz -C /opt/cni/bin
sudo tar -xzf $OFF/tools/crictl-v1.27.0-linux-amd64.tar.gz -C /usr/local/bin

3.3 Configure containerd (systemd cgroup and pause image)

# Generate the default configuration, then modify it
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
# Key points: set SystemdCgroup=true and make sure sandbox_image points at the pause:3.9 image we imported
sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
sudo sed -i 's@sandbox_image = .*@sandbox_image = "registry.k8s.io/pause:3.9"@' /etc/containerd/config.toml

# Open /etc/containerd/config.toml and check the following places:
disabled_plugins = []
# If io.containerd.grpc.v1.cri appears in disabled_plugins, remove it.

# The CRI plugin section must exist and be enabled (it normally is by default):
[plugins."io.containerd.grpc.v1.cri"]
  # many sub-settings live here; keep the defaults

# kubelet requires the systemd cgroup driver, so change this to:
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  SystemdCgroup = true

# Pinning the pause image to 3.9 (the version matching 1.27.x) is recommended:
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "registry.k8s.io/pause:3.9"
  # In an offline or private-registry environment, use your own address, for example:
  # sandbox_image = "192.168.30.150:5000/pause:3.9"

# The same checks, gathered in one place (all in /etc/containerd/config.toml):
# Top of the file: do not disable CRI
disabled_plugins = []   # change ["cri"] to [], or delete the line
version = 2             # add this line if the template lacks it

[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "registry.k8s.io/pause:3.9"   # matches the image already imported offline
  # with a private registry, write "<your-registry>/pause:3.9"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  SystemdCgroup = true   # kubelet requires the systemd cgroup driver

# Restart and self-check
systemctl daemon-reload
systemctl enable --now containerd
# kubelet does not need to be started yet; kubeadm starts it during init
systemctl status containerd --no-pager -l

# Confirm the CRI plugin is loaded (either check is enough):
ctr plugins ls | grep cri
# expect to see io.containerd.grpc.v1.cri <OK>
# or
crictl --runtime-endpoint unix:///run/containerd/containerd.sock info
# output that includes runtimeName and similar fields means it is fine; skip this if crictl is not installed
sudo systemctl restart containerd
# (On RHEL 9 with cgroup v2, Kubernetes recommends the systemd cgroup driver; it has to be enabled explicitly on the containerd side.)

3.4 Preload the images (offline import)

sudo ctr -n k8s.io images import $OFF/images/k8s-1.27.16-and-calico-v3.26.4.tar
sudo ctr -n k8s.io images ls | grep -E 'kube-|coredns|etcd|pause|calico'

3.5 kubeadm initialization (control-plane node)

Create kubeadm-config.yaml (adjust advertiseAddress and the Pod/Service subnets as needed; Calico's customary default is 192.168.0.0/16, while this guide uses 172.20.0.0/16):

[root@k8s-01 ~]# cat kubeadm.yaml
# kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.30.150
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
clusterName: kubernetes
kubernetesVersion: v1.27.16
imageRepository: registry.k8s.io
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 172.20.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

Below is the variant with the IPVS settings spelled out:

apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.30.151   # ← change to this control-plane node's IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
clusterName: kubernetes
kubernetesVersion: v1.27.16
imageRepository: registry.k8s.io   # change to your private registry for offline/intranet images
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 172.20.0.0/16          # must match the CIDR Calico uses (this is the one used here)
dns:
  type: CoreDNS
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
ipvs:
  scheduler: rr        # options: rr / wrr / wlc / sh / mh, etc.
  # strictARP: true    # enable later when using MetalLB in L2 mode
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

# 0) Hostname resolution (avoids the earlier hostname warning)
hostnamectl set-hostname k8s-01
grep -q '192.168.30.150 k8s-01' /etc/hosts || echo '192.168.30.150 k8s-01' >> /etc/hosts

# 1) Disable swap (if not already off)
swapoff -a
sed -ri 's/^\s*([^#].*\sswap\s)/#\1/' /etc/fstab

# 2) Required kernel module and sysctl settings (a common reason kubelet gets stuck)
modprobe br_netfilter || true
cat >/etc/modules-load.d/k8s.conf <<'EOF'
br_netfilter
EOF
cat >/etc/sysctl.d/k8s.conf <<'EOF'
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
EOF
sysctl --system

# 3) (Optional) keep policy from getting in the way: relax SELinux and the firewall (acceptable as a first step offline/on an intranet)
setenforce 0 2>/dev/null || true
sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config 2>/dev/null || true
systemctl disable --now firewalld 2>/dev/null || true

# 4) Restart the key services
systemctl restart containerd
systemctl restart kubelet

# 5) Observe again
crictl --runtime-endpoint unix:///run/containerd/containerd.sock ps -a | grep -E 'kube-(apiserver|controller-manager|scheduler)|etcd'
journalctl -u kubelet -e --no-pager | tail -n 200

# Run the initialization (use whichever file name the configuration was saved under):
sudo kubeadm init --config kubeadm-config.yaml
# Offline initialization, without network access
kubeadm init --config kubeadm.yaml --upload-certs -v=5

# After it succeeds, configure kubectl
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf \
  $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

sudo systemctl disable --now firewalld || true
# Load the modules right away
sudo modprobe overlay && sudo modprobe br_netfilter
# Persist them
echo -e "overlay\nbr_netfilter" | sudo tee /etc/modules-load.d/k8s.conf
# Required sysctl settings
sudo tee /etc/sysctl.d/k8s.conf >/dev/null <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
sudo sysctl --system
# Quick check that all three are 1
sysctl net.ipv4.ip_forward
sysctl net.bridge.bridge-nf-call-iptables
sysctl net.bridge.bridge-nf-call-ip6tables

Install Calico (from the offline file):

kubectl apply -f $OFF/calico/calico-v3.26.4.yaml
kubectl -n kube-system get pods -w

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get pods -n kube-system -o wide
kubectl get nodes -o wide

# Enable kubelet at boot (kubeadm has started it for now; enabling it is the cleaner setup)
systemctl enable --now kubelet

# Set up kubectl and verify the control plane
# If admin.conf has been generated (kubeadm writes it during init)
[ -f /etc/kubernetes/admin.conf ] && {
  mkdir -p $HOME/.kube
  cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  chown $(id -u):$(id -g) $HOME/.kube/config
}
kubectl cluster-info
kubectl get pods -n kube-system -o wide
kubectl get nodes -o wide
# The control plane is up at this point, but the node may stay NotReady until a CNI is installed

# If /etc/kubernetes/admin.conf is unexpectedly missing (rare), regenerate it:
kubeadm init phase kubeconfig admin

# Load the IPVS kernel modules (kube-proxy is configured for ipvs).
# modprobe takes one module per call; extra words would be treated as module parameters.
for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do modprobe "$m"; done
cat >/etc/modules-load.d/ipvs.conf <<'EOF'
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
EOF

# Install your CNI (Calico, offline)
Make sure CALICO_IPV4POOL_CIDR in the manifest matches the kubeadm podSubnet, 172.20.0.0/16.
The calico/node, calico/cni, and calico/kube-controllers v3.26.4 images are already imported locally, so the offline calico.yaml can be applied as is:

kubectl apply -f /path/to/calico.yaml
kubectl -n kube-system get pods -w   # wait until calico-*, coredns, and kube-proxy are all Running
kubectl get nodes                    # the status should change to Ready

3.6 Join the worker nodes

# On every worker node, repeat the system preparation, RPM installation, and image import steps, then generate the join command on the control plane:
[root@k8s-01 ~]# kubeadm token create --print-join-command
kubeadm join 192.168.30.150:6443 --token \
  fnturx.ph8jg99zgdmze81w --discovery-token-ca-cert-hash sha256:1ef5e1f3558c8f9336dd4785c0207cb837cceb37c253179e9988f03dc0c00146

# Run the printed kubeadm join ... command on each worker node to add it to the cluster.

# To add extra control-plane nodes later, upload the certificates again (this prints a new
# certificate key) and pass that key when creating the join command:
kubeadm init phase upload-certs --upload-certs
kubeadm token create --print-join-command --certificate-key <key printed by the previous command>

# Make the services persistent
systemctl enable --now kubelet
systemctl enable --now containerd
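
Before running kubeadm init on an offline node, it can help to confirm that every required image really was imported, since a missing image only surfaces later as a pull failure. The following is a minimal sketch under stated assumptions, not the article's own procedure: `missing_images` is a hypothetical helper name, and it assumes both files hold one image reference per line and that references match exactly (same registry prefix and tag).

```shell
#!/usr/bin/env bash
# Hypothetical helper (an illustration, not from the original article).
# Usage: missing_images REQUIRED_LIST PRESENT_LIST
# Prints every line of REQUIRED_LIST that has no exact match in PRESENT_LIST.
missing_images() {
  local required="$1" present="$2"
  # -F: fixed strings, -x: whole-line match, -v: invert the match.
  # grep exits 1 when nothing is missing; treat that as success, keep real errors.
  grep -Fxv -f "$present" "$required" || [ "$?" -eq 1 ]
}

# Example on an offline node (paths follow the $OFF layout used in this guide):
#   sudo ctr -n k8s.io images ls -q > /tmp/present.txt
#   cat "$OFF"/images/k8s-images.txt "$OFF"/images/calico-images.txt > /tmp/required.txt
#   missing_images /tmp/required.txt /tmp/present.txt
```

No output means every required reference is present in the k8s.io namespace; any line printed names an image that still has to be imported or retagged.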

$HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/configsudo systemctl disable --now firewalld || true # 立刻加载模块 sudo modprobe overlay && sudo modprobe br_netfilter # 持久化 echo -e "overlay\nbr_netfilter" | sudo tee /etc/modules-load.d/k8s.conf # 必要 sysctl sudo tee /etc/sysctl.d/k8s.conf >/dev/null <<'EOF' net.ipv4.ip_forward = 1 net.bridge.bridge-nf-call-iptables = 1 net.bridge.bridge-nf-call-ip6tables = 1 EOF sudo sysctl --system # 快速确认三项都为 1 sysctl net.ipv4.ip_forward sysctl net.bridge.bridge-nf-call-iptables sysctl net.bridge.bridge-nf-call-ip6tables 安装 Calico(离线文件): kubectl apply -f $OFF/calico/calico-v3.26.4.yaml kubectl -n kube-system get pods -w mkdir -p $HOME/.kube cp -i /etc/kubernetes/admin.conf $HOME/.kube/config chown $(id -u):$(id -g) $HOME/.kube/config kubectl get pods -n kube-system -o wide kubectl get nodes -o wide #kubelet 开机自启(kubeadm 已临时启动,设为自启更规范) systemctl enable --now kubelet #配好 kubectl,并验证控制面 # 生成过 admin.conf 的话(kubeadm 已经写过) [ -f /etc/kubernetes/admin.conf ] && { mkdir -p $HOME/.kube cp -i /etc/kubernetes/admin.conf $HOME/.kube/config chown $(id -u):$(id -g) $HOME/.kube/config } kubectl cluster-info kubectl get pods -n kube-system -o wide kubectl get nodes -o wide # 现在控制面起来了,但在装 CNI 前 Node 可能是 NotReady #如果意外没有 /etc/kubernetes/admin.conf(极少数情况),可补一条: kubeadm init phase kubeconfig admin #加载 IPVS 内核模块(你 kube-proxy 设了 ipvs) modprobe ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack cat >/etc/modules-load.d/ipvs.conf <<'EOF' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack EOF #安装你的 CNI(Calico 离线) 确保清单里 CALICO_IPV4POOL_CIDR 与你 kubeadm 的 podSubnet: 172.20.0.0/16 一致。 你本地已导入镜像 calico/node|cni|kube-controllers:v3.26.4,直接套用离线 calico.yaml 即可: kubectl apply -f /path/to/calico.yaml kubectl -n kube-system get pods -w # 等 calico-*、coredns、kube-proxy 全部 Running kubectl get nodes # 状态应变为 Ready 3.6 加入工作节点#在每个工作节点重复 系统准备/安装 RPM/导入镜像 的步骤,然后在控制面上生成 join 命令: [root@k8s-01 ~]# kubeadm token create --print-join-command kubeadm join 192.168.30.150:6443 --token 
fnturx.ph8jg99zgdmze81w --discovery-token-ca-cert-hash sha256:1ef5e1f3558c8f9336dd4785c0207cb837cceb37c253179e9988f03dc0c00146 #把输出的 kubeadm join ... 在各工作节点执行即可。 #拿到的命令在每个 worker 上执行即可加入集群。 #若以后要加 额外控制面节点,再执行: kubeadm init phase upload-certs --skip-certificate-key-print kubeadm token create --print-join-command --certificate-key <上一步输出的key> #持久化服务 systemctl enable --now kubelet systemctl enable --now containerd -
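Before running kubeadm join on a worker, it can help to confirm the two prerequisites this guide keeps returning to: no active swap entry in /etc/fstab, and the three sysctl keys set to 1 in /etc/sysctl.d/k8s.conf. The following is a minimal sketch, not part of the original procedure. The function names active_swap_entries and sysctl_conf_ok are made up for illustration, and both take the file path as an argument so they can be tried on a copy first.

```shell
# Hypothetical helpers (names are illustrative, not from any tool).

# Print the number of uncommented swap entries in an fstab-style file.
# Field 3 of an fstab line is the filesystem type.
active_swap_entries() {
  awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "$1"
}

# Return 0 only if all three required keys are set to 1 in a sysctl.d-style file.
sysctl_conf_ok() {
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$1" || return 1
  done
  return 0
}
```

For example, `active_swap_entries /etc/fstab` should print 0 once the swap lines are commented out, and `sysctl_conf_ok /etc/sysctl.d/k8s.conf && echo ok` should print ok. These only inspect the files; `swapon --show` and `sysctl <key>` remain the way to check the live state.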