搜索到

93

篇与

的结果

-

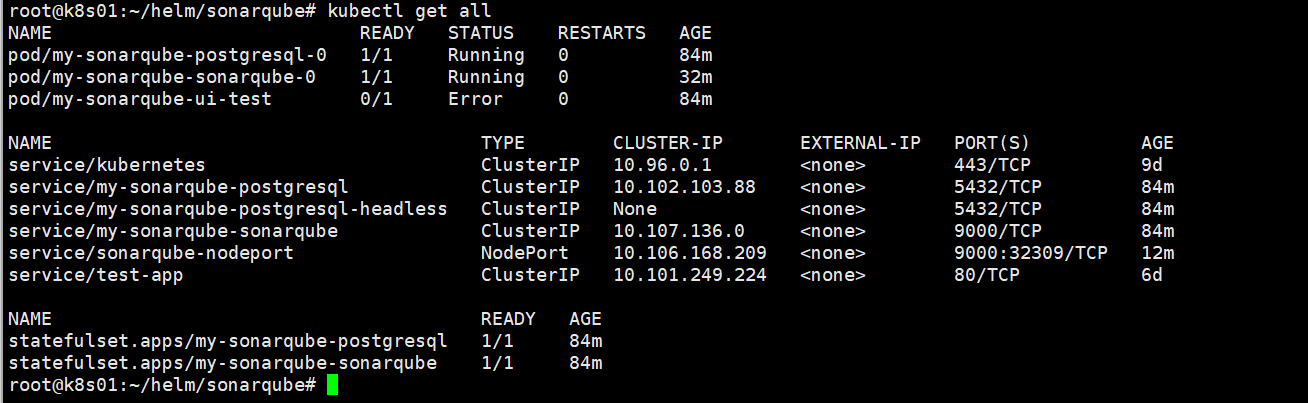

OpenTelemetry 应用埋点 一、部署示例应用 1、部署java应用apiVersion: apps/v1 kind: Deployment metadata: name: java-demo spec: selector: matchLabels: app: java-demo template: metadata: labels: app: java-demo spec: containers: - name: java-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/spring-petclinic:1.5.1 imagePullPolicy: IfNotPresent resources: limits: memory: "1Gi" # 增加内存 cpu: "500m" ports: - containerPort: 8080 --- apiVersion: v1 kind: Service metadata: name: java-demo spec: type: ClusterIP # 改为 ClusterIP,Traefik 使用服务发现 selector: app: java-demo ports: - port: 80 targetPort: 8080 --- apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: java-demo spec: entryPoints: - web # 使用 WEB 入口点 (端口 8000) routes: - match: Host(`java-demo.local.cn`) # 可以修改为您需要的域名 kind: Rule services: - name: java-demo port: 80 2、部署python应用apiVersion: apps/v1 kind: Deployment metadata: name: python-demo spec: selector: matchLabels: app: python-demo template: metadata: labels: app: python-demo spec: containers: - name: python-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/python-demoapp:latest imagePullPolicy: IfNotPresent resources: limits: memory: "500Mi" cpu: "200m" ports: - containerPort: 5000 --- apiVersion: v1 kind: Service metadata: name: python-demo spec: selector: app: python-demo ports: - port: 5000 targetPort: 5000 --- apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: python-demo spec: entryPoints: - web routes: - match: Host(`python-demo.local.com`) kind: Rule services: - name: python-demo port: 5000二、应用埋点 1、java应用自动埋点apiVersion: opentelemetry.io/v1alpha1 kind: Instrumentation # 声明资源类型为 Instrumentation(用于语言自动注入) metadata: name: java-instrumentation # Instrumentation 资源的名称(可以被 Deployment 等引用) namespace: opentelemetry spec: propagators: # 指定用于 trace 上下文传播的方式,支持多种格式 - tracecontext # W3C Trace Context(最通用的跨服务追踪格式) - baggage # 传播用户定义的上下文键值对 - b3 # Zipkin 的 B3 header(用于兼容 Zipkin 环境) sampler: # 定义采样策略(决定是否收集 trace) type: always_on # 始终采样所有请求(适合测试或调试环境) java: # image: ghcr.io/open-telemetry/opentelemetry-operator/autoinstrumentation-java:latest # 使用的 Java 自动注入 agent 镜像地址 image: harbor.cuiliangblog.cn/otel/autoinstrumentation-java:latest env: - name: OTEL_EXPORTER_OTLP_ENDPOINT value: http://center-collector.opentelemetry.svc:4318#为了启用自动检测,我们需要更新部署文件并向其添加注解。这样我们可以告诉 OpenTelemetry Operator 将 sidecar 和 java-instrumentation 注入到我们的应用程序中。修改 Deployment 配置如下: apiVersion: apps/v1 kind: Deployment metadata: name: java-demo spec: selector: matchLabels: app: java-demo template: metadata: labels: app: java-demo annotations: instrumentation.opentelemetry.io/inject-java: "opentelemetry/java-instrumentation" # 填写 Instrumentation 资源的名称 sidecar.opentelemetry.io/inject: "opentelemetry/sidecar" # 注入一个 sidecar 模式的 OpenTelemetry Collector spec: containers: - name: java-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/spring-petclinic:1.5.1 imagePullPolicy: IfNotPresent resources: limits: memory: "500Mi" cpu: "200m" ports: - containerPort: 8080#接下来更新 deployment,然后查看资源信息,java-demo 容器已经变为两个。 root@k8s01:~/helm/opentelemetry# kubectl get pods NAME READY STATUS RESTARTS AGE java-demo-5cdd74d47-vmqqx 0/2 Init:0/1 0 6s java-demo-5f4d989b88-xrzg7 1/1 Running 0 42m my-sonarqube-postgresql-0 1/1 Running 8 (2d21h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d6h python-demo-69c56c549c-jcgmj 1/1 Running 0 16m redis-5ff4857944-v2vz5 1/1 Running 5 (2d21h ago) 6d2h root@k8s01:~/helm/opentelemetry# kubectl get pods -w NAME READY STATUS RESTARTS AGE java-demo-5cdd74d47-vmqqx 0/2 PodInitializing 0 9s java-demo-5f4d989b88-xrzg7 1/1 Running 0 42m my-sonarqube-postgresql-0 1/1 Running 8 (2d21h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d6h python-demo-69c56c549c-jcgmj 1/1 Running 0 17m redis-5ff4857944-v2vz5 1/1 Running 5 (2d21h ago) 6d2h java-demo-5cdd74d47-vmqqx 2/2 Running 0 23s java-demo-5f4d989b88-xrzg7 1/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m root@k8s01:~/helm/opentelemetry# kubectl get pods -w NAME READY STATUS RESTARTS AGE java-demo-5cdd74d47-vmqqx 2/2 Running 0 28s my-sonarqube-postgresql-0 1/1 Running 8 (2d21h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d6h python-demo-69c56c549c-jcgmj 1/1 Running 0 17m redis-5ff4857944-v2vz5 1/1 Running 5 (2d21h ago) 6d2h ^Croot@k8s01:~/helm/opentelemetry# kubectl get opentelemetrycollectors -A NAMESPACE NAME MODE VERSION READY AGE IMAGE MANAGEMENT opentelemetry center deployment 0.127.0 1/1 3h22m registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 managed opentelemetry sidecar sidecar 0.127.0 3h19m managed root@k8s01:~/helm/opentelemetry# kubectl get instrumentations -A NAMESPACE NAME AGE ENDPOINT SAMPLER SAMPLER ARG opentelemetry java-instrumentation 2m26s always_on #查看 sidecar日志,已正常启动并发送 spans 数据 root@k8s01:~/helm/opentelemetry# kubectl logs java-demo-5cdd74d47-vmqqx -c otc-container 2025-06-14T15:31:35.013Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z info builders/builders.go:26 Development component. May change in the future. {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Beta component. May change in the future. {"resource": {}, "otelcol.component.id": "batch", "otelcol.component.kind": "processor", "otelcol.pipeline.id": "traces", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug otlpreceiver@v0.127.0/otlp.go:58 created signal-agnostic logger {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver"} 2025-06-14T15:31:35.021Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127.0", "NumCPU": 8} 2025-06-14T15:31:35.021Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:176 [core] original dial target is: "center-collector.opentelemetry.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:459 [core] [Channel #1]Channel created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:207 [core] [Channel #1]parsed dial target is: resolver.Target{URL:url.URL{Scheme:"passthrough", Opaque:"", User:(*url.Userinfo)(nil), Host:"", Path:"/center-collector.opentelemetry.svc:4317", RawPath:"", OmitHost:false, ForceQuery:false, RawQuery:"", Fragment:"", RawFragment:""}} {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:208 [core] [Channel #1]Channel authority set to "center-collector.opentelemetry.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.022Z info grpc@v1.72.1/resolver_wrapper.go:210 [core] [Channel #1]Resolver state updated: { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } (resolver returned new addresses) {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.022Z info grpc@v1.72.1/balancer_wrapper.go:122 [core] [Channel #1]Channel switches to new LB policy "pick_first" {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info gracefulswitch/gracefulswitch.go:194 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090] Received new config { "shuffleAddressList": false }, resolver state { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to CONNECTING{"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/balancer_wrapper.go:195 [core] [Channel #1 SubChannel #2]Subchannel created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:364 [core] [Channel #1]Channel exiting idle mode {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity change to CONNECTING {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.024Z info grpc@v1.72.1/clientconn.go:1343 [core] [Channel #1 SubChannel #2]Subchannel picks a new address "center-collector.opentelemetry.svc:4317" to connect {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.024Z info grpc@v1.72.1/server.go:690 [core] [Server #3]Server created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.024Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4317"} 2025-06-14T15:31:35.025Z info grpc@v1.72.1/server.go:886 [core] [Server #3 ListenSocket #4]ListenSocket created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.025Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4318"} 2025-06-14T15:31:35.026Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"resource": {}} 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity change to READY {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.034Z info pickfirstleaf/pickfirstleaf.go:197 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090] SubConn 0xc0008e1db0 reported connectivity state READY and the health listener is disabled. Transitioning SubConn to READY. {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to READY {"resource": {}, "grpc_log": true} root@k8s01:~/helm/opentelemetry# kubectl logs java-demo-5cdd74d47-vmqqx -c otc-container 2025-06-14T15:31:35.013Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp 2025-06-14T15:31:35.014Z info builders/builders.go:26 Development component. May change in the future. {"resource": {aces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Beta component. May change in the future. {"resource": {}, "oteles", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp 2025-06-14T15:31:35.014Z debug otlpreceiver@v0.127.0/otlp.go:58 created signal-agnostic logger {"resource": {}, "otel 2025-06-14T15:31:35.021Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127.0", 2025-06-14T15:31:35.021Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:176 [core] original dial target is: "center-collector.opentelemetr 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:459 [core] [Channel #1]Channel created {"resource": {}, "grpc 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:207 [core] [Channel #1]parsed dial target is: resolver.Target{URL:ector.opentelemetry.svc:4317", RawPath:"", OmitHost:false, ForceQuery:false, RawQuery:"", Fragment:"", RawFragment:""}} {"resource": { 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:208 [core] [Channel #1]Channel authority set to "center-collector. 2025-06-14T15:31:35.022Z info grpc@v1.72.1/resolver_wrapper.go:210 [core] [Channel #1]Resolver state updated: { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } (resolver returned new addresses) {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.022Z info grpc@v1.72.1/balancer_wrapper.go:122 [core] [Channel #1]Channel switches to new LB policy " 2025-06-14T15:31:35.023Z info gracefulswitch/gracefulswitch.go:194 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090] "shuffleAddressList": false }, resolver state { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to CONNECTING 2025-06-14T15:31:35.023Z info grpc@v1.72.1/balancer_wrapper.go:195 [core] [Channel #1 SubChannel #2]Subchannel created 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:364 [core] [Channel #1]Channel exiting idle mode {"resource": { 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity chang 2025-06-14T15:31:35.024Z info grpc@v1.72.1/clientconn.go:1343 [core] [Channel #1 SubChannel #2]Subchannel picks a new addres 2025-06-14T15:31:35.024Z info grpc@v1.72.1/server.go:690 [core] [Server #3]Server created {"resource": {}, "grpc 2025-06-14T15:31:35.024Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.comp 2025-06-14T15:31:35.025Z info grpc@v1.72.1/server.go:886 [core] [Server #3 ListenSocket #4]ListenSocket created {"reso 2025-06-14T15:31:35.025Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.comp 2025-06-14T15:31:35.026Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"reso 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity chang 2025-06-14T15:31:35.034Z info pickfirstleaf/pickfirstleaf.go:197 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090]ansitioning SubConn to READY. {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to READY {"reso #查看collector 日志,已经收到 traces 数据 root@k8s01:~/helm/opentelemetry# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 3h24m root@k8s01:~/helm/opentelemetry# kubectl get -n opentelemetry pods NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 3h25m root@k8s01:~/helm/opentelemetry# kubectl logs -n opentelemetry center-collector-78f7bbdf45-j798s 2025-06-14T12:09:21.290Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T12:09:21.291Z info builders/builders.go:26 Development component. May change in the future. {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T12:09:21.294Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127.0", "NumCPU": 8} 2025-06-14T12:09:21.294Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T12:09:21.294Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4317"} 2025-06-14T12:09:21.295Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4318"} 2025-06-14T12:09:21.295Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"resource": {}} root@k8s01:~/helm/opentelemetry# 2、python应用自动埋点与 java 应用类似,python 应用同样也支持自动埋点, OpenTelemetry 提供了 opentelemetry-instrument CLI 工具,在启动 Python 应用时通过 sitecustomize 或环境变量注入自动 instrumentation。 我们先创建一个java-instrumentation 资源apiVersion: opentelemetry.io/v1alpha1 kind: Instrumentation # 声明资源类型为 Instrumentation(用于语言自动注入) metadata: name: python-instrumentation # Instrumentation 资源的名称(可以被 Deployment 等引用) namespace: opentelemetry spec: propagators: # 指定用于 trace 上下文传播的方式,支持多种格式 - tracecontext # W3C Trace Context(最通用的跨服务追踪格式) - baggage # 传播用户定义的上下文键值对 - b3 # Zipkin 的 B3 header(用于兼容 Zipkin 环境) sampler: # 定义采样策略(决定是否收集 trace) type: always_on # 始终采样所有请求(适合测试或调试环境) python: image: registry.cn-guangzhou.aliyuncs.com/xingcangku/autoinstrumentation-python:latest env: - name: OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED # 启用日志的自动检测 value: "true" - name: OTEL_PYTHON_LOG_CORRELATION # 在日志中启用跟踪上下文注入 value: "true" - name: OTEL_EXPORTER_OTLP_ENDPOINT value: http://center-collector.opentelemetry.svc:4318^Croot@k8s01:~/helm/opentelemetry# cat new-python-demo.yaml apiVersion: apps/v1 kind: Deployment metadata: name: python-demo spec: selector: matchLabels: app: python-demo template: metadata: labels: app: python-demo annotations: instrumentation.opentelemetry.io/inject-python: "opentelemetry/python-instrumentation" # 填写 Instrumentation 资源的名称 sidecar.opentelemetry.io/inject: "opentelemetry/sidecar" # 注入一个 sidecar 模式的 OpenTelemetry Collector spec: containers: - name: pyhton-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/python-demoapp:latest imagePullPolicy: IfNotPresent resources: limits: memory: "500Mi" cpu: "200m" ports: - containerPort: 5000 oot@k8s03:~# kubectl get pods NAME READY STATUS RESTARTS AGE java-demo-5559f949b9-74p68 2/2 Running 0 2m14s java-demo-5559f949b9-kwgpc 0/2 Terminating 0 14m my-sonarqube-postgresql-0 1/1 Running 8 (2d22h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d7h python-demo-599fc7f8d6-lbhnr 2/2 Running 0 20m redis-5ff4857944-v2vz5 1/1 Running 5 (2d22h ago) 6d3h root@k8s03:~# kubectl logs python-demo-599fc7f8d6-lbhnr -c otc-container 2025-06-14T15:57:12.951Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T15:57:12.952Z info builders/builders.go:26 Development component. May change in the future. {"resource{}, "otelcol.component.id": "debug", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "p", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug builders/builders.go:24 Beta component. May change in the future. {"resource": {}, "lcol.component.id": "batch", "otelcol.component.kind": "processor", "otelcol.pipeline.id": "traces", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "p", "otelcol.component.kind": "receiver", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug otlpreceiver@v0.127.0/otlp.go:58 created signal-agnostic logger {"resource": {}, "lcol.component.id": "otlp", "otelcol.component.kind": "receiver"} 2025-06-14T15:57:12.953Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127, "NumCPU": 8} 2025-06-14T15:57:12.953Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T15:57:12.953Z info grpc@v1.72.1/clientconn.go:176 [core] original dial target is: "center-collector.opentelery.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:459 [core] [Channel #1]Channel created {"resource": {}, "c_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:207 [core] [Channel #1]parsed dial target is: resolver.Target{:url.URL{Scheme:"passthrough", Opaque:"", User:(*url.Userinfo)(nil), Host:"", Path:"/center-collector.opentelemetry.svc:4317", Rawh:"", OmitHost:false, ForceQuery:false, RawQuery:"", Fragment:"", RawFragment:""}} {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:208 [core] [Channel #1]Channel authority set to "center-collec.opentelemetry.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/resolver_wrapper.go:210 [core] [Channel #1]Resolver state updated: { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } (resolver returned new addresses) {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/balancer_wrapper.go:122 [core] [Channel #1]Channel switches to new LB poli"pick_first" {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info gracefulswitch/gracefulswitch.go:194 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc00046e] Received new config { "shuffleAddressList": false }, resolver state { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to CONNECTI"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/balancer_wrapper.go:195 [core] [Channel #1 SubChannel #2]Subchannel create"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:364 [core] [Channel #1]Channel exiting idle mode {"resource{}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity cge to CONNECTING {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:1343 [core] [Channel #1 SubChannel #2]Subchannel picks a new adss "center-collector.opentelemetry.svc:4317" to connect {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/server.go:690 [core] [Server #3]Server created {"resource": {}, "c_log": true} 2025-06-14T15:57:12.954Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.ponent.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4317"} 2025-06-14T15:57:12.954Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.ponent.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4318"} 2025-06-14T15:57:12.954Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"ource": {}} 2025-06-14T15:57:12.955Z info grpc@v1.72.1/server.go:886 [core] [Server #3 ListenSocket #4]ListenSocket created {"ource": {}, "grpc_log": true} 2025-06-14T15:57:12.962Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity cge to READY {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.962Z info pickfirstleaf/pickfirstleaf.go:197 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc00046e] SubConn 0xc0005fccd0 reported connectivity state READY and the health listener is disabled. Transitioning SubConn to READY. {"ource": {}, "grpc_log": true} 2025-06-14T15:57:12.962Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to READY {"ource": {}, "grpc_log": true} root@k8s03:~# root@k8s03:~# kubectl logs -n opentelemetry center-collector-78f7bbdf45-j798s 2025-06-14T12:09:21.290Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T12:09:21.291Z info builders/builders.go:26 Development component. May change in the future. {"resourceaces"} 2025-06-14T12:09:21.294Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127 2025-06-14T12:09:21.294Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T12:09:21.294Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol. 2025-06-14T12:09:21.295Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol. 2025-06-14T12:09:21.295Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {" 2025-06-14T16:05:11.811Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:05:16.636Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:05:26.894Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:18:11.294Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:18:21.350Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor root@k8s03:~#

OpenTelemetry 应用埋点 一、部署示例应用 1、部署java应用apiVersion: apps/v1 kind: Deployment metadata: name: java-demo spec: selector: matchLabels: app: java-demo template: metadata: labels: app: java-demo spec: containers: - name: java-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/spring-petclinic:1.5.1 imagePullPolicy: IfNotPresent resources: limits: memory: "1Gi" # 增加内存 cpu: "500m" ports: - containerPort: 8080 --- apiVersion: v1 kind: Service metadata: name: java-demo spec: type: ClusterIP # 改为 ClusterIP,Traefik 使用服务发现 selector: app: java-demo ports: - port: 80 targetPort: 8080 --- apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: java-demo spec: entryPoints: - web # 使用 WEB 入口点 (端口 8000) routes: - match: Host(`java-demo.local.cn`) # 可以修改为您需要的域名 kind: Rule services: - name: java-demo port: 80 2、部署python应用apiVersion: apps/v1 kind: Deployment metadata: name: python-demo spec: selector: matchLabels: app: python-demo template: metadata: labels: app: python-demo spec: containers: - name: python-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/python-demoapp:latest imagePullPolicy: IfNotPresent resources: limits: memory: "500Mi" cpu: "200m" ports: - containerPort: 5000 --- apiVersion: v1 kind: Service metadata: name: python-demo spec: selector: app: python-demo ports: - port: 5000 targetPort: 5000 --- apiVersion: traefik.io/v1alpha1 kind: IngressRoute metadata: name: python-demo spec: entryPoints: - web routes: - match: Host(`python-demo.local.com`) kind: Rule services: - name: python-demo port: 5000二、应用埋点 1、java应用自动埋点apiVersion: opentelemetry.io/v1alpha1 kind: Instrumentation # 声明资源类型为 Instrumentation(用于语言自动注入) metadata: name: java-instrumentation # Instrumentation 资源的名称(可以被 Deployment 等引用) namespace: opentelemetry spec: propagators: # 指定用于 trace 上下文传播的方式,支持多种格式 - tracecontext # W3C Trace Context(最通用的跨服务追踪格式) - baggage # 传播用户定义的上下文键值对 - b3 # Zipkin 的 B3 header(用于兼容 Zipkin 环境) sampler: # 定义采样策略(决定是否收集 trace) type: always_on # 始终采样所有请求(适合测试或调试环境) java: # image: ghcr.io/open-telemetry/opentelemetry-operator/autoinstrumentation-java:latest # 使用的 Java 自动注入 agent 镜像地址 image: harbor.cuiliangblog.cn/otel/autoinstrumentation-java:latest env: - name: OTEL_EXPORTER_OTLP_ENDPOINT value: http://center-collector.opentelemetry.svc:4318#为了启用自动检测,我们需要更新部署文件并向其添加注解。这样我们可以告诉 OpenTelemetry Operator 将 sidecar 和 java-instrumentation 注入到我们的应用程序中。修改 Deployment 配置如下: apiVersion: apps/v1 kind: Deployment metadata: name: java-demo spec: selector: matchLabels: app: java-demo template: metadata: labels: app: java-demo annotations: instrumentation.opentelemetry.io/inject-java: "opentelemetry/java-instrumentation" # 填写 Instrumentation 资源的名称 sidecar.opentelemetry.io/inject: "opentelemetry/sidecar" # 注入一个 sidecar 模式的 OpenTelemetry Collector spec: containers: - name: java-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/spring-petclinic:1.5.1 imagePullPolicy: IfNotPresent resources: limits: memory: "500Mi" cpu: "200m" ports: - containerPort: 8080#接下来更新 deployment,然后查看资源信息,java-demo 容器已经变为两个。 root@k8s01:~/helm/opentelemetry# kubectl get pods NAME READY STATUS RESTARTS AGE java-demo-5cdd74d47-vmqqx 0/2 Init:0/1 0 6s java-demo-5f4d989b88-xrzg7 1/1 Running 0 42m my-sonarqube-postgresql-0 1/1 Running 8 (2d21h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d6h python-demo-69c56c549c-jcgmj 1/1 Running 0 16m redis-5ff4857944-v2vz5 1/1 Running 5 (2d21h ago) 6d2h root@k8s01:~/helm/opentelemetry# kubectl get pods -w NAME READY STATUS RESTARTS AGE java-demo-5cdd74d47-vmqqx 0/2 PodInitializing 0 9s java-demo-5f4d989b88-xrzg7 1/1 Running 0 42m my-sonarqube-postgresql-0 1/1 Running 8 (2d21h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d6h python-demo-69c56c549c-jcgmj 1/1 Running 0 17m redis-5ff4857944-v2vz5 1/1 Running 5 (2d21h ago) 6d2h java-demo-5cdd74d47-vmqqx 2/2 Running 0 23s java-demo-5f4d989b88-xrzg7 1/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m java-demo-5f4d989b88-xrzg7 0/1 Terminating 0 43m root@k8s01:~/helm/opentelemetry# kubectl get pods -w NAME READY STATUS RESTARTS AGE java-demo-5cdd74d47-vmqqx 2/2 Running 0 28s my-sonarqube-postgresql-0 1/1 Running 8 (2d21h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d6h python-demo-69c56c549c-jcgmj 1/1 Running 0 17m redis-5ff4857944-v2vz5 1/1 Running 5 (2d21h ago) 6d2h ^Croot@k8s01:~/helm/opentelemetry# kubectl get opentelemetrycollectors -A NAMESPACE NAME MODE VERSION READY AGE IMAGE MANAGEMENT opentelemetry center deployment 0.127.0 1/1 3h22m registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 managed opentelemetry sidecar sidecar 0.127.0 3h19m managed root@k8s01:~/helm/opentelemetry# kubectl get instrumentations -A NAMESPACE NAME AGE ENDPOINT SAMPLER SAMPLER ARG opentelemetry java-instrumentation 2m26s always_on #查看 sidecar日志,已正常启动并发送 spans 数据 root@k8s01:~/helm/opentelemetry# kubectl logs java-demo-5cdd74d47-vmqqx -c otc-container 2025-06-14T15:31:35.013Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z info builders/builders.go:26 Development component. May change in the future. {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Beta component. May change in the future. {"resource": {}, "otelcol.component.id": "batch", "otelcol.component.kind": "processor", "otelcol.pipeline.id": "traces", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug otlpreceiver@v0.127.0/otlp.go:58 created signal-agnostic logger {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver"} 2025-06-14T15:31:35.021Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127.0", "NumCPU": 8} 2025-06-14T15:31:35.021Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:176 [core] original dial target is: "center-collector.opentelemetry.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:459 [core] [Channel #1]Channel created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:207 [core] [Channel #1]parsed dial target is: resolver.Target{URL:url.URL{Scheme:"passthrough", Opaque:"", User:(*url.Userinfo)(nil), Host:"", Path:"/center-collector.opentelemetry.svc:4317", RawPath:"", OmitHost:false, ForceQuery:false, RawQuery:"", Fragment:"", RawFragment:""}} {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:208 [core] [Channel #1]Channel authority set to "center-collector.opentelemetry.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.022Z info grpc@v1.72.1/resolver_wrapper.go:210 [core] [Channel #1]Resolver state updated: { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } (resolver returned new addresses) {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.022Z info grpc@v1.72.1/balancer_wrapper.go:122 [core] [Channel #1]Channel switches to new LB policy "pick_first" {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info gracefulswitch/gracefulswitch.go:194 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090] Received new config { "shuffleAddressList": false }, resolver state { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to CONNECTING{"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/balancer_wrapper.go:195 [core] [Channel #1 SubChannel #2]Subchannel created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:364 [core] [Channel #1]Channel exiting idle mode {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity change to CONNECTING {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.024Z info grpc@v1.72.1/clientconn.go:1343 [core] [Channel #1 SubChannel #2]Subchannel picks a new address "center-collector.opentelemetry.svc:4317" to connect {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.024Z info grpc@v1.72.1/server.go:690 [core] [Server #3]Server created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.024Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4317"} 2025-06-14T15:31:35.025Z info grpc@v1.72.1/server.go:886 [core] [Server #3 ListenSocket #4]ListenSocket created {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.025Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4318"} 2025-06-14T15:31:35.026Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"resource": {}} 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity change to READY {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.034Z info pickfirstleaf/pickfirstleaf.go:197 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090] SubConn 0xc0008e1db0 reported connectivity state READY and the health listener is disabled. Transitioning SubConn to READY. {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to READY {"resource": {}, "grpc_log": true} root@k8s01:~/helm/opentelemetry# kubectl logs java-demo-5cdd74d47-vmqqx -c otc-container 2025-06-14T15:31:35.013Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp 2025-06-14T15:31:35.014Z info builders/builders.go:26 Development component. May change in the future. {"resource": {aces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Beta component. May change in the future. {"resource": {}, "oteles", "otelcol.signal": "traces"} 2025-06-14T15:31:35.014Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "otlp 2025-06-14T15:31:35.014Z debug otlpreceiver@v0.127.0/otlp.go:58 created signal-agnostic logger {"resource": {}, "otel 2025-06-14T15:31:35.021Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127.0", 2025-06-14T15:31:35.021Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:176 [core] original dial target is: "center-collector.opentelemetr 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:459 [core] [Channel #1]Channel created {"resource": {}, "grpc 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:207 [core] [Channel #1]parsed dial target is: resolver.Target{URL:ector.opentelemetry.svc:4317", RawPath:"", OmitHost:false, ForceQuery:false, RawQuery:"", Fragment:"", RawFragment:""}} {"resource": { 2025-06-14T15:31:35.021Z info grpc@v1.72.1/clientconn.go:208 [core] [Channel #1]Channel authority set to "center-collector. 2025-06-14T15:31:35.022Z info grpc@v1.72.1/resolver_wrapper.go:210 [core] [Channel #1]Resolver state updated: { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } (resolver returned new addresses) {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.022Z info grpc@v1.72.1/balancer_wrapper.go:122 [core] [Channel #1]Channel switches to new LB policy " 2025-06-14T15:31:35.023Z info gracefulswitch/gracefulswitch.go:194 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090] "shuffleAddressList": false }, resolver state { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to CONNECTING 2025-06-14T15:31:35.023Z info grpc@v1.72.1/balancer_wrapper.go:195 [core] [Channel #1 SubChannel #2]Subchannel created 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:364 [core] [Channel #1]Channel exiting idle mode {"resource": { 2025-06-14T15:31:35.023Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity chang 2025-06-14T15:31:35.024Z info grpc@v1.72.1/clientconn.go:1343 [core] [Channel #1 SubChannel #2]Subchannel picks a new addres 2025-06-14T15:31:35.024Z info grpc@v1.72.1/server.go:690 [core] [Server #3]Server created {"resource": {}, "grpc 2025-06-14T15:31:35.024Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.comp 2025-06-14T15:31:35.025Z info grpc@v1.72.1/server.go:886 [core] [Server #3 ListenSocket #4]ListenSocket created {"reso 2025-06-14T15:31:35.025Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.comp 2025-06-14T15:31:35.026Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"reso 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity chang 2025-06-14T15:31:35.034Z info pickfirstleaf/pickfirstleaf.go:197 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc000bc6090]ansitioning SubConn to READY. {"resource": {}, "grpc_log": true} 2025-06-14T15:31:35.034Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to READY {"reso #查看collector 日志,已经收到 traces 数据 root@k8s01:~/helm/opentelemetry# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 3h24m root@k8s01:~/helm/opentelemetry# kubectl get -n opentelemetry pods NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 3h25m root@k8s01:~/helm/opentelemetry# kubectl logs -n opentelemetry center-collector-78f7bbdf45-j798s 2025-06-14T12:09:21.290Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T12:09:21.291Z info builders/builders.go:26 Development component. May change in the future. {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T12:09:21.294Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127.0", "NumCPU": 8} 2025-06-14T12:09:21.294Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T12:09:21.294Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4317"} 2025-06-14T12:09:21.295Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4318"} 2025-06-14T12:09:21.295Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"resource": {}} root@k8s01:~/helm/opentelemetry# 2、python应用自动埋点与 java 应用类似,python 应用同样也支持自动埋点, OpenTelemetry 提供了 opentelemetry-instrument CLI 工具,在启动 Python 应用时通过 sitecustomize 或环境变量注入自动 instrumentation。 我们先创建一个java-instrumentation 资源apiVersion: opentelemetry.io/v1alpha1 kind: Instrumentation # 声明资源类型为 Instrumentation(用于语言自动注入) metadata: name: python-instrumentation # Instrumentation 资源的名称(可以被 Deployment 等引用) namespace: opentelemetry spec: propagators: # 指定用于 trace 上下文传播的方式,支持多种格式 - tracecontext # W3C Trace Context(最通用的跨服务追踪格式) - baggage # 传播用户定义的上下文键值对 - b3 # Zipkin 的 B3 header(用于兼容 Zipkin 环境) sampler: # 定义采样策略(决定是否收集 trace) type: always_on # 始终采样所有请求(适合测试或调试环境) python: image: registry.cn-guangzhou.aliyuncs.com/xingcangku/autoinstrumentation-python:latest env: - name: OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED # 启用日志的自动检测 value: "true" - name: OTEL_PYTHON_LOG_CORRELATION # 在日志中启用跟踪上下文注入 value: "true" - name: OTEL_EXPORTER_OTLP_ENDPOINT value: http://center-collector.opentelemetry.svc:4318^Croot@k8s01:~/helm/opentelemetry# cat new-python-demo.yaml apiVersion: apps/v1 kind: Deployment metadata: name: python-demo spec: selector: matchLabels: app: python-demo template: metadata: labels: app: python-demo annotations: instrumentation.opentelemetry.io/inject-python: "opentelemetry/python-instrumentation" # 填写 Instrumentation 资源的名称 sidecar.opentelemetry.io/inject: "opentelemetry/sidecar" # 注入一个 sidecar 模式的 OpenTelemetry Collector spec: containers: - name: pyhton-demo image: registry.cn-guangzhou.aliyuncs.com/xingcangku/python-demoapp:latest imagePullPolicy: IfNotPresent resources: limits: memory: "500Mi" cpu: "200m" ports: - containerPort: 5000 oot@k8s03:~# kubectl get pods NAME READY STATUS RESTARTS AGE java-demo-5559f949b9-74p68 2/2 Running 0 2m14s java-demo-5559f949b9-kwgpc 0/2 Terminating 0 14m my-sonarqube-postgresql-0 1/1 Running 8 (2d22h ago) 9d my-sonarqube-sonarqube-0 0/1 Pending 0 6d7h python-demo-599fc7f8d6-lbhnr 2/2 Running 0 20m redis-5ff4857944-v2vz5 1/1 Running 5 (2d22h ago) 6d3h root@k8s03:~# kubectl logs python-demo-599fc7f8d6-lbhnr -c otc-container 2025-06-14T15:57:12.951Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T15:57:12.952Z info builders/builders.go:26 Development component. May change in the future. {"resource{}, "otelcol.component.id": "debug", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "p", "otelcol.component.kind": "exporter", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug builders/builders.go:24 Beta component. May change in the future. {"resource": {}, "lcol.component.id": "batch", "otelcol.component.kind": "processor", "otelcol.pipeline.id": "traces", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug builders/builders.go:24 Stable component. {"resource": {}, "otelcol.component.id": "p", "otelcol.component.kind": "receiver", "otelcol.signal": "traces"} 2025-06-14T15:57:12.952Z debug otlpreceiver@v0.127.0/otlp.go:58 created signal-agnostic logger {"resource": {}, "lcol.component.id": "otlp", "otelcol.component.kind": "receiver"} 2025-06-14T15:57:12.953Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127, "NumCPU": 8} 2025-06-14T15:57:12.953Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T15:57:12.953Z info grpc@v1.72.1/clientconn.go:176 [core] original dial target is: "center-collector.opentelery.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:459 [core] [Channel #1]Channel created {"resource": {}, "c_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:207 [core] [Channel #1]parsed dial target is: resolver.Target{:url.URL{Scheme:"passthrough", Opaque:"", User:(*url.Userinfo)(nil), Host:"", Path:"/center-collector.opentelemetry.svc:4317", Rawh:"", OmitHost:false, ForceQuery:false, RawQuery:"", Fragment:"", RawFragment:""}} {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:208 [core] [Channel #1]Channel authority set to "center-collec.opentelemetry.svc:4317" {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/resolver_wrapper.go:210 [core] [Channel #1]Resolver state updated: { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } (resolver returned new addresses) {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/balancer_wrapper.go:122 [core] [Channel #1]Channel switches to new LB poli"pick_first" {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info gracefulswitch/gracefulswitch.go:194 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc00046e] Received new config { "shuffleAddressList": false }, resolver state { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Endpoints": [ { "Addresses": [ { "Addr": "center-collector.opentelemetry.svc:4317", "ServerName": "", "Attributes": null, "BalancerAttributes": null, "Metadata": null } ], "Attributes": null } ], "ServiceConfig": null, "Attributes": null } {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to CONNECTI"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/balancer_wrapper.go:195 [core] [Channel #1 SubChannel #2]Subchannel create"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:364 [core] [Channel #1]Channel exiting idle mode {"resource{}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity cge to CONNECTING {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/clientconn.go:1343 [core] [Channel #1 SubChannel #2]Subchannel picks a new adss "center-collector.opentelemetry.svc:4317" to connect {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.954Z info grpc@v1.72.1/server.go:690 [core] [Server #3]Server created {"resource": {}, "c_log": true} 2025-06-14T15:57:12.954Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol.ponent.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4317"} 2025-06-14T15:57:12.954Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol.ponent.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "0.0.0.0:4318"} 2025-06-14T15:57:12.954Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {"ource": {}} 2025-06-14T15:57:12.955Z info grpc@v1.72.1/server.go:886 [core] [Server #3 ListenSocket #4]ListenSocket created {"ource": {}, "grpc_log": true} 2025-06-14T15:57:12.962Z info grpc@v1.72.1/clientconn.go:1224 [core] [Channel #1 SubChannel #2]Subchannel Connectivity cge to READY {"resource": {}, "grpc_log": true} 2025-06-14T15:57:12.962Z info pickfirstleaf/pickfirstleaf.go:197 [pick-first-leaf-lb] [pick-first-leaf-lb 0xc00046e] SubConn 0xc0005fccd0 reported connectivity state READY and the health listener is disabled. Transitioning SubConn to READY. {"ource": {}, "grpc_log": true} 2025-06-14T15:57:12.962Z info grpc@v1.72.1/clientconn.go:563 [core] [Channel #1]Channel Connectivity change to READY {"ource": {}, "grpc_log": true} root@k8s03:~# root@k8s03:~# kubectl logs -n opentelemetry center-collector-78f7bbdf45-j798s 2025-06-14T12:09:21.290Z info service@v0.127.0/service.go:199 Setting up own telemetry... {"resource": {}} 2025-06-14T12:09:21.291Z info builders/builders.go:26 Development component. May change in the future. {"resourceaces"} 2025-06-14T12:09:21.294Z info service@v0.127.0/service.go:266 Starting otelcol... {"resource": {}, "Version": "0.127 2025-06-14T12:09:21.294Z info extensions/extensions.go:41 Starting extensions... {"resource": {}} 2025-06-14T12:09:21.294Z info otlpreceiver@v0.127.0/otlp.go:116 Starting GRPC server {"resource": {}, "otelcol. 2025-06-14T12:09:21.295Z info otlpreceiver@v0.127.0/otlp.go:173 Starting HTTP server {"resource": {}, "otelcol. 2025-06-14T12:09:21.295Z info service@v0.127.0/service.go:289 Everything is ready. Begin running and processing data. {" 2025-06-14T16:05:11.811Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:05:16.636Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:05:26.894Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:18:11.294Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor 2025-06-14T16:18:21.350Z info Traces {"resource": {}, "otelcol.component.id": "debug", "otelcol.component.kind": "expor root@k8s03:~# -

OpenTelemetry部署 建议使用 OpenTelemetry Operator 来部署,因为它可以帮助我们轻松部署和管理 OpenTelemetry 收集器,还可以自动检测应用程序。具体可参考文档https://opentelemetry.io/docs/platforms/kubernetes/operator/一、部署cert-manager因为 Operator 使用了 Admission Webhook 通过 HTTP 回调机制对资源进行校验/修改。Kubernetes 要求 Webhook 服务必须使用 TLS,因此 Operator 需要为其 webhook server 签发证书,所以需要先安装cert-manager。# wget https://github.com/cert-manager/cert-manager/releases/latest/download/cert-manager.yaml # kubectl apply -f cert-manager.yaml root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get -n cert-manager pod NAME READY STATUS RESTARTS AGE cert-manager-7bd494778-gs44k 1/1 Running 0 37s cert-manager-cainjector-76474c8c48-w9r5p 1/1 Running 0 37s cert-manager-webhook-6797c49f67-thvcz 1/1 Running 0 37s root@k8s01:~/helm/opentelemetry/cert-manager# 二、部署Operator在 Kubernetes 上使用 OpenTelemetry,主要就是部署 OpenTelemetry 收集器。# wget https://github.com/open-telemetry/opentelemetry-operator/releases/latest/download/opentelemetry-operator.yaml # kubectl apply -f opentelemetry-operator.yaml # kubectl get pod -n opentelemetry-operator-system NAME READY STATUS RESTARTS AGE opentelemetry-operator-controller-manager-6d94c5db75-cz957 2/2 Running 0 74s # kubectl get crd |grep opentelemetry instrumentations.opentelemetry.io 2025-04-21T09:48:53Z opampbridges.opentelemetry.io 2025-04-21T09:48:54Z opentelemetrycollectors.opentelemetry.io 2025-04-21T09:48:54Z targetallocators.opentelemetry.io 2025-04-21T09:48:54Zroot@k8s01:~/helm/opentelemetry/cert-manager# kubectl apply -f opentelemetry-operator.yaml namespace/opentelemetry-operator-system created customresourcedefinition.apiextensions.k8s.io/instrumentations.opentelemetry.io created customresourcedefinition.apiextensions.k8s.io/opampbridges.opentelemetry.io created customresourcedefinition.apiextensions.k8s.io/opentelemetrycollectors.opentelemetry.io created customresourcedefinition.apiextensions.k8s.io/targetallocators.opentelemetry.io created serviceaccount/opentelemetry-operator-controller-manager created role.rbac.authorization.k8s.io/opentelemetry-operator-leader-election-role created clusterrole.rbac.authorization.k8s.io/opentelemetry-operator-manager-role created clusterrole.rbac.authorization.k8s.io/opentelemetry-operator-metrics-reader created clusterrole.rbac.authorization.k8s.io/opentelemetry-operator-proxy-role created rolebinding.rbac.authorization.k8s.io/opentelemetry-operator-leader-election-rolebinding created clusterrolebinding.rbac.authorization.k8s.io/opentelemetry-operator-manager-rolebinding created clusterrolebinding.rbac.authorization.k8s.io/opentelemetry-operator-proxy-rolebinding created service/opentelemetry-operator-controller-manager-metrics-service created service/opentelemetry-operator-webhook-service created deployment.apps/opentelemetry-operator-controller-manager created Warning: spec.privateKey.rotationPolicy: In cert-manager >= v1.18.0, the default value changed from `Never` to `Always`. certificate.cert-manager.io/opentelemetry-operator-serving-cert created issuer.cert-manager.io/opentelemetry-operator-selfsigned-issuer created mutatingwebhookconfiguration.admissionregistration.k8s.io/opentelemetry-operator-mutating-webhook-configuration created validatingwebhookconfiguration.admissionregistration.k8s.io/opentelemetry-operator-validating-webhook-configuration created root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pods -n opentelemetry-operator-system NAME READY STATUS RESTARTS AGE opentelemetry-operator-controller-manager-f78fc55f7-xtjk2 2/2 Running 0 107s root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get crd |grep opentelemetry instrumentations.opentelemetry.io 2025-06-14T11:30:01Z opampbridges.opentelemetry.io 2025-06-14T11:30:01Z opentelemetrycollectors.opentelemetry.io 2025-06-14T11:30:02Z targetallocators.opentelemetry.io 2025-06-14T11:30:02Z三、部署Collector(中心)接下来我们部署一个精简版的 OpenTelemetry Collector,用于接收 OTLP 格式的 trace 数据,通过 gRPC 或 HTTP 协议接入,经过内存控制与批处理后,打印到日志中以供调试使用。 root@k8s01:~/helm/opentelemetry/cert-manager# cat center-collector.yaml apiVersion: opentelemetry.io/v1beta1 kind: OpenTelemetryCollector # 元数据定义部分 metadata: name: center # Collector 的名称为 center namespace: opentelemetry # 具体的配置内容 spec: image: registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 replicas: 1 # 设置副本数量为1 config: # 定义 Collector 配置 receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs) otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器 protocols: # 启用哪些协议来接收数据 grpc: endpoint: 0.0.0.0:4317 # 启用 gRPC 协议 http: endpoint: 0.0.0.0:4318 # 启用 HTTP 协议 processors: # 处理器,用于处理收集到的数据 batch: {} # 批处理器,用于将数据分批发送,提高效率 exporters: # 导出器,用于将处理后的数据发送到后端系统 debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试) service: # 服务配置部分 pipelines: # 定义处理管道 traces: # 定义 trace 类型的管道 receivers: [otlp] # 接收器为 OTLP processors: [batch] # 使用批处理器 exporters: [debug] # 将数据打印到终端 root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 43s center-collector-7b7b8b9b97-qwhdr 0/1 Terminating 0 12m root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get svc -n opentelemetry NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE center-collector ClusterIP 10.105.241.233 <none> 4317/TCP,4318/TCP 49s center-collector-headless ClusterIP None <none> 4317/TCP,4318/TCP 49s center-collector-monitoring ClusterIP 10.96.61.65 <none> 8888/TCP 49s root@k8s01:~/helm/opentelemetry/cert-manager# 四、部署Collector(代理)我们使用 Sidecar 模式部署 OpenTelemetry 代理。该代理会将应用程序的追踪发送到我们刚刚部署的中心OpenTelemetry 收集器。root@k8s01:~/helm/opentelemetry/cert-manager# cat center-collector.yaml apiVersion: opentelemetry.io/v1beta1 kind: OpenTelemetryCollector # 元数据定义部分 metadata: name: center # Collector 的名称为 center namespace: opentelemetry # 具体的配置内容 spec: image: registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 replicas: 1 # 设置副本数量为1 config: # 定义 Collector 配置 receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs) otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器 protocols: # 启用哪些协议来接收数据 grpc: endpoint: 0.0.0.0:4317 # 启用 gRPC 协议 http: endpoint: 0.0.0.0:4318 # 启用 HTTP 协议 processors: # 处理器,用于处理收集到的数据 batch: {} # 批处理器,用于将数据分批发送,提高效率 exporters: # 导出器,用于将处理后的数据发送到后端系统 debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试) service: # 服务配置部分 pipelines: # 定义处理管道 traces: # 定义 trace 类型的管道 receivers: [otlp] # 接收器为 OTLP processors: [batch] # 使用批处理器 exporters: [debug] # 将数据打印到终端 root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 43s center-collector-7b7b8b9b97-qwhdr 0/1 Terminating 0 12m root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get svc -n opentelemetry NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE center-collector ClusterIP 10.105.241.233 <none> 4317/TCP,4318/TCP 49s center-collector-headless ClusterIP None <none> 4317/TCP,4318/TCP 49s center-collector-monitoring ClusterIP 10.96.61.65 <none> 8888/TCP 49s root@k8s01:~/helm/opentelemetry/cert-manager# vi sidecar-collector.yaml root@k8s01:~/helm/opentelemetry/cert-manager# kubectl apply -f sidecar-collector.yaml opentelemetrycollector.opentelemetry.io/sidecar created root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get opentelemetrycollectors -n opentelemetry NAME MODE VERSION READY AGE IMAGE MANAGEMENT center deployment 0.127.0 1/1 3m3s registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 managed sidecar sidecar 0.127.0 7s managed root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get opentelemetrycollectors -n opentelemetry NAME MODE VERSION READY AGE IMAGE center deployment 0.127.0 1/1 3m8s registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 sidecar sidecar 0.127.0 12s root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 3m31s center-collector-7b7b8b9b97-qwhdr 0/1 Terminating 0 15m sidecar 代理依赖于应用程序启动,因此现在创建后并不会立即启动,需要我们创建一个应用程序并使用这个 sidecar 模式的 collector。

OpenTelemetry部署 建议使用 OpenTelemetry Operator 来部署,因为它可以帮助我们轻松部署和管理 OpenTelemetry 收集器,还可以自动检测应用程序。具体可参考文档https://opentelemetry.io/docs/platforms/kubernetes/operator/一、部署cert-manager因为 Operator 使用了 Admission Webhook 通过 HTTP 回调机制对资源进行校验/修改。Kubernetes 要求 Webhook 服务必须使用 TLS,因此 Operator 需要为其 webhook server 签发证书,所以需要先安装cert-manager。# wget https://github.com/cert-manager/cert-manager/releases/latest/download/cert-manager.yaml # kubectl apply -f cert-manager.yaml root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get -n cert-manager pod NAME READY STATUS RESTARTS AGE cert-manager-7bd494778-gs44k 1/1 Running 0 37s cert-manager-cainjector-76474c8c48-w9r5p 1/1 Running 0 37s cert-manager-webhook-6797c49f67-thvcz 1/1 Running 0 37s root@k8s01:~/helm/opentelemetry/cert-manager# 二、部署Operator在 Kubernetes 上使用 OpenTelemetry,主要就是部署 OpenTelemetry 收集器。# wget https://github.com/open-telemetry/opentelemetry-operator/releases/latest/download/opentelemetry-operator.yaml # kubectl apply -f opentelemetry-operator.yaml # kubectl get pod -n opentelemetry-operator-system NAME READY STATUS RESTARTS AGE opentelemetry-operator-controller-manager-6d94c5db75-cz957 2/2 Running 0 74s # kubectl get crd |grep opentelemetry instrumentations.opentelemetry.io 2025-04-21T09:48:53Z opampbridges.opentelemetry.io 2025-04-21T09:48:54Z opentelemetrycollectors.opentelemetry.io 2025-04-21T09:48:54Z targetallocators.opentelemetry.io 2025-04-21T09:48:54Zroot@k8s01:~/helm/opentelemetry/cert-manager# kubectl apply -f opentelemetry-operator.yaml namespace/opentelemetry-operator-system created customresourcedefinition.apiextensions.k8s.io/instrumentations.opentelemetry.io created customresourcedefinition.apiextensions.k8s.io/opampbridges.opentelemetry.io created customresourcedefinition.apiextensions.k8s.io/opentelemetrycollectors.opentelemetry.io created customresourcedefinition.apiextensions.k8s.io/targetallocators.opentelemetry.io created serviceaccount/opentelemetry-operator-controller-manager created role.rbac.authorization.k8s.io/opentelemetry-operator-leader-election-role created clusterrole.rbac.authorization.k8s.io/opentelemetry-operator-manager-role created clusterrole.rbac.authorization.k8s.io/opentelemetry-operator-metrics-reader created clusterrole.rbac.authorization.k8s.io/opentelemetry-operator-proxy-role created rolebinding.rbac.authorization.k8s.io/opentelemetry-operator-leader-election-rolebinding created clusterrolebinding.rbac.authorization.k8s.io/opentelemetry-operator-manager-rolebinding created clusterrolebinding.rbac.authorization.k8s.io/opentelemetry-operator-proxy-rolebinding created service/opentelemetry-operator-controller-manager-metrics-service created service/opentelemetry-operator-webhook-service created deployment.apps/opentelemetry-operator-controller-manager created Warning: spec.privateKey.rotationPolicy: In cert-manager >= v1.18.0, the default value changed from `Never` to `Always`. certificate.cert-manager.io/opentelemetry-operator-serving-cert created issuer.cert-manager.io/opentelemetry-operator-selfsigned-issuer created mutatingwebhookconfiguration.admissionregistration.k8s.io/opentelemetry-operator-mutating-webhook-configuration created validatingwebhookconfiguration.admissionregistration.k8s.io/opentelemetry-operator-validating-webhook-configuration created root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pods -n opentelemetry-operator-system NAME READY STATUS RESTARTS AGE opentelemetry-operator-controller-manager-f78fc55f7-xtjk2 2/2 Running 0 107s root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get crd |grep opentelemetry instrumentations.opentelemetry.io 2025-06-14T11:30:01Z opampbridges.opentelemetry.io 2025-06-14T11:30:01Z opentelemetrycollectors.opentelemetry.io 2025-06-14T11:30:02Z targetallocators.opentelemetry.io 2025-06-14T11:30:02Z三、部署Collector(中心)接下来我们部署一个精简版的 OpenTelemetry Collector,用于接收 OTLP 格式的 trace 数据,通过 gRPC 或 HTTP 协议接入,经过内存控制与批处理后,打印到日志中以供调试使用。 root@k8s01:~/helm/opentelemetry/cert-manager# cat center-collector.yaml apiVersion: opentelemetry.io/v1beta1 kind: OpenTelemetryCollector # 元数据定义部分 metadata: name: center # Collector 的名称为 center namespace: opentelemetry # 具体的配置内容 spec: image: registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 replicas: 1 # 设置副本数量为1 config: # 定义 Collector 配置 receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs) otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器 protocols: # 启用哪些协议来接收数据 grpc: endpoint: 0.0.0.0:4317 # 启用 gRPC 协议 http: endpoint: 0.0.0.0:4318 # 启用 HTTP 协议 processors: # 处理器,用于处理收集到的数据 batch: {} # 批处理器,用于将数据分批发送,提高效率 exporters: # 导出器,用于将处理后的数据发送到后端系统 debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试) service: # 服务配置部分 pipelines: # 定义处理管道 traces: # 定义 trace 类型的管道 receivers: [otlp] # 接收器为 OTLP processors: [batch] # 使用批处理器 exporters: [debug] # 将数据打印到终端 root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 43s center-collector-7b7b8b9b97-qwhdr 0/1 Terminating 0 12m root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get svc -n opentelemetry NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE center-collector ClusterIP 10.105.241.233 <none> 4317/TCP,4318/TCP 49s center-collector-headless ClusterIP None <none> 4317/TCP,4318/TCP 49s center-collector-monitoring ClusterIP 10.96.61.65 <none> 8888/TCP 49s root@k8s01:~/helm/opentelemetry/cert-manager# 四、部署Collector(代理)我们使用 Sidecar 模式部署 OpenTelemetry 代理。该代理会将应用程序的追踪发送到我们刚刚部署的中心OpenTelemetry 收集器。root@k8s01:~/helm/opentelemetry/cert-manager# cat center-collector.yaml apiVersion: opentelemetry.io/v1beta1 kind: OpenTelemetryCollector # 元数据定义部分 metadata: name: center # Collector 的名称为 center namespace: opentelemetry # 具体的配置内容 spec: image: registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 replicas: 1 # 设置副本数量为1 config: # 定义 Collector 配置 receivers: # 接收器,用于接收遥测数据(如 trace、metrics、logs) otlp: # 配置 OTLP(OpenTelemetry Protocol)接收器 protocols: # 启用哪些协议来接收数据 grpc: endpoint: 0.0.0.0:4317 # 启用 gRPC 协议 http: endpoint: 0.0.0.0:4318 # 启用 HTTP 协议 processors: # 处理器,用于处理收集到的数据 batch: {} # 批处理器,用于将数据分批发送,提高效率 exporters: # 导出器,用于将处理后的数据发送到后端系统 debug: {} # 使用 debug 导出器,将数据打印到终端(通常用于测试或调试) service: # 服务配置部分 pipelines: # 定义处理管道 traces: # 定义 trace 类型的管道 receivers: [otlp] # 接收器为 OTLP processors: [batch] # 使用批处理器 exporters: [debug] # 将数据打印到终端 root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 43s center-collector-7b7b8b9b97-qwhdr 0/1 Terminating 0 12m root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get svc -n opentelemetry NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE center-collector ClusterIP 10.105.241.233 <none> 4317/TCP,4318/TCP 49s center-collector-headless ClusterIP None <none> 4317/TCP,4318/TCP 49s center-collector-monitoring ClusterIP 10.96.61.65 <none> 8888/TCP 49s root@k8s01:~/helm/opentelemetry/cert-manager# vi sidecar-collector.yaml root@k8s01:~/helm/opentelemetry/cert-manager# kubectl apply -f sidecar-collector.yaml opentelemetrycollector.opentelemetry.io/sidecar created root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get opentelemetrycollectors -n opentelemetry NAME MODE VERSION READY AGE IMAGE MANAGEMENT center deployment 0.127.0 1/1 3m3s registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 managed sidecar sidecar 0.127.0 7s managed root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get opentelemetrycollectors -n opentelemetry NAME MODE VERSION READY AGE IMAGE center deployment 0.127.0 1/1 3m8s registry.cn-guangzhou.aliyuncs.com/xingcangku/opentelemetry-collector-0.127.0:0.127.0 sidecar sidecar 0.127.0 12s root@k8s01:~/helm/opentelemetry/cert-manager# kubectl get pod -n opentelemetry NAME READY STATUS RESTARTS AGE center-collector-78f7bbdf45-j798s 1/1 Running 0 3m31s center-collector-7b7b8b9b97-qwhdr 0/1 Terminating 0 15m sidecar 代理依赖于应用程序启动,因此现在创建后并不会立即启动,需要我们创建一个应用程序并使用这个 sidecar 模式的 collector。 -