
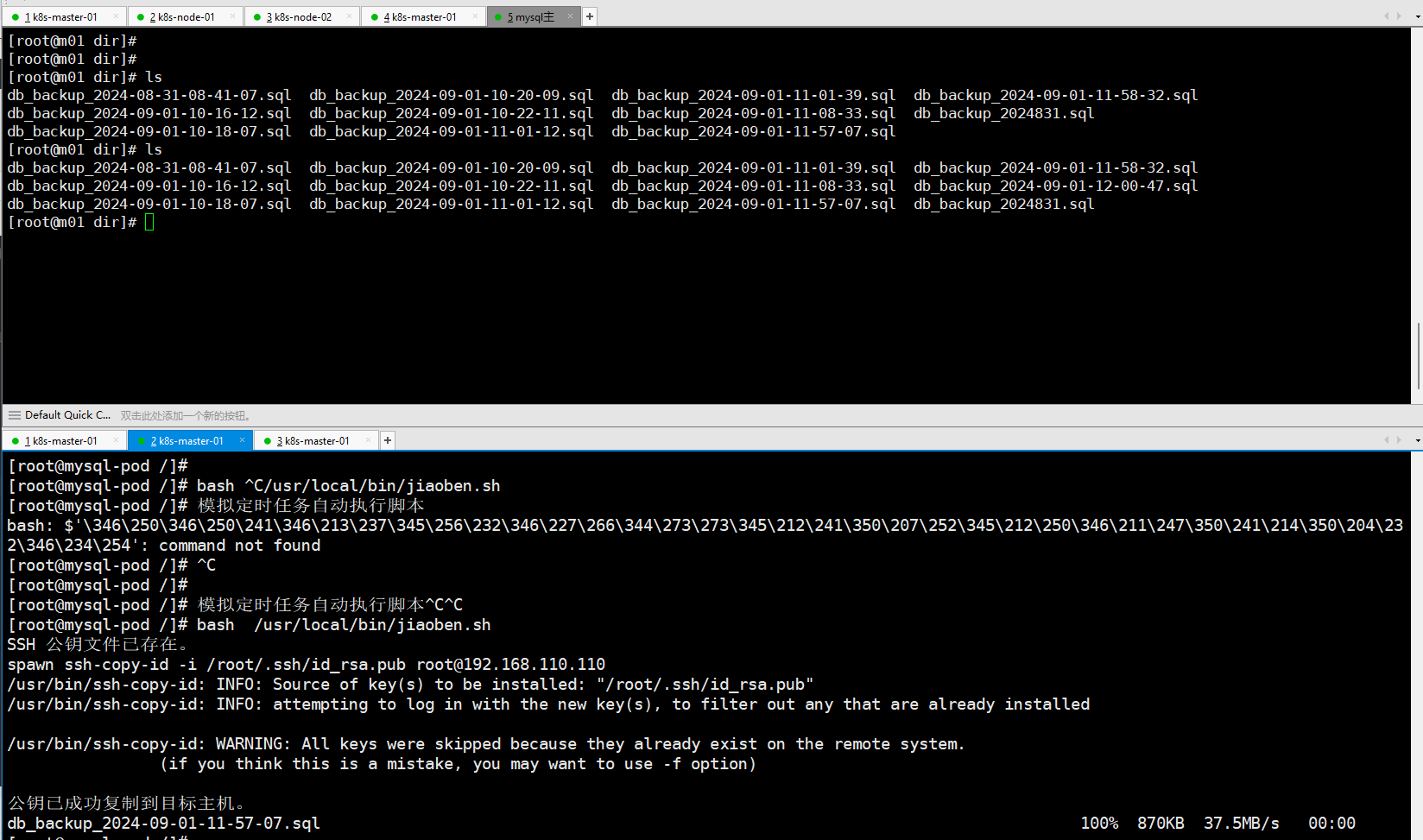

Webhook development: sidecar container

I. Prepare the webhook environment first (covered in an earlier post).

II. Prepare the business image

1. Pull the image

```bash
docker pull centos:7
```

2. Configure the yum repositories

```bash
rm -f /etc/yum.repos.d/CentOS-Base.repo
cat > /etc/yum.repos.d/CentOS-Base.repo <<EOF
[base]
name=CentOS-\$releasever - Base - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/\$releasever/os/\$basearch/
        http://mirrors.aliyuncs.com/centos/\$releasever/os/\$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/\$releasever/os/\$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates
[updates]
name=CentOS-\$releasever - Updates - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/\$releasever/updates/\$basearch/
        http://mirrors.aliyuncs.com/centos/\$releasever/updates/\$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/\$releasever/updates/\$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful
[extras]
name=CentOS-\$releasever - Extras - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/\$releasever/extras/\$basearch/
        http://mirrors.aliyuncs.com/centos/\$releasever/extras/\$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/\$releasever/extras/\$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages
[centosplus]
name=CentOS-\$releasever - Plus - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/\$releasever/centosplus/\$basearch/
        http://mirrors.aliyuncs.com/centos/\$releasever/centosplus/\$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/\$releasever/centosplus/\$basearch/
gpgcheck=1
enabled=0
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users
[contrib]
name=CentOS-\$releasever - Contrib - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/\$releasever/contrib/\$basearch/
        http://mirrors.aliyuncs.com/centos/\$releasever/contrib/\$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/\$releasever/contrib/\$basearch/
gpgcheck=1
enabled=0
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
EOF
```

3. Install the required packages (`openssh-clients` provides `ssh`, `scp`, and `ssh-copy-id`; the backup script also needs `expect`)

```bash
yum install -y cronie openssh-server openssh-clients expect wget
```

4. Install MySQL 5.7

```bash
wget https://dev.mysql.com/get/mysql57-community-release-el7-11.noarch.rpm
rpm -ivh mysql57-community-release-el7-11.noarch.rpm
yum repolist enabled | grep "mysql.*-community.*"
yum install mysql-community-server
```

5. Give the mysql user ownership of the data directory

```bash
chown -R mysql:mysql /var/lib/mysql
chmod -R 755 /var/lib/mysql
```

6. Initialize the database (this has to happen before the first start)

```bash
/usr/sbin/mysqld --initialize --user=mysql
```

7. Start the database in the background

```bash
/usr/sbin/mysqld --user=mysql &
```

8. Look up the temporary password generated during initialization and log in

```bash
grep 'temporary password' /var/log/mysqld.log
mysql -u root -p
```

9. Enter the temporary password at the prompt, then set a new root password

```sql
ALTER USER 'root'@'localhost' IDENTIFIED BY 'newpassword';
```

10. As a test, create an account, a database, and a table

```sql
-- Create user 'axing' and set its password
CREATE USER 'axing'@'localhost' IDENTIFIED BY 'Egon@123';
-- Grant user 'axing' all privileges on all databases
GRANT ALL PRIVILEGES ON *.* TO 'axing'@'localhost' WITH GRANT OPTION;
-- Reload the grant tables so the change takes effect
FLUSH PRIVILEGES;
-- Create the database
CREATE DATABASE my_database;
USE my_database;
-- Create the table
CREATE TABLE my_table (
    id INT AUTO_INCREMENT PRIMARY KEY,
    name VARCHAR(100) NOT NULL,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);
```

III. Prepare the sidecar image

(1) Prepare the database backup script

Parts of the script could still be optimized; I did not polish it further. It runs as written once you adjust the account, password, and IP address.

cat jiaoben.sh

```bash
#!/bin/bash

# Start the MySQL and SSH services
/usr/sbin/mysqld --user=mysql &   # start the MySQL database service
/usr/sbin/sshd                    # start the SSH service

# Configuration variables
MYSQL_HOST="127.0.0.1"            # MySQL host address
MYSQL_USER="root"                 # MySQL user name
MYSQL_PASSWORD="Egon@123"         # MySQL password
BACKUP_DIR="/backup"              # local directory for backup files
REMOTE_HOST="192.168.110.110"     # remote host address
REMOTE_USER="root"                # remote host user name
REMOTE_DIR="/remote/backup/dir"   # remote directory for backup files
host_info="root:1"                # remote user name and password, separated by ':'
target_ip="192.168.110.110"       # target host IP address

# Extract the user name and password from host_info
user=$(echo "$host_info" | awk -F: '{print $1}')
pass=$(echo "$host_info" | awk -F: '{print $2}')

# SSH key paths
key_path="/root/.ssh/id_rsa"          # SSH private key
pub_key_path="/root/.ssh/id_rsa.pub"  # SSH public key

# Generate an SSH key pair if none exists yet
if [ ! -f "$pub_key_path" ]; then
    echo "SSH public key not found, generating a new key pair..."
    ssh-keygen -t rsa -b 4096 -f "$key_path" -N ""
else
    echo "SSH public key already exists."
fi

# Automate ssh-copy-id with expect
expect << EOF
spawn ssh-copy-id -i $pub_key_path $user@$target_ip
expect {
    "yes/no" {send "yes\n"; exp_continue}
    "password:" {send "$pass\n"}
}
expect eof
EOF

# Check the exit status of expect
if [ $? -eq 0 ]; then
    echo "Public key copied to the target host."
else
    echo "Copying the public key failed."
fi

# Create the local backup directory if it does not exist
mkdir -p "$BACKUP_DIR"

# Current time, formatted as YYYY-MM-DD-HH-MM-SS
TIMESTAMP=$(date +"%F-%H-%M-%S")

# Dump all databases to a local file
# (mysqldump reads the user name and password from /root/.my.cnf, created in the Dockerfile)
mysqldump -h "$MYSQL_HOST" --all-databases > "$BACKUP_DIR/db_backup_$TIMESTAMP.sql"

# Create the remote backup directory if it does not exist
ssh "$REMOTE_USER@$REMOTE_HOST" "mkdir -p $REMOTE_DIR"

# Upload the backup to the remote host
scp "$BACKUP_DIR/db_backup_$TIMESTAMP.sql" "$REMOTE_USER@$REMOTE_HOST:$REMOTE_DIR"

# Delete the local backup file to save disk space
rm "$BACKUP_DIR/db_backup_$TIMESTAMP.sql"
```

(2) Prepare the cron schedule file

cat crontab

```
0 2 * * * /usr/local/bin/jiaoben.sh
```

(3) Build the image

To save effort, you can base it directly on the business image built above.

cat dockerfile

```dockerfile
# Use the business image (CentOS-based) as the base image
FROM registry.cn-guangzhou.aliyuncs.com/xingcangku/axingcangku:latest

# Copy the backup script
COPY jiaoben.sh /usr/local/bin/jiaoben.sh
RUN chmod +x /usr/local/bin/jiaoben.sh

# Copy the crontab file
COPY crontab /etc/cron.d/backup-cron
RUN chmod 0644 /etc/cron.d/backup-cron

# Install the schedule as root's crontab
RUN crontab /etc/cron.d/backup-cron

# Create the MySQL client configuration file .my.cnf
RUN echo "[client]" > /root/.my.cnf && \
    echo "user=root" >> /root/.my.cnf && \
    echo "password=Egon@123" >> /root/.my.cnf && \
    chmod 600 /root/.my.cnf

# Run cron in the foreground to keep the container alive
CMD ["crond", "-n"]
```

IV. Prepare webhook.py

This is the Python file that runs inside the webhook pod. It does three things:

1. When it sees a pod labeled `app: mysql`, it injects a sidecar container that periodically backs up the data to a remote host.
2. If a newly created pod has no `environment` label, it adds `environment: production`.
3. If the labels contain `app: test`, it adds the annotation `reloader.stakater.com/auto: "true"`.

```python
# -*- coding: utf-8 -*-
from flask import Flask, request, jsonify
import base64
import json
import ssl
from kubernetes import config

# Create the Flask application
app = Flask(__name__)

# Load the in-cluster Kubernetes configuration
config.load_incluster_config()


@app.route('/mutate', methods=['POST'])
def mutate_pod():
    uid = ""  # stays empty if the request cannot be parsed
    try:
        # Parse the JSON body of the AdmissionReview request
        admission_review = request.get_json()
        uid = admission_review['request']['uid']
        pod = admission_review['request']['object']
        patch = []

        # Create metadata, labels, and annotations if they are missing
        if 'metadata' not in pod:
            patch.append({"op": "add", "path": "/metadata", "value": {}})
        if 'labels' not in pod.get('metadata', {}):
            patch.append({"op": "add", "path": "/metadata/labels", "value": {}})
        if 'annotations' not in pod.get('metadata', {}):
            patch.append({"op": "add", "path": "/metadata/annotations", "value": {}})

        # Read the existing labels and annotations
        labels = pod.get('metadata', {}).get('labels', {})
        annotations = pod.get('metadata', {}).get('annotations', {})

        # Add the 'environment' label if it is missing
        if 'environment' not in labels:
            patch.append({
                "op": "add",
                "path": "/metadata/labels/environment",
                "value": "production"
            })

        # For 'app: test', add the annotation reloader.stakater.com/auto: "true"
        # ('/' inside a JSON Pointer token is escaped as '~1')
        if labels.get('app') == 'test':
            if 'reloader.stakater.com/auto' not in annotations:
                patch.append({
                    "op": "add",
                    "path": "/metadata/annotations/reloader.stakater.com~1auto",
                    "value": "true"
                })

        # For 'app: mysql', append the sidecar container to the pod
        if labels.get('app') == 'mysql':
            container = {
                "name": "sidecar-container",
                "image": "registry.cn-guangzhou.aliyuncs.com/xingcangku/axingcangku:v1.1",
                "ports": [{"containerPort": 8080}]
            }
            patch.append({"op": "add", "path": "/spec/containers/-", "value": container})

        # Build the AdmissionReview response
        admission_response = {
            "apiVersion": "admission.k8s.io/v1",
            "kind": "AdmissionReview",
            "response": {
                "uid": uid,
                "allowed": True,
                "patchType": "JSONPatch",
                # The patch must be Base64-encoded
                "patch": base64.b64encode(json.dumps(patch).encode()).decode()
            }
        }
        return jsonify(admission_response)

    except Exception as e:
        # On any error, reject the request and return the error message
        return jsonify({
            "apiVersion": "admission.k8s.io/v1",
            "kind": "AdmissionReview",
            "response": {
                "uid": uid,
                "allowed": False,
                "status": {"message": str(e)}
            }
        }), 500


if __name__ == '__main__':
    # Create the SSL context and load the certificate and private key
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.load_cert_chain('/certs/tls.crt', '/certs/tls.key')

    # Serve over TLS on port 8080 on all network interfaces
    app.run(host='0.0.0.0', port=8080, ssl_context=context)
```

V. Summary: the sidecar container gives a MySQL business pod a data backup capability, with backups running automatically on a schedule.

VI. Update

You can install Ansible and run the playbook below to deploy everything in one go. Note that the webhook.py inside the image is customized; if you need different behavior, build your own image following the steps above and update the image address in the playbook.

```yaml
---
- name: Deploy Kubernetes Webhook
  hosts: localhost
  connection: local
  become: yes
  tasks:
    # 1. Generate the CA private key
    - name: Generate CA private key
      command: openssl genrsa -out ca.key 2048
      args:
        creates: ca.key

    # 2. Generate the self-signed CA certificate
    - name: Generate self-signed CA certificate
      command: >
        openssl req -x509 -new -nodes -key ca.key
        -subj "/CN=webhook-service.default.svc"
        -days 36500 -out ca.crt
      args:
        creates: ca.crt

    # 3. Create the OpenSSL configuration file
    - name: Create OpenSSL configuration file
      copy:
        dest: webhook-openssl.cnf
        content: |
          [req]
          default_bits = 2048
          prompt = no
          default_md = sha256
          req_extensions = req_ext
          distinguished_name = dn

          [dn]
          C = CN
          ST = Shanghai
          L = Shanghai
          O = egonlin
          OU = egonlin
          CN = webhook-service.default.svc

          [req_ext]
          subjectAltName = @alt_names

          [alt_names]
          DNS.1 = webhook-service
          DNS.2 = webhook-service.default
          DNS.3 = webhook-service.default.svc
          DNS.4 = webhook-service.default.svc.cluster.local

          [req_distinguished_name]
          CN = webhook-service.default.svc

          [v3_req]
          keyUsage = critical, digitalSignature, keyEncipherment
          extendedKeyUsage = serverAuth
          subjectAltName = @alt_names

          [v3_ext]
          authorityKeyIdentifier=keyid,issuer:always
          basicConstraints=CA:FALSE
          keyUsage=keyEncipherment,dataEncipherment
          extendedKeyUsage=serverAuth,clientAuth
          subjectAltName=@alt_names

    # 4. Generate the private key for the webhook service
    - name: Generate webhook service private key
      command: openssl genrsa -out webhook.key 2048
      args:
        creates: webhook.key

    # 5. Generate the CSR from the configuration file
    - name: Generate CSR
      command: openssl req -new -key webhook.key -out webhook.csr -config webhook-openssl.cnf
      args:
        creates: webhook.csr

    # 6. Generate the webhook certificate
    - name: Generate webhook certificate
      command: >
        openssl x509 -req -in webhook.csr -CA ca.crt -CAkey ca.key
        -CAcreateserial -out webhook.crt -days 36500
        -extensions v3_ext -extfile webhook-openssl.cnf
      args:
        creates: webhook.crt

    # 7. Delete the old Kubernetes Secret
    - name: Delete existing Kubernetes Secret
      command: kubectl delete secret webhook-certs --namespace=default
      ignore_errors: true

    # 8. Create the Kubernetes Secret
    - name: Create Kubernetes Secret for webhook certificates
      command: >
        kubectl create secret tls webhook-certs
        --cert=webhook.crt --key=webhook.key
        --namespace=default --dry-run=client -o yaml
      register: secret_yaml

    - name: Apply Kubernetes Secret
      command: kubectl apply -f -
      args:
        stdin: "{{ secret_yaml.stdout }}"

    # 9. Create the webhook Deployment and Service
    - name: Create webhook deployment YAML
      copy:
        dest: webhook-deployment.yaml
        content: |
          apiVersion: apps/v1
          kind: Deployment
          metadata:
            name: webhook-deployment
            namespace: default
          spec:
            replicas: 1
            selector:
              matchLabels:
                app: webhook
            template:
              metadata:
                labels:
                  app: webhook
              spec:
                containers:
                  - name: webhook
                    image: registry.cn-guangzhou.aliyuncs.com/xingcangku/webhook:v1.0
                    command: [ "python", "/app/webhook.py" ]
                    volumeMounts:
                      - name: webhook-certs
                        mountPath: /certs
                        readOnly: true
                volumes:
                  - name: webhook-certs
                    secret:
                      secretName: webhook-certs

    - name: Apply webhook deployment
      command: kubectl apply -f webhook-deployment.yaml

    - name: Create webhook service YAML
      copy:
        dest: webhook-service.yaml
        content: |
          apiVersion: v1
          kind: Service
          metadata:
            name: webhook-service
            namespace: default
          spec:
            ports:
              - port: 443
                # must match the port webhook.py listens on inside the image
                # (the listing in section IV uses 8080)
                targetPort: 443
            selector:
              app: webhook

    - name: Apply webhook service
      command: kubectl apply -f webhook-service.yaml

    # 10. Produce the base64-encoded CA certificate
    - name: Create base64 encoded CA certificate
      shell: base64 -w 0 ca.crt > ca.crt.base64
      args:
        creates: ca.crt.base64

    # 11. Read the base64 content and generate the MutatingWebhookConfiguration YAML
    - name: Read CA base64 content
      slurp:
        src: ca.crt.base64
      register: ca_base64

    - name: Generate MutatingWebhookConfiguration YAML
      copy:
        dest: m-w-c.yaml
        content: |
          apiVersion: admissionregistration.k8s.io/v1
          kind: MutatingWebhookConfiguration
          metadata:
            name: example-mutating-webhook
          webhooks:
            - name: example.webhook.com
              clientConfig:
                service:
                  name: webhook-service
                  namespace: default
                  path: "/mutate"
                caBundle: "{{ ca_base64.content | b64decode }}"
              rules:
                - operations: ["CREATE"]
                  apiGroups: [""]
                  apiVersions: ["v1"]
                  resources: ["pods"]
              admissionReviewVersions: ["v1"]
              sideEffects: None

    - name: Apply MutatingWebhookConfiguration
      command: kubectl apply -f m-w-c.yaml
```
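The patch-building rules in webhook.py can be checked without a cluster or Flask by pulling them into a pure function. The following is a minimal sketch under that assumption, not the code shipped in the image: `build_patch`, `encode_patch`, and `escape_pointer` are hypothetical helper names, and the sample pods are made up for illustration. It also shows why the annotation path contains `~1`: JSON Pointer (RFC 6901) requires `/` inside a key to be escaped.

```python
import base64
import json

# Sidecar image name taken from the webhook.py listing.
SIDECAR_IMAGE = "registry.cn-guangzhou.aliyuncs.com/xingcangku/axingcangku:v1.1"
RELOADER_KEY = "reloader.stakater.com/auto"


def escape_pointer(token: str) -> str:
    """Escape one JSON Pointer token (RFC 6901): '~' becomes '~0', '/' becomes '~1'."""
    return token.replace("~", "~0").replace("/", "~1")


def build_patch(pod: dict) -> list:
    """Return the JSON Patch operations for a pod, following the same rules as webhook.py."""
    patch = []
    metadata = pod.get("metadata", {})

    # Create the containers for labels/annotations before adding keys into them.
    if "metadata" not in pod:
        patch.append({"op": "add", "path": "/metadata", "value": {}})
    if "labels" not in metadata:
        patch.append({"op": "add", "path": "/metadata/labels", "value": {}})
    if "annotations" not in metadata:
        patch.append({"op": "add", "path": "/metadata/annotations", "value": {}})

    labels = metadata.get("labels", {})
    annotations = metadata.get("annotations", {})

    if "environment" not in labels:
        patch.append({"op": "add", "path": "/metadata/labels/environment",
                      "value": "production"})

    if labels.get("app") == "test" and RELOADER_KEY not in annotations:
        patch.append({"op": "add",
                      "path": "/metadata/annotations/" + escape_pointer(RELOADER_KEY),
                      "value": "true"})

    if labels.get("app") == "mysql":
        # '-' as the last token appends to the end of the containers array.
        patch.append({"op": "add", "path": "/spec/containers/-",
                      "value": {"name": "sidecar-container", "image": SIDECAR_IMAGE}})
    return patch


def encode_patch(patch: list) -> str:
    """Base64-encode the patch the way an AdmissionReview response expects it."""
    return base64.b64encode(json.dumps(patch).encode()).decode()


if __name__ == "__main__":
    mysql_pod = {"metadata": {"labels": {"app": "mysql"}},
                 "spec": {"containers": [{"name": "mysql"}]}}
    for op in build_patch(mysql_pod):
        print(op["op"], op["path"])
```

Keeping the rules in a function like this makes it cheap to add a new label rule and confirm the resulting paths before rebuilding the webhook image.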


k8s: rolling release, blue-green release, canary release, gray release

Deploy the production version first

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: production
  name: production
spec:
  replicas: 1   # a single replica keeps testing simple
  selector:
    matchLabels:
      app: production
  strategy: {}
  template:
    metadata:
      labels:
        app: production
    spec:
      containers:
      - image: nginx:1.18
        name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: production
  name: production
spec:
  ports:
  - port: 9999
    protocol: TCP
    targetPort: 80
  selector:
    app: production
  type: ClusterIP
status:
  loadBalancer: {}
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production
spec:
  ingressClassName: nginx
  rules:
  - host: egon.ingress.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production
            port:
              number: 9999
```

Rolling update

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: 'true'        # Canary must be enabled before any canary rule applies
    nginx.ingress.kubernetes.io/canary-weight: '30'   # send 30% of traffic to the canary version
spec:
  ingressClassName: nginx
  rules:
  - host: egon.ingress.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 9999
```

Canary

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: 'true'        # Canary must be enabled before any canary rule applies
    nginx.ingress.kubernetes.io/canary-by-header: canary
    nginx.ingress.kubernetes.io/canary-by-header-value: user-value
    nginx.ingress.kubernetes.io/canary-weight: '30'   # send 30% of traffic to the canary version
spec:
  ingressClassName: nginx
  rules:
  - host: egon.ingress.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 9999
```

Gray release

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: 'true'        # Canary must be enabled before any canary rule applies
    nginx.ingress.kubernetes.io/canary-by-cookie: 'users_from_Shanghai'
    nginx.ingress.kubernetes.io/canary-weight: '30'   # send 30% of traffic to the canary version
spec:
  ingressClassName: nginx
  rules:
  - host: egon.ingress.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 9999
```
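When several canary annotations are set on one Ingress, as in the header and cookie examples above, ingress-nginx documents a fixed precedence: `canary-by-header`, then `canary-by-cookie`, then `canary-weight`. The sketch below is a simplified Python model of that decision, meant only for reasoning about which requests reach the canary; it is not the controller's implementation, and `routes_to_canary` and its parameters are hypothetical names.

```python
import random

PREFIX = "nginx.ingress.kubernetes.io/"


def routes_to_canary(annotations, headers, cookies, rng=random.random):
    """Model whether one request goes to the canary backend.

    annotations: the canary Ingress annotations (string values, as in YAML)
    headers, cookies: request headers and cookies as plain dicts
    rng: returns a float in [0, 1); injectable so the weight rule is testable
    """
    if annotations.get(PREFIX + "canary") != "true":
        return False

    # 1. Header rule (highest precedence). HTTP header names are case-insensitive.
    header_name = annotations.get(PREFIX + "canary-by-header")
    lowered = {k.lower(): v for k, v in headers.items()}
    if header_name and header_name.lower() in lowered:
        value = lowered[header_name.lower()]
        expected = annotations.get(PREFIX + "canary-by-header-value")
        if expected is not None:
            # With a custom value, only an exact match routes to the canary;
            # any other value is ignored and the next rules decide.
            if value == expected:
                return True
        elif value == "always":
            return True
        elif value == "never":
            return False

    # 2. Cookie rule: 'always' / 'never' decide, other values are ignored.
    cookie_name = annotations.get(PREFIX + "canary-by-cookie")
    if cookie_name and cookie_name in cookies:
        if cookies[cookie_name] == "always":
            return True
        if cookies[cookie_name] == "never":
            return False

    # 3. Weight rule: a percentage of the remaining traffic.
    weight = int(annotations.get(PREFIX + "canary-weight", "0"))
    return rng() * 100 < weight


if __name__ == "__main__":
    header_canary = {
        PREFIX + "canary": "true",
        PREFIX + "canary-by-header": "canary",
        PREFIX + "canary-by-header-value": "user-value",
        PREFIX + "canary-weight": "30",
    }
    print(routes_to_canary(header_canary, {"canary": "user-value"}, {}))
    hits = sum(routes_to_canary(header_canary, {}, {}) for _ in range(10000))
    print("share without the header:", hits / 10000)
```

One consequence worth noticing in the model: because the header example also sets `canary-weight: '30'`, requests without the matching header still have a 30% chance of reaching the canary.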


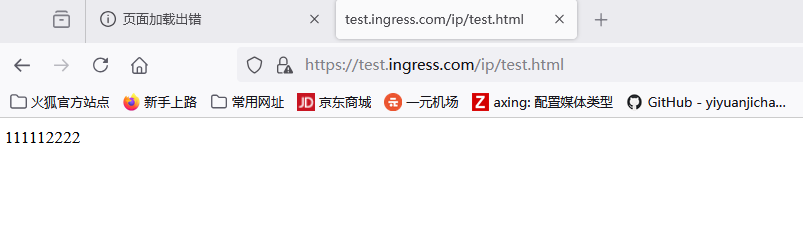

Deploying ingress on k8s

I. Background: there are three ways to deploy ingress (the difference lies in which Kubernetes controller resource manages the ingress pods)

Depending on whether a Service has to be created for the ingress pods, the options fall into two groups:

1. A Service is required (non-hostNetwork mode)
   - Deployment for the ingress pods (pod network is not hostNetwork) + Service of type LoadBalancer
   - Deployment for the ingress pods (pod network is not hostNetwork) + Service of type NodePort
2. No Service is required (hostNetwork mode); the forwarding path is shorter and more efficient
   - DaemonSet for the ingress pods (pod network is hostNetwork)

II. Start with the Deployment (pod network is not hostNetwork) + Service of type NodePort option

Deploy ingress first.

cat deploy.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-nginx-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
```
kind: ClusterRole name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- apiVersion: v1 data: allow-snippet-annotations: "false" kind: ConfigMap metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller namespace: ingress-nginx --- apiVersion: v1 kind: Service metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller namespace: ingress-nginx spec: ipFamilies: - IPv4 ipFamilyPolicy: SingleStack ports: - appProtocol: http name: http port: 80 protocol: TCP targetPort: http - appProtocol: https name: https port: 443 protocol: TCP targetPort: https selector: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx type: NodePort --- apiVersion: v1 kind: Service metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller-admission namespace: ingress-nginx spec: ports: - appProtocol: https name: https-webhook port: 443 targetPort: webhook selector: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx type: ClusterIP --- apiVersion: apps/v1 kind: Deployment metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller namespace: ingress-nginx spec: 
minReadySeconds: 0 revisionHistoryLimit: 10 selector: matchLabels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx strategy: rollingUpdate: maxUnavailable: 1 type: RollingUpdate template: metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 spec: containers: - args: - /nginx-ingress-controller - --election-id=ingress-nginx-leader - --controller-class=k8s.io/ingress-nginx - --ingress-class=nginx - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key - --enable-metrics=false env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: LD_PRELOAD value: /usr/local/lib/libmimalloc.so image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/ingress-controller:v1.10.1 imagePullPolicy: IfNotPresent lifecycle: preStop: exec: command: - /wait-shutdown livenessProbe: failureThreshold: 5 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 name: controller ports: - containerPort: 80 name: http protocol: TCP - containerPort: 443 name: https protocol: TCP - containerPort: 8443 name: webhook protocol: TCP readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 resources: requests: cpu: 100m memory: 90Mi securityContext: allowPrivilegeEscalation: false capabilities: add: - NET_BIND_SERVICE drop: - ALL readOnlyRootFilesystem: false runAsNonRoot: true runAsUser: 101 seccompProfile: type: RuntimeDefault volumeMounts: - mountPath: 
/usr/local/certificates/ name: webhook-cert readOnly: true dnsPolicy: ClusterFirst nodeSelector: kubernetes.io/os: linux serviceAccountName: ingress-nginx terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: ingress-nginx-admission --- apiVersion: batch/v1 kind: Job metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-create namespace: ingress-nginx spec: template: metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-create spec: containers: - args: - create - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc - --namespace=$(POD_NAMESPACE) - --secret-name=ingress-nginx-admission env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/kube-webhook-certgen:v1.4.1 imagePullPolicy: IfNotPresent name: create securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true runAsNonRoot: true runAsUser: 65532 seccompProfile: type: RuntimeDefault nodeSelector: kubernetes.io/os: linux restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission --- apiVersion: batch/v1 kind: Job metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-patch namespace: ingress-nginx spec: template: metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx 
app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-patch spec: containers: - args: - patch - --webhook-name=ingress-nginx-admission - --namespace=$(POD_NAMESPACE) - --patch-mutating=false - --secret-name=ingress-nginx-admission - --patch-failure-policy=Fail env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/kube-webhook-certgen:v1.4.1 imagePullPolicy: IfNotPresent name: patch securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true runAsNonRoot: true runAsUser: 65532 seccompProfile: type: RuntimeDefault nodeSelector: kubernetes.io/os: linux restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission --- apiVersion: networking.k8s.io/v1 kind: IngressClass metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: nginx spec: controller: k8s.io/ingress-nginx --- apiVersion: admissionregistration.k8s.io/v1 kind: ValidatingWebhookConfiguration metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission webhooks: - admissionReviewVersions: - v1 clientConfig: service: name: ingress-nginx-controller-admission namespace: ingress-nginx path: /networking/v1/ingresses failurePolicy: Fail matchPolicy: Equivalent name: validate.nginx.ingress.kubernetes.io rules: - apiGroups: - networking.k8s.io apiVersions: - v1 operations: - CREATE - UPDATE resources: - ingresses sideEffects: None {/collapse-item}{collapse-item label="kubectl apply -f deploy.yaml"}
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
[root@k8s-master-01 test2]# grep -i image deploy.yaml
image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/ingress-controller:v1.10.1
imagePullPolicy: IfNotPresent
image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/kube-webhook-certgen:v1.4.1
imagePullPolicy: IfNotPresent
image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/kube-webhook-certgen:v1.4.1
imagePullPolicy: IfNotPresent
{/collapse-item}{/collapse}
Check the deployed ingress
[root@k8s-master-01 test2]# kubectl -n ingress-nginx get pods
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-2pz6l        0/1     Completed   0          90s
ingress-nginx-admission-patch-m7zkg         0/1     Completed   0          90s
ingress-nginx-controller-8698cc7676-2lth6   1/1     Running     0          90s
[root@k8s-master-01 test2]# kubectl -n ingress-nginx get deployments.apps
NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
ingress-nginx-controller   1/1     1            1           2m7s
[root@k8s-master-01 test2]# kubectl -n ingress-nginx get svc
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.111.188.71   <none>        80:32593/TCP,443:32318/TCP   4m46s
ingress-nginx-controller-admission   ClusterIP   10.108.110.90   <none>        443/TCP
Create the microservices and their Services
{collapse}{collapse-item label="cat gowebhost-svc" open}
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: gowebhost
  name: gowebhost
spec:
  replicas: 2
  selector:
    matchLabels:
      app: gowebhost
  strategy: {}
  template:
    metadata:
      labels:
        app: gowebhost
    spec:
      containers:
      - image: nginx:1.18
        name: nginx
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: gowebhost
  name: gowebhost
spec:
  ports:
  - port: 9999
    protocol: TCP
    targetPort: 80
  selector:
    app: gowebhost
  type: ClusterIP
status:
  loadBalancer: {}
{/collapse-item}{collapse-item label="cat gowebip-svc.yaml"}
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: gowebip
  name: gowebip
spec:
  replicas: 2
  selector:
    matchLabels:
      app: gowebip
  strategy: {}
  template:
    metadata:
      labels:
        app: gowebip
    spec:
      containers:
      - image: nginx:1.18
        name: nginx
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: gowebip
  name: gowebip
spec:
  ports:
  - port: 8888
    protocol: TCP
    targetPort: 80
  selector:
    app: gowebip
  type: ClusterIP
status:
  loadBalancer: {}
{/collapse-item}{/collapse}
[root@k8s-master-01 test2]# kubectl apply -f gowebip-svc.yaml
deployment.apps/gowebip created
service/gowebip created
[root@k8s-master-01 test2]# kubectl apply -f gowebhost-svc.yaml
deployment.apps/gowebhost created
service/gowebhost created
[root@k8s-master-01 test2]# kubectl get pods
NAME                         READY   STATUS    RESTARTS      AGE
busybox1                     1/1     Running   3 (20m ago)   7h56m
busybox2                     1/1     Running   3 (20m ago)   7h56m
gowebhost-5d6cf777b6-f2h9f   1/1     Running   0             10s
gowebhost-5d6cf777b6-trdt6   1/1     Running   0             10s
gowebip-f647fbd59-25dnc      1/1     Running   0             14s
gowebip-f647fbd59-4xp9r      1/1     Running   0             14s
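Before wiring these Services into an Ingress, it can help to confirm that each one actually selected its two pods. The snippet below is only a sketch: `endpoint_count` is a made-up helper name, and it assumes `kubectl` is already pointed at this cluster and that the Services live in the current namespace.

```shell
# Hypothetical helper (not from the original post): for each Service name,
# print "<service> <number of ready endpoint IPs>".
endpoint_count() {
  for svc in "$@"; do
    # Space-separated pod IPs behind the Service (empty if nothing matched)
    ips=$(kubectl get endpoints "$svc" -o jsonpath='{.subsets[*].addresses[*].ip}')
    count=$(echo "$ips" | wc -w | tr -d ' ')
    echo "$svc $count"
  done
}

# Usage (needs cluster access):
#   endpoint_count gowebhost gowebip
```

With `replicas: 2` in both Deployments, each Service should report 2 once the pods are Running. A count of 0 usually points to a selector or label mismatch.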
Create the Ingress rule
{collapse}{collapse-item label="cat ingress-test1.yaml" open}
apiVersion: networking.k8s.io/v1 # kubectl explain ingress.apiVersion
kind: Ingress
metadata:
  name: ingress-test
  namespace: default
  annotations:
    #kubernetes.io/ingress.class: "nginx"
    # Enable use-regex so that paths are matched as regular expressions
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  ingressClassName: nginx
  rules:
  # Host name
  - host: test.ingress.com
    http:
      paths:
      # Different paths are forwarded to different Services and ports
      - path: /ip
        pathType: Prefix
        backend:
          service:
            name: gowebip
            port:
              number: 8888
      - path: /host
        pathType: Prefix
        backend:
          service:
            name: gowebhost
            port:
              number: 9999
{/collapse-item}{/collapse}
[root@k8s-master-01 test2]# kubectl get ingress
No resources found in default namespace.
[root@k8s-master-01 test2]# kubectl apply -f ingress-test1.yaml
ingress.networking.k8s.io/ingress-test created
[root@k8s-master-01 test2]# kubectl get ingress
NAME           CLASS   HOSTS              ADDRESS   PORTS   AGE
ingress-test   nginx   test.ingress.com             80      2s
[root@k8s-master-01 test2]# kubectl get ingress -w
NAME           CLASS   HOSTS              ADDRESS   PORTS   AGE
ingress-test   nginx   test.ingress.com             80      6s
^C[root@k8s-master-01 test2]# ^C
[root@k8s-master-01 test2]# kubectl get ingress
NAME           CLASS   HOSTS              ADDRESS   PORTS   AGE
ingress-test   nginx   test.ingress.com             80      44s
[root@k8s-master-01 test2]# kubectl get ingress -w
NAME           CLASS   HOSTS              ADDRESS           PORTS   AGE
ingress-test   nginx   test.ingress.com                     80      46s
ingress-test   nginx   test.ingress.com   192.168.110.213   80      47s
Check
[root@k8s-master-01 test2]# curl 10.244.2.144
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
body { width: 35em; margin: 0 auto; font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and working. Further configuration is required.</p>
<p>For online documentation and support please refer to <a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at <a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
[root@k8s-master-01 test2]# kubectl -n ingress-nginx get svc
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.111.188.71   <none>        80:32593/TCP,443:32318/TCP   118m
ingress-nginx-controller-admission   ClusterIP   10.108.110.90   <none>        443/TCP                      118m
[root@k8s-master-01 test2]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.110.97:32318 rr
  -> 10.244.1.188:443             Masq    1      0          0
TCP  192.168.110.97:32593 rr
  -> 10.244.1.188:80              Masq    1      0          1
TCP  10.96.0.1:443 rr
  -> 192.168.110.97:6443          Masq    1      2          0
TCP  10.96.0.10:53 rr
  -> 10.244.0.28:53               Masq    1      0          0
  -> 10.244.1.171:53              Masq    1      0          0
TCP  10.96.0.10:9153 rr
  -> 10.244.0.28:9153             Masq    1      0          0
  -> 10.244.1.171:9153            Masq    1      0          0
TCP  10.98.113.34:443 rr
  -> 10.244.2.132:10250           Masq    1      0          0
TCP  10.108.110.90:443 rr
  -> 10.244.1.188:8443            Masq    1      0          0
TCP  10.110.213.242:9999 rr
  -> 10.244.1.190:80              Masq    1      0          0
  -> 10.244.2.144:80              Masq    1      0          0
TCP  10.110.238.126:8888 rr
  -> 10.244.1.189:80              Masq    1      0          0
  -> 10.244.2.143:80              Masq    1      0          0
TCP  10.111.188.71:80 rr
  -> 10.244.1.188:80              Masq    1      0          0
TCP  10.111.188.71:443 rr
  -> 10.244.1.188:443             Masq    1      0          0
TCP  10.244.0.0:32318 rr
  -> 10.244.1.188:443             Masq    1      0          0
TCP  10.244.0.0:32593 rr
  -> 10.244.1.188:80              Masq    1      0          0
TCP  10.244.0.1:32318 rr
  -> 10.244.1.188:443             Masq    1      0          0
TCP  10.244.0.1:32593 rr
  -> 10.244.1.188:80              Masq    1      0          0
UDP  10.96.0.10:53 rr
  -> 10.244.0.28:53               Masq    1      0          0
  -> 10.244.1.171:53              Masq    1      0          0
There is only one controller pod here; you can edit the YAML file to scale it to 3 replicas.
[root@k8s-master-01 test2]# kubectl -n ingress-nginx get pods -o wide
NAME                                        READY   STATUS      RESTARTS   AGE    IP             NODE          NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-2pz6l        0/1     Completed   0          126m   10.244.1.187   k8s-node-01   <none>           <none>
ingress-nginx-admission-patch-m7zkg         0/1     Completed   0          126m   10.244.2.142   k8s-node-02   <none>           <none>
ingress-nginx-controller-8698cc7676-2lth6   1/1     Running     0          126m   10.244.1.188   k8s-node-01   <none>
Add content to the microservices, then test with curl
[root@k8s-master-01 test2]# kubectl exec -it gowebip-f647fbd59-4xp9r -- /bin/sh -c "mkdir -p /usr/share/nginx/html/ip && echo 111112222 > /usr/share/nginx/html/ip/test.html"
[root@k8s-master-01 test2]# kubectl exec -it gowebip-f647fbd59-25dnc -- /bin/sh -c "mkdir -p /usr/share/nginx/html/ip && echo 11111 > /usr/share/nginx/html/ip/test.html"
[root@k8s-master-01 test2]# curl 10.244.2.143/ip/test.html
11111
Access from outside the cluster
Edit the hosts file first (on Windows it is under C:\Windows\System32\drivers\etc) and add this entry:
192.168.110.97 test.ingress.com
C:\Users\MIKU>curl http://test.ingress.com:32593/ip/test.html
11111
C:\Users\MIKU>curl http://test.ingress.com:32593/ip/test.html
11111
C:\Users\MIKU>curl http://test.ingress.com:32593/ip/test.html
111112222222
C:\Users\MIKU>curl http://test.ingress.com:32593/ip/test.html
111112222222
{lamp/}{lamp/}
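The two different bodies in the curl output above come from the two gowebip replicas behind the Ingress. If you would rather not edit a hosts file, a rough alternative is to send the `Host` header yourself and tally the responses. This is a sketch only: `probe` is a hypothetical helper name, and the node IP and NodePort are the ones from this lab.

```shell
# Hypothetical helper (not from the original post): request the same URL
# N times with the Ingress host name set, then count the distinct bodies.
probe() {
  url=$1
  n=${2:-10}
  i=0
  while [ "$i" -lt "$n" ]; do
    # -s: silent; -H: set the Host header so the Ingress rule matches
    curl -s -H "Host: test.ingress.com" "$url"
    i=$((i + 1))
  done | sort | uniq -c
}

# Usage (from any machine that can reach the node):
#   probe http://192.168.110.97:32593/ip/test.html 10
```

Each output line is a count followed by a response body, so two lines with similar counts suggest both replicas are receiving traffic.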
No Service required (hostNetwork mode): the forwarding path is shorter and more efficient
Here a DaemonSet deploys the ingress pods, and the pod network is the host network.
If you ran the previous experiment, first delete the resources that were created from deploy.yaml:
kubectl delete -f deploy.yaml
{collapse}{collapse-item label="cat daemonset" open}apiVersion: v1 kind: Namespace metadata: labels: app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx name: ingress-nginx --- apiVersion: v1 automountServiceAccountToken: true kind: ServiceAccount metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx namespace: ingress-nginx --- apiVersion: v1 kind: ServiceAccount metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission namespace: ingress-nginx --- apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx namespace: ingress-nginx rules: - apiGroups: - "" resources: - namespaces verbs: - get - apiGroups: - "" resources: - configmaps - pods - secrets - endpoints verbs: - get - list - watch - apiGroups: - "" resources: - services verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch - apiGroups: - coordination.k8s.io resourceNames: - ingress-nginx-leader resources: - leases verbs: - get - update - apiGroups: - coordination.k8s.io resources: - leases verbs: - create - apiGroups: - "" 
resources: - events verbs: - create - patch - apiGroups: - discovery.k8s.io resources: - endpointslices verbs: - list - watch - get --- apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission namespace: ingress-nginx rules: - apiGroups: - "" resources: - secrets verbs: - get - create --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: labels: app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx rules: - apiGroups: - "" resources: - configmaps - endpoints - nodes - pods - secrets - namespaces verbs: - list - watch - apiGroups: - coordination.k8s.io resources: - leases verbs: - list - watch - apiGroups: - "" resources: - nodes verbs: - get - apiGroups: - "" resources: - services verbs: - get - list - watch - apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch - apiGroups: - "" resources: - events verbs: - create - patch - apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch - apiGroups: - discovery.k8s.io resources: - endpointslices verbs: - list - watch - get --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission rules: - apiGroups: - admissionregistration.k8s.io resources: - validatingwebhookconfigurations verbs: - get - update --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding 
metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: labels: app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- apiVersion: v1 data: allow-snippet-annotations: "false" kind: ConfigMap metadata: labels: app.kubernetes.io/component: controller 
app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller namespace: ingress-nginx --- apiVersion: v1 kind: Service metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller-admission namespace: ingress-nginx spec: ports: - appProtocol: https name: https-webhook port: 443 targetPort: webhook selector: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx type: ClusterIP --- apiVersion: apps/v1 kind: DaemonSet metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-controller namespace: ingress-nginx spec: minReadySeconds: 0 revisionHistoryLimit: 10 selector: matchLabels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx template: metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 spec: hostNetwork: true containers: - args: - /nginx-ingress-controller - --election-id=ingress-nginx-leader - --controller-class=k8s.io/ingress-nginx - --ingress-class=nginx - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key - --enable-metrics=false env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: 
fieldPath: metadata.namespace - name: LD_PRELOAD value: /usr/local/lib/libmimalloc.so image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/ingress-controller:v1.10.1 imagePullPolicy: IfNotPresent lifecycle: preStop: exec: command: - /wait-shutdown livenessProbe: failureThreshold: 5 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 name: controller ports: - containerPort: 80 name: http protocol: TCP - containerPort: 443 name: https protocol: TCP - containerPort: 8443 name: webhook protocol: TCP readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 resources: requests: cpu: 100m memory: 90Mi securityContext: allowPrivilegeEscalation: false capabilities: add: - NET_BIND_SERVICE drop: - ALL readOnlyRootFilesystem: false runAsNonRoot: true runAsUser: 101 seccompProfile: type: RuntimeDefault volumeMounts: - mountPath: /usr/local/certificates/ name: webhook-cert readOnly: true dnsPolicy: ClusterFirst nodeSelector: kubernetes.io/os: linux serviceAccountName: ingress-nginx terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: ingress-nginx-admission --- apiVersion: batch/v1 kind: Job metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-create namespace: ingress-nginx spec: template: metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-create spec: containers: - args: - create - 
--host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc - --namespace=$(POD_NAMESPACE) - --secret-name=ingress-nginx-admission env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/kube-webhook-certgen:v1.4.1 imagePullPolicy: IfNotPresent name: create securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true runAsNonRoot: true runAsUser: 65532 seccompProfile: type: RuntimeDefault nodeSelector: kubernetes.io/os: linux restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission --- apiVersion: batch/v1 kind: Job metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-patch namespace: ingress-nginx spec: template: metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.10.1 name: ingress-nginx-admission-patch spec: containers: - args: - patch - --webhook-name=ingress-nginx-admission - --namespace=$(POD_NAMESPACE) - --patch-mutating=false - --secret-name=ingress-nginx-admission - --patch-failure-policy=Fail env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace image: registry.cn-qingdao.aliyuncs.com/egon-k8s-test/kube-webhook-certgen:v1.4.1 imagePullPolicy: IfNotPresent name: patch securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true runAsNonRoot: true runAsUser: 65532 seccompProfile: type: RuntimeDefault nodeSelector: kubernetes.io/os: linux restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission --- apiVersion: networking.k8s.io/v1 kind: IngressClass metadata: 
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.10.1
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None
{/collapse}

kubectl apply -f daemonset.yaml

Deploy the Ingress rules so that the site can be reached over SSL:

cat ingress-test1-ssl.yaml

apiVersion: networking.k8s.io/v1 # kubectl explain ingress.apiVersion
kind: Ingress
metadata:
  name: ingress-test
  namespace: default
  annotations:
    #kubernetes.io/ingress.class: "nginx"
    # Turn on use-regex to enable regular-expression matching for paths
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  tls:
  - hosts:
    - test.ingress.com
    secretName: ingress-tls
  ingressClassName: nginx
  rules:
  # Define the domain name
  - host: test.ingress.com
    http:
      paths:
      # Different paths are forwarded to different ports
      - path: /ip
        pathType: Prefix
        backend:
          service:
            name: gowebip
            port:
              number: 8888
      - path: /host
        pathType: Prefix
        backend:
          service:
            name: gowebhost
            port:
              number: 9999

# You can delete whatever is left over from the earlier deployment
kubectl delete -f ingress-test.yaml

8. Check the ports

[root@k8s-master-01 test2]# netstat -an |grep 80
tcp        0      0 192.168.110.97:2380     0.0.0.0:*               LISTEN
tcp        0      0 10.244.0.1:48756        10.244.0.29:8080        TIME_WAIT
tcp        0      0 127.0.0.1:36480         127.0.0.1:2379          ESTABLISHED
tcp        0      0 127.0.0.1:2379          127.0.0.1:36380         ESTABLISHED
tcp        0      0 127.0.0.1:2379          127.0.0.1:36480         ESTABLISHED
tcp        0      0 127.0.0.1:36380         127.0.0.1:2379          ESTABLISHED
tcp        0      0 10.244.0.1:50044        10.244.0.29:8080        TIME_WAIT
unix  2      [ ACC ]     STREAM     LISTENING     24356    /run/containerd/s/c259f4f33d1f76cfc9d27ea0ff86080e5d837adbeb3f2836dd63df79a862f5c3
unix  2      [ ACC ]     STREAM     LISTENING     24360    /run/containerd/s/0f8e770aec32fad8b31af9ccee9b8e7875778093b9418014071d704e81f5e24f
unix  3      [ ]         STREAM     CONNECTED     25883    /run/containerd/s/c259f4f33d1f76cfc9d27ea0ff86080e5d837adbeb3f2836dd63df79a862f5c3
unix  3      [ ]         STREAM     CONNECTED     25865    /run/containerd/s/0f8e770aec32fad8b31af9ccee9b8e7875778093b9418014071d704e81f5e24f
unix  3      [ ]         STREAM     CONNECTED     28039    /run/containerd/containerd.sock.ttrpc
unix  3      [ ]         STREAM     CONNECTED     24802
unix  3      [ ]         STREAM     CONNECTED     28038
unix  3      [ ]         STREAM     CONNECTED     28032
unix  3      [ ]         STREAM     CONNECTED     23411    /run/containerd/s/0f8e770aec32fad8b31af9ccee9b8e7875778093b9418014071d704e81f5e24f
unix  3      [ ]         STREAM     CONNECTED     23407    /run/containerd/s/c259f4f33d1f76cfc9d27ea0ff86080e5d837adbeb3f2836dd63df79a862f5c3
unix  3      [ ]         STREAM     CONNECTED     22680    /run/systemd/journal/stdout
unix  3      [ ]         STREAM     CONNECTED     28064    /run/containerd/s/f7d5f17994654e129e8b4fee26256945ccd2a09eb22254e4cdd5bcea83781192
unix  3      [ ]         STREAM     CONNECTED     25809

[root@k8s-master-01 test2]# kubectl -n ingress-nginx get pods -o wide
NAME                             READY   STATUS        RESTARTS   AGE    IP               NODE            NOMINATED NODE   READINESS GATES
ingress-nginx-controller-78pdk   0/1     Terminating   0          5m9s   192.168.110.97   k8s-master-01   <none>           <none>

[root@k8s-master-01 test2]# kubectl -n ingress-nginx get pods -o wide
NAME                                   READY   STATUS      RESTARTS   AGE   IP                NODE            NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-ttrj5   0/1     Completed   0          9s    10.244.1.199      k8s-node-01     <none>           <none>
ingress-nginx-admission-patch-bgrp8    0/1     Completed   1          9s    10.244.1.200      k8s-node-01     <none>           <none>
ingress-nginx-controller-2h2dt         0/1     Running     0          9s    192.168.110.2     k8s-node-02     <none>           <none>
ingress-nginx-controller-cjwtb         0/1     Running     0          9s    192.168.110.213   k8s-node-01     <none>           <none>
ingress-nginx-controller-jxwkf         0/1     Running     0          9s    192.168.110.97    k8s-master-01   <none>           <none>

[root@k8s-master-01 test2]# kubectl -n ingress-nginx get pods -o wide
NAME                                   READY   STATUS      RESTARTS   AGE   IP                NODE            NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-ttrj5   0/1     Completed   0          46m   10.244.1.199      k8s-node-01     <none>           <none>
ingress-nginx-admission-patch-bgrp8    0/1     Completed   1          46m   10.244.1.200      k8s-node-01     <none>           <none>
ingress-nginx-controller-2h2dt         1/1     Running     0          46m   192.168.110.2     k8s-node-02     <none>           <none>
ingress-nginx-controller-cjwtb         1/1     Running     0          46m   192.168.110.213   k8s-node-01     <none>           <none>
ingress-nginx-controller-jxwkf         1/1     Running     0          46m   192.168.110.97    k8s-master-01   <none>           <none>

9. Generate the certificate

openssl genrsa -out tls.key 2048
openssl req -x509 -key tls.key -out tls.crt -subj "/C=CN/ST=ShangHai/L=ShangHai/O=Ingress/CN=test.ingress.com"
kubectl -n default create secret tls ingress-tls --cert=tls.crt --key=tls.key

{lamp/}

10. Some useful queries

[root@k8s-master-01 test2]# kubectl get svc
NAME         TYPE           CLUSTER-IP       EXTERNAL-IP     PORT(S)     AGE
gowebhost    ClusterIP      10.99.181.85     <none>          9999/TCP    5m12s
gowebip      ClusterIP      10.100.100.184   <none>          8888/TCP    4m59s
kubernetes   ClusterIP      10.96.0.1        <none>          443/TCP     16d
my-service   ExternalName   <none>           www.baidu.com   <none>      19h
mysql-k8s    ClusterIP      None             <none>          13306/TCP   19h

[root@k8s-master-01 test2]# kubectl get ingress
NAME           CLASS   HOSTS              ADDRESS   PORTS   AGE
ingress-test   nginx   test.ingress.com             80      5m21s

kubectl get secrets   # view the certificate secret
kubectl get deployments
kubectl delete deployment <deployment-name>
kubectl delete service <service-name>

[root@k8s-master-01 test2]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.100.138.120   <none>        80:31220/TCP,443:30969/TCP   34m
ingress-nginx-controller-admission   ClusterIP   10.97.181.161    <none>        443/TCP                      34m

Remove the scheduling restriction (taint) on the master node. The first check shows the control-plane taint; once it has been removed, the second check shows none.

[root@k8s-master-01 test2]# kubectl describe node k8s-master-01 | grep Taints
Taints:             node-role.kubernetes.io/control-plane:NoSchedule
[root@k8s-master-01 test2]# kubectl describe node k8s-master-01 | grep Taints
Taints:             <none>
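
The two `Taints` checks above show the state before and after, but not the command that removes the taint. The original post does not show the command it ran, so the following is only a minimal sketch of one way to do it with the standard `kubectl taint` syntax, where a trailing `-` after the taint removes it. The node name and taint key are taken from the output above; the `NODE` and `DRY_RUN` variables and the `untaint_cmd` helper are assumptions introduced for illustration.

```shell
#!/bin/sh
# Sketch: remove the control-plane NoSchedule taint so that pods
# (for example the ingress-nginx controller) can be scheduled on the master.
set -eu

NODE="${NODE:-k8s-master-01}"                             # node name from the output above
TAINT="node-role.kubernetes.io/control-plane:NoSchedule"  # taint shown by "grep Taints"

# Hypothetical helper: print the kubectl command for a node and taint.
# The trailing "-" is what tells kubectl to remove the taint instead of adding it.
untaint_cmd() {
  printf 'kubectl taint nodes %s %s-\n' "$1" "$2"
}

# DRY_RUN=1 (the default in this sketch) only prints the command.
# Set DRY_RUN=0 to actually run it against the cluster.
if [ "${DRY_RUN:-1}" = "1" ]; then
  untaint_cmd "$NODE" "$TAINT"
else
  kubectl taint nodes "$NODE" "${TAINT}-"
  kubectl describe node "$NODE" | grep Taints
fi
```

Saved under a name of your choice, the script can be run with `DRY_RUN=0` to apply the change. Keep in mind that removing the taint lets any workload be scheduled on the control-plane node; adding a matching toleration to the controller's pod spec is an alternative that leaves the taint in place.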