
Connecting Jenkins to Harbor

1. First connect Jenkins to k8s and set up dynamic slaves

https://axzys.cn/index.php/archives/521/

2. Pipeline configuration

Build a test image, tag it for the Harbor registry, and push it. The first push fails on certificate verification and the second fails on authentication; both are fixed in turn below.

    cat > Dockerfile << EOF
    FROM busybox
    CMD ["echo","hello","container"]
    EOF
    nerdctl build -t busybox:v1 .
    root@k8s-03:~# nerdctl images
    REPOSITORY      TAG  IMAGE ID      CREATED         PLATFORM     SIZE     BLOB SIZE
    busybox         v1   05610df32232  19 seconds ago  linux/amd64  4.338MB  2.146MB
    buildkitd-test  v1   05610df32232  41 hours ago    linux/amd64  4.338MB  2.146MB
    # Tag the image
    root@k8s-03:~# nerdctl tag busybox:v1 192.168.30.180:30003/test/busybox:v1
    # The push fails: the HTTPS connection needs a trusted certificate
    root@k8s-03:~# nerdctl push 192.168.30.180:30003/test/busybox:v1
    INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:05610df32232fdd6d6276d0aa50c628fc3acd75deb010cf15a4ac74cf35ea348)
    manifest-sha256:05610df32232fdd6d6276d0aa50c628fc3acd75deb010cf15a4ac74cf35ea348: waiting |--------------------------------------|
    layer-sha256:90b9666d4aed1893ff122f238948dfd5e8efdcf6c444fe92371ea0f01750bf8c: waiting |--------------------------------------|
    config-sha256:0b44030dca1d1504de8aa100696d5c86f19b06cec660cf55c2ba6c5c36d1fb89: waiting |--------------------------------------|
    elapsed: 0.1 s total: 0.0 B (0.0 B/s)
    FATA[0000] failed to do request: Head "https://192.168.30.180:30003/v2/test/busybox/blobs/sha256:90b9666d4aed1893ff122f238948dfd5e8efdcf6c444fe92371ea0f01750bf8c": tls: failed to verify certificate: x509: certificate signed by unknown authority
    # Download the CA certificate from Harbor, then copy it into the system trust store
    root@k8s-03:~# sudo cp ca.crt /usr/local/share/ca-certificates/192.168.30.180-registry.crt
    # Update the certificate store
    root@k8s-03:~# sudo update-ca-certificates
    Updating certificates in /etc/ssl/certs...
    rehash: warning: skipping ca-certificates.crt,it does not contain exactly one certificate or CRL
    1 added, 0 removed; done.
    Running hooks in /etc/ca-certificates/update.d...
    done.
    # Restart containerd
    root@k8s-03:~# sudo systemctl restart containerd
    # Push the image to Harbor; the error below occurs because we have not logged in to Harbor yet
    root@k8s-03:~# nerdctl push 192.168.30.180:30003/test/busybox:v1
    INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:05610df32232fdd6d6276d0aa50c628fc3acd75deb010cf15a4ac74cf35ea348)
    manifest-sha256:05610df32232fdd6d6276d0aa50c628fc3acd75deb010cf15a4ac74cf35ea348: waiting |--------------------------------------|
    layer-sha256:90b9666d4aed1893ff122f238948dfd5e8efdcf6c444fe92371ea0f01750bf8c: waiting |--------------------------------------|
    config-sha256:0b44030dca1d1504de8aa100696d5c86f19b06cec660cf55c2ba6c5c36d1fb89: waiting |--------------------------------------|
    elapsed: 0.1 s total: 0.0 B (0.0 B/s)
    FATA[0000] push access denied, repository does not exist or may require authorization: authorization failed: no basic auth credentials
    # Log in to Harbor
    root@k8s-03:~# nerdctl login 192.168.30.180:30003
    Enter Username: admin
    Enter Password:
    WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/go/credential-store/
    Login Succeeded
    # Push again; this time it succeeds
    root@k8s-03:~# nerdctl push 192.168.30.180:30003/test/busybox:v1
    INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:05610df32232fdd6d6276d0aa50c628fc3acd75deb010cf15a4ac74cf35ea348)
    manifest-sha256:05610df32232fdd6d6276d0aa50c628fc3acd75deb010cf15a4ac74cf35ea348: done |++++++++++++++++++++++++++++++++++++++|
    layer-sha256:90b9666d4aed1893ff122f238948dfd5e8efdcf6c444fe92371ea0f01750bf8c: done |++++++++++++++++++++++++++++++++++++++|
    config-sha256:0b44030dca1d1504de8aa100696d5c86f19b06cec660cf55c2ba6c5c36d1fb89: done |++++++++++++++++++++++++++++++++++++++|
    elapsed: 0.7 s total: 2.0 Mi (2.9 MiB/s)
    root@k8s-03:~#
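The tag, login, and push sequence above can be sketched as a small shell helper. This is only an illustration under assumptions: the function names `image_ref` and `push_plan` are hypothetical, and `push_plan` merely prints the nerdctl commands seen in the transcript rather than running them.

```shell
#!/usr/bin/env bash

# Hypothetical helper: compose a full image reference for a private registry.
image_ref() {
  local registry="$1" project="$2" name="$3" tag="$4"
  printf '%s/%s/%s:%s\n' "$registry" "$project" "$name" "$tag"
}

# Hypothetical helper: print (do not run) the commands used in the transcript.
# $1 is a local image given as name:tag, $2 the registry host:port, $3 the project.
push_plan() {
  local src="$1" registry="$2" project="$3"
  local ref
  # ${src%%:*} is the part before the first colon, ${src##*:} the part after the last.
  ref="$(image_ref "$registry" "$project" "${src%%:*}" "${src##*:}")"
  printf 'nerdctl tag %s %s\n' "$src" "$ref"
  printf 'nerdctl login %s\n' "$registry"
  printf 'nerdctl push %s\n' "$ref"
}
```

Calling `push_plan busybox:v1 192.168.30.180:30003 test` prints the three commands in the order they have to run; logging in before pushing avoids the "no basic auth credentials" failure.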


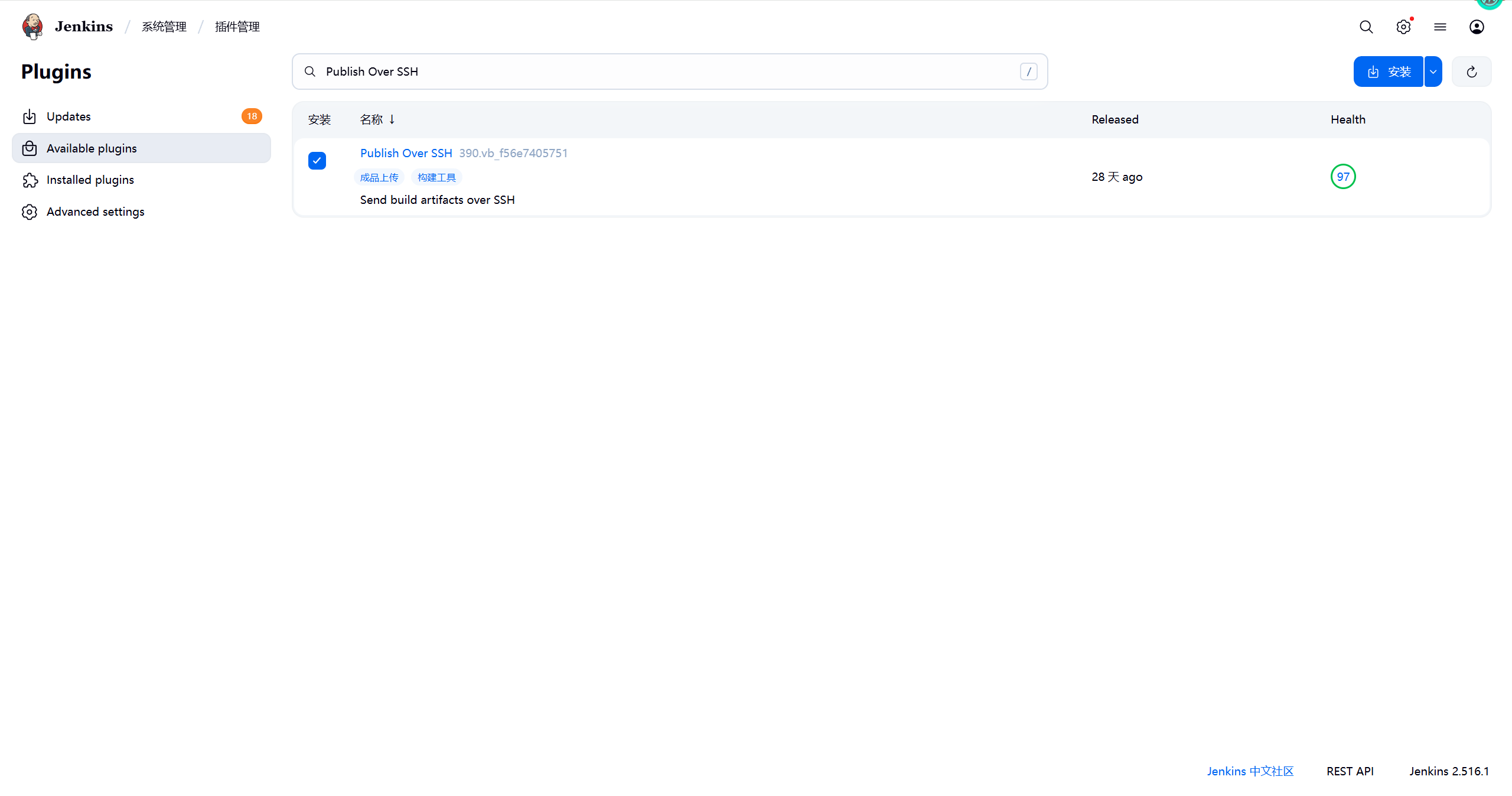

Running shell commands on a remote server from Jenkins

1. Passwordless login setup for Jenkins

1.1 Install the Publish Over SSH plugin

1.2 Configure passwordless SSH login

Run the following on the Jenkins host.

    root@k8s-03:~# ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    /root/.ssh/id_rsa already exists.
    Overwrite (y/n)? y
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa
    Your public key has been saved in /root/.ssh/id_rsa.pub
    The key fingerprint is:
    SHA256:bIagD4/Gebf8fslUICpXfV6Qf6+7nw+Z8vX/5ETwWcc root@k8s-03
    The key's randomart image is:
    +---[RSA 3072]----+
    | . .o |
    | o o o .. |
    | . o . + o. E|
    | ...oo o .o=|
    | o o. S . o+|
    | . * o . +.|
    | = + . o .. +.+|
    | . . o . + o.*o|
    | ooo. +=@|
    +----[SHA256]-----+
    root@k8s-03:~# ssh-copy-id 192.168.30.181
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@192.168.30.181's password:
    Number of key(s) added: 1
    Now try logging into the machine, with: "ssh '192.168.30.181'"
    and check to make sure that only the key(s) you wanted were added.
    root@k8s-03:~# ssh-copy-id 192.168.30.180
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@192.168.30.180's password:
    Number of key(s) added: 1
    Now try logging into the machine, with: "ssh '192.168.30.180'"
    and check to make sure that only the key(s) you wanted were added.
    root@k8s-03:~# ssh 192.168.30.180
    Welcome to Ubuntu 22.04.4 LTS (GNU/Linux 5.15.0-151-generic x86_64)
     * Documentation: https://help.ubuntu.com
     * Management: https://landscape.canonical.com
     * Support: https://ubuntu.com/pro
    System information as of Sun Aug 3 05:39:01 AM UTC 2025
      System load:  0.3642578125      Processes:                314
      Usage of /:   28.4% of 49.48GB  Users logged in:          1
      Memory usage: 13%               IPv4 address for docker0: 172.17.0.1
      Swap usage:   0%                IPv4 address for ens33:   192.168.30.180
     * Strictly confined Kubernetes makes edge and IoT secure. Learn how MicroK8s
       just raised the bar for easy, resilient and secure K8s cluster deployment.
       https://ubuntu.com/engage/secure-kubernetes-at-the-edge
    Expanded Security Maintenance for Applications is not enabled.
    66 updates can be applied immediately.
    To see these additional updates run: apt list --upgradable
    Enable ESM Apps to receive additional future security updates.
    See https://ubuntu.com/esm or run: sudo pro status
    New release '24.04.2 LTS' available.
    Run 'do-release-upgrade' to upgrade to it.
    Last login: Sun Aug 3 04:48:27 2025 from 192.168.30.1
    root@k8s-01:~# exit
    logout
    Connection to 192.168.30.180 closed.
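Logging in by hand confirms the key works for one host; a non-interactive check is handy when several hosts are involved. The sketch below is an assumption-laden illustration: the function names and the `DRY_RUN` variable are hypothetical, and it assumes the `root` account used in the transcript. `BatchMode=yes` makes ssh fail instead of falling back to a password prompt.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the ssh command that tests key-based login.
ssh_check_cmd() {
  local host="$1"
  printf 'ssh -o BatchMode=yes -o ConnectTimeout=5 root@%s true\n' "$host"
}

# Check every host given as an argument.
# With DRY_RUN=1 (hypothetical switch) the commands are only printed.
check_hosts() {
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      ssh_check_cmd "$host"
    elif ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
      printf '%s: key login ok\n' "$host"
    else
      printf '%s: key login FAILED\n' "$host"
    fi
  done
}
```

For the two hosts above this would be `check_hosts 192.168.30.180 192.168.30.181`.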
1.3 Plugin configuration

In Jenkins, go to [Manage Jenkins] > [System], find "Publish over SSH", and fill in the plugin settings.

    # Get the key by running cat .ssh/id_rsa on the Jenkins server.
    # Alternatively, fill in "Path to key" with /root/.ssh/id_rsa.
    root@k8s-03:~# cat .ssh/id_rsa
    id_rsa id_rsa.pub
    root@k8s-03:~# cat .ssh/id_rsa
    -----BEGIN OPENSSH PRIVATE KEY-----
    ... (private key body omitted) ...
    -----END OPENSSH PRIVATE KEY-----

2. Verification

2.1 Create a freestyle project

2.2 Create a test script

Create it in the ssh-test directory on the Jenkins server.

    root@k8s-03:~# mkdir -p /var/lib/jenkins/workspace/ssh-test
    root@k8s-03:~# cd /var/lib/jenkins/workspace/ssh-test/
    root@k8s-03:/var/lib/jenkins/workspace/ssh-test# ls
    root@k8s-03:/var/lib/jenkins/workspace/ssh-test# pwd
    /var/lib/jenkins/workspace/ssh-test
    root@k8s-03:/var/lib/jenkins/workspace/ssh-test# vi test.sh
    root@k8s-03:/var/lib/jenkins/workspace/ssh-test# cat test.sh
    #!/bin/bash
    date >> /tmp/date.txt

2.3 Add a build step

- Name: the Name of the SSH Server defined under "Manage Jenkins > System".
- Source files: optional. The files to copy to the remote host; `**/*` means all files under the current workspace.
- Remove prefix: optional. The leading directory to strip from file paths when copying.
- Remote directory: optional. The directory on the remote machine that files are copied to. If set, it is relative to the "Remote directory" of the "SSH Server", and it is created automatically if it does not exist.
- Exec command: the command to run on the remote host.

2.4 Workspace details to understand

1. When Jenkins is deployed on k8s and the pod is not pinned to a node, the node it starts on can change, and so does the directory backing the pod's mounted volume.
2. To pin the job to a node, add the node under the job's General settings. The node must be configured in advance: https://axzys.cn/index.php/archives/572/

2.5 Build and check the result

The console output shows that one file was transferred successfully. Log in to the server to check the result.

    root@k8s-02:~# cd /opt/jenkins/
    root@k8s-02:/opt/jenkins# ls
    remoting test.sh
    root@k8s-02:/opt/jenkins# cat test.sh
    #!/bin/bash
    date >> /tmp/date.txt
    root@k8s-02:/opt/jenkins# cat /tmp/date.txt
    Sun Aug 3 09:35:34 AM UTC 2025
    root@k8s-02:/opt/jenkins#
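What goes into the Exec command field could look like the sketch below. It is a hypothetical illustration, not the command used in the original job: the function name `run_transferred_script` is made up, and it assumes the transferred file lands in a directory such as /opt/jenkins, as in the listing above.

```shell
#!/usr/bin/env bash

# Hypothetical helper for the "Exec command" field: run a transferred script
# from the remote directory and fail clearly if the file did not arrive.
run_transferred_script() {
  local dir="$1" script="$2"
  if [ ! -f "$dir/$script" ]; then
    echo "missing: $dir/$script" >&2
    return 1
  fi
  # The subshell keeps the caller's working directory unchanged.
  ( cd "$dir" && bash "./$script" )
}
```

With the paths from this walkthrough the call would be `run_transferred_script /opt/jenkins test.sh`. Checking for the file first turns a silent transfer problem into a failed build step.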


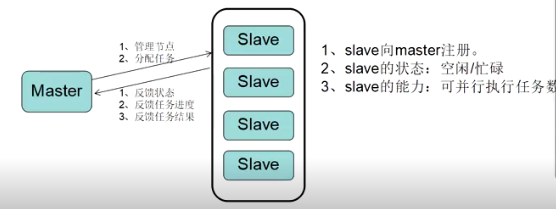

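Adding nodes to Jenkins: slave cluster configuration

1. The Jenkins Master/Slave mechanism

Jenkins uses a Master/Slave architecture, which corresponds to the server and agent roles. The Master provides the web interface for managing Jobs and Slaves. A Job can run on the Master itself or be dispatched to a Slave. One Master can have many Slaves attached, serving different Jobs or different configurations of the same Job.

Besides running builds concurrently to speed them up, the Master/Slave mechanism can be used for distributed automated testing. When there is a lot of test code, or the tests must run in parallel on several browsers, the tests can be split across nodes to shorten the total run time.

2. Cluster roles

**Master:** the Jenkins server. It schedules build jobs, dispatches builds to Slave nodes for execution, and monitors Slave status. The Master can still run jobs itself. Build results and artifacts are sent back to the Master, so the contents of the workspace under the Jenkins working directory, for example, also exist on the Master.

**Slave:** the executor machine. It runs the tasks assigned by the Master and reports progress and results back.

A Jenkins Master/Slave setup needs at least two machines: one Master node and one Slave node (production setups usually have several Slaves).

3. Setup steps

The Master does not need to be created explicitly. After Jenkins is installed and you log in to the main page, that machine is already the master by default.

Select "Manage Jenkins" -> "Manage Nodes and Clouds" to see the Master node's information.

4. Adding a Slave Node to Jenkins

4.1 Enable the TCP agent port

The agent port is a TCP port offered by the Jenkins server through which a slave connects to it. The Docker image of Jenkins enables this port by default on 50000. A hand-built Jenkins container or a Jenkins installed on a physical machine needs the port enabled manually; otherwise the slave cannot connect to Jenkins this way.

The port is not opened by a command. It is configured in the global security settings, and once configured Jenkins listens on the specified port.

4.2 Add the node

In the Jenkins UI select "Manage Jenkins" -> "Manage Nodes and Clouds" -> "New Node" and fill in the agent settings:

- Name: the name of the Slave machine.
- Description: free text, not important.
- Number of executors: how many tasks may run concurrently on this node, that is, how many Jobs can be dispatched to the Slave at once. It is usually set to the number of threads the CPU supports. [Note: on the Master node the same parameter controls whether the Master also runs builds or only acts as the scheduler.]
- Remote root directory: the folder that holds the projects. Code checked out by jobs configured on the Jenkins master is placed under this directory.
- Labels: used by the Job scheduling policy; a Slave Node is chosen according to the Label configured on the Job.
- Usage: two modes are supported, "Use this node as much as possible" and "Only build jobs with label expressions matching this node". Choose "Only build jobs with label expressions matching this node".

After the node is added it appears on the Jenkins main page, but the red cross shows that the Slave has not yet connected to the Master.

4.3 Configure the slave node

Install the JDK:

    #dnf -y install java-17-openjdk
    root@k8s-02:~# java -version
    openjdk version "17.0.16" 2025-07-15 LTS
    OpenJDK Runtime Environment Corretto-17.0.16.8.1 (build 17.0.16+8-LTS)
    OpenJDK 64-Bit Server VM Corretto-17.0.16.8.1 (build 17.0.16+8-LTS, mixed mode, sharing)

Install the agent. Open the node's page and run the agent commands shown there:

    root@k8s-02:~# curl -sO http://192.168.30.180:31530/jnlpJars/agent.jar
    root@k8s-02:~# java -jar agent.jar -url http://192.168.30.180:31530/ -secret 6e87c37900dfbdcff98099f6681f7b195a141de1cacb157679efc98f8fec2644 -name "k8s-02" -webSocket -workDir "/opt/jenkins"
    Aug 03, 2025 12:52:47 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /opt/jenkins/remoting as a remoting work directory
    Aug 03, 2025 12:52:47 PM org.jenkinsci.remoting.engine.WorkDirManager setupLogging
    INFO: Both error and output logs will be printed to /opt/jenkins/remoting
    Aug 03, 2025 12:52:47 PM hudson.remoting.Launcher createEngine
    INFO: Setting up agent: k8s-02
    Aug 03, 2025 12:52:47 PM hudson.remoting.Engine startEngine
    INFO: Using Remoting version: 3309.v27b_9314fd1a_4
    Aug 03, 2025 12:52:47 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
    INFO: Using /opt/jenkins/remoting as a remoting work directory
    Aug 03, 2025 12:52:47 PM hudson.remoting.Launcher$CuiListener status
    INFO: WebSocket connection open
    Aug 03, 2025 12:52:47 PM hudson.remoting.Launcher$CuiListener status
    INFO: Connected

4.4 Check the agent status

Set the node scheduling policy: on the Job's configuration page, under "General", tick "Restrict where this project can be run" and fill in the Label Expression.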
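The two agent commands above follow a fixed pattern that can be derived from the Jenkins URL and the node name. The sketch below only builds and prints them; the function names are hypothetical, and the secret is passed in as an argument rather than hard-coded. Note that the `-webSocket` flag used in the transcript connects over the regular HTTP port, so this particular launch does not go through the TCP agent port from 4.1.

```shell
#!/usr/bin/env bash

# Hypothetical helper: derive the agent.jar download URL from the Jenkins URL.
agent_jar_url() {
  local url="$1"
  # ${url%/} drops one trailing slash, if present.
  printf '%s/jnlpJars/agent.jar\n' "${url%/}"
}

# Hypothetical helper: print the agent launch command shown on the node page.
agent_cmd() {
  local url="$1" name="$2" workdir="$3" secret="$4"
  printf 'java -jar agent.jar -url %s -secret %s -name "%s" -webSocket -workDir "%s"\n' \
    "$url" "$secret" "$name" "$workdir"
}
```

For example, `agent_cmd http://192.168.30.180:31530/ k8s-02 /opt/jenkins "$SECRET"` reproduces the launch line from the transcript, with the secret taken from a variable so that it does not end up in notes or scripts.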


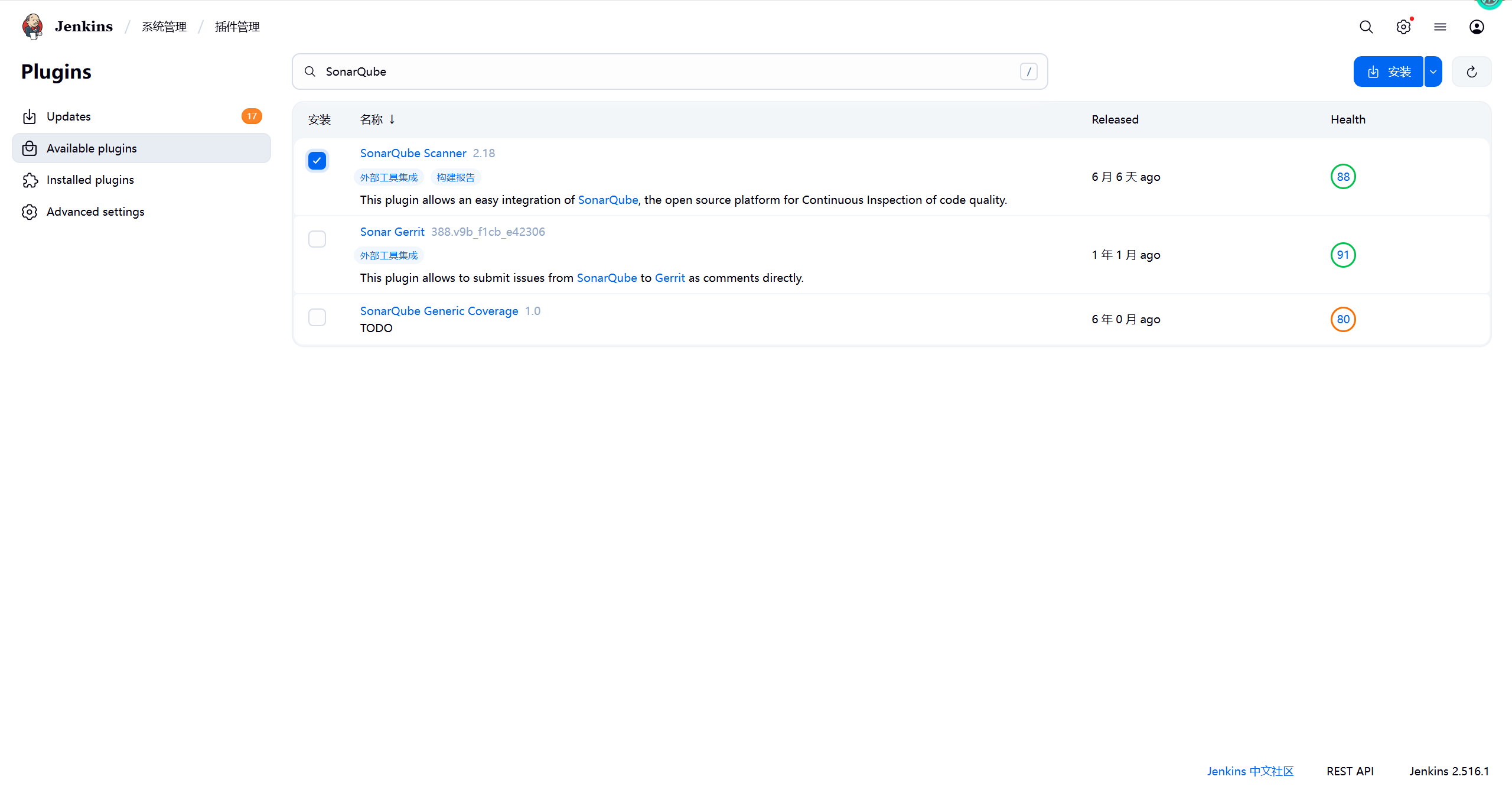

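Connecting Jenkins to SonarQube

1. Install the Jenkins plugin

1.1 Download the SonarQube plugin

In Jenkins go to Manage Jenkins -> Plugins -> Available plugins, type sonarqube in the search box, install the plugin, and restart.

1.2 Enable SonarQube

In Manage Jenkins -> System, add the SonarQube server.

2. SonarQube configuration

2.1 Disable uploading review results to SCM

2.2 Generate a token

Add a jenkins user and generate a token for it.

token: squ_4bc173eb520dd35c176104baa1b899a992e88c88

3. Jenkins configuration

3.1 Add the token

In Manage Jenkins -> System, click Add, switch the kind to Secret text, paste the token, and click Add. Then select the credential you just added and click Apply and Save.

3.2 Install SonarQube Scanner

In Manage Jenkins -> Global Tool Configuration, scroll down to the SonarQube Scanner section and click Add SonarQube Scanner. Here we choose automatic installation and the latest version.

4. Adding code review to a non-pipeline project

4.1 Add a build step

Edit the freestyle demo project built earlier and add a step in the build stage. The analysis properties are as follows:

    # Project key, globally unique
    sonar.projectKey=sprint_boot_demo
    # Project name
    sonar.projectName=sprint_boot_demo
    sonar.projectVersion=1.0
    # Source path to scan, relative to the project root
    sonar.sources=./src
    # Excluded directories
    sonar.exclusions=**/test/**,**/target/**
    # JDK version
    sonar.java.source=17
    sonar.java.target=17
    # Character encoding
    sonar.sourceEncoding=UTF-8
    # Path to compiled classes
    sonar.java.binaries=target/classes

4.2 Build and view the result

Click Build Now in Jenkins and check the build result, then check the scan result in SonarQube.

5. Adding code review to a pipeline project

5.1 Create the sonar-project.properties file

Create sonar-project.properties in the project root with the following content:

    # Project key, globally unique
    sonar.projectKey=sprint_boot_demo
    # Project name
    sonar.projectName=sprint_boot_demo
    sonar.projectVersion=1.0
    # Source path to scan, relative to the project root
    sonar.sources=./src
    # Excluded directories
    sonar.exclusions=**/test/**,**/target/**
    # JDK version
    sonar.java.source=17
    sonar.java.target=17
    # Character encoding
    sonar.sourceEncoding=UTF-8
    # Path to compiled classes
    sonar.java.binaries=target/classes

5.2 Add a SonarQube code review stage to the Jenkinsfile

    pipeline {
        agent any
        stages {
            stage('Checkout') {
                steps {
                    echo 'Starting checkout'
                    checkout([$class: 'GitSCM', branches: [[name: '*/master']], userRemoteConfigs: [[url: 'https://gitee.com/axzys/sprint_boot_demo.git']]])
                    echo 'Checkout finished'
                }
            }
            stage('Build and package') {
                steps {
                    echo 'Starting build'
                    sh 'mvn clean package'
                    echo 'Build finished'
                }
            }
            stage('Code review') {
                steps {
                    echo 'Starting code review'
                    script {
                        // Load the SonarQube scanner; the name must match the SonarQube Scanner name in the Jenkins global tool configuration
                        def scannerHome = tool 'SonarQube'
                        // Load the SonarQube server; the name must match the SonarQube servers name in the Jenkins system configuration
                        withSonarQubeEnv('SonarQube') {
                            sh "${scannerHome}/bin/sonar-scanner"
                        }
                    }
                    echo 'Code review finished'
                }
            }
            stage('Deploy') {
                steps {
                    echo 'Starting deployment'
                    echo 'Deployment finished'
                }
            }
        }
    }

5.3 Build and test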
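Since the same properties appear in both the freestyle step and the pipeline file, they could be generated from one place. The following is a minimal sketch under assumptions: the function name `sonar_properties` and its positional parameters are hypothetical, and the fixed paths assume the Maven layout used in this walkthrough.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print a sonar-project.properties for a Maven project.
# $1 = project key (also used as the name), $2 = version, $3 = Java release.
sonar_properties() {
  local key="$1" version="${2:-1.0}" java="${3:-17}"
  cat <<EOF
sonar.projectKey=${key}
sonar.projectName=${key}
sonar.projectVersion=${version}
sonar.sources=./src
sonar.exclusions=**/test/**,**/target/**
sonar.java.source=${java}
sonar.java.target=${java}
sonar.sourceEncoding=UTF-8
sonar.java.binaries=target/classes
EOF
}
```

Running `sonar_properties sprint_boot_demo > sonar-project.properties` in the project root would produce the file from 5.1, and the same output can be pasted into the freestyle job's analysis properties.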


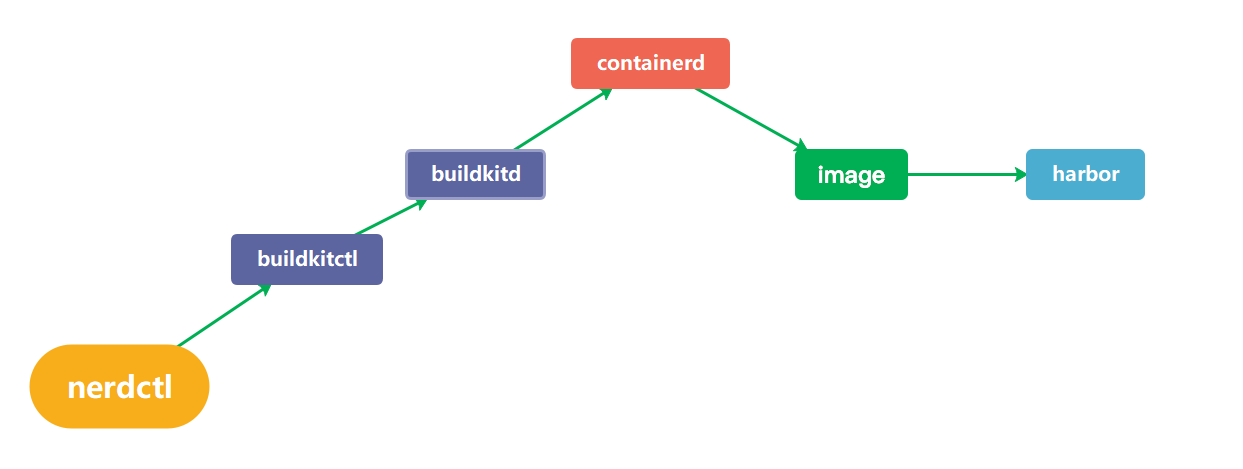

buildctl and nerdctl: installation and configuration

I. Installing and using nerdctl

Although containerd can be used directly by end users and ships its own command-line tool (ctr), ctr is not very user-friendly, which is why nerdctl came about. nerdctl is also a command-line tool for containerd. It supports the Docker CLI commands for container lifecycle management, as well as Docker Compose (nerdctl compose up).

1.1 Installing nerdctl

Download: https://github.com/containerd/nerdctl/releases

    # Download
    [root@k8s-master ~]# wget https://github.com/containerd/nerdctl/releases/download/v2.1.2/nerdctl-2.1.2-linux-amd64.tar.gz
    # Extract
    [root@k8s-master ~]# tar -zxvf nerdctl-2.1.2-linux-amd64.tar.gz
    nerdctl
    containerd-rootless-setuptool.sh
    containerd-rootless.sh
    # Move the binary into PATH
    [root@k8s-master ~]# mv nerdctl /usr/bin/
    # Enable bash completion for nerdctl
    [root@k8s-master ~]# echo 'source <(nerdctl completion bash)' >> /etc/profile
    [root@k8s-master ~]# source /etc/profile
    # Verify
    [root@k8s-master ~]# nerdctl -v
    nerdctl version 2.1.2

1.2 Namespaces

These are containerd namespaces, which are not the same thing as Kubernetes namespaces: default is containerd's default namespace, and k8s.io is the namespace used by Kubernetes.

    root@k8s-03:~/bin# nerdctl ns ls
    NAME                CONTAINERS    IMAGES    VOLUMES    LABELS
    buildkit            0             0         0
    buildkit_history    0             0         0
    default             0             1         0
    k8s.io              70            66        0
    # Create a namespace
    [root@k8s-master ~]# nerdctl ns create test
    # Remove a namespace
    [root@k8s-master ~]# nerdctl ns remove test
    test
    # Inspect a namespace
    [root@k8s-master ~]# nerdctl ns inspect k8s.io
    [
        {
            "Name": "k8s.io",
            "Labels": null
        }
    ]

1.3 Images

    root@k8s-03:~/bin# nerdctl -n k8s.io images
    REPOSITORY  TAG  IMAGE ID  CREATED  PLATFORM  SIZE  BLOB SIZE
    registry.cn-guangzhou.aliyuncs.com/xingcangku/jenkins-cangku  <none>  b3e519ae85d0  4 hours ago  linux/amd64  406.2MB  179.1MB
    <none>  <none>  b3e519ae85d0  4 hours ago  linux/amd64  406.2MB  179.1MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/jenkins-cangku  v1  b3e519ae85d0  4 hours ago  linux/amd64  406.2MB  179.1MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-registryctl  <none>  a13c1fd0b23e  22 hours ago  linux/amd64  163.5MB  67.74MB
    <none>  <none>  a13c1fd0b23e  22 hours ago  linux/amd64  163.5MB  67.74MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-registryctl  v2.13.0  a13c1fd0b23e  22 hours ago  linux/amd64  163.5MB  67.74MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/redis-photon  <none>  cb5883e8415a  22 hours ago  linux/amd64  171.5MB  61MB
    <none>  <none>  cb5883e8415a  22 hours ago  linux/amd64  171.5MB  61MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/redis-photon  v2.13.0  cb5883e8415a  22 hours ago  linux/amd64  171.5MB  61MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-core  <none>  d75212166cdb  22 hours ago  linux/amd64  202.4MB  63.85MB
    <none>  <none>  d75212166cdb  22 hours ago  linux/amd64  202.4MB  63.85MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-core  v2.13.0  d75212166cdb  22 hours ago  linux/amd64  202.4MB  63.85MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/registry-photon  <none>  b9139a9005f9  22 hours ago  linux/amd64  87.67MB  33.14MB
    <none>  <none>  b9139a9005f9  22 hours ago  linux/amd64  87.67MB  33.14MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/registry-photon  v2.13.0  b9139a9005f9  22 hours ago  linux/amd64  87.67MB  33.14MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-portal  <none>  19712b3eeee5  22 hours ago  linux/amd64  165.2MB  53.6MB
    <none>  <none>  19712b3eeee5  22 hours ago  linux/amd64  165.2MB  53.6MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-portal  v2.13.0  19712b3eeee5  22 hours ago  linux/amd64  165.2MB  53.6MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/gitlab-gitlab-ce-16.11.1-ce.0  <none>  decbed64a538  2 days ago  linux/amd64  3.109GB  1.253GB
    <none>  <none>  decbed64a538  2 days ago  linux/amd64  3.109GB  1.253GB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/gitlab-gitlab-ce-16.11.1-ce.0  16.11.1-ce.0  decbed64a538  2 days ago  linux/amd64  3.109GB  1.253GB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/traefik  <none>  39f367894114  2 days ago  linux/amd64  225.8MB  58.3MB
    <none>  <none>  39f367894114  2 days ago  linux/amd64  225.8MB  58.3MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/traefik  v3.0.0  39f367894114  2 days ago  linux/amd64  225.8MB  58.3MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/kubernetesui-dashboard  <none>  e291095692ba  3 days ago  linux/amd64  257.7MB  75.79MB
    <none>  <none>  e291095692ba  3 days ago  linux/amd64  257.7MB  75.79MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/kubernetesui-dashboard  v2.7.0  e291095692ba  3 days ago  linux/amd64  257.7MB  75.79MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/kubernetesui-metrics-scraper  <none>  ca7729489386  3 days ago  linux/amd64  43.82MB  19.74MB
    <none>  <none>  ca7729489386  3 days ago  linux/amd64  43.82MB  19.74MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/kubernetesui-metrics-scraper  v1.0.8  ca7729489386  3 days ago  linux/amd64  43.82MB  19.74MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/bitnami-postgresql  <none>  94485e7c7d1d  3 days ago  linux/amd64  280.1MB  90.55MB
    <none>  <none>  94485e7c7d1d  3 days ago  linux/amd64  280.1MB  90.55MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/bitnami-postgresql  11.14.0-debian-10-r22  94485e7c7d1d  3 days ago  linux/amd64  280.1MB  90.55MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/sonarqube-community  <none>  b5e625526868  3 days ago  linux/amd64  1.24GB  957.4MB
    <none>  <none>  b5e625526868  3 days ago  linux/amd64  1.24GB  957.4MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/sonarqube-community  25.5.0.107428-community  b5e625526868  3 days ago  linux/amd64  1.24GB  957.4MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/jenkins-jenkins-lts-jdk17  <none>  bb363b39bef3  3 days ago  linux/amd64  483.5MB  271.9MB
    <none>  <none>  bb363b39bef3  3 days ago  linux/amd64  483.5MB  271.9MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/jenkins-jenkins-lts-jdk17  lts-jdk17  bb363b39bef3  3 days ago  linux/amd64  483.5MB  271.9MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/trivy-adapter-photon  <none>  ad014f12e11c  3 days ago  linux/amd64  383.3MB  126.1MB
    <none>  <none>  ad014f12e11c  3 days ago  linux/amd64  383.3MB  126.1MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/trivy-adapter-photon  v2.13.0  ad014f12e11c  3 days ago  linux/amd64  383.3MB  126.1MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-db  <none>  dc08b59ada6d  3 days ago  linux/amd64  285.4MB  108.1MB
    <none>  <none>  dc08b59ada6d  3 days ago  linux/amd64  285.4MB  108.1MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-db  v2.13.0  dc08b59ada6d  3 days ago  linux/amd64  285.4MB  108.1MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-jobservice  <none>  8ccc99b52f23  3 days ago  linux/amd64  178.5MB  72.67MB
    <none>  <none>  8ccc99b52f23  3 days ago  linux/amd64  178.5MB  72.67MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-jobservice  v2.13.0  8ccc99b52f23  3 days ago  linux/amd64  178.5MB  72.67MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/nginx-photon  <none>  87662c08516c  3 days ago  linux/amd64  156.5MB  51.41MB
    <none>  <none>  87662c08516c  3 days ago  linux/amd64  156.5MB  51.41MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/nginx-photon  v2.13.0  87662c08516c  3 days ago  linux/amd64  156.5MB  51.41MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/coredns  <none>  90d3eeb2e210  3 days ago  linux/amd64  53.61MB  16.19MB
    <none>  <none>  90d3eeb2e210  3 days ago  linux/amd64  53.61MB  16.19MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/coredns  v1.10.1  90d3eeb2e210  3 days ago  linux/amd64  53.61MB  16.19MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc  <none>  f3e2173b0e48  3 days ago  linux/amd64  82.5MB  31.09MB
    <none>  <none>  f3e2173b0e48  3 days ago  linux/amd64  82.5MB  31.09MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc  0.25.5  f3e2173b0e48  3 days ago  linux/amd64  82.5MB  31.09MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd  <none>  564119549dd9  3 days ago  linux/amd64  10.73MB  4.755MB
    <none>  <none>  564119549dd9  3 days ago  linux/amd64  10.73MB  4.755MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd  1.5.1  564119549dd9  3 days ago  linux/amd64  10.73MB  4.755MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy  <none>  c1fd57dc0883  3 days ago  linux/amd64  75.16MB  23.91MB
    <none>  <none>  c1fd57dc0883  3 days ago  linux/amd64  75.16MB  23.91MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy  v1.27.0  c1fd57dc0883  3 days ago  linux/amd64  75.16MB  23.91MB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee  <none>  0d0658a57932  3 days ago  linux/amd64  712.7kB  308.4kB
    <none>  <none>  0d0658a57932  3 days ago  linux/amd64  712.7kB  308.4kB
    registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee  3.8  0d0658a57932  3 days ago  linux/amd64  712.7kB  308.4kB
    # Pull an image
    [root@k8s-master ~]# nerdctl -n test pull nginx:alpine
    # Build an image (apt-get update is needed before installing packages in a fresh debian image)
    [root@k8s-master ~]# cat Dockerfile
    FROM debian
    RUN apt-get update && apt-get install -y locales
    RUN echo "LC_ALL=\"zh_CN.UTF-8\"" >> /etc/default/locale
    RUN locale-gen "zh_CN.UTF-8"
    [root@k8s-master ~]# nerdctl -n test build -t abc.com/debian .
    # Push the image
    [root@k8s-master ~]# nerdctl -n test push abc.com/debian
    # Export the image
    [root@k8s-master ~]# nerdctl -n test save -o debian.tar abc.com/debian
    # Import the image
    [root@k8s-master ~]# nerdctl -n test load -i debian.tar

1.4 Containers

    root@k8s-03:~/bin# nerdctl -n k8s.io ps
    CONTAINER ID  IMAGE  COMMAND  CREATED  STATUS  PORTS  NAMES
    90fbd223a72f  registry.cn-guangzhou.aliyuncs.com/xingcangku/jenkins-jenkins-lts-jdk17:lts-jdk17  "/usr/bin/tini -- /u…"  9 hours ago  Up  k8s://cicd/jenkins-7d65887794-s4vhr/jenkins
    681d3d0f9346  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  9 hours ago  Up  k8s://cicd/jenkins-7d65887794-s4vhr
    cfb418a6f445  registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-jobservice:v2.13.0  "/harbor/entrypoint.…"  11 hours ago  Up  k8s://harbor/harbor-jobservice-6c766cbf57-4t4rv/jobservice
    566e8a6194f8  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-jobservice-6c766cbf57-4t4rv
    2f44c7d4f045  registry.cn-guangzhou.aliyuncs.com/xingcangku/nginx-photon:v2.13.0  "nginx -g daemon off;"  11 hours ago  Up  k8s://harbor/harbor-nginx-6569fc6f48-n58m4/nginx
    73298e3ed41f  registry.cn-guangzhou.aliyuncs.com/xingcangku/bitnami-postgresql:11.14.0-debian-10-r22  "/opt/bitnami/script…"  11 hours ago  Up  k8s://sonarqube/my-sonarqube-postgresql-0/my-sonarqube-postgresql
    a3904a1442a7  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://sonarqube/my-sonarqube-postgresql-0
    090451297d0e  registry.cn-guangzhou.aliyuncs.com/xingcangku/kubernetesui-metrics-scraper:v1.0.8  "/metrics-sidecar"  11 hours ago  Up  k8s://kubernetes-dashboard/dashboard-metrics-scraper-f9669b96-gqv9b/dashboard-metrics-scraper
    5e2e10ece736  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://kubernetes-dashboard/dashboard-metrics-scraper-f9669b96-gqv9b
    5fe6f542a5af  registry.cn-guangzhou.aliyuncs.com/xingcangku/kubernetesui-dashboard:v2.7.0  "/dashboard --insecu…"  11 hours ago  Up  k8s://kubernetes-dashboard/kubernetes-dashboard-5d8977b4cd-hn9wj/kubernetes-dashboard
    5f5145461dba  registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-portal:v2.13.0  "nginx -g daemon off;"  11 hours ago  Up  k8s://harbor/harbor-portal-7b67bff87d-hhbwf/portal
    0c91dee84ef4  registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-db:v2.13.0  "/docker-entrypoint.…"  11 hours ago  Up  k8s://harbor/harbor-database-0/database
    00f905053c35  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://kubernetes-dashboard/kubernetes-dashboard-5d8977b4cd-hn9wj
    bd3f1dd15a7a  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-portal-7b67bff87d-hhbwf
    abd9c09c0d84  registry.cn-guangzhou.aliyuncs.com/xingcangku/trivy-adapter-photon:v2.13.0  "/home/scanner/entry…"  11 hours ago  Up  k8s://harbor/harbor-trivy-0/trivy
    398f6f60263a  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-trivy-0
    13b06ef3a148  registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-registryctl:v2.13.0  "/home/harbor/start.…"  11 hours ago  Up  k8s://harbor/harbor-registry-84dc65db77-rq9qc/registryctl
    da585e4b08bd  registry.cn-guangzhou.aliyuncs.com/xingcangku/registry-photon:v2.13.0  "/home/harbor/entryp…"  11 hours ago  Up  k8s://harbor/harbor-registry-84dc65db77-rq9qc/registry
    ece6da7ad469  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-nginx-6569fc6f48-n58m4
    c0d6e45cec3c  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-registry-84dc65db77-rq9qc
    6bcc595f312c  registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.10.1  "/coredns -conf /etc…"  11 hours ago  Up  k8s://kube-system/coredns-65dcc469f7-xphsz/coredns
    8dd7971b626b  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://kube-system/coredns-65dcc469f7-xphsz
    e1daa9e322e5  registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.10.1  "/coredns -conf /etc…"  11 hours ago  Up  k8s://kube-system/coredns-65dcc469f7-fg85n/coredns
    6a75b9f4e905  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://kube-system/coredns-65dcc469f7-fg85n
    bfd37ad46a64  registry.cn-guangzhou.aliyuncs.com/xingcangku/traefik:v3.0.0  "/entrypoint.sh --gl…"  11 hours ago  Up  k8s://traefik/traefik-release-589c7ff647-ch4cz/traefik-release
    baacee8d0a07  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://traefik/traefik-release-589c7ff647-ch4cz
    eca5589418a2  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-database-0
    b543a7a9e25c  registry.cn-guangzhou.aliyuncs.com/xingcangku/harbor-core:v2.13.0  "/harbor/entrypoint.…"  11 hours ago  Up  k8s://harbor/harbor-core-797d458f8c-2gcjf/core
    558406c8bd5c  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-core-797d458f8c-2gcjf
    1a14db05c528  registry.cn-guangzhou.aliyuncs.com/xingcangku/redis-photon:v2.13.0  "redis-server /etc/r…"  11 hours ago  Up  k8s://harbor/harbor-redis-0/redis
    5c518e649a4a  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://harbor/harbor-redis-0
    8f75c2fd204b  registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5  "/opt/bin/flanneld -…"  11 hours ago  Up  k8s://kube-flannel/kube-flannel-ds-zp4jv/kube-flannel
    ea7d12788ef6  registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.27.0  "/usr/local/bin/kube…"  11 hours ago  Up  k8s://kube-system/kube-proxy-vfcq8/kube-proxy
    98f0354f74e5  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://kube-flannel/kube-flannel-ds-zp4jv
    a9c920f9e7c7  registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8  "/pause"  11 hours ago  Up  k8s://kube-system/kube-proxy-vfcq8
    # Start a container
    [root@k8s-master ~]# nerdctl -n test run -d -p 80:80 --name web nginx:alpine
    # Open a shell in the container
    [root@k8s-master ~]# nerdctl -n test exec -it web sh
    / #
    # Stop the container
    [root@k8s-master ~]# nerdctl -n test stop web
    web
    # Remove the container
    [root@k8s-master ~]# nerdctl -n test rm web
    web

1.5 Other operations

    # List networks
    [root@k8s-master ~]# nerdctl network ls
    NETWORK ID      NAME      FILE
                    cbr0      /etc/cni/net.d/10-flannel.conflist
    17f29b073143    bridge    /etc/cni/net.d/nerdctl-bridge.conflist
                    host
                    none
    # Show system information
    [root@k8s-master ~]# nerdctl system info
    Client:
     Namespace: default
     Debug Mode: false
    Server:
     Server Version: 1.6.4
     Storage Driver: overlayfs
     Logging Driver: json-file
     Cgroup Driver: cgroupfs
     Cgroup Version: 1
     Plugins:
      Log: fluentd journald json-file syslog
      Storage: native overlayfs
     Security Options:
      seccomp
       Profile: default
     Kernel Version: 4.18.0-425.13.1.el8_7.x86_64
     Operating System: Rocky Linux 8.7 (Green Obsidian)
     OSType: linux
     Architecture: x86_64
     CPUs: 2
     Total Memory: 3.618GiB
     Name: k8s-master
     ID: d2b76909-9552-4be5-a12a-00b955f756f2
    # Clean up data. Unlike Docker, which only removes images tagged "none",
    # this removes every image that is not currently in use.
    # (The -h flag shown here only prints the help text; drop it to actually prune.)
    [root@k8s-master ~]# nerdctl system prune -h

II. Building images with nerdctl + buildkitd

2.1 About BuildKit

BuildKit is an open-source image build toolkit from Docker that builds OCI-compliant images. It consists of two components:

buildkitd (server): currently supports runc and containerd as the image build environment. runc is the default and can be replaced with containerd.
buildctl (client): parses the Dockerfile and sends build requests to the buildkitd server.

Taking building an image and pushing it to Harbor as the example, the overall call flow is as follows:

2.2 Installing BuildKit

Download: https://github.com/moby/buildkit/releases

    [root@master ~]# wget https://github.com/moby/buildkit/releases/download/v0.13.2/buildkit-v0.13.2.linux-amd64.tar.gz
    [root@master ~]# tar -zxvf buildkit-v0.13.2.linux-amd64.tar.gz
    bin/
    bin/buildctl
    bin/buildkit-cni-bridge
    bin/buildkit-cni-firewall
    bin/buildkit-cni-host-local
    bin/buildkit-cni-loopback
    bin/buildkit-qemu-aarch64
    bin/buildkit-qemu-arm
    bin/buildkit-qemu-i386
    bin/buildkit-qemu-mips64
    bin/buildkit-qemu-mips64el
    bin/buildkit-qemu-ppc64le
    bin/buildkit-qemu-riscv64
    bin/buildkit-qemu-s390x
    bin/buildkit-runc
    bin/buildkitd
    [root@master ~]# cd bin/
    [root@master bin]# cp * /usr/local/bin/

Create the systemd service unit:

    [root@master bin]# cat /etc/systemd/system/buildkitd.service
    [Unit]
    Description=BuildKit
    Documentation=https://github.com/moby/buildkit

    [Service]
    ExecStart=/usr/local/bin/buildkitd --oci-worker=false --containerd-worker=true

    [Install]
    WantedBy=multi-user.target

Add a buildkitd configuration file with the registry settings. With the values below, the registry is reached over HTTPS (http = false) without verifying its certificate (insecure = true); set http = true instead if the registry only serves plain HTTP.

    [root@master bin]# vim /etc/buildkit/buildkitd.toml
    [registry."harbor.local.com"]
      http = false
      insecure = true

Start buildkitd:

    [root@master bin]# systemctl daemon-reload
    [root@master bin]# systemctl start buildkitd
    [root@master bin]# systemctl enable buildkitd

2.3 Building and testing an image

    [root@master ~]# cat Dockerfile
    FROM busybox
    CMD ["echo","hello","container"]
    [root@master ~]# nerdctl build -t busybox:v1 .
    [root@master ~]# nerdctl images
    REPOSITORY    TAG    IMAGE ID        CREATED               PLATFORM       SIZE       BLOB SIZE
    busybox       v1     fb6a2dfc7899    About a minute ago    linux/amd64    4.1 MiB    2.1 MiB
    [root@master ~]# nerdctl run busybox:v1
    hello container

2.4 Pushing to the Harbor registry

    [root@master ~]# nerdctl tag busybox:v1 harbor.local.com/app/busybox:v1
    [root@master ~]# nerdctl push harbor.local.com/app/busybox:v1

Checking the Harbor registry at this point shows that the image has been pushed successfully.
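
The build, tag and push steps from sections 2.3 and 2.4 can be sketched as a small shell helper. This is only a minimal sketch under stated assumptions, not something nerdctl or BuildKit provides: the function names harbor_ref, run and build_and_push and the DRY_RUN variable are hypothetical, and it assumes buildkitd is already running and that nerdctl login has been done against the registry. The only nerdctl subcommands used are build, tag and push, exactly as they appear in the post.

```shell
#!/usr/bin/env bash
# Sketch: build, tag and push an image to Harbor with nerdctl.
# harbor_ref, run and build_and_push are hypothetical helper names.

# Compose "<registry>/<project>/<name>:<tag>".
harbor_ref() {
  local registry="$1" project="$2" name="$3" tag="$4"
  printf '%s/%s/%s:%s\n' "$registry" "$project" "$name" "$tag"
}

# Execute a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Build from the Dockerfile in the current directory, then tag and push.
build_and_push() {
  local registry="$1" project="$2" name="$3" tag="$4"
  local ref
  ref="$(harbor_ref "$registry" "$project" "$name" "$tag")"
  run nerdctl build -t "${name}:${tag}" . || return 1
  run nerdctl tag "${name}:${tag}" "$ref" || return 1
  run nerdctl push "$ref"
}

# Example: print the three commands without executing them.
# DRY_RUN=1 build_and_push harbor.local.com app busybox v1
```

Running the example with DRY_RUN=1 is a cheap way to check the composed image reference before touching the registry; without it, the helper stops at the first failing step, which is where a missing CA certificate or a missing login would surface.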
