搜索到

1

篇与

的结果

-

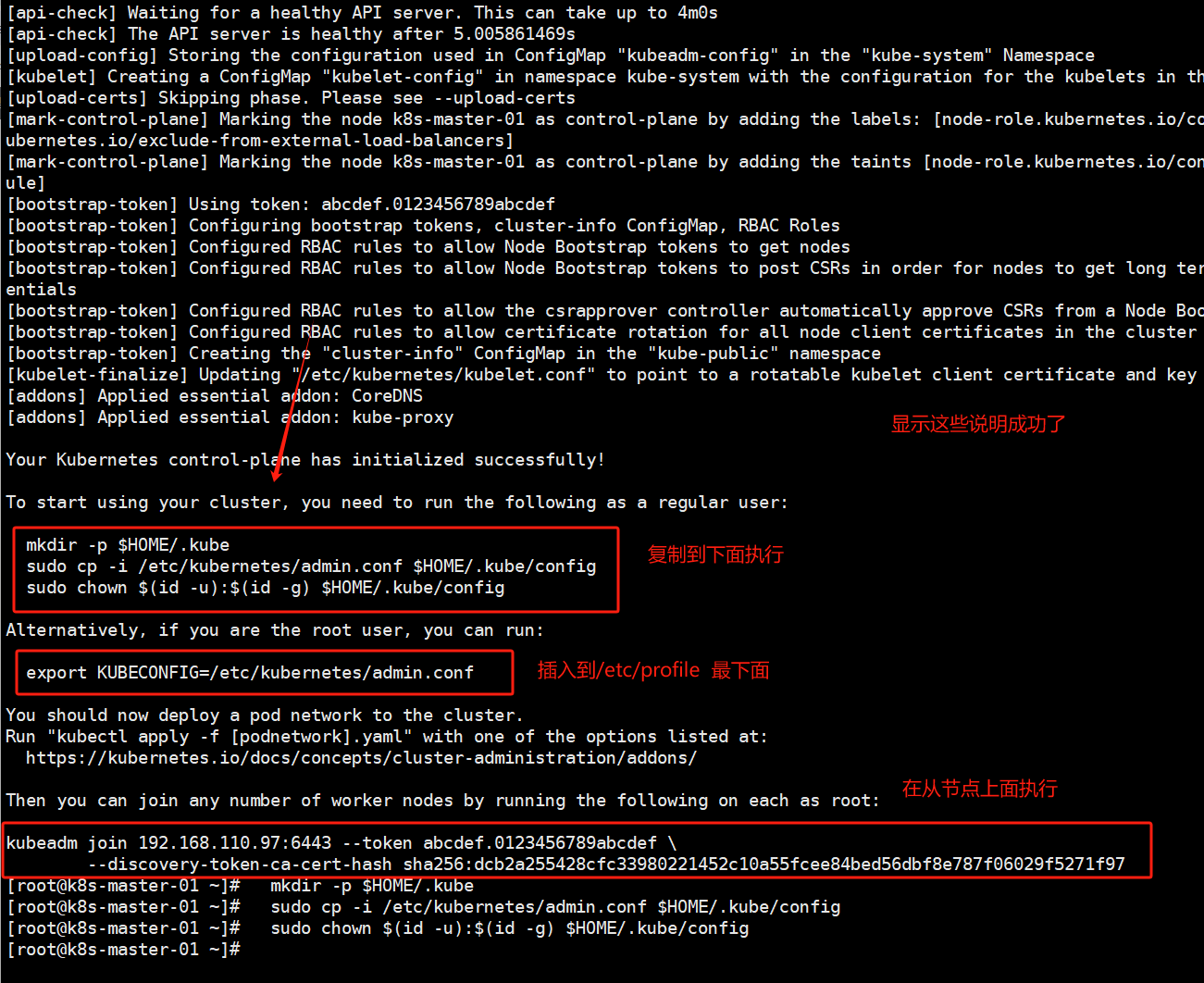

kubeadm 部署k8s 1.30 一、k8s包yum源介绍二、准备工作准备3台机器修改好网络改为固定IPcd /etc/NetworkManager/system-connections/ cp /etc/NetworkManager/system-connections/ens160.nmconnection /etc/NetworkManager/system-connections/ens160.nmconnection.backup vi ens160.nmconnection TYPE=Ethernet PROXY_METHOD=none BROWSER_ONLY=no BOOTPROTO=static DEFROUTE=yes NAME=ens33 DEVICE=ens33 ONBOOT=yes #这个可以让开机不用nmcli IPADDR=192.168.110.97 GATEWAY=192.168.110.1 NETSTAT=255.255.255.0 DNS1=8.8.8.8 DNS2=192.168.110.1 sudo systemctl restart NetworkManager nmcli conn up ens33修改主机名及解析(三台节点)# 1、修改主机名 hostnamectl set-hostname k8s-master-01 hostnamectl set-hostname k8s-node-01 hostnamectl set-hostname k8s-node-02 # 2、三台机器添加host解析 cat >> /etc/hosts << "EOF" 192.168.110.97 k8s-master-01 m1 192.168.110.213 k8s-node-01 n1 192.168.110.2 k8s-node-02 n2 EOF关闭一些服务(三台节点)# 1、关闭selinux sed -i 's#enforcing#disabled#g' /etc/selinux/config setenforce 0 # 2、禁用防火墙,网络管理,邮箱 systemctl disable --now firewalld NetworkManager postfix # 3、关闭swap分区 swapoff -a # 注释swap分区 cp /etc/fstab /etc/fstab_bak sed -i '/swap/d' /etc/fstabsshd服务优化# 1、加速访问 sed -ri 's@^#UseDNS yes@UseDNS no@g' /etc/ssh/sshd_config sudo sed -ri 's@^#?\s*GSSAPIAuthentication\s+yes@GSSAPIAuthentication no@gi' /etc/ssh/sshd_config grep ^UseDNS /etc/ssh/sshd_config grep ^GSSAPIAuthentication /etc/ssh/sshd_config systemctl restart sshd # 2、密钥登录(主机点做):为了让后续一些远程拷贝操作更方便 ssh-keygen ssh-copy-id -i root@k8s-master-01 ssh-copy-id -i root@k8s-node-01 ssh-copy-id -i root@k8s-node-02 #连接测试 [root@m01 ~]# ssh 172.16.1.7 Last login: Tue Nov 24 09:02:26 2020 from 10.0.0.1 [root@web01 ~]#6.增大文件标识符数量(退出当前会话立即生效)cat > /etc/security/limits.d/k8s.conf <<EOF * soft nofile 65535 * hard nofile 131070 EOF ulimit -Sn ulimit -Hn所有节点配置模块自动加载,此步骤不做的话(kubeadm init时会直接失败)modprobe br_netfilter modprobe ip_conntrack cat >>/etc/rc.sysinit<<EOF #!/bin/bash for file in /etc/sysconfig/modules/*.modules ; do [ -x $file ] && $file done EOF echo "modprobe br_netfilter" >/etc/sysconfig/modules/br_netfilter.modules echo "modprobe ip_conntrack" >/etc/sysconfig/modules/ip_conntrack.modules chmod 755 /etc/sysconfig/modules/br_netfilter.modules chmod 755 /etc/sysconfig/modules/ip_conntrack.modules lsmod | grep br_netfilter 同步集群时间# =====================》chrony服务端:服务端我们可以自己搭建,也可以直接用公网上的时间服务器,所以是否部署服务端看你自己 # 1、安装 yum -y install chrony # 2、修改配置文件 mv /etc/chrony.conf /etc/chrony.conf.bak cat > /etc/chrony.conf << EOF server ntp1.aliyun.com iburst minpoll 4 maxpoll 10 server ntp2.aliyun.com iburst minpoll 4 maxpoll 10 server ntp3.aliyun.com iburst minpoll 4 maxpoll 10 server ntp4.aliyun.com iburst minpoll 4 maxpoll 10 server ntp5.aliyun.com iburst minpoll 4 maxpoll 10 server ntp6.aliyun.com iburst minpoll 4 maxpoll 10 server ntp7.aliyun.com iburst minpoll 4 maxpoll 10 driftfile /var/lib/chrony/drift makestep 10 3 rtcsync allow 0.0.0.0/0 local stratum 10 keyfile /etc/chrony.keys logdir /var/log/chrony stratumweight 0.05 noclientlog logchange 0.5 EOF # 4、启动chronyd服务 systemctl restart chronyd.service # 最好重启,这样无论原来是否启动都可以重新加载配置 systemctl enable chronyd.service systemctl status chronyd.service # =====================》chrony客户端:在需要与外部同步时间的机器上安装,启动后会自动与你指定的服务端同步时间 # 下述步骤一次性粘贴到每个客户端执行即可 # 1、安装chrony yum -y install chrony # 2、需改客户端配置文件 mv /etc/chrony.conf /etc/chrony.conf.bak cat > /etc/chrony.conf << EOF server 192.168.110.97 iburst driftfile /var/lib/chrony/drift makestep 10 3 rtcsync local stratum 10 keyfile /etc/chrony.key logdir /var/log/chrony stratumweight 0.05 noclientlog logchange 0.5 EOF # 3、启动chronyd systemctl restart chronyd.service systemctl enable chronyd.service systemctl status chronyd.service # 4、验证 chronyc sources -v更新基础yum源(三台机器)# 1、清理 rm -rf /etc/yum.repos.d/* yum remove epel-release -y rm -rf /var/cache/yum/x86_64/6/epel/ # 2、安装阿里的base与epel源 curl -s -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo curl -s -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo yum clean all yum makecache # 或者用华为的也行 # curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo # yum install -y https://repo.huaweicloud.com/epel/epel-release-latest-7.noarch.rpm更新基础yum源(三台机器)# 1、清理 rm -rf /etc/yum.repos.d/* yum remove epel-release -y rm -rf /var/cache/yum/x86_64/6/epel/ # 2、安装阿里的base与epel源 curl -s -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo curl -s -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo yum clean all yum makecache # 或者用华为的也行 # curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo # yum install -y https://repo.huaweicloud.com/epel/epel-release-latest-7.noarch.rpm更新系统软件(排除内核) yum update -y --exclud=kernel*安装基础常用软件yum -y install expect wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git ntpdate chrony bind-utils rsync unzip git更新内核(docker对系统内核要求比较高,最好使用4.4+)主节点操作wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.274-1.el7.elrepo.x86_64.rpm wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.274-1.el7.elrepo.x86_64.rpm for i in n1 n2 ; do scp kernel-lt-* $i:/root; done 补充:如果下载的慢就从网盘里拿吧 链接:https://pan.baidu.com/s/1gVyeBQsJPZjc336E8zGjyQ 提取码:Egon 三个节点操作 #安装 yum localinstall -y /root/kernel-lt* #调到默认启动 grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg #查看当前默认启动的内核 grubby --default-kernel #重启系统 reboot三个节点安装IPVS# 1、安装ipvsadm等相关工具 yum -y install ipvsadm ipset sysstat conntrack libseccomp # 2、配置加载 cat > /etc/sysconfig/modules/ipvs.modules <<"EOF" #!/bin/bash ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack" for kernel_module in ${ipvs_modules}; do /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1 if [ $? -eq 0 ]; then /sbin/modprobe ${kernel_module} fi done EOF chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs三台机器修改内核参数cat > /etc/sysctl.d/k8s.conf << EOF net.ipv4.ip_forward = 1 net.bridge.bridge-nf-call-iptables = 1 net.bridge.bridge-nf-call-ip6tables = 1 fs.may_detach_mounts = 1 vm.overcommit_memory=1 vm.panic_on_oom=0 fs.inotify.max_user_watches=89100 fs.file-max=52706963 fs.nr_open=52706963 net.ipv4.tcp_keepalive_time = 600 net.ipv4.tcp.keepaliv.probes = 3 net.ipv4.tcp_keepalive_intvl = 15 net.ipv4.tcp.max_tw_buckets = 36000 net.ipv4.tcp_tw_reuse = 1 net.ipv4.tcp.max_orphans = 327680 net.ipv4.tcp_orphan_retries = 3 net.ipv4.tcp_syncookies = 1 net.ipv4.tcp_max_syn_backlog = 16384 net.ipv4.ip_conntrack_max = 65536 net.ipv4.tcp_max_syn_backlog = 16384 net.ipv4.top_timestamps = 0 net.core.somaxconn = 16384 EOF # 立即生效 sysctl --system三、 安装containerd(三台节点都要做)自Kubernetes1.24以后,K8S就不再原生支持docker了我们都知道containerd来自于docker,后被docker捐献给了云原生计算基金会(我们安装docker当然会一并安装上containerd)安装方法:centos的libseccomp的版本为2.3.1,不满足containerd的需求,需要下载2.4以上的版本即可,我这里部署2.5.1版本。 rpm -e libseccomp-2.5.1-1.el8.x86_64 --nodeps rpm -ivh libseccomp-2.5.1-1.e18.x8664.rpm #官网已经gg了,不更新了,请用阿里云 # wget http://rpmfind.net/linux/centos/8-stream/Base0s/x86 64/0s/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm wget https://mirrors.aliyun.com/centos/8/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm cd /root/rpms sudo yum localinstall libseccomp-2.5.1-1.el8.x86_64.rpm -y #yum libseccomp-2.5.1-1.el8.x86_64.rpm -y rpm -qa | grep libseccomp 安装方式一:(基于阿里云的源)推荐用这种方式,安装的是#1、卸载之前的 yum remove docker docker-ce containerd docker-common docker-selinux docker-engine -y #2、准备repo cd /etc/yum.repos.d/ wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo # 3、安装 yum install containerd* -y配置# 1、配置 mkdir -pv /etc/containerd containerd config default > /etc/containerd/config.toml #为containerd生成配置文件 #2、替换默认pause镜像地址:这一步非常非常非常非常重要 grep sandbox_image /etc/containerd/config.toml sed -i 's/registry.k8s.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/' /etc/containerd/config.toml grep sandbox_image /etc/containerd/config.toml #请务必确认新地址是可用的: sandbox_image="registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6" #3、配置systemd作为容器的cgroup driver grep SystemdCgroup /etc/containerd/config.toml sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml grep SystemdCgroup /etc/containerd/config.toml # 4、配置加速器(必须配置,否则后续安装cni网络插件时无法从docker.io里下载镜像) #参考:https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration #添加 config_path="/etc/containerd/certs.d" sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.tomlmkdir -p /etc/containerd/certs.d/docker.io cat>/etc/containerd/certs.d/docker.io/hosts.toml << EOF server ="https://docker.io" [host."https ://dockerproxy.com"] capabilities = ["pull","resolve"] [host."https://docker.m.daocloud.io"] capabilities = ["pull","resolve"] [host."https://docker.chenby.cn"] capabilities = ["pull","resolve"] [host."https://registry.docker-cn.com"] capabilities = ["pull","resolve" ] [host."http://hub-mirror.c.163.com"] capabilities = ["pull","resolve" ] EOF#5、配置containerd开机自启动 #5.1 启动containerd服务并配置开机自启动 systemctl daemon-reload && systemctl restart containerd systemctl enable --now containerd #5.2 查看containerd状态 systemctl status containerd #5.3查看containerd的版本 ctr version-------------------------配置docker(下述内容不用操作,因为k8s1.30直接对接containerd) # 1、配置docker # 修改配置:驱动与kubelet保持一致,否则会后期无法启动kubelet cat > /etc/docker/daemon.json << "EOF" { "exec-opts": ["native.cgroupdriver=systemd"], "registry-mirrors":["https://reg-mirror.qiniu.com/"] } EOF # 2、重启docker systemctl restart docker.service systemctl enable docker.service # 3、查看验证 [root@k8s-master-01 ~]# docker info |grep -i cgroup Cgroup Driver: systemd Cgroup Version: 1四、 安装k8s官网:https://kubernetes.io/zh-cn/docs/reference/setup-tools/kubeadm/kubeadm-init/1、三台机器准备k8s源cat > /etc/yum.repos.d/kubernetes.repo <<"EOF" [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/ enabled=1 gpgcheck=1 gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/repodata/repomd.xml.key EOF #参考:https://developer.aliyun.com/mirror/kubernetes/setenforce yum install -y kubelet-1.30* kubeadm-1.30* kubectl-1.30* systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet2、主节点操作(node节点不执行)初始化master节点(仅在master节点上执行) #可以kubeadm config images list查看 [root@k8s-master-01 ~]# kubeadm config images list registry.k8s.io/kube-apiserver:v1.30.0 registry.k8s.io/kube-controller-manager:v1.30.0 registry.k8s.io/kube-scheduler:v1.30.0 registry.k8s.io/kube-proxy:v1.30.0 registry.k8s.io/coredns/coredns:v1.11.1 registry.k8s.io/pause:3.9 registry.k8s.io/etcd:3.5.12-0kubeadm config print init-defaults > kubeadm.yamlvi kubeadm.yaml apiVersion: kubeadm.k8s.io/v1beta3 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s apiVersion: kubeadm.k8s.io/v1beta3 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s usages: - signing - authentication kind: InitConfiguration localAPIEndpoint: advertiseAddress: 192.168.110.97 #这里要改为控制节点 bindPort: 6443 nodeRegistration: criSocket: unix:///var/run/containerd/containerd.sock imagePullPolicy: IfNotPresent name: k8s-master-01 #这里要修改 taints: null --- apiServer: timeoutForControlPlane: 4m0s apiVersion: kubeadm.k8s.io/v1beta3 certificatesDir: /etc/kubernetes/pki clusterName: kubernetes controllerManager: {} dns: {} etcd: local: dataDir: /var/lib/etcd imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers #要去阿里云创建仓库 kind: ClusterConfiguration kubernetesVersion: 1.30.3 networking: dnsDomain: cluster.local serviceSubnet: 10.96.0.0/12 podSubnet: 10.244.0.0/16 #添加这行 scheduler: {} #在最后插入以下内容 --- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: ipvs --- apiVersion: kubelet.config.k8s.io/v1beta1 kind: KubeletConfiguration cgroupDriver: systemd部署K8Skubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap部署网络插件下载网络插件wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml[root@k8s-master-01 ~]# grep -i image kube-flannel.yml image: docker.io/flannel/flannel:v0.25.5 image: docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1 image: docker.io/flannel/flannel:v0.25.5 改为下面 要去阿里云上面构建自己的镜像[root@k8s-master-01 ~]# grep -i image kube-flannel.yml image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5 image: registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1 image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5 部署在master上即可kubectl apply -f kube-flannel.yml kubectl delete -f kube-flannel.yml #这个是删除网络插件的查看状态kubectl -n kube-flannel get pods kubectl -n kube-flannel get pods -w [root@k8s-master-01 ~]# kubectl get nodes # 全部ready [root@k8s-master-01 ~]# kubectl -n kube-system get pods # 两个coredns的pod也都ready部署kubectl命令提示(在所有节点上执行)yum install bash-completion* -y kubectl completion bash > ~/.kube/completion.bash.inc echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bash_profile source $HOME/.bash_profile排错解决方法:===========================================部署遇到问题之后,铲掉环境重新部署 # 在master节点上 kubeadm reset -f # 在所有节点包括master节点在内上执行如下命令 cd /tmp # 有时候在当前目录下可能与要卸载的包重名的而导致卸载报错,可以切个目录 rm -rf ~/.kube/ rm -rf /etc/kubernetes/ rm -rf /etc/cni rm -rf /opt/cni rm -rf /var/lib/etcd rm -rf /var/etcd rm -rf /run/flannel rm -rf /opt/cni rm -rf /etc/cni/net.d rm -rf /run/xtables.lock systemctl stop kubelet yum remove kube* -y for i in `df |grep kubelet |awk '{print $NF}'`;do umount -l $i ;done # 先卸载所有kubelet挂载否则下条命令无法删除 rm -rf /var/lib/kubelet rm -rf /etc/systemd/system/kubelet.service.d rm -rf /etc/systemd/system/kubelet.service rm -rf /usr/bin/kube* iptables -F reboot # 重新启动,从头再来 # 第一步:在所有节点执行 yum install -y kubelet-1.30* kubeadm-1.30* kubectl-1.30* systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet # 第二步:只在master节点上执行 [root@k8s-master-01 ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap # 第三步:部署网络插件 kubectl apply -f kube-flannel.yml kubectl delete -f kube-flannel.yml mkdir -p /etc/containerd/certs.d/registry.aliyuncs.com tee /etc/containerd/certs.d/registry.aliyuncs.com/hosts.toml <<EOF server = "https://registry.aliyuncs.com" [host."https://registry.aliyuncs.com"] capabilities = ["pull", "resolve"] EOF

kubeadm 部署k8s 1.30 一、k8s包yum源介绍二、准备工作准备3台机器修改好网络改为固定IPcd /etc/NetworkManager/system-connections/ cp /etc/NetworkManager/system-connections/ens160.nmconnection /etc/NetworkManager/system-connections/ens160.nmconnection.backup vi ens160.nmconnection TYPE=Ethernet PROXY_METHOD=none BROWSER_ONLY=no BOOTPROTO=static DEFROUTE=yes NAME=ens33 DEVICE=ens33 ONBOOT=yes #这个可以让开机不用nmcli IPADDR=192.168.110.97 GATEWAY=192.168.110.1 NETSTAT=255.255.255.0 DNS1=8.8.8.8 DNS2=192.168.110.1 sudo systemctl restart NetworkManager nmcli conn up ens33修改主机名及解析(三台节点)# 1、修改主机名 hostnamectl set-hostname k8s-master-01 hostnamectl set-hostname k8s-node-01 hostnamectl set-hostname k8s-node-02 # 2、三台机器添加host解析 cat >> /etc/hosts << "EOF" 192.168.110.97 k8s-master-01 m1 192.168.110.213 k8s-node-01 n1 192.168.110.2 k8s-node-02 n2 EOF关闭一些服务(三台节点)# 1、关闭selinux sed -i 's#enforcing#disabled#g' /etc/selinux/config setenforce 0 # 2、禁用防火墙,网络管理,邮箱 systemctl disable --now firewalld NetworkManager postfix # 3、关闭swap分区 swapoff -a # 注释swap分区 cp /etc/fstab /etc/fstab_bak sed -i '/swap/d' /etc/fstabsshd服务优化# 1、加速访问 sed -ri 's@^#UseDNS yes@UseDNS no@g' /etc/ssh/sshd_config sudo sed -ri 's@^#?\s*GSSAPIAuthentication\s+yes@GSSAPIAuthentication no@gi' /etc/ssh/sshd_config grep ^UseDNS /etc/ssh/sshd_config grep ^GSSAPIAuthentication /etc/ssh/sshd_config systemctl restart sshd # 2、密钥登录(主机点做):为了让后续一些远程拷贝操作更方便 ssh-keygen ssh-copy-id -i root@k8s-master-01 ssh-copy-id -i root@k8s-node-01 ssh-copy-id -i root@k8s-node-02 #连接测试 [root@m01 ~]# ssh 172.16.1.7 Last login: Tue Nov 24 09:02:26 2020 from 10.0.0.1 [root@web01 ~]#6.增大文件标识符数量(退出当前会话立即生效)cat > /etc/security/limits.d/k8s.conf <<EOF * soft nofile 65535 * hard nofile 131070 EOF ulimit -Sn ulimit -Hn所有节点配置模块自动加载,此步骤不做的话(kubeadm init时会直接失败)modprobe br_netfilter modprobe ip_conntrack cat >>/etc/rc.sysinit<<EOF #!/bin/bash for file in /etc/sysconfig/modules/*.modules ; do [ -x $file ] && $file done EOF echo "modprobe br_netfilter" >/etc/sysconfig/modules/br_netfilter.modules echo "modprobe ip_conntrack" >/etc/sysconfig/modules/ip_conntrack.modules chmod 755 /etc/sysconfig/modules/br_netfilter.modules chmod 755 /etc/sysconfig/modules/ip_conntrack.modules lsmod | grep br_netfilter 同步集群时间# =====================》chrony服务端:服务端我们可以自己搭建,也可以直接用公网上的时间服务器,所以是否部署服务端看你自己 # 1、安装 yum -y install chrony # 2、修改配置文件 mv /etc/chrony.conf /etc/chrony.conf.bak cat > /etc/chrony.conf << EOF server ntp1.aliyun.com iburst minpoll 4 maxpoll 10 server ntp2.aliyun.com iburst minpoll 4 maxpoll 10 server ntp3.aliyun.com iburst minpoll 4 maxpoll 10 server ntp4.aliyun.com iburst minpoll 4 maxpoll 10 server ntp5.aliyun.com iburst minpoll 4 maxpoll 10 server ntp6.aliyun.com iburst minpoll 4 maxpoll 10 server ntp7.aliyun.com iburst minpoll 4 maxpoll 10 driftfile /var/lib/chrony/drift makestep 10 3 rtcsync allow 0.0.0.0/0 local stratum 10 keyfile /etc/chrony.keys logdir /var/log/chrony stratumweight 0.05 noclientlog logchange 0.5 EOF # 4、启动chronyd服务 systemctl restart chronyd.service # 最好重启,这样无论原来是否启动都可以重新加载配置 systemctl enable chronyd.service systemctl status chronyd.service # =====================》chrony客户端:在需要与外部同步时间的机器上安装,启动后会自动与你指定的服务端同步时间 # 下述步骤一次性粘贴到每个客户端执行即可 # 1、安装chrony yum -y install chrony # 2、需改客户端配置文件 mv /etc/chrony.conf /etc/chrony.conf.bak cat > /etc/chrony.conf << EOF server 192.168.110.97 iburst driftfile /var/lib/chrony/drift makestep 10 3 rtcsync local stratum 10 keyfile /etc/chrony.key logdir /var/log/chrony stratumweight 0.05 noclientlog logchange 0.5 EOF # 3、启动chronyd systemctl restart chronyd.service systemctl enable chronyd.service systemctl status chronyd.service # 4、验证 chronyc sources -v更新基础yum源(三台机器)# 1、清理 rm -rf /etc/yum.repos.d/* yum remove epel-release -y rm -rf /var/cache/yum/x86_64/6/epel/ # 2、安装阿里的base与epel源 curl -s -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo curl -s -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo yum clean all yum makecache # 或者用华为的也行 # curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo # yum install -y https://repo.huaweicloud.com/epel/epel-release-latest-7.noarch.rpm更新基础yum源(三台机器)# 1、清理 rm -rf /etc/yum.repos.d/* yum remove epel-release -y rm -rf /var/cache/yum/x86_64/6/epel/ # 2、安装阿里的base与epel源 curl -s -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo curl -s -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo yum clean all yum makecache # 或者用华为的也行 # curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo # yum install -y https://repo.huaweicloud.com/epel/epel-release-latest-7.noarch.rpm更新系统软件(排除内核) yum update -y --exclud=kernel*安装基础常用软件yum -y install expect wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git ntpdate chrony bind-utils rsync unzip git更新内核(docker对系统内核要求比较高,最好使用4.4+)主节点操作wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.274-1.el7.elrepo.x86_64.rpm wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.274-1.el7.elrepo.x86_64.rpm for i in n1 n2 ; do scp kernel-lt-* $i:/root; done 补充:如果下载的慢就从网盘里拿吧 链接:https://pan.baidu.com/s/1gVyeBQsJPZjc336E8zGjyQ 提取码:Egon 三个节点操作 #安装 yum localinstall -y /root/kernel-lt* #调到默认启动 grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg #查看当前默认启动的内核 grubby --default-kernel #重启系统 reboot三个节点安装IPVS# 1、安装ipvsadm等相关工具 yum -y install ipvsadm ipset sysstat conntrack libseccomp # 2、配置加载 cat > /etc/sysconfig/modules/ipvs.modules <<"EOF" #!/bin/bash ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack" for kernel_module in ${ipvs_modules}; do /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1 if [ $? -eq 0 ]; then /sbin/modprobe ${kernel_module} fi done EOF chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs三台机器修改内核参数cat > /etc/sysctl.d/k8s.conf << EOF net.ipv4.ip_forward = 1 net.bridge.bridge-nf-call-iptables = 1 net.bridge.bridge-nf-call-ip6tables = 1 fs.may_detach_mounts = 1 vm.overcommit_memory=1 vm.panic_on_oom=0 fs.inotify.max_user_watches=89100 fs.file-max=52706963 fs.nr_open=52706963 net.ipv4.tcp_keepalive_time = 600 net.ipv4.tcp.keepaliv.probes = 3 net.ipv4.tcp_keepalive_intvl = 15 net.ipv4.tcp.max_tw_buckets = 36000 net.ipv4.tcp_tw_reuse = 1 net.ipv4.tcp.max_orphans = 327680 net.ipv4.tcp_orphan_retries = 3 net.ipv4.tcp_syncookies = 1 net.ipv4.tcp_max_syn_backlog = 16384 net.ipv4.ip_conntrack_max = 65536 net.ipv4.tcp_max_syn_backlog = 16384 net.ipv4.top_timestamps = 0 net.core.somaxconn = 16384 EOF # 立即生效 sysctl --system三、 安装containerd(三台节点都要做)自Kubernetes1.24以后,K8S就不再原生支持docker了我们都知道containerd来自于docker,后被docker捐献给了云原生计算基金会(我们安装docker当然会一并安装上containerd)安装方法:centos的libseccomp的版本为2.3.1,不满足containerd的需求,需要下载2.4以上的版本即可,我这里部署2.5.1版本。 rpm -e libseccomp-2.5.1-1.el8.x86_64 --nodeps rpm -ivh libseccomp-2.5.1-1.e18.x8664.rpm #官网已经gg了,不更新了,请用阿里云 # wget http://rpmfind.net/linux/centos/8-stream/Base0s/x86 64/0s/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm wget https://mirrors.aliyun.com/centos/8/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm cd /root/rpms sudo yum localinstall libseccomp-2.5.1-1.el8.x86_64.rpm -y #yum libseccomp-2.5.1-1.el8.x86_64.rpm -y rpm -qa | grep libseccomp 安装方式一:(基于阿里云的源)推荐用这种方式,安装的是#1、卸载之前的 yum remove docker docker-ce containerd docker-common docker-selinux docker-engine -y #2、准备repo cd /etc/yum.repos.d/ wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo # 3、安装 yum install containerd* -y配置# 1、配置 mkdir -pv /etc/containerd containerd config default > /etc/containerd/config.toml #为containerd生成配置文件 #2、替换默认pause镜像地址:这一步非常非常非常非常重要 grep sandbox_image /etc/containerd/config.toml sed -i 's/registry.k8s.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/' /etc/containerd/config.toml grep sandbox_image /etc/containerd/config.toml #请务必确认新地址是可用的: sandbox_image="registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6" #3、配置systemd作为容器的cgroup driver grep SystemdCgroup /etc/containerd/config.toml sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml grep SystemdCgroup /etc/containerd/config.toml # 4、配置加速器(必须配置,否则后续安装cni网络插件时无法从docker.io里下载镜像) #参考:https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration #添加 config_path="/etc/containerd/certs.d" sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.tomlmkdir -p /etc/containerd/certs.d/docker.io cat>/etc/containerd/certs.d/docker.io/hosts.toml << EOF server ="https://docker.io" [host."https ://dockerproxy.com"] capabilities = ["pull","resolve"] [host."https://docker.m.daocloud.io"] capabilities = ["pull","resolve"] [host."https://docker.chenby.cn"] capabilities = ["pull","resolve"] [host."https://registry.docker-cn.com"] capabilities = ["pull","resolve" ] [host."http://hub-mirror.c.163.com"] capabilities = ["pull","resolve" ] EOF#5、配置containerd开机自启动 #5.1 启动containerd服务并配置开机自启动 systemctl daemon-reload && systemctl restart containerd systemctl enable --now containerd #5.2 查看containerd状态 systemctl status containerd #5.3查看containerd的版本 ctr version-------------------------配置docker(下述内容不用操作,因为k8s1.30直接对接containerd) # 1、配置docker # 修改配置:驱动与kubelet保持一致,否则会后期无法启动kubelet cat > /etc/docker/daemon.json << "EOF" { "exec-opts": ["native.cgroupdriver=systemd"], "registry-mirrors":["https://reg-mirror.qiniu.com/"] } EOF # 2、重启docker systemctl restart docker.service systemctl enable docker.service # 3、查看验证 [root@k8s-master-01 ~]# docker info |grep -i cgroup Cgroup Driver: systemd Cgroup Version: 1四、 安装k8s官网:https://kubernetes.io/zh-cn/docs/reference/setup-tools/kubeadm/kubeadm-init/1、三台机器准备k8s源cat > /etc/yum.repos.d/kubernetes.repo <<"EOF" [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/ enabled=1 gpgcheck=1 gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm/repodata/repomd.xml.key EOF #参考:https://developer.aliyun.com/mirror/kubernetes/setenforce yum install -y kubelet-1.30* kubeadm-1.30* kubectl-1.30* systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet2、主节点操作(node节点不执行)初始化master节点(仅在master节点上执行) #可以kubeadm config images list查看 [root@k8s-master-01 ~]# kubeadm config images list registry.k8s.io/kube-apiserver:v1.30.0 registry.k8s.io/kube-controller-manager:v1.30.0 registry.k8s.io/kube-scheduler:v1.30.0 registry.k8s.io/kube-proxy:v1.30.0 registry.k8s.io/coredns/coredns:v1.11.1 registry.k8s.io/pause:3.9 registry.k8s.io/etcd:3.5.12-0kubeadm config print init-defaults > kubeadm.yamlvi kubeadm.yaml apiVersion: kubeadm.k8s.io/v1beta3 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s apiVersion: kubeadm.k8s.io/v1beta3 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s usages: - signing - authentication kind: InitConfiguration localAPIEndpoint: advertiseAddress: 192.168.110.97 #这里要改为控制节点 bindPort: 6443 nodeRegistration: criSocket: unix:///var/run/containerd/containerd.sock imagePullPolicy: IfNotPresent name: k8s-master-01 #这里要修改 taints: null --- apiServer: timeoutForControlPlane: 4m0s apiVersion: kubeadm.k8s.io/v1beta3 certificatesDir: /etc/kubernetes/pki clusterName: kubernetes controllerManager: {} dns: {} etcd: local: dataDir: /var/lib/etcd imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers #要去阿里云创建仓库 kind: ClusterConfiguration kubernetesVersion: 1.30.3 networking: dnsDomain: cluster.local serviceSubnet: 10.96.0.0/12 podSubnet: 10.244.0.0/16 #添加这行 scheduler: {} #在最后插入以下内容 --- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: ipvs --- apiVersion: kubelet.config.k8s.io/v1beta1 kind: KubeletConfiguration cgroupDriver: systemd部署K8Skubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap部署网络插件下载网络插件wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml[root@k8s-master-01 ~]# grep -i image kube-flannel.yml image: docker.io/flannel/flannel:v0.25.5 image: docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1 image: docker.io/flannel/flannel:v0.25.5 改为下面 要去阿里云上面构建自己的镜像[root@k8s-master-01 ~]# grep -i image kube-flannel.yml image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5 image: registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1 image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5 部署在master上即可kubectl apply -f kube-flannel.yml kubectl delete -f kube-flannel.yml #这个是删除网络插件的查看状态kubectl -n kube-flannel get pods kubectl -n kube-flannel get pods -w [root@k8s-master-01 ~]# kubectl get nodes # 全部ready [root@k8s-master-01 ~]# kubectl -n kube-system get pods # 两个coredns的pod也都ready部署kubectl命令提示(在所有节点上执行)yum install bash-completion* -y kubectl completion bash > ~/.kube/completion.bash.inc echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bash_profile source $HOME/.bash_profile排错解决方法:===========================================部署遇到问题之后,铲掉环境重新部署 # 在master节点上 kubeadm reset -f # 在所有节点包括master节点在内上执行如下命令 cd /tmp # 有时候在当前目录下可能与要卸载的包重名的而导致卸载报错,可以切个目录 rm -rf ~/.kube/ rm -rf /etc/kubernetes/ rm -rf /etc/cni rm -rf /opt/cni rm -rf /var/lib/etcd rm -rf /var/etcd rm -rf /run/flannel rm -rf /opt/cni rm -rf /etc/cni/net.d rm -rf /run/xtables.lock systemctl stop kubelet yum remove kube* -y for i in `df |grep kubelet |awk '{print $NF}'`;do umount -l $i ;done # 先卸载所有kubelet挂载否则下条命令无法删除 rm -rf /var/lib/kubelet rm -rf /etc/systemd/system/kubelet.service.d rm -rf /etc/systemd/system/kubelet.service rm -rf /usr/bin/kube* iptables -F reboot # 重新启动,从头再来 # 第一步:在所有节点执行 yum install -y kubelet-1.30* kubeadm-1.30* kubectl-1.30* systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet # 第二步:只在master节点上执行 [root@k8s-master-01 ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap # 第三步:部署网络插件 kubectl apply -f kube-flannel.yml kubectl delete -f kube-flannel.yml mkdir -p /etc/containerd/certs.d/registry.aliyuncs.com tee /etc/containerd/certs.d/registry.aliyuncs.com/hosts.toml <<EOF server = "https://registry.aliyuncs.com" [host."https://registry.aliyuncs.com"] capabilities = ["pull", "resolve"] EOF