Using a proxy to pull Docker images

I. Preparation

1. Have a working proxy ready. Setting one up is not covered here: if you know how, go ahead; if not, skip this post.
2. Test environment: Windows 11 host + VMware virtual machine running CentOS 7.9. The VM network is set to NAT.

II. Proxy

Clash Verge is used here. Turn on "Allow LAN" in its settings. If it is off, port 7890 is reachable only from the host itself, which is fine if Docker runs on that same machine.

III. Settings inside the VM

(1) Remove the registry mirror from the Docker configuration

Edit /etc/docker/daemon.json and remove any registry mirror configured earlier, for example this part:

    {
      "registry-mirrors": [ "https://hub-mirror.c.163.com" ]
    }

(2) Configure the proxy

Replace 10.0.0.1 with the IP of your host.

    mkdir -p /etc/systemd/system/docker.service.d/
    vim /etc/systemd/system/docker.service.d/proxy.conf

    [Service]
    Environment="HTTP_PROXY=http://10.0.0.1:7890"
    Environment="HTTPS_PROXY=http://10.0.0.1:7890"

(3) Reload the configuration and restart the service

    # reload systemd units
    systemctl daemon-reload
    # restart Docker
    systemctl restart docker
    # check that the proxy settings took effect
    systemctl show --property=Environment docker
    ## --- output ---
    Environment=HTTP_PROXY=http://10.0.0.1:7890 HTTPS_PROXY=http://10.0.0.1:7890

Pulling images is now smooth, although how smooth depends on the quality of your proxy.

IV. Proxy for the whole CentOS 7.9 system

Test first. If the connection already works, you can skip the rest of this section.

    curl -x http://192.168.110.119:7890 https://www.google.com

(1) Network configuration

    cat /etc/sysconfig/network-scripts/ifcfg-ens33
    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=dhcp
    DEFROUTE=yes
    NAME=ens33
    DEVICE=ens33
    ONBOOT=yes
    DNS1=8.8.8.8
    DNS2=8.8.4.4
    IPV4_FAILURE_FATAL=no
    IPV6INIT=no
    UUID=c96bc909-188e-ec64-3a96-6a90982b08ad
    PEERDNS=no

(2) Temporary setting

    export http_proxy="http://192.168.110.119:7890"
    export https_proxy="http://192.168.110.119:7890"
    export ftp_proxy="http://192.168.110.119:7890"

(3) Permanent setting

Add the following to /etc/profile (on Ubuntu, use /etc/environment):

    export http_proxy="http://192.168.110.119:7890"
    export https_proxy="http://192.168.110.119:7890"
    export ftp_proxy="http://192.168.110.119:7890"

(4) Load the configuration

    source /etc/profile
    sudo systemctl restart network
    sudo systemctl restart NetworkManager
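The drop-in from step III (2) can also be generated rather than typed by hand. The sketch below is only an illustration: `render_proxy_dropin` is a hypothetical helper name, and the `NO_PROXY` line is an addition that the steps above do not set (it keeps local addresses off the proxy). It prints the file content to stdout so it can be reviewed before anything is written.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Docker): print a systemd drop-in that
# points a service at an HTTP proxy.
#   $1 = proxy host, $2 = proxy port (default 7890),
#   $3 = NO_PROXY list (assumed default: localhost only)
render_proxy_dropin() {
  local host="$1"
  local port="${2:-7890}"
  local no_proxy="${3:-localhost,127.0.0.1}"
  printf '[Service]\n'
  printf 'Environment="HTTP_PROXY=http://%s:%s"\n' "$host" "$port"
  printf 'Environment="HTTPS_PROXY=http://%s:%s"\n' "$host" "$port"
  printf 'Environment="NO_PROXY=%s"\n' "$no_proxy"
}

# Dry run: show what would go into
# /etc/systemd/system/docker.service.d/proxy.conf
render_proxy_dropin 10.0.0.1 7890
```

To apply it, redirect the output into the drop-in file as root, then run `systemctl daemon-reload` and `systemctl restart docker` as in step (3).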


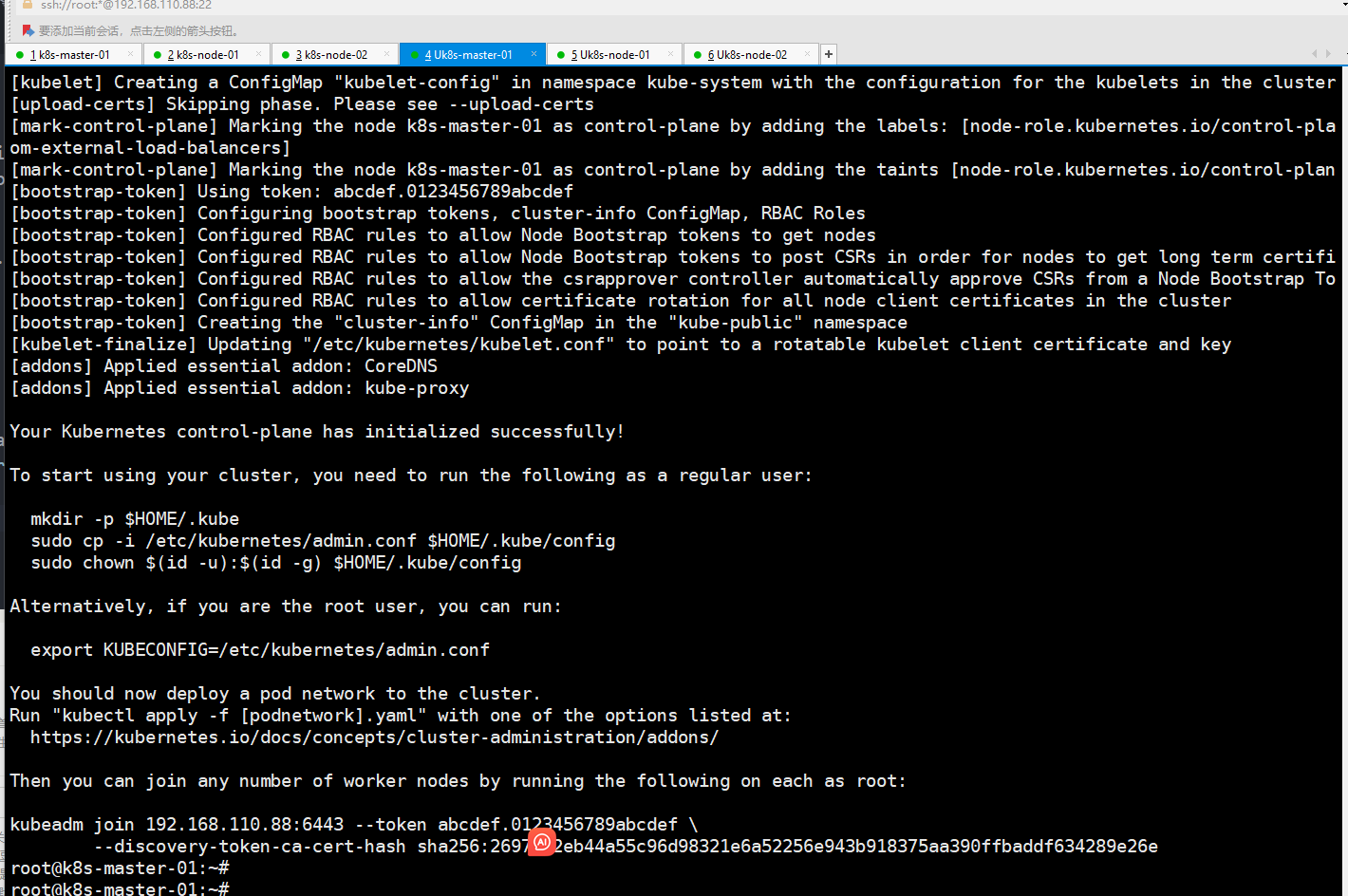

Installing kubeadm on Ubuntu to deploy k8s 1.30

I. Preparation

0. Enable the root user on Ubuntu

    sudo passwd root
    su - root   # enter the password you just set; after exiting you can log in as root next time

    # stop the firewall
    systemctl status ufw.service
    systemctl stop ufw.service

    # if SSH root login is disabled, enable it in /etc/ssh/sshd_config
    vi /etc/ssh/sshd_config
    PermitRootLogin yes
    # restart the service
    systemctl restart sshd

    # proxy acceleration, persistent across logins
    cat >/etc/profile.d/proxy.sh << 'EOF'
    export http_proxy="http://192.168.1.9:7890"
    export https_proxy="http://192.168.1.9:7890"
    export HTTP_PROXY="$http_proxy"
    export HTTPS_PROXY="$https_proxy"
    export no_proxy="127.0.0.1,localhost,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.cluster.local,.svc"
    export NO_PROXY="$no_proxy"
    EOF
    source /etc/profile.d/proxy.sh

    # run the following only after containerd has been installed
    mkdir -p /etc/systemd/system/containerd.service.d
    cat >/etc/systemd/system/containerd.service.d/http-proxy.conf << 'EOF'
    [Service]
    Environment="HTTP_PROXY=http://192.168.1.9:7890"
    Environment="HTTPS_PROXY=http://192.168.1.9:7890"
    Environment="NO_PROXY=127.0.0.1,localhost,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.cluster.local,.svc"
    EOF
    systemctl daemon-reload
    systemctl restart containerd
    crictl pull docker.io/library/busybox:latest

1. Open the Netplan configuration file

    sudo nano /etc/netplan/00-installer-config.yaml   # adjust to the actual file name

2. Edit the configuration file

2.1 Dynamic IP

    network:
      ethernets:
        ens33:            # NIC name (check with `ip a`)
          dhcp4: true
      version: 2

2.2 Static IP

    network:
      ethernets:
        ens33:
          dhcp4: no
          addresses: [192.168.1.100/24]     # IP/prefix length
          gateway4: 192.168.1.1             # gateway
          nameservers:
            addresses: [8.8.8.8, 1.1.1.1]   # DNS servers
      version: 2

3. Apply the configuration

    sudo netplan apply

4. SSH remote login

    # edit /etc/ssh/sshd_config
    PermitRootLogin yes
    sudo systemctl restart sshd

The three hosts run Ubuntu 22.04.4 and use the Aliyun apt mirror. Back up the current list first:

    sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak
    vi /etc/apt/sources.list
    deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

Machine configuration

    # set the hostname
    sudo hostnamectl set-hostname <hostname>
    # refresh the hostname without rebooting
    sudo hostname -F /etc/hostname

    cat >> /etc/hosts << "EOF"
    192.168.110.88 k8s-master-01 m1
    192.168.110.70 k8s-node-01 n1
    192.168.110.176 k8s-node-02 n2
    EOF

Cluster communication

    ssh-keygen
    ssh-copy-id m1
    ssh-copy-id n1
    ssh-copy-id n2

Disable swap (run on every host in the cluster)

    sed -ri 's/^([^#].*swap.*)$/#\1/' /etc/fstab && grep swap /etc/fstab && swapoff -a && free -h

Time synchronization

On the master node:

    sudo apt install chrony -y
    mv /etc/chrony/conf.d /etc/chrony/conf.d.bak
    mkdir -p /etc/chrony/conf.d   # recreate the directory that the next command writes into
    cat << 'EOF' > /etc/chrony/conf.d/aliyun.conf
    server ntp1.aliyun.com iburst minpoll 4 maxpoll 10
    server ntp2.aliyun.com iburst minpoll 4 maxpoll 10
    server ntp3.aliyun.com iburst minpoll 4 maxpoll 10
    server ntp4.aliyun.com iburst minpoll 4 maxpoll 10
    server ntp5.aliyun.com iburst minpoll 4 maxpoll 10
    server ntp6.aliyun.com iburst minpoll 4 maxpoll 10
    server ntp7.aliyun.com iburst minpoll 4 maxpoll 10
    driftfile /var/lib/chrony/drift
    makestep 10 3
    rtcsync
    allow 0.0.0.0/0
    local stratum 10
    keyfile /etc/chrony.keys
    logdir /var/log/chrony
    stratumweight 0.05
    noclientlog
    logchange 0.5
    EOF
    systemctl restart chronyd.service   # restart rather than start, so the new config is loaded either way
    systemctl enable chronyd.service
    systemctl status chronyd.service

On the worker nodes:

    sudo apt install chrony -y
    mv /etc/chrony/conf.d /etc/chrony/conf.d.bak
    mkdir -p /etc/chrony/conf.d   # recreate the directory that the next command writes into
    cat > /etc/chrony/conf.d/aliyun.conf << EOF
    server 192.168.110.88 iburst
    driftfile /var/lib/chrony/drift
    makestep 10 3
    rtcsync
    local stratum 10
    keyfile /etc/chrony.keys
    logdir /var/log/chrony
    stratumweight 0.05
    noclientlog
    logchange 0.5
    EOF
    systemctl restart chronyd.service   # load the new config

Kernel parameters (run on every host in the cluster)

Some of the keys below (for example fs.may_detach_mounts and net.ipv4.ip_conntrack_max) may not exist on current Ubuntu kernels; sysctl reports those and applies the rest.

    cat > /etc/sysctl.d/k8s.conf << EOF
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    fs.may_detach_mounts = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl = 15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.ipv4.ip_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF
    # apply immediately
    sysctl --system

A minimal alternative. It writes the same /etc/sysctl.d/k8s.conf, so it replaces the block above:

    # 1. load the required kernel module
    sudo modprobe br_netfilter
    # 2. load it automatically at boot
    echo "br_netfilter" | sudo tee /etc/modules-load.d/k8s.conf
    # 3. network parameters
    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF
    # 4. apply
    sudo sysctl --system
    # 5. verify
    ls /proc/sys/net/bridge/                          # should list bridge-nf-call-iptables
    cat /proc/sys/net/bridge/bridge-nf-call-iptables  # should print 1

Install common tools

    sudo apt update
    sudo apt install -y expect wget jq psmisc vim net-tools telnet lvm2 git ntpdate chrony bind9-utils rsync unzip

Install ipvsadm

    sudo apt install -y ipvsadm ipset sysstat conntrack
    # libseccomp is preinstalled
    dpkg -l | grep libseccomp

On Ubuntu 22.04.4 the /etc/sysconfig/modules/ directory usually does not exist: it is a Red Hat convention, and Ubuntu loads modules at boot through systemd, which reads /etc/modules-load.d/. To make sure the IPVS modules are loaded at boot, proceed as follows.

Create /etc/modules-load.d/ipvs.conf and list every module that should be loaded at boot:

    cat > /etc/modules-load.d/ipvs.conf << EOF
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack
    EOF

Load the modules. Use modprobe to load them now, or let the system load them at the next reboot:

    for m in ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr \
             ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack; do
      sudo modprobe "$m"
    done

Verify that the modules are loaded:

    lsmod | grep ip_vs

Modules and sysctl settings in one pass:

    # 1. kernel modules
    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF
    sudo modprobe overlay
    sudo modprobe br_netfilter
    # 2. required sysctl settings
    cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    # 3. optional common tuning (as needed)
    cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-extra.conf
    fs.inotify.max_user_watches = 524288
    fs.inotify.max_user_instances = 8192
    fs.file-max = 1000000
    EOF
    # 4. apply all sysctl settings
    sudo sysctl --system

II. Install containerd (on all three nodes)

    # libseccomp 2.4 or newer is enough; no need to install it again
    root@k8s-master-01:/etc/modules-load.d# dpkg -l | grep libseccomp
    ii  libseccomp2:amd64  2.5.3-2ubuntu2  amd64  high level interface to Linux seccomp filter

Install:

    apt install containerd* -y
    containerd --version   # check the version

Configure:

    mkdir -pv /etc/containerd
    containerd config default > /etc/containerd/config.toml   # generate the containerd config file
    vi /etc/containerd/config.toml
    # change the following line to point at your own repository
    sandbox_image = "registry.cn-guangzhou.aliyuncs.com/xingcangku/eeeee:3.8"

    # use systemd as the container cgroup driver
    grep SystemdCgroup /etc/containerd/config.toml
    sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml
    grep SystemdCgroup /etc/containerd/config.toml

Configure registry mirrors (required; otherwise the CNI plugin images cannot be pulled from docker.io later):

    # reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration
    # set config_path="/etc/containerd/certs.d"
    sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml
    mkdir -p /etc/containerd/certs.d/docker.io
    cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF
    server = "https://docker.io"
    [host."https://dockerproxy.com"]
      capabilities = ["pull","resolve"]
    [host."https://docker.m.daocloud.io"]
      capabilities = ["pull","resolve"]
    [host."https://docker.chenby.cn"]
      capabilities = ["pull","resolve"]
    [host."https://registry.docker-cn.com"]
      capabilities = ["pull","resolve"]
    [host."http://hub-mirror.c.163.com"]
      capabilities = ["pull","resolve"]
    EOF

    # start containerd and enable it at boot
    systemctl daemon-reload && systemctl restart containerd
    systemctl enable --now containerd
    # check the containerd status
    systemctl status containerd
    # check the containerd version
    ctr version

III. Install kubeadm, kubelet and kubectl

1. Prepare the k8s apt source on all three machines

    # Variant 1: ASCII-armored key
    apt-get update && apt-get install -y apt-transport-https
    sudo mkdir -p /etc/apt/keyrings
    curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo tee /etc/apt/keyrings/kubernetes-apt-keyring.asc > /dev/null
    echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.asc] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

    # Variant 2: dearmored key
    sudo mkdir -p /etc/apt/keyrings
    curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key \
      | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" \
      | sudo tee /etc/apt/sources.list.d/kubernetes.list

    sudo apt-get update
    sudo apt-get install -y kubeadm=1.30.14-1.1 kubelet=1.30.14-1.1 kubectl=1.30.14-1.1
    sudo apt-mark hold kubelet kubeadm kubectl

2. Master node only (do not run on worker nodes)

Initialize the master node.

    # list the required images
    [root@k8s-master-01 ~]# kubeadm config images list
    registry.k8s.io/kube-apiserver:v1.30.0
    registry.k8s.io/kube-controller-manager:v1.30.0
    registry.k8s.io/kube-scheduler:v1.30.0
    registry.k8s.io/kube-proxy:v1.30.0
    registry.k8s.io/coredns/coredns:v1.11.1
    registry.k8s.io/pause:3.9
    registry.k8s.io/etcd:3.5.12-0

    kubeadm config print init-defaults > kubeadm.yaml

    root@k8s-master-01:~# cat kubeadm.yaml
    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.110.88
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///var/run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      name: k8s-master-01
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.30.3
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16
    scheduler: {}
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: systemd

Deploy K8s

    kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification --ignore-preflight-errors=Swap

Deploy the network plugin

Download the manifest:

    wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
    [root@k8s-master-01 ~]# grep -i image kube-flannel.yml
    image: docker.io/flannel/flannel:v0.25.5
    image: docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1
    image: docker.io/flannel/flannel:v0.25.5

Change the images as shown below. You need to build your own copies on Aliyun first.

    root@k8s-master-01:~# grep -i image kube-flannel.yml
    image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5
    image: registry.cn-guangzhou.aliyuncs.com/xingcangku/ddd:1.5.1
    image: registry.cn-guangzhou.aliyuncs.com/xingcangku/cccc:0.25.5

Deploy it (on the master only):

    kubectl apply -f kube-flannel.yml
    kubectl delete -f kube-flannel.yml   # this one removes the network plugin

Check the status:

    kubectl -n kube-flannel get pods
    kubectl -n kube-flannel get pods -w
    [root@k8s-master-01 ~]# kubectl get nodes                 # all Ready
    [root@k8s-master-01 ~]# kubectl -n kube-system get pods   # both coredns pods are Ready too

Set up kubectl command completion (run on all nodes)

    apt install -y bash-completion
    mkdir -p ~/.kube
    kubectl completion bash > ~/.kube/completion.bash.inc
    echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bash_profile
    source $HOME/.bash_profile

If the following error appears:

    root@k8s-node-01:~# kubectl get node
    E0720 07:32:10.289542 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused
    E0720 07:32:10.290237 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused
    E0720 07:32:10.292469 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused
    E0720 07:32:10.292759 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused
    E0720 07:32:10.294655 18062 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

kubectl on that node has no kubeconfig yet. Copy it from the master:

    # run on the worker node; change the IP to your master's address
    mkdir -p $HOME/.kube
    scp root@192.168.30.135:/etc/kubernetes/admin.conf $HOME/.kube/config
    chown $(id -u):$(id -g) $HOME/.kube/config

Re-trigger the certificate upload (the key step)

After the control plane has been initialized successfully for the first time (kubeadm init), run the following command again. The certificate key it prints is valid for two hours.

    root@k8s-01:~# sudo kubeadm init phase upload-certs --upload-certs
    I0807 05:49:38.988834 143146 version.go:256] remote version is much newer: v1.33.3; falling back to: stable-1.27
    W0807 05:49:48.990339 143146 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.27.txt": Get "https://cdn.dl.k8s.io/release/stable-1.27.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)
    W0807 05:49:48.990372 143146 version.go:105] falling back to the local client version: v1.27.6
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key: 52cb628f88aefbb45cccb94f09bb4e27f9dc77aff464e7bc60af0a9843f41a3f

Then join the additional control-plane node using that key:

    kubeadm join <MASTER_IP>:6443 --token <TOKEN> \
      --discovery-token-ca-cert-hash sha256:<HASH> \
      --control-plane --certificate-key <KEY>
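The join command above differs between worker and control-plane nodes only in its last two flags. As a rough sketch of that difference, the hypothetical helper `build_join_cmd` below (not part of kubeadm) assembles the command string from its parts and only prints it. The token, hash and key passed in the examples are placeholders; on a real cluster they would come from the master, for instance from `kubeadm token create --print-join-command` and from the upload-certs output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kubeadm join command.
#   $1 = API endpoint (host:port), $2 = bootstrap token,
#   $3 = CA cert hash without the "sha256:" prefix,
#   $4 = certificate key (optional; when given, the node joins as control plane)
build_join_cmd() {
  local endpoint="$1"
  local token="$2"
  local ca_hash="$3"
  local cert_key="${4:-}"
  local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${ca_hash}"
  if [ -n "$cert_key" ]; then
    cmd="${cmd} --control-plane --certificate-key ${cert_key}"
  fi
  printf '%s\n' "$cmd"
}

# Worker node: no certificate key
build_join_cmd 192.168.110.88:6443 abcdef.0123456789abcdef '<HASH>'
# Additional control-plane node: certificate key appended
build_join_cmd 192.168.110.88:6443 abcdef.0123456789abcdef '<HASH>' '<KEY>'
```

Printing instead of executing keeps the sketch safe to run anywhere; paste the resulting line on the node that should join once the placeholders are replaced with real values.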


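Exporting a Docker container as an image

Container layers

Writable layer: upperdir
Image layers (read-only): lowerdir

When a container is deleted, the data in its writable layer is lost. If the goal is to build a new image on top of a base image, one way is to pull the base image, start a container from it with run, then exec into the container to install software and adjust the configuration. All of these writes stay in the upperdir layer and disappear once the container is destroyed. Before that happens, you can run commit from outside the container to export the whole container (upperdir + lowerdir) as a new image.

Pull the base image

    docker pull centos:7

Start a container

    docker run -d --name test111 centos:7 sleep 10000

Enter the container to install software, change the configuration, and write a startup script

    [root@test03 ~]# docker exec -ti test111 sh
    sh-4.2# mkdir /soft
    sh-4.2# echo 111 > /soft/1.txt
    sh-4.2# echo 222 > /soft/2.txt
    sh-4.2# echo "echo start...;tail -f /dev/null" > /soft/run.sh
    sh-4.2# exit

Commit the image

Committing the running container exports its upperdir + lowerdir as a new image.

    [root@test03 ~]# docker commit test111 myimage:v1.0
    [root@test03 ~]# docker images
    REPOSITORY   TAG    IMAGE ID       CREATED         SIZE
    myimage      v1.0   adfed0daa724   4 seconds ago   204MB

Start a container from the new image to check that the files written earlier are there:

    [root@test03 ~]# docker run -d --name test222 myimage:v1.0 sh /soft/run.sh
    ea04adcee6d7f157f764d2c8028eb5bdfd9c02a436ba3941c87a58304e853dfa
    [root@test03 ~]# docker top test222
    UID    PID     PPID    C   STIME   TTY   TIME       CMD
    root   21349   21330   0   11:39   ?     00:00:00   sh /soft/run.sh
    root   21375   21349   0   11:39   ?     00:00:00   tail -f /dev/null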
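In the walkthrough the startup command (sh /soft/run.sh) has to be passed on every docker run. As a possible refinement, docker commit accepts --change to apply a Dockerfile instruction such as CMD to the new image. The sketch below assumes the container and script path from this post; the function name commit_with_cmd, the tag v1.1, and the DOCKER override variable are made up for illustration (the override only exists so the command can be dry-run with echo).

```shell
# Sketch: commit a container and bake the startup command into the image.
# Set DOCKER=echo to print the command instead of running it.
commit_with_cmd() {
  container="$1"   # e.g. test111
  image="$2"       # e.g. myimage:v1.1
  "${DOCKER:-docker}" commit \
    --change 'CMD ["sh", "/soft/run.sh"]' \
    "$container" "$image"
}

# Usage (not executed here):
#   commit_with_cmd test111 myimage:v1.1
#   docker run -d --name test333 myimage:v1.1   # no command needed any more
```

For anything beyond a quick experiment, a Dockerfile is usually easier to reproduce than a committed container, since the steps are recorded in a file instead of living only in the upperdir.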


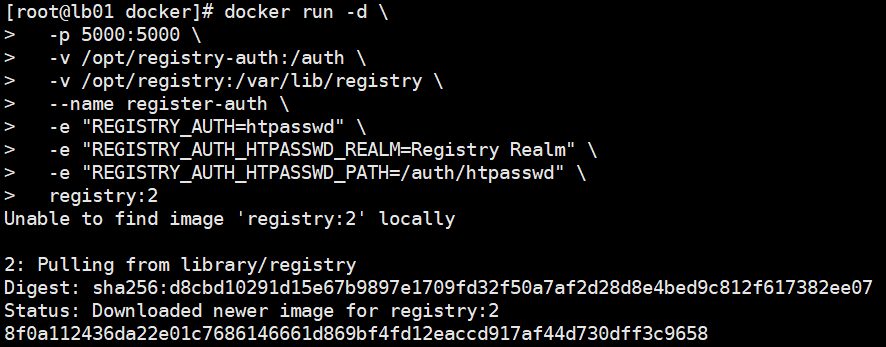

Deploying your own Docker image registry

Start your own registry

    docker pull registry
    mkdir /opt/registry
    docker run -d -p 5000:5000 --restart=always --name registry -v /opt/registry:/var/lib/registry registry

Configuration file

    cat > /etc/docker/daemon.json << EOF
    {
      "storage-driver": "overlay2",
      "insecure-registries": ["192.168.110.138:5000"],
      "registry-mirrors": ["https://docker.chenby.cn"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "live-restore": true
    }
    EOF
    systemctl restart docker

Push an image to the custom registry

    docker images

The image address has the form 192.168.15.100:5000/egonlin/nginx:v1.18. Tag the image first:

    docker tag centos:7 192.168.110.138:5000/egonlin/centos:7

Then push it, using the same address as in the tag:

    docker push 192.168.110.138:5000/egonlin/centos:7

Verify on another machine by pulling the image

    docker pull 192.168.110.138:5000/egonlin/centos:7

Extension: fixing the security problem

With the setup above anyone can pull and push, which is not reasonable. The registry should require account authentication.

    yum install httpd-tools -y
    mkdir /opt/registry-auth -p
    htpasswd -Bbn axing 123 >> /opt/registry-auth/htpasswd

Restart the container. Remove the old one first:

    docker container rm -f <ID of the registry container>

    docker run -d \
      -p 5000:5000 \
      -v /opt/registry-auth:/auth \
      -v /opt/registry:/var/lib/registry \
      --name register-auth \
      -e "REGISTRY_AUTH=htpasswd" \
      -e "REGISTRY_AUTH_HTPASSWD_REALM=Registry Realm" \
      -e "REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd" \
      registry:2

Test the login from the test machine

    docker login -u axing -p 123 192.168.110.138:5000

After logging in, push and pull work as before, for example:

    docker pull 192.168.110.138:5000/egonlin/centos:7

Troubleshooting summary

1. Problem: logging in to a private Harbor with docker fails with

    Error response from daemon: Get "https://.../v2/": http: server gave HTTP response to HTTPS client

2. Solution:

(1) On the server, change to the docker directory:

    cd /etc/docker

(2) Check whether daemon.json exists in this directory. If not, create it, then open it for editing:

    touch daemon.json
    vim daemon.json

(3) Add the following JSON key/value pair, filling in the IP address and port of your Harbor:

    { "insecure-registries": ["<your harbor ip>:<port>"] }

(4) Restart the docker service:

    systemctl restart docker

(5) Start the Docker containers again, where container_name is the name of each of your containers:

    docker start container_name

(6) Log in to Harbor again. The login should now succeed.
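Tagging and pushing only work when both commands use exactly the same registry address, so it can help to keep that address in a single variable. The following is a minimal sketch under that idea: the function names registry_ref and tag_and_push and the DOCKER override variable are made up for illustration, while the default registry address and namespace are the ones used in this post.

```shell
# Sketch: build the full image reference once and reuse it for tag and push.
# REGISTRY and NAMESPACE default to the values used in this post.
REGISTRY="${REGISTRY:-192.168.110.138:5000}"
NAMESPACE="${NAMESPACE:-egonlin}"

# Print <registry>/<namespace>/<image:tag> for a local image name.
registry_ref() {
  printf '%s/%s/%s\n' "$REGISTRY" "$NAMESPACE" "$1"
}

# Tag a local image for the private registry and push it.
# Set DOCKER=echo to print the commands instead of running them.
tag_and_push() {
  image="$1"
  ref="$(registry_ref "$image")"
  "${DOCKER:-docker}" tag "$image" "$ref" && "${DOCKER:-docker}" push "$ref"
}

# Usage (not executed here):
#   tag_and_push centos:7
```

On the machine that pulls, the same registry_ref output can be passed to docker pull, which keeps all three commands consistent.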


Installing Docker

1. Disable the firewall and SELinux first

    setenforce 0
    systemctl stop firewalld
    iptables -t filter -F

2. Installation

Remove any previous Docker installation

    yum remove docker docker-common docker-selinux docker-engine -y

Rocky Linux 9:

    sudo dnf remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

Install the packages Docker needs

    yum install -y yum-utils device-mapper-persistent-data lvm2

Rocky Linux 9 (dnf-plugins-core provides the config-manager command, so install it first):

    sudo dnf install dnf-plugins-core -y
    sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    sudo dnf install docker-ce docker-ce-cli containerd.io -y

Add the Docker yum repository

    yum install wget -y
    # The official repository is no longer reachable from mainland China, so use the Huawei Cloud mirror
    wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo
    # Alternatively, use the Alibaba Cloud mirror (identical to the official repository)
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Rocky Linux: add the official Docker repository

    # Note: Rocky Linux is compatible with the RHEL/CentOS repositories, so the CentOS 9 Docker repository can be used directly.
    sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install Docker

    # 1. Install
    yum install docker-ce -y
    systemctl start docker   # the directory /etc/docker is only created after the first start

    # 2. Edit the configuration
    vim /etc/docker/daemon.json   # remember to remove the comments below when editing the file
    {
      # 1. cgroup driver (covered in detail in the introduction to cgroups)
      "exec-opts": ["native.cgroupdriver=systemd"],
      # 2. Because of network conditions in mainland China, pulling from Docker Hub often fails or is very slow.
      #    A domestic registry mirror can be configured: if the image exists in the mirror it is returned directly,
      #    otherwise it is pulled from Docker Hub. Using a registry mirror is transparent to Docker users.
      "registry-mirrors": ["https://docker.chenby.cn"],
      # 3. Move the data directory from /var/lib/docker/ to /opt/mydocker/
      # 3.1 Older docker-ce versions use "graph" for the data directory
      # "graph": "/opt/mydocker",
      # 3.2 Newer versions (docker 20.x.x) use "data-root" instead of "graph"
      # "data-root": "/opt/mydocker",
      # 4. Keep containers running when the docker service restarts
      "live-restore": true
    }

Rocky Linux 9: install Docker Engine

    sudo dnf install docker-ce docker-ce-cli containerd.io -y

Start Docker and enable it at boot

Create the data directory first:

    [root@docker01 ~]# mkdir -p /opt/mydocker
    [root@docker01 ~]# systemctl restart docker.service
    [root@docker01 ~]# systemctl enable --now docker.service
    [root@docker01 ~]# docker info   # check

Rocky Linux 9: start Docker and enable it at boot

    sudo systemctl start docker
    sudo systemctl enable docker

Registry mirror (acceleration)

    cat > /etc/docker/daemon.json <<EOF
    {
      "registry-mirrors": ["https://15.164.211.114:6443"],
      "insecure-registries": ["15.164.211.114:6443"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    # Restart the Docker service
    systemctl daemon-reload && systemctl restart docker

====================== Installing Docker on Ubuntu ======================

Remove old versions (optional)

    sudo apt remove docker docker-engine docker.io containerd runc

Update the system and install dependencies

    sudo apt update
    sudo apt install -y ca-certificates curl gnupg lsb-release

Add the official Docker GPG key

    sudo mkdir -p /etc/apt/keyrings
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

Set up the Docker repository

    echo \
      "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
      $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

Install Docker Engine

    sudo apt update
    sudo apt install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin

Verify the installation

    sudo docker run hello-world

(Optional) Run Docker without sudo

    sudo usermod -aG docker $USER

Enable at boot

    sudo systemctl enable docker.service
    sudo systemctl enable containerd.service

Common management commands:

    sudo systemctl start docker     # start
    sudo systemctl stop docker      # stop
    sudo systemctl restart docker   # restart
    docker version                  # show the version
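The annotated daemon.json shown in this post is not valid JSON until the # comment lines are removed, and Docker will refuse to start with them in place. One way to keep an annotated copy and generate the real file from it is sketched below. The function name strip_hash_comments and the file name daemon.annotated.json are made up for illustration, and the sketch only handles lines that consist entirely of a # comment, not comments that trail a value.

```shell
# Sketch: print a file with every whole-line "#" comment removed.
# Lines whose first non-blank character is "#" are dropped; all other
# lines are passed through unchanged.
strip_hash_comments() {
  sed -e '/^[[:space:]]*#/d' "$1"
}

# Usage (not executed here):
#   strip_hash_comments daemon.annotated.json > /etc/docker/daemon.json
#   systemctl restart docker
```

This keeps the explanations next to each setting in the annotated copy while the file Docker reads stays plain JSON.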
