
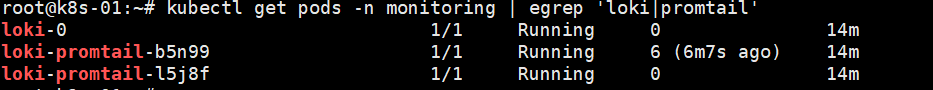

Loki deployment and image proxy

1. Deployment

1.1 Add the Grafana Helm repository

    helm repo add grafana https://grafana.github.io/helm-charts
    helm repo update

1.2 Install Loki Stack (Loki + Promtail only; Prometheus and Grafana are not installed again)

    # If you are behind a proxy: temporary, current shell only
    export http_proxy="http://192.168.3.135:7890"
    export https_proxy="http://192.168.3.135:7890"
    export HTTP_PROXY="$http_proxy"
    export HTTPS_PROXY="$https_proxy"
    # Internal networks and the k8s Service range normally bypass the proxy
    export no_proxy="localhost,127.0.0.1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
    export NO_PROXY="$no_proxy"

    # To make it permanent system-wide, write it to /etc/environment or /etc/profile:
    sudo tee -a /etc/environment >/dev/null <<EOF
    HTTP_PROXY=http://192.168.3.135:7890
    HTTPS_PROXY=http://192.168.3.135:7890
    http_proxy=http://192.168.3.135:7890
    https_proxy=http://192.168.3.135:7890
    NO_PROXY=localhost,127.0.0.1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local
    no_proxy=localhost,127.0.0.1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local
    EOF

    # Docker runtime: apply on every node.
    # 10.96.0.0/12 is the default Service CIDR of many kubeadm clusters; adjust it to match your cluster.
    sudo mkdir -p /etc/systemd/system/docker.service.d
    sudo tee /etc/systemd/system/docker.service.d/http-proxy.conf >/dev/null <<EOF
    [Service]
    Environment="HTTP_PROXY=http://192.168.3.135:7890"
    Environment="HTTPS_PROXY=http://192.168.3.135:7890"
    Environment="NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
    EOF
    sudo systemctl daemon-reload
    sudo systemctl restart docker
    sudo systemctl show --property=Environment docker

    # containerd runtime: apply on every node.
    # 10.96.0.0/12 is the default Service CIDR of many kubeadm clusters; adjust it to match your cluster.
    sudo mkdir -p /etc/systemd/system/containerd.service.d
    sudo tee /etc/systemd/system/containerd.service.d/http-proxy.conf >/dev/null <<EOF
    [Service]
    Environment="HTTP_PROXY=http://192.168.3.135:7890"
    Environment="HTTPS_PROXY=http://192.168.3.135:7890"
    Environment="NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
    EOF
    sudo systemctl daemon-reload
    sudo systemctl restart containerd
    sudo systemctl show --property=Environment containerd

    # Install the chart (creates the Loki and Promtail pods)
    helm install loki grafana/loki-stack \
      -n monitoring --create-namespace \
      --set grafana.enabled=false \
      --set prometheus.enabled=false

2. Configuration

2.1 If Loki and Prometheus are in the same namespace

2.2 Usage
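The proxy exception list is written out several times above (shell exports, /etc/environment, and the systemd drop-ins). As a minimal sketch, the helper below assembles it once from the fixed entries plus the cluster's Service CIDR. The function name `build_no_proxy` and its argument order are illustrative assumptions, not something from the original post.

```shell
# Hypothetical helper: print a comma-separated NO_PROXY value.
# Usage: build_no_proxy <service-cidr> [extra entries...]
build_no_proxy() {
  service_cidr="$1"
  shift
  # Fixed entries taken from the systemd drop-ins above
  result="localhost,127.0.0.1,${service_cidr},10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"
  # Append any additional hosts or domains passed by the caller
  for extra in "$@"; do
    result="${result},${extra}"
  done
  printf '%s\n' "$result"
}
```

It could then be used as `export NO_PROXY="$(build_no_proxy 10.96.0.0/12)"`, so that changing the Service CIDR only requires editing one argument.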


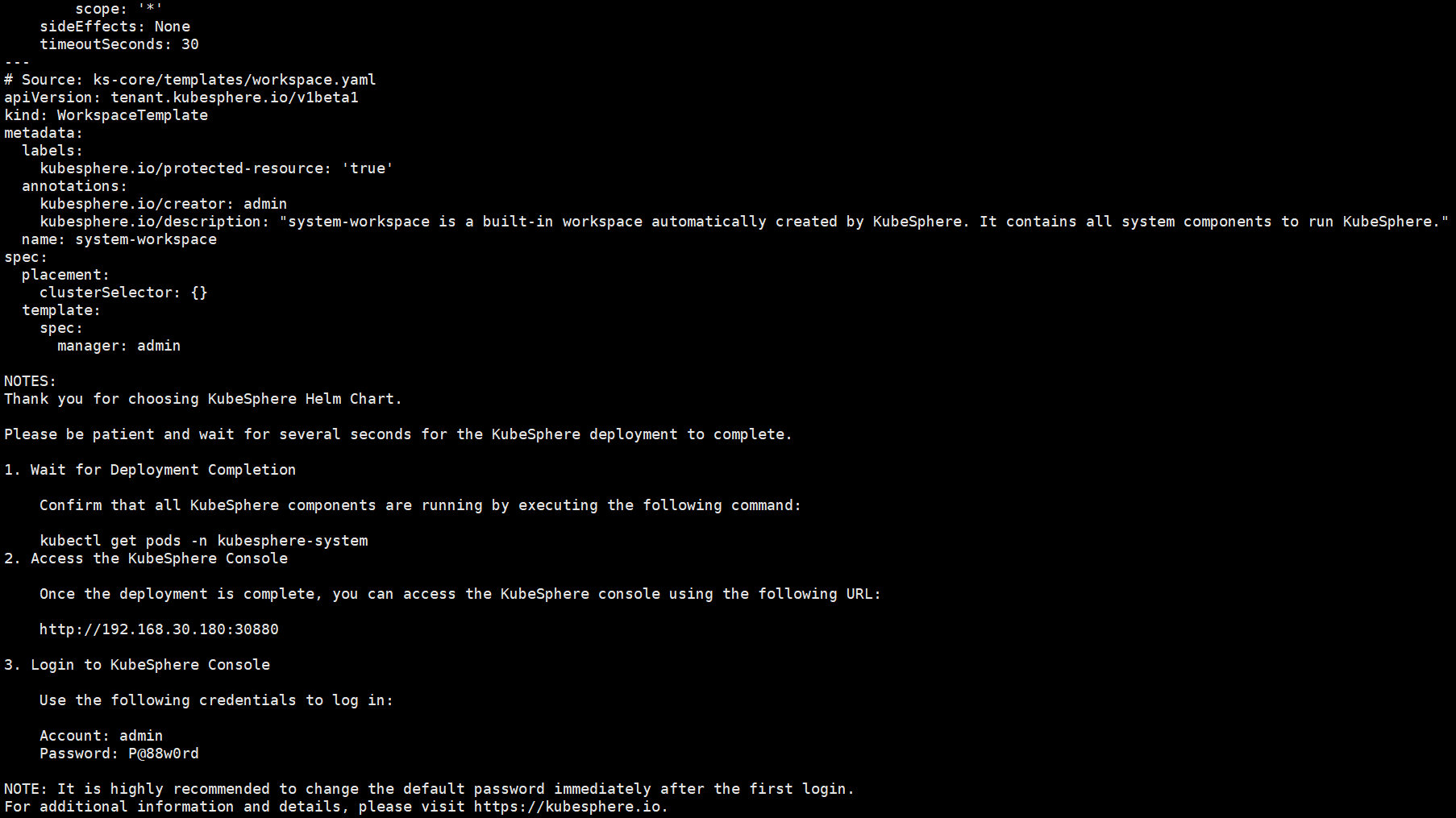

KubeSphere deployment

1. Installation

    # Official documentation: https://docs.kubesphere.com.cn/v4.2.0/01-intro/

1.1 Helm

    curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash
    helm version

1.2 Install ks-core

    chart=oci://hub.kubesphere.com.cn/kse/ks-core
    version=1.2.2
    helm upgrade --install \
      -n kubesphere-system \
      --create-namespace \
      ks-core $chart \
      --debug --wait \
      --version $version \
      --reset-values
    # If the images cannot be pulled, add this flag to the command above:
    #   --set extension.imageRegistry=swr.cn-north-9.myhuaweicloud.com/ks

1.3 Check the installation result

    kubectl get pods -n kubesphere-system

1.4 Access

    http://192.168.30.180:30880
    Account: admin
    Password: P@88w0rd

2. Activation

In the web console, look up the cluster ID, then request a license code at https://kubesphere.com.cn/apply-license/. The code is sent by email; enter it on the home page to activate.
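Step 1.3 relies on reading the pod list by eye. As a rough sketch, the filter below counts pods that are not yet up; it assumes the default column layout of `kubectl get pods --no-headers` (NAME, READY, STATUS, ...), and the function name `count_unready_pods` is made up for this example.

```shell
# Reads `kubectl get pods --no-headers` output on stdin and prints how many
# pods have a STATUS (third column) other than Running or Completed.
count_unready_pods() {
  awk '$3 != "Running" && $3 != "Completed" { n++ } END { print n + 0 }'
}
```

For example, `kubectl get pods -n kubesphere-system --no-headers | count_unready_pods` prints 0 once every pod in the namespace is running.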


Redis cluster upgrade

A summary of the process for upgrading the Redis middleware.

1. Back up the data.
2. Edit the YAML file and change the image.
3. Run docker-compose up <container name> directly. Note: upgrade the slave nodes first, then the master nodes.
4. Check the status:

    redis-cli -p 6001 -a <password> info replication | egrep 'role|connected_slaves'

    root@37d65a2680e6:/data# redis-cli -c -p 6001 -a <password> cluster info | egrep 'cluster_state|cluster_slots_ok|cluster_slots_fail'
    Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
    cluster_state:ok
    cluster_slots_ok:16384
    cluster_slots_fail:0
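Because the nodes are upgraded one at a time, it helps to gate each step on the status check in step 4. The sketch below turns that check into an exit code; it only inspects the three fields shown above, and the function name `cluster_is_healthy` is an assumption made for illustration.

```shell
# Reads `redis-cli cluster info` output on stdin. Succeeds only when the
# cluster state is ok, all 16384 slots are ok, and no slot has failed.
cluster_is_healthy() {
  # redis-cli output may end lines with CRLF, so strip carriage returns first
  tr -d '\r' | awk -F: '
    $1 == "cluster_state"      { state = $2 }
    $1 == "cluster_slots_ok"   { ok = $2 }
    $1 == "cluster_slots_fail" { fail = $2 }
    END { exit !(state == "ok" && ok == "16384" && fail == "0") }
  '
}
```

A possible use between upgrades: `redis-cli -c -p 6001 -a <password> cluster info | cluster_is_healthy && echo "safe to upgrade the next node"`.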


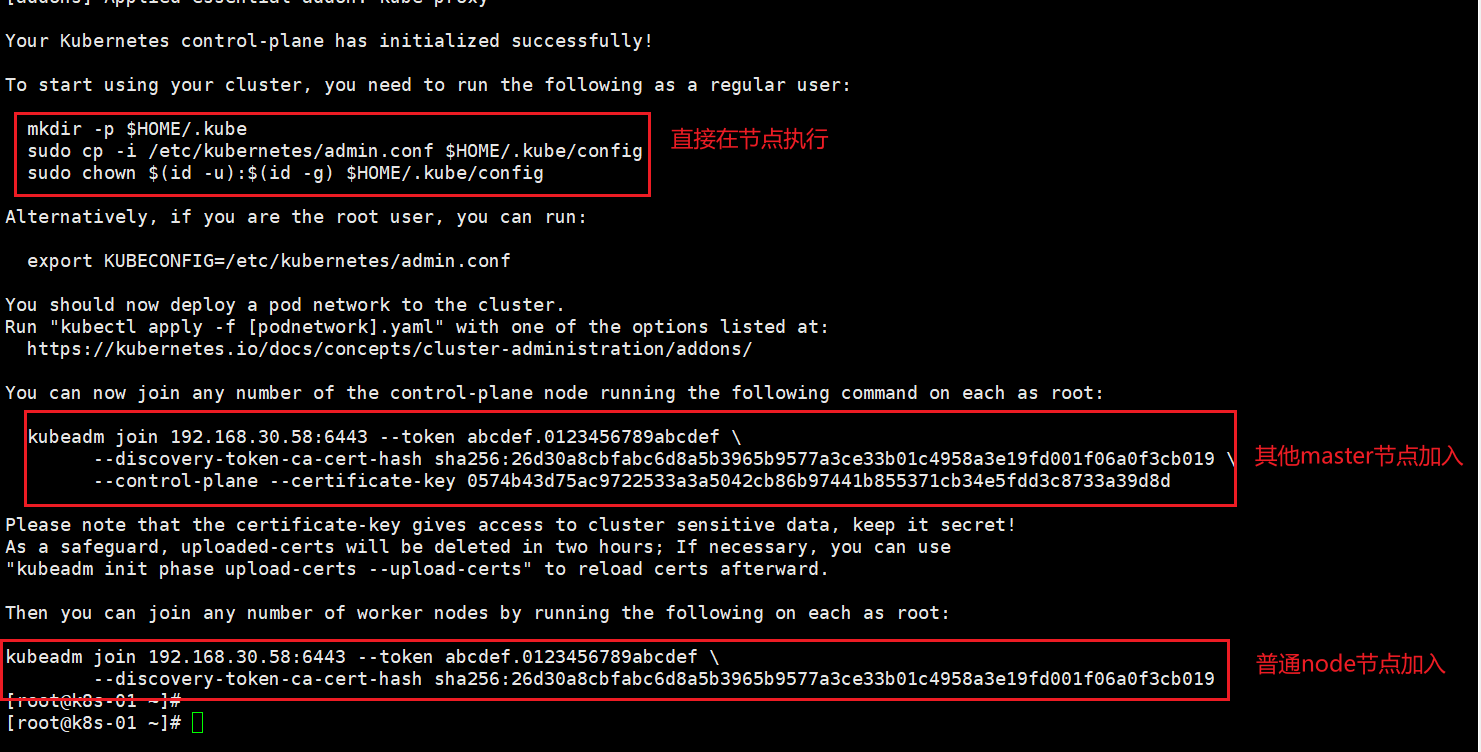

Rocky Linux 9 installation, multi-master architecture

1. Set a static IP address

    # Configure
    sudo nmcli connection modify ens160 \
      ipv4.method manual \
      ipv4.addresses 192.168.30.50/24 \
      ipv4.gateway 192.168.30.2 \
      ipv4.dns "8.8.8.8,8.8.4.4"
    # Apply the configuration
    sudo nmcli connection down ens160 && sudo nmcli connection up ens160

2. Preparation

2.0 Set the hostname

    # One per node
    hostnamectl set-hostname k8s-01
    hostnamectl set-hostname k8s-02
    hostnamectl set-hostname k8s-03

    # Add the node and VIP entries in advance, on all three nodes
    cat >>/etc/hosts <<'EOF'
    192.168.30.50 k8s-01
    192.168.30.51 k8s-02
    192.168.30.52 k8s-03
    192.168.30.58 k8s-vip
    EOF

2.1 Configure the yum source

    # Optional backup; otherwise run the sed command below directly:
    # sudo mkdir /etc/yum.repos.d/backup
    # sudo mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/

    # Method recommended by Alibaba Cloud.
    # Note: on Rocky 9 the repo files may be named rocky*.repo (lowercase); adjust the glob if nothing matches.
    sudo sed -e 's!^mirrorlist=!#mirrorlist=!g' \
      -e 's!^#baseurl=http://dl.rockylinux.org/$contentdir!baseurl=https://mirrors.aliyun.com/rockylinux!g' \
      -i /etc/yum.repos.d/Rocky-*.repo
    # Clean and rebuild the cache
    sudo dnf clean all
    sudo dnf makecache
    # Test with an update
    sudo dnf -y update
    sudo dnf -y install wget curl vim tar gzip

2.2 Set the time zone

    # Show the current time zone settings
    timedatectl
    # Set the time zone to China (Shanghai time)
    sudo timedatectl set-timezone Asia/Shanghai

2.3 Set the time

    # Install and configure chrony (recommended)
    # RHEL/CentOS/Alma/Rocky
    sudo dnf -y install chrony || sudo yum -y install chrony
    sudo systemctl enable --now chronyd
    # Edit the configuration file
    sudo vi /etc/chrony.conf

Comment out the default pool/server lines (they are useless without internet access), then add the following, or confirm that it is already present:

    # Use 30.50 as the "local time source"; self-contained when there is no upstream
    local stratum 10
    # Allow clients in this subnet
    allow 192.168.30.0/24
    # Bind the listener to this interface address (optional, but recommended)
    bindaddress 192.168.30.50
    # Allow a fast step correction when a client's initial offset is large
    makestep 1 3
    # Use the system clock as the source and sync system time to the hardware clock (more accurate after power loss)
    rtcsync

    # Save and restart
    sudo systemctl restart chronyd
    # Open the firewall
    # firewalld (RHEL family)
    sudo firewall-cmd --add-service=ntp --permanent
    sudo firewall-cmd --reload
    # Verify the server
    # Show chrony's source and its own state
    chronyc tracking
    # Show connected clients (visible after running for a while)
    chronyc clients
    # Confirm it listens on 123/udp
    sudo ss -lunp | grep :123

    # Client installation
    # RHEL family
    sudo dnf -y install chrony || sudo yum -y install chrony
    # Debian/Ubuntu
    sudo apt -y install chrony
    # Configuration (RHEL: /etc/chrony.conf; Ubuntu/Debian: /etc/chrony/chrony.conf)
    # Comment out the original pool/server lines and add:
    server 192.168.30.50 iburst
    # Restart and check
    sudo systemctl restart chronyd
    chronyc sources -v
    chronyc tracking

2.4 Disable swap

    sudo swapoff -a
    sudo sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab

2.5 Disable firewalld and configure SELinux

    sudo systemctl disable --now firewalld
    # Recommended: keep Enforcing (Kubernetes + containerd support it on the RHEL 9 family) and install the policy package:
    sudo dnf -y install container-selinux
    getenforce   # "Enforcing" is the expected output
    # The easy way out (less secure): switch to Permissive:
    sudo setenforce 0
    sudo sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

2.6 Kernel modules and sysctl (all nodes)

    # Load the required kernel modules and make them persistent
    cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF
    sudo modprobe overlay
    sudo modprobe br_netfilter
    # Required kernel parameters (forwarding and bridging)
    cat <<'EOF' | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    sudo sysctl --system
    # Note: RHEL 9 / Rocky 9 default to cgroup v2, which Kubernetes + containerd fully support; no change is needed.

2.7 File descriptors (fd/ulimit) and process count

    # Maximum number of open files
    cat > /etc/security/limits.d/k8s.conf <<EOF
    * soft nofile 65535
    * hard nofile 131070
    EOF
    ulimit -Sn
    ulimit -Hn

2.8 IPVS mode for kube-proxy

    # Install
    sudo dnf -y install ipset ipvsadm
    cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    # Modules required when IPVS is enabled:
    ip_vs
    ip_vs_rr
    ip_vs_wrr
    ip_vs_sh
    nf_conntrack
    EOF
    # Load immediately
    sudo modprobe overlay
    sudo modprobe br_netfilter
    # If IPVS will be used, also run:
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do sudo modprobe $m; done
    # Verify the modules
    lsmod | egrep 'br_netfilter|ip_vs|nf_conntrack'

3. Install containerd (on every k8s node)

3.1 Use the Alibaba Cloud source

    # Rocky Linux 9 names this repository "crb"; on Rocky Linux 8 it is "powertools"
    sudo dnf config-manager --set-enabled crb
    sudo dnf install -y yum-utils device-mapper-persistent-data lvm2
    # 1. Remove earlier installations
    dnf remove docker docker-ce containerd docker-common docker-selinux docker-engine -y
    # 2. Prepare the repo
    sudo tee /etc/yum.repos.d/docker-ce.repo <<-'EOF'
    [docker-ce-stable]
    name=Docker CE Stable - AliOS
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    EOF
    # 3. Install
    sudo dnf install -y containerd.io
    sudo dnf install containerd* -y

3.2 Configuration

    # 1. Generate the default configuration file for containerd
    mkdir -pv /etc/containerd
    containerd config default > /etc/containerd/config.toml

    # 2. Replace the default pause image address: this step is extremely important
    grep sandbox_image /etc/containerd/config.toml
    sudo sed -i 's|registry.k8s.io/pause:3.8|registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8|g' /etc/containerd/config.toml
    grep sandbox_image /etc/containerd/config.toml
    # Make sure the new address is actually reachable:
    #   sandbox_image = "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8"

    # 3. Use systemd as the cgroup driver for containers
    grep SystemdCgroup /etc/containerd/config.toml
    sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml
    grep SystemdCgroup /etc/containerd/config.toml

    # 4. Configure registry mirrors (required; otherwise the CNI plugin images cannot be pulled from docker.io later)
    # Reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration
    # Set config_path = "/etc/containerd/certs.d"
    sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml
    mkdir -p /etc/containerd/certs.d/docker.io
    cat >/etc/containerd/certs.d/docker.io/hosts.toml << EOF
    server = "https://docker.io"

    [host."https://dockerproxy.com"]
      capabilities = ["pull", "resolve"]

    [host."https://docker.m.daocloud.io"]
      capabilities = ["pull", "resolve"]

    [host."https://docker.chenby.cn"]
      capabilities = ["pull", "resolve"]

    [host."https://registry.docker-cn.com"]
      capabilities = ["pull", "resolve"]

    [host."http://hub-mirror.c.163.com"]
      capabilities = ["pull", "resolve"]
    EOF

    # 5. Start containerd and enable it at boot
    # 5.1 Start the containerd service and enable it at boot
    systemctl daemon-reload && systemctl restart containerd
    systemctl enable --now containerd
    # 5.2 Check the containerd status
    systemctl status containerd
    # 5.3 Check the containerd version
    ctr version

4. Install nginx + keepalived

    # Install and enable
    dnf install -y nginx keepalived curl
    dnf install -y nginx-mod-stream
    systemctl enable nginx keepalived

Configure Nginx (on both masters). Goal: listen on 0.0.0.0:16443 on the local machine and forward to the kube-apiserver on the two backends (50:6443 and 51:6443). Edit /etc/nginx/nginx.conf. Keeping the http block does no harm; what matters is adding a top-level stream block. The nginx shipped with Rocky 9 supports dynamic modules.

    # /etc/nginx/nginx.conf
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    # Use the dynamic module configuration provided by the system (loads the stream module automatically if installed)
    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 10240;
    }

    # Layer 4 forwarding to the two apiservers
    stream {
        upstream k8s_apiserver {
            server 192.168.30.50:6443 max_fails=3 fail_timeout=10s;
            server 192.168.30.51:6443 max_fails=3 fail_timeout=10s;
        }
        server {
            listen 0.0.0.0:16443;
            proxy_connect_timeout 5s;
            proxy_timeout 30s;
            proxy_pass k8s_apiserver;
        }
    }

    http {
        # nginx's default http configuration is fine here; it can be kept or removed.
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        server {
            listen 81;
            return 200 "ok\n";
        }
    }

Configure Keepalived (on both masters). Create the health check script /etc/keepalived/check_nginx_kube.sh:

    cat >/etc/keepalived/check_nginx_kube.sh <<'EOF'
    #!/usr/bin/env bash
    # Probe the K8s apiserver through the local Nginx forwarding port (unauthenticated /readyz; HTTP 200 means healthy)
    curl -fsSk --connect-timeout 2 https://127.0.0.1:16443/readyz >/dev/null
    EOF
    chmod +x /etc/keepalived/check_nginx_kube.sh

/etc/keepalived/keepalived.conf on Master1 (192.168.30.50):

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_K8S_50
        # vrrp_strict    # causes problems on some virtualized/container networks; leave it commented out
    }
    vrrp_script chk_nginx_kube {
        script "/etc/keepalived/check_nginx_kube.sh"
        interval 3
        timeout 2
        fall 2
        rise 2
        weight -60       # must exceed the 50-point priority gap (150 vs 100); the original -20 would leave a failing Master1 at 130 and the VIP would not move
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160          # change to your NIC
        virtual_router_id 58      # any value from 1 to 255, identical on both nodes; 58 is used here
        priority 150              # Master1 has the higher priority
        advert_int 1
        # Unicast, to avoid environments where layer 2 multicast is restricted (strongly recommended)
        unicast_src_ip 192.168.30.50
        unicast_peer {
            192.168.30.51
        }
        authentication {
            auth_type PASS
            auth_pass 9c9c58
        }
        virtual_ipaddress {
            192.168.30.58/24 dev ens160
        }
        track_script {
            chk_nginx_kube
        }
    }

/etc/keepalived/keepalived.conf on Master2 (192.168.30.51):

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_K8S_51
        # vrrp_strict
    }
    vrrp_script chk_nginx_kube {
        script "/etc/keepalived/check_nginx_kube.sh"
        interval 3
        timeout 2
        fall 2
        rise 2
        weight -60       # same value as on Master1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160
        virtual_router_id 58
        priority 100              # lower priority
        advert_int 1
        unicast_src_ip 192.168.30.51
        unicast_peer {
            192.168.30.50
        }
        authentication {
            auth_type PASS
            auth_pass 9c9c58
        }
        virtual_ipaddress {
            192.168.30.58/24 dev ens160
        }
        track_script {
            chk_nginx_kube
        }
    }

    # Start (nginx must be running for the health check to pass)
    systemctl restart nginx keepalived
    ip a | grep 192.168.30.58
    # Stop keepalived on Master1 with "systemctl stop keepalived"; the VIP should appear on Master2.
    # After verifying, run "systemctl start keepalived" again.

5. Install k8s

5.1 Prepare the k8s source

    # Create the repo file
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    sudo dnf makecache
    # Reference: https://developer.aliyun.com/mirror/kubernetes/
    setenforce 0
    dnf install -y kubelet-1.27* kubeadm-1.27* kubectl-1.27*
    systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

    # Install the version lock plugin
    sudo dnf install -y dnf-plugin-versionlock
    # Lock the versions so later updates do not change them
    sudo dnf versionlock add kubelet-1.27* kubeadm-1.27* kubectl-1.27* containerd.io

    [root@k8s-01 ~]# sudo dnf versionlock list
    Last metadata expiration check: 0:35:21 ago on Fri Aug 8 10:40:25 2025.
    kubelet-0:1.27.6-0.*
    kubeadm-0:1.27.6-0.*
    kubectl-0:1.27.6-0.*
    containerd.io-0:1.7.27-3.1.el9.*
    # sudo dnf update now skips the locked packages

5.2 Control-plane node operations (do not run on worker nodes)

    [root@k8s-01 ~]# kubeadm config images list
    I0906 16:16:30.198629 49023 version.go:256] remote version is much newer: v1.34.0; falling back to: stable-1.27
    registry.k8s.io/kube-apiserver:v1.27.16
    registry.k8s.io/kube-controller-manager:v1.27.16
    registry.k8s.io/kube-scheduler:v1.27.16
    registry.k8s.io/kube-proxy:v1.27.16
    registry.k8s.io/pause:3.9
    registry.k8s.io/etcd:3.5.7-0
    registry.k8s.io/coredns/coredns:v1.10.1

    kubeadm config print init-defaults > kubeadm.yaml

    [root@k8s-01 ~]# cat kubeadm.yaml
    # kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: InitConfiguration
    bootstrapTokens:
      - token: abcdef.0123456789abcdef
        ttl: 24h0m0s
        usages: ["signing","authentication"]
        groups: ["system:bootstrappers:kubeadm:default-node-token"]
    localAPIEndpoint:
      # Must be the real IP of the machine on which kubeadm init is run (Master1)
      advertiseAddress: 192.168.30.50
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      # Do not hard-code name here; it defaults to the host's hostname, which avoids errors when this file is reused
      taints: null
    ---
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: ClusterConfiguration
    clusterName: kubernetes
    kubernetesVersion: v1.27.16
    controlPlaneEndpoint: "192.168.30.58:16443"   # points to the Nginx + Keepalived VIP:PORT
    certificatesDir: /etc/kubernetes/pki
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16   # used by Calico; this range can stay unchanged
    apiServer:
      timeoutForControlPlane: 4m0s
      certSANs:
        # Put the VIP and both masters' IPs and hostnames into the SANs to avoid untrusted-certificate errors
        - "192.168.30.58"   # VIP
        - "192.168.30.50"
        - "192.168.30.51"
        - "k8s-01"          # change to the actual hostname if yours differs
        - "k8s-02"
        - "127.0.0.1"
        - "localhost"
        - "kubernetes"
        - "kubernetes.default"
        - "kubernetes.default.svc"
        - "kubernetes.default.svc.cluster.local"
    controllerManager: {}
    scheduler: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: "ipvs"
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: "systemd"

    [root@k8s-01 ~]# kubeadm init --config kubeadm.yaml --upload-certs
    [init] Using Kubernetes version: v1.27.16
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
    W0906 17:26:53.821977 54526 checks.go:835] detected that the sandbox image "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [k8s-01 k8s-02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost] and IPs [10.96.0.1 192.168.30.50 192.168.30.58 192.168.30.51 127.0.0.1]
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Starting the kubelet
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 12.002658 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key: 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
    [bootstrap-token] Using token: abcdef.0123456789abcdef
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019 \
        --control-plane --certificate-key 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

      kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019

If initialization fails, reset with kubeadm reset -f:

    [root@k8s-01 ~]# kubeadm reset -f
    [preflight] Running pre-flight checks
    W0906 17:08:03.892290 53705 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory
    [reset] Deleted contents of the etcd data directory: /var/lib/etcd
    [reset] Stopping the kubelet service
    [reset] Unmounting mounted directories in "/var/lib/kubelet"
    W0906 17:08:03.899240 53705 cleanupnode.go:134] [reset] Failed to evaluate the "/var/lib/kubelet" directory. Skipping its unmount and cleanup: lstat /var/lib/kubelet: no such file or directory
    [reset] Deleting contents of directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
    [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

    The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

    The reset process does not reset or clean up iptables rules or IPVS tables.
    If you wish to reset iptables, you must do so manually by using the "iptables" command.

    If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
    to reset your systems IPVS tables.

    The reset process does not clean your kubeconfig files and you must remove them manually.
    Please, check the contents of the $HOME/.kube/config file.

    # These also have to be removed manually
    rm -rf $HOME/.kube/config
    systemctl restart containerd
    rm -rf ~/.kube /etc/kubernetes/pki/* /etc/kubernetes/manifests/*

Install a CNI plugin:

    # Flannel (simple)
    kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/v0.25.5/Documentation/kube-flannel.yml
    # Calico (more features)
    kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/calico.yaml

    [root@k8s-02 ~]# kubectl get pod -A
    NAMESPACE     NAME                                       READY   STATUS    RESTARTS      AGE
    kube-system   calico-kube-controllers-59765c79db-rvqm5   1/1     Running   0             8m3s
    kube-system   calico-node-4jlgw                          1/1     Running   0             8m3s
    kube-system   calico-node-lvzgx                          1/1     Running   0             8m3s
    kube-system   calico-node-qdrmn                          1/1     Running   0             8m3s
    kube-system   coredns-65dcc469f7-gktmx                   1/1     Running   0             51m
    kube-system   coredns-65dcc469f7-wmppd                   1/1     Running   0             51m
    kube-system   etcd-k8s-01                                1/1     Running   0             51m
    kube-system   etcd-k8s-02                                1/1     Running   0             20m
    kube-system   kube-apiserver-k8s-01                      1/1     Running   0             51m
    kube-system   kube-apiserver-k8s-02                      1/1     Running   0             19m
    kube-system   kube-controller-manager-k8s-01             1/1     Running   1 (20m ago)   51m
    kube-system   kube-controller-manager-k8s-02             1/1     Running   0             19m
    kube-system   kube-proxy-k7z9v                           1/1     Running   0             22m
    kube-system   kube-proxy-sgrln                           1/1     Running   0             51m
    kube-system   kube-proxy-wpkjb                           1/1     Running   0             20m
    kube-system   kube-scheduler-k8s-01                      1/1     Running   1 (19m ago)   51m
    kube-system   kube-scheduler-k8s-02                      1/1     Running   0             19m

Test failover:

    # On the host that currently holds the VIP:
    systemctl stop keepalived
    # Check that the other host takes over the VIP:
    ip a | grep 192.168.30.58
    # Access the API again; it normally returns ok
    curl -k https://192.168.30.58:6443/readyz
    # Restore; the VIP moves back automatically
    systemctl start keepalived

    # kubectl works normally
    [root@k8s-01 ~]# kubectl get cs 2>/dev/null || \
      kubectl get --raw='/readyz?verbose' | head
    NAME                 STATUS    MESSAGE   ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy
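
The failover test above stops keepalived entirely. A failing health check is a different case: keepalived adds a negative track_script weight to the node's priority while the script fails, so the VIP only moves if the result drops below the backup's priority. The sketch below checks that arithmetic, assuming the backup's own check is still passing; the function name `vip_moves_on_check_failure` is hypothetical.

```shell
# Succeeds if a failed health check on the current master lowers its effective
# priority below the backup's priority, so that the VIP actually moves.
# Arguments: master priority, backup priority, track_script weight (negative).
vip_moves_on_check_failure() {
  master_priority="$1"
  backup_priority="$2"
  weight="$3"
  # Effective priority while the script is failing = priority + weight
  [ $((master_priority + weight)) -lt "$backup_priority" ]
}
```

For the priorities used in section 4 (150 and 100), `vip_moves_on_check_failure 150 100 -20` fails because the master stays at 130, while `vip_moves_on_check_failure 150 100 -60` succeeds because it drops to 90.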

rocky linux 9 安装 多主架构 一、固定IP地址#配置 sudo nmcli connection modify ens160 \ ipv4.method manual \ ipv4.addresses 192.168.30.50/24 \ ipv4.gateway 192.168.30.2 \ ipv4.dns "8.8.8.8,8.8.4.4" #更新配置 sudo nmcli connection down ens160 && sudo nmcli connection up ens160二、准备工作 2.0 修改主机名#每个节点对应一个 hostnamectl set-hostname k8s-01 hostnamectl set-hostname k8s-02 hostnamectl set-hostname k8s-03#提前配好vip ip 三个节点都要做 cat >>/etc/hosts <<'EOF' 192.168.30.50 k8s-01 192.168.30.51 k8s-02 192.168.30.52 k8s-03 192.168.30.58 k8s-vip EOF2.1 配置yum源#sudo mkdir /etc/yum.repos.d/backup #sudo mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/ 直接执行下面的 # 使用阿里云推荐的配置方法 sudo sed -e 's!^mirrorlist=!#mirrorlist=!g' \ -e 's!^#baseurl=http://dl.rockylinux.org/$contentdir!baseurl=https://mirrors.aliyun.com/rockylinux!g' \ -i /etc/yum.repos.d/Rocky-*.repo #清理并重建缓存 sudo dnf clean all sudo dnf makecache #测试更新 sudo dnf -y update sudo dnf -y install wget curl vim tar gzip2.2设置时区#查看当前时区设置 timedatectl #设置时区为中国时区(上海时间) sudo timedatectl set-timezone Asia/Shanghai2.3设置时间#安装并配置 Chrony(推荐) # RHEL/CentOS/Alma/Rocky sudo dnf -y install chrony || sudo yum -y install chrony sudo systemctl enable --now chronyd # 编辑配置文件 sudo vi /etc/chrony.conf #把默认的 pool/server 行注释掉(没外网也无用),然后加入(或确认存在)以下内容: # 把 30.50 作为“本地时间源”,无外部上游时自成一体 local stratum 10 # 允许本网段客户端访问 allow 192.168.30.0/24 # 绑定监听到这块网卡(可选,但建议写上) bindaddress 192.168.30.50 # 客户端第一次偏差大时允许快速步进校时 makestep 1 3 # 用系统时钟做源,且把系统时间同步到硬件时钟(断电后也较准) rtcsync #保存重启 sudo systemctl restart chronyd #防火墙放行 # firewalld(RHEL系) sudo firewall-cmd --add-service=ntp --permanent sudo firewall-cmd --reload #验证服务器状态 # 查看 chrony 源与自我状态 chronyc tracking # 查看已连接的客户端(执行一会儿后能看到) chronyc clients # 确认监听 123/udp sudo ss -lunp | grep :123# 客户端安装 # RHEL系 sudo dnf -y install chrony || sudo yum -y install chrony # Debian/Ubuntu sudo apt -y install chrony # 配置(RHEL: /etc/chrony.conf;Ubuntu/Debian: /etc/chrony/chrony.conf) # 注释掉原来的 pool/server 行,新增: server 192.168.30.50 iburst # 重启并查看 sudo systemctl restart chronyd 
chronyc sources -v chronyc tracking2.4关闭swap分区sudo swapoff -a sudo sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab2.5关闭selinuxsudo systemctl disable --now firewalld #推荐:保持 Enforcing(Kubernetes + containerd 在 RHEL9 系已支持),同时安装策略包: sudo dnf -y install container-selinux getenforce # 看到 Enforcing 即可 #图省事(不太安全):设为 Permissive: sudo setenforce 0 sudo sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config2.6内核模块与 sysctl(所有节点)# 加载并持久化必须内核模块 cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf overlay br_netfilter EOF sudo modprobe overlay sudo modprobe br_netfilter # 必备内核参数(转发与桥接) cat <<'EOF' | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf net.bridge.bridge-nf-call-iptables = 1 net.bridge.bridge-nf-call-ip6tables = 1 net.ipv4.ip_forward = 1 EOF sudo sysctl --system #说明:RHEL9/ Rocky9 默认 cgroup v2,Kubernetes + containerd 完全支持,无需改动。2.7文件描述符(fd/ulimit)与进程数# 系统级最大打开文件数 cat > /etc/security/limits.d/k8s.conf <<EOF * soft nofile 65535 * hard nofile 131070 EOF ulimit -Sn ulimit -Hn2.8kube-proxy 的 IPVS 模式#安装 sudo dnf -y install ipset ipvsadm cat <<'EOF' | sudo tee /etc/modules-load.d/k8s.conf overlay br_netfilter # 如启用 IPVS,取消以下行的注释: ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack EOF # 立即加载 sudo modprobe overlay sudo modprobe br_netfilter # 如果要用 IPVS,再执行: for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do sudo modprobe $m; done #验证模块 lsmod | egrep 'br_netfilter|ip_vs|nf_conntrack'三、安装containerd(所有k8s节点都要做) 3.1 使用阿里云的源sudo dnf config-manager --set-enabled powertools # Rocky Linux 8/9需启用PowerTools仓库 sudo dnf install -y yum-utils device-mapper-persistent-data lvm2 #1、卸载之前的 dnf remove docker docker-ce containerd docker-common docker-selinux docker-engine -y #2、准备repo sudo tee /etc/yum.repos.d/docker-ce.repo <<-'EOF' [docker-ce-stable] name=Docker CE Stable - AliOS baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable enabled=1 gpgcheck=1 gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg EOF # 3、安装 sudo dnf install -y containerd.io 
sudo dnf install containerd* -y3.2配置# 1、配置 mkdir -pv /etc/containerd containerd config default > /etc/containerd/config.toml #为containerd生成配置文件 #2、替换默认pause镜像地址:这一步非常非常非常非常重要 grep sandbox_image /etc/containerd/config.toml sudo sed -i 's|registry.k8s.io/pause:3.8|registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8|g' /etc/containerd/config.toml grep sandbox_image /etc/containerd/config.toml #请务必确认新地址是可用的: sandbox_image = "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8" #3、配置systemd作为容器的cgroup driver grep SystemdCgroup /etc/containerd/config.toml sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml grep SystemdCgroup /etc/containerd/config.toml # 4、配置加速器(必须配置,否则后续安装cni网络插件时无法从docker.io里下载镜像) #参考:https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration #添加 config_path="/etc/containerd/certs.d" sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.tomlmkdir -p /etc/containerd/certs.d/docker.io cat>/etc/containerd/certs.d/docker.io/hosts.toml << EOF server ="https://docker.io" [host."https ://dockerproxy.com"] capabilities = ["pull","resolve"] [host."https://docker.m.daocloud.io"] capabilities = ["pull","resolve"] [host."https://docker.chenby.cn"] capabilities = ["pull","resolve"] [host."https://registry.docker-cn.com"] capabilities = ["pull","resolve" ] [host."http://hub-mirror.c.163.com"] capabilities = ["pull","resolve" ] EOF#5、配置containerd开机自启动 #5.1 启动containerd服务并配置开机自启动 systemctl daemon-reload && systemctl restart containerd systemctl enable --now containerd #5.2 查看containerd状态 systemctl status containerd #5.3查看containerd的版本 ctr version四、安装nginx+keepalived#安装与开启 dnf install -y nginx keepalived curl dnf install -y nginx-mod-stream systemctl enable nginx keepalived #配置 Nginx(两台 Master 都要配) #目标:在本机 0.0.0.0:16443 监听,转发到两个后端的 kube-apiserver(50:16443、51:16443) #编辑 /etc/nginx/nginx.conf(保留 http 段也没关系,关键是顶层加上 stream 
    # block; nginx on Rocky 9 supports dynamic modules):

    # /etc/nginx/nginx.conf
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    # Use the dynamic module configs shipped with the system (the stream module loads automatically once installed)
    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 10240;
    }

    # Layer-4 forwarding to the two apiservers
    stream {
        upstream k8s_apiserver {
            server 192.168.30.50:6443 max_fails=3 fail_timeout=10s;
            server 192.168.30.51:6443 max_fails=3 fail_timeout=10s;
        }
        server {
            listen 0.0.0.0:16443;
            proxy_connect_timeout 5s;
            proxy_timeout 30s;
            proxy_pass k8s_apiserver;
        }
    }

    http {
        # The default nginx http configuration can stay here; keeping or removing it makes no difference.
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        server {
            listen 81;
            return 200 "ok\n";
        }
    }

    # Configure Keepalived (on both masters)
    # Create the health-check script /etc/keepalived/check_nginx_kube.sh:
    cat >/etc/keepalived/check_nginx_kube.sh <<'EOF'
    #!/usr/bin/env bash
    # Probe the K8s apiserver through the local Nginx forwarding port (unauthenticated /readyz; HTTP 200 means healthy)
    curl -fsSk --connect-timeout 2 https://127.0.0.1:16443/readyz >/dev/null
    EOF
    chmod +x /etc/keepalived/check_nginx_kube.sh

    # /etc/keepalived/keepalived.conf on Master1 (192.168.30.50):
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_K8S_50
        # vrrp_strict   # causes problems on some virtualized/container networks, so it is left commented out
    }
    vrrp_script chk_nginx_kube {
        script "/etc/keepalived/check_nginx_kube.sh"
        interval 3
        timeout 2
        fall 2
        rise 2
        weight -20
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160          # change to your NIC
        virtual_router_id 58      # any value from 1 to 255, identical on both nodes; 58 here
        priority 150              # Master1 has the higher priority
        advert_int 1
        # Unicast, for environments where layer-2 multicast is restricted (strongly recommended)
        unicast_src_ip 192.168.30.50
        unicast_peer {
            192.168.30.51
        }
        authentication {
            auth_type PASS
            auth_pass 9c9c58
        }
        virtual_ipaddress {
            192.168.30.58/24 dev ens160
        }
        track_script {
            chk_nginx_kube
        }
    }

    # /etc/keepalived/keepalived.conf on Master2 (192.168.30.51):
    !
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_K8S_51
        # vrrp_strict
    }
    vrrp_script chk_nginx_kube {
        script "/etc/keepalived/check_nginx_kube.sh"
        interval 3
        timeout 2
        fall 2
        rise 2
        weight -20
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160
        virtual_router_id 58
        priority 100              # lower priority
        advert_int 1
        unicast_src_ip 192.168.30.51
        unicast_peer {
            192.168.30.50
        }
        authentication {
            auth_type PASS
            auth_pass 9c9c58
        }
        virtual_ipaddress {
            192.168.30.58/24 dev ens160
        }
        track_script {
            chk_nginx_kube
        }
    }

    # Start (nginx was only enabled above, so validate its config and start it as well)
    nginx -t && systemctl restart nginx
    systemctl restart keepalived
    ip a | grep 192.168.30.58
    # To test failover, stop keepalived on Master1 (systemctl stop keepalived); the VIP should appear on Master2. Run systemctl start keepalived again once verified.

5. Install k8s

5.1 Prepare the k8s repo

    # Create the repo file
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    sudo dnf makecache
    # Reference: https://developer.aliyun.com/mirror/kubernetes/setenforce

    dnf install -y kubelet-1.27* kubeadm-1.27* kubectl-1.27*
    systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

    # Install the version-lock plugin
    sudo dnf install -y dnf-plugin-versionlock
    # Lock the versions so later updates leave them alone
    sudo dnf versionlock add kubelet-1.27* kubeadm-1.27* kubectl-1.27* containerd.io

    [root@k8s-01 ~]# sudo dnf versionlock list
    Last metadata expiration check: 0:35:21 ago on Fri Aug 8 10:40:25 2025.
    kubelet-0:1.27.6-0.*
    kubeadm-0:1.27.6-0.*
    kubectl-0:1.27.6-0.*
    containerd.io-0:1.7.27-3.1.el9.*
    # sudo dnf update will now skip the locked packages

5.2 Control-plane node steps (do not run on worker nodes)

    [root@k8s-01 ~]# kubeadm config images list
    I0906 16:16:30.198629 49023 version.go:256] remote version is much newer: v1.34.0; falling back to: stable-1.27
    registry.k8s.io/kube-apiserver:v1.27.16
    registry.k8s.io/kube-controller-manager:v1.27.16
    registry.k8s.io/kube-scheduler:v1.27.16
    registry.k8s.io/kube-proxy:v1.27.16
    registry.k8s.io/pause:3.9
    registry.k8s.io/etcd:3.5.7-0
    registry.k8s.io/coredns/coredns:v1.10.1

    kubeadm config print init-defaults > kubeadm.yaml

    [root@k8s-01 ~]# cat kubeadm.yaml
    # kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: InitConfiguration
    bootstrapTokens:
      - token: abcdef.0123456789abcdef
        ttl: 24h0m0s
        usages: ["signing", "authentication"]
        groups: ["system:bootstrappers:kubeadm:default-node-token"]
    localAPIEndpoint:
      # Must be the real IP of the machine where you run kubeadm init (Master1)
      advertiseAddress: 192.168.30.50
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      # Do not hard-code name here; the host's hostname is used by default, which avoids mistakes when this file is reused
      taints: null
    ---
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: ClusterConfiguration
    clusterName: kubernetes
    kubernetesVersion: v1.27.16
    controlPlaneEndpoint: "192.168.30.58:16443"   # the VIP:PORT served by Nginx + Keepalived
    certificatesDir: /etc/kubernetes/pki
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16   # used by Calico; this range can be left unchanged
    apiServer:
      timeoutForControlPlane: 4m0s
      certSANs:
        # Put the VIP plus both masters' IPs and hostnames into the SANs so the certificate is trusted on every path
        - "192.168.30.58"   # VIP
        - "192.168.30.50"
        - "192.168.30.51"
        - "k8s-01"          # replace with your actual hostname if it differs
        - "k8s-02"
        - "127.0.0.1"
        - "localhost"
        - "kubernetes"
        - "kubernetes.default"
        - "kubernetes.default.svc"
        - "kubernetes.default.svc.cluster.local"
    controllerManager: {}
    scheduler: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    ---
    apiVersion:
      kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: "ipvs"
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: "systemd"

    [root@k8s-01 ~]# kubeadm init --config kubeadm.yaml --upload-certs
    [init] Using Kubernetes version: v1.27.16
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
    W0906 17:26:53.821977 54526 checks.go:835] detected that the sandbox image "registry.cn-guangzhou.aliyuncs.com/xingcangku/registry.k8s.io-pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [k8s-01 k8s-02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost] and IPs [10.96.0.1 192.168.30.50 192.168.30.58 192.168.30.51 127.0.0.1]
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [k8s-01 localhost] and IPs [192.168.30.50 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Starting the kubelet
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests".
    This can take up to 4m0s
    [apiclient] All control plane components are healthy after 12.002658 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key: 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
    [bootstrap-token] Using token: abcdef.0123456789abcdef
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!
    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019 \
        --control-plane --certificate-key 0574b43d75ac9722533a3a5042cb86b97441b855371cb34e5fdd3c8733a39d8d

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

      kubeadm join 192.168.30.58:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:26d30a8cbfabc6d8a5b3965b9577a3ce33b01c4958a3e19fd001f06a0f3cb019

    # If initialization fails, reset the node and try again:
    kubeadm reset -f

    [root@k8s-01 ~]# kubeadm reset -f
    [preflight] Running pre-flight checks
    W0906 17:08:03.892290 53705 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory
    [reset] Deleted contents of the etcd data directory: /var/lib/etcd
    [reset] Stopping the kubelet service
    [reset] Unmounting mounted directories in "/var/lib/kubelet"
    W0906 17:08:03.899240 53705 cleanupnode.go:134] [reset] Failed to evaluate the "/var/lib/kubelet" directory.
    Skipping its unmount and cleanup: lstat /var/lib/kubelet: no such file or directory
    [reset] Deleting contents of directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
    [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

    The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

    The reset process does not reset or clean up iptables rules or IPVS tables.
    If you wish to reset iptables, you must do so manually by using the "iptables" command.

    If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
    to reset your systems IPVS tables.

    The reset process does not clean your kubeconfig files and you must remove them manually.
    Please, check the contents of the $HOME/.kube/config file.

    # The following also has to be removed by hand
    rm -rf $HOME/.kube/config
    systemctl restart containerd
    rm -rf ~/.kube /etc/kubernetes/pki/* /etc/kubernetes/manifests/*

    # Install a CNI plugin (pick one)
    # Flannel (simple)
    kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/v0.25.5/Documentation/kube-flannel.yml
    # Calico (more features)
    kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/calico.yaml

    [root@k8s-02 ~]# kubectl get pod -A
    NAMESPACE     NAME                                       READY   STATUS    RESTARTS      AGE
    kube-system   calico-kube-controllers-59765c79db-rvqm5   1/1     Running   0             8m3s
    kube-system   calico-node-4jlgw                          1/1     Running   0             8m3s
    kube-system   calico-node-lvzgx                          1/1     Running   0             8m3s
    kube-system   calico-node-qdrmn                          1/1     Running   0             8m3s
    kube-system   coredns-65dcc469f7-gktmx                   1/1     Running   0             51m
    kube-system   coredns-65dcc469f7-wmppd                   1/1     Running   0             51m
    kube-system   etcd-k8s-01                                1/1     Running   0             51m
    kube-system   etcd-k8s-02                                1/1     Running   0             20m
    kube-system   kube-apiserver-k8s-01                      1/1     Running   0             51m
    kube-system   kube-apiserver-k8s-02                      1/1     Running   0             19m
    kube-system   kube-controller-manager-k8s-01             1/1     Running   1 (20m ago)   51m
    kube-system   kube-controller-manager-k8s-02             1/1     Running   0             19m
    kube-system
                  kube-proxy-k7z9v                           1/1     Running   0             22m
    kube-system   kube-proxy-sgrln                           1/1     Running   0             51m
    kube-system   kube-proxy-wpkjb                           1/1     Running   0             20m
    kube-system   kube-scheduler-k8s-01                      1/1     Running   1 (19m ago)   51m
    kube-system   kube-scheduler-k8s-02                      1/1     Running   0             19m

    # Failover test
    # On the host that currently holds the VIP:
    systemctl stop keepalived
    # Check that the other host has taken over the VIP:
    ip a | grep 192.168.30.58
    # Access the API again; a healthy cluster returns "ok"
    # (port 6443 reaches the apiserver on the VIP holder directly; use 16443 to go through nginx)
    curl -k https://192.168.30.58:6443/readyz
    # Restore; the VIP moves back automatically
    systemctl start keepalived

    # kubectl works normally
    [root@k8s-01 ~]# kubectl get cs 2>/dev/null || \
      kubectl get --raw='/readyz?verbose' | head
    NAME                 STATUS    MESSAGE   ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy